Difference between revisions of "Social Media & Public Opinion - Final"


{|style="background-color:#c0deed; color:#F5F5F5; padding: 5 0 5 0;" width="100%" cellspacing="0" cellpadding="0" valign="top" border="0"
| style="font-family:Segoe UI; font-size:110%; background-color:#F5F5F5; border-bottom:2px solid #3f3f3f; text-align:center; color:#F5F5F5" width="8%" |
[[Social Media & Public Opinion - Project Overview|<font color="#222"><b>PROPOSAL</b></font>]]
| style="font-family:Segoe UI; font-size:110%; background-color:#F5F5F5; border-bottom:2px solid #3f3f3f; text-align:center; color:#F5F5F5" width="8%" |
[[Social Media & Public Opinion - Final|<font color="#222"><b>FINAL</b></font>]]
|}


<div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; ">

Having consulted with our professor, we have decided to shift our focus away from developing a dashboard and delve deeper into text analysis of social media data, specifically Twitter data. Social media has changed the way consumers provide feedback on the products they consume. Social media data can be mined, analysed and turned into value propositions that change the way companies brand themselves. Although such data is easy for anyone to obtain, several challenges can hamper the effectiveness of its analysis. Through this project, we explore some of these challenges and ways in which we can overcome them.

</div>


<div align="left">

==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Methodology: Text analytics using RapidMiner</font></div>==

<div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; ">

'''''Download RapidMiner [https://rapidminer.com/signup/ here]'''''

{| class="wikitable" style="margin-left: 10px;"
|-! style="background: #0084b4; color: white; text-align: center;" colspan= "2" |
| width="50%" | '''Screenshots'''
| width="50%" | '''Steps'''
|-
| [[File:Text processing module.JPG|center|350px|]]||

===Setting up RapidMiner for text analysis===

To carry out text processing in RapidMiner, we need to download the required plugin from RapidMiner's plugin repository.

Click on Help > Manage Extensions and search for the text processing module.

Once the plugin is installed, it should appear in the "Operators" window as seen below.

|-
| [[File:Tweets Jso.JPG|center|500px]]||

===Data Preparation===

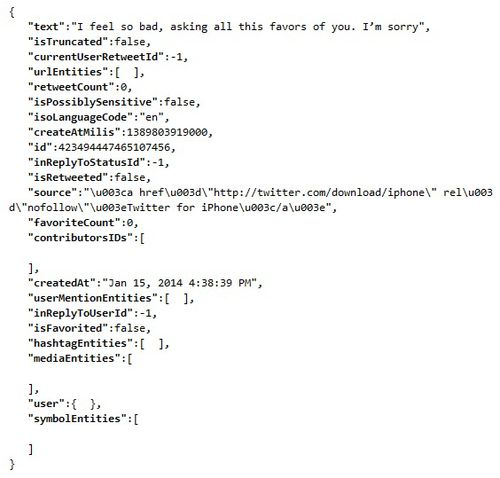

In RapidMiner, there are several ways to read data from a file or a database. In our case, we read tweets provided by the LARC team, which were in JSON format. RapidMiner can read JSON strings, but it cannot read nested arrays within them. Because of this restriction, we had to extract the text from each JSON string before using RapidMiner for text analysis. We did this by converting each JSON string into a JavaScript object, extracting only the ID and text of each tweet, and writing them to a comma-separated values (.csv) file to be processed later in RapidMiner.

|-
|}
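The JSON-to-CSV extraction described above can be sketched in Python. This is a minimal sketch, not the script we used, because the original conversion went through JavaScript objects. It assumes one tweet JSON object per line, with top-level `"id"` and `"text"` fields as in Twitter's tweet format. The function name `tweets_json_to_csv` is hypothetical.

```python
import csv
import json


def tweets_json_to_csv(json_lines, csv_file):
    """Write the id and text of each tweet to csv_file; return the row count.

    Assumes (hypothetically) one JSON object per line with top-level
    "id" and "text" fields; every other field, including nested arrays
    such as hashtag entities, is ignored.
    """
    writer = csv.writer(csv_file)  # quotes fields that contain commas or quotes
    writer.writerow(["id", "text"])  # header row, read via "first row as names"
    rows = 0
    for line in json_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        tweet = json.loads(line)  # parse the JSON string into a dict
        # Collapse newlines and repeated spaces so each tweet stays on one CSV line
        text = " ".join(tweet["text"].split())
        writer.writerow([tweet["id"], text])
        rows += 1
    return rows
```

When writing to a real file, open it with `open("tweets.csv", "w", newline="", encoding="utf-8")`, as the `csv` module documentation recommends.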

===Defining a Standard===

Before we can create a model for classifying tweets by polarity, we first have to define a standard for the classifier to learn from. To obtain such a standard, the three of us manually tagged a random sample of 1000 tweets, by mutual agreement, with one of three categories: '''Positive (P)''', '''Negative (N)''' and '''Neutral (X)'''. One challenge was interpreting irony, since even humans sometimes have difficulty recognising sarcasm. A University of Pittsburgh study reports that human annotators agree on the sentiment of a sentence only 80% of the time.<ref>Wiebe, J., Wilson, T., & Cardie, C. (2005). Annotating Expressions of Opinions and Emotions in Language. Language Resources and Evaluation, 165-210. Retrieved from http://people.cs.pitt.edu/~wiebe/pubs/papers/lre05.pdf</ref>

With the tweets and their respective classifications, we were ready to create a machine learning model of tweet sentiment.

===Creating a Model===

{| class="wikitable" style="margin-left: 10px;"
|-! style="background: #0084b4; color: white; text-align: center;" colspan= "2" |
| width="50%" | '''Screenshots'''
| width="50%" | '''Steps'''
|-

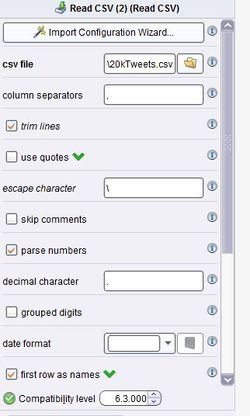

|[[File:ReadCsv.JPG|center|100px]]||
#We first used the "Read CSV" operator to read the text from the CSV file prepared earlier. The operator can be configured via the "Import Configuration Wizard" or set manually.
|-

|[[File:ReadCsv configuration.JPG|center|250px]]||
#Each column is separated by a ",".<br>
#Trim the lines to remove any white space before and after the tweet.<br>
#Check "first row as names" if the file has a header row.
|-

|[[File:Normtotext.JPG|center|100px]]||
#To check the results at any point in the process, right-click on an operator and add a breakpoint.
#Before the tweets can be processed as documents, we convert the data from nominal to text.
|-

|[[File:DataToDoc.JPG|center|100px]]||
#We convert the text data into documents. In our case, each tweet is converted into a document.
|-

|[[File:ProcessDocument.JPG|center|100px]]||
#The "Process Documents" operator is a multi-step process that breaks each document down into individual words. The frequency of each word, as well as the number of documents it occurs in, is calculated and used when building the model.<br>
#To open the operator's sub-process, double-click on it.
|-

|[[File:Tokenize.JPG|center|500px]]||
1. '''Tokenizing the tweet by word'''

Tokenization is the process of breaking a stream of text into words or other meaningful elements called tokens. Punctuation marks and other characters such as brackets and hyphens are removed.

2. '''Converting words to lowercase'''

All words are transformed to lowercase. Otherwise the same word would be counted as two different tokens depending on its case (e.g. "Good" and "good").

3. '''Eliminating stopwords'''

The most common words, such as prepositions, articles and pronouns, are eliminated. This reduces the amount of text data and improves system performance.

4. '''Filtering out tokens shorter than 3 letters'''

Tokens are filtered by length (i.e. the number of characters they contain). We set the minimum length to 3 characters.

5. '''Stemming using the Porter2 stemmer'''

Stemming reduces words to their stem, base or root form. It keeps the core of a word, so variants that convey effectively the same meaning (e.g. "connected" and "connecting", both reduced to "connect") are counted as one token.

<u>Porter Stemmer vs Snowball (Porter2)</u><ref>Tyranus, S. (2012, June 26). What are the major differences and benefits of Porter and Lancaster Stemming algorithms? Retrieved from http://stackoverflow.com/questions/10554052/what-are-the-major-differences-and-benefits-of-porter-and-lancaster-stemming-alg</ref>

''Porter'': Probably the most commonly used stemmer, and also one of the most gentle. It is one of the more computationally intensive algorithms, though not by a very significant margin. It is also the oldest of these algorithms by a large margin.

''Snowball (Porter2)'': Nearly universally regarded as an improvement over Porter, and for good reason. Porter himself admits that Snowball is better than his original algorithm. It has a slightly faster computation time than Porter, with a fairly large community around it.

We use the Porter2 stemmer.
|-

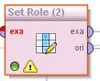

|[[File:Setrole.JPG|center|100px]]||
# Return to the main process.
# Add the "Set Role" operator to mark the label of each tweet. The "Classification" column holds this label.
|-

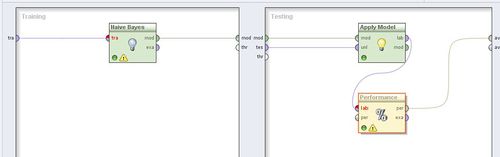

|[[File:Validation.JPG|center|100px]]||
# The "X-Validation" operator trains a model on our manually classified tweets and estimates its accuracy. The trained model can later be applied to another set of data.
# To begin, double-click on the operator.
|-

|[[File:ValidationX.JPG|center|500px]]||
# We carry out an X-validation using a Naive Bayes classifier. This is a simple probabilistic classifier that applies Bayes' theorem with strong (naive) independence assumptions. In simple terms, a Naive Bayes classifier assumes that the presence (or absence) of a particular feature of a class (i.e. an attribute) is unrelated to the presence (or absence) of any other feature. Its advantage is that it needs only a small amount of training data to estimate the means and variances of the variables used for classification. Because the variables are assumed to be independent, only the variance of each variable for each label needs to be determined, not the entire covariance matrix.
|-

|[[File:5000Data.JPG|center|500px]]||

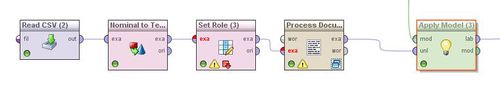

# To apply this model to a new set of data, we repeat the steps above: read the CSV file, convert the input to text, set the role and process each document. We then apply the model to the new set of tweets.
|-

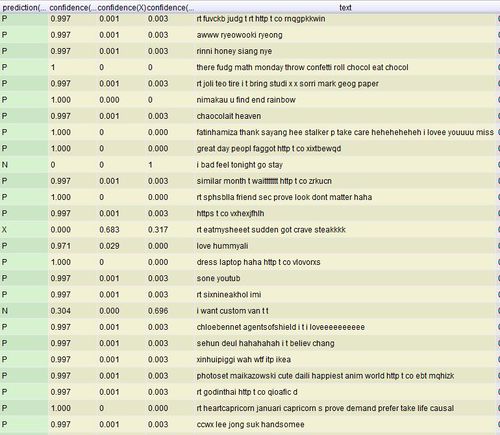

|[[File:Prediction.JPG|center|500px]]||

# From the performance output, we achieved 44.6% accuracy when the model was cross-validated on the original 1000 manually tagged tweets. To verify this accuracy, we randomly extracted 100 tweets from the new set of 5000 tweets, tagged them manually and compared our tags with the model's predictions. The model achieved an accuracy of '''46%''' on this sample, close to the 44.2% accuracy from the X-Validation operator.
|}
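The Naive Bayes and X-validation steps above can be sketched in plain Python. This is a minimal illustration, not RapidMiner's implementation, and it rests on several assumptions:

* each tweet has already been turned into a numeric word vector (for example by the Process Documents step);
* each attribute is modelled with a per-label Gaussian, following the "means and variances" description above;
* `var_eps` is a hypothetical variance floor that prevents division by zero for words never seen with a label;
* the 10 folds are assigned by shuffled round-robin rather than by stratified sampling.

All function names are hypothetical.

```python
import math
import random
from collections import defaultdict


def fit_gaussian_nb(X, y, var_eps=1e-3):
    """Estimate a log prior plus per-attribute means and variances for each label.

    var_eps is a hypothetical variance floor, not a RapidMiner parameter.
    """
    rows_by_label = defaultdict(list)
    for row, label in zip(X, y):
        rows_by_label[label].append(row)
    model = {}
    for label, rows in rows_by_label.items():
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        variances = [sum((v - m) ** 2 for v in col) / n + var_eps
                     for col, m in zip(zip(*rows), means)]
        model[label] = (math.log(n / len(X)), means, variances)
    return model


def predict(model, row):
    """Return the label with the highest log posterior, treating attributes as independent."""
    def log_posterior(label):
        log_prior, means, variances = model[label]
        # Independence assumption: sum per-attribute Gaussian log-likelihoods
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (x - m) ** 2 / (2 * var)
            for x, m, var in zip(row, means, variances))
    return max(model, key=log_posterior)


def cross_validate(X, y, k=10, seed=0):
    """k-fold cross-validation; returns (mean accuracy, standard deviation over folds)."""
    if len(X) < k:
        raise ValueError("need at least k examples")
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # round-robin, not stratified
    accuracies = []
    for fold in folds:
        held_out = set(fold)
        train = [i for i in indices if i not in held_out]
        model = fit_gaussian_nb([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(model, X[i]) == y[i] for i in fold)
        accuracies.append(correct / len(fold))
    mean = sum(accuracies) / k
    std = math.sqrt(sum((a - mean) ** 2 for a in accuracies) / k)
    return mean, std
```

Called as `cross_validate(vectors, labels)`, this returns a mean accuracy and a spread across folds. These are similar in form to the accuracy and deviation figures reported in the pruning results.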

===Improving accuracy===

One way to improve the accuracy of the model is to remove words that appear infrequently within the given set of documents. Removing these words ensures that the remaining words used for classification are mentioned a significant number of times. The challenge, however, is to determine how many occurrences a word needs before it is taken into account for classification. Note that the higher the threshold, the smaller the resulting word list.

We experimented with multiple values to determine how many words to prune, bearing in mind that we need a sizeable word list that still yields a high enough accuracy.

*Percentage pruned refers to the words removed from the word list because they occur in fewer than the given percentage of documents. For example, with 1% pruned from a set of 1000 documents, words that appear in fewer than 10 documents are removed from the word list.

[[File:PercentagePruned.JPG|center|500px]]

{| class="wikitable" width="600px" style="margin: 1em auto 1em auto; text-align:right;"
|-
! Percentage Pruned !! Percentage Accuracy !! Deviation !! Size of resulting word list


From the results, we can infer that a large number of words (3680) appear in fewer than 5 documents: the size of the word list falls from 3833 to 153 when the percentage pruned is set at 0.5%.

</div>

==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Pitfalls of using conventional text analysis on social media data</font></div>==

===Multiple languages===

===Misspelled words and abbreviations===

===Length of status===

===Other media types===

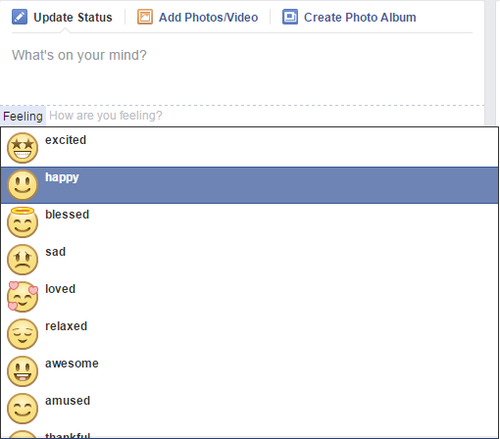

One way in which Facebook may make such analysis easier is by allowing users to specify how they are feeling at the moment of posting a status. With this option, Facebook has effectively increased the probability of determining the user's actual sentiment at that point in time. This reduces the risk of sarcasm or other implied sentiments within the post being misinterpreted.

[[File:Fb sentiment tagging.png|center|500px]]

</div>


==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Future extension</font></div>==

<div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; ">


</div>

Revision as of 16:47, 17 April 2015


Change in project scope

Having consulted with our professor, we have decided to shift our focus away from developing a dashboard and delve deeper into text analysis of social media data, specifically Twitter data. Social media has changed the way consumers provide feedback on the products they consume. Social media data can be mined, analysed and turned into value propositions that change the way companies brand themselves. Although such data is easy for anyone to obtain, several challenges can hamper the effectiveness of its analysis. Through this project, we explore some of these challenges and ways in which we can overcome them.

Methodology: Text analytics using RapidMiner

Download RapidMiner here

Setting up RapidMiner for text analysis

To carry out text processing in RapidMiner, we need to download the required plugin from RapidMiner's plugin repository. Click on Help > Manage Extensions and search for the text processing module. Once the plugin is installed, it should appear in the "Operators" window.

Data Preparation

In RapidMiner, there are several ways to read data from a file or a database. In our case, we read tweets provided by the LARC team, which were in JSON format. RapidMiner can read JSON strings, but it cannot read nested arrays within them. Because of this restriction, we had to extract the text from each JSON string before using RapidMiner for text analysis. We did this by converting each JSON string into a JavaScript object, extracting only the ID and text of each tweet, and writing them to a comma-separated values (.csv) file to be processed later in RapidMiner.

Defining a Standard

Before we can create a model for classifying tweets by polarity, we first have to define a standard for the classifier to learn from. To obtain such a standard, the three of us manually tagged a random sample of 1000 tweets, by mutual agreement, with one of three categories: Positive (P), Negative (N) and Neutral (X). One challenge was interpreting irony, since even humans sometimes have difficulty recognising sarcasm. A University of Pittsburgh study reports that human annotators agree on the sentiment of a sentence only 80% of the time.[1]

With the tweets and their respective classifications, we were ready to create a machine learning model of tweet sentiment.

Creating a Model

1. Tokenizing the tweet by word

Tokenization is the process of breaking a stream of text into words or other meaningful elements called tokens. Punctuation marks and other characters such as brackets and hyphens are removed.

2. Converting words to lowercase

All words are transformed to lowercase. Otherwise the same word would be counted as two different tokens depending on its case (e.g. "Good" and "good").

3. Eliminating stopwords

The most common words, such as prepositions, articles and pronouns, are eliminated. This reduces the amount of text data and improves system performance.

4. Filtering out tokens shorter than 3 letters

Tokens are filtered by length (i.e. the number of characters they contain). We set the minimum length to 3 characters.

5. Stemming using the Porter2 stemmer

Stemming reduces words to their stem, base or root form. It keeps the core of a word, so variants that convey effectively the same meaning (e.g. "connected" and "connecting", both reduced to "connect") are counted as one token.

Porter Stemmer vs Snowball (Porter2)[2]

Porter: Probably the most commonly used stemmer, and also one of the most gentle. It is one of the more computationally intensive algorithms, though not by a very significant margin. It is also the oldest of these algorithms by a large margin.

Snowball (Porter2): Nearly universally regarded as an improvement over Porter, and for good reason. Porter himself admits that Snowball is better than his original algorithm. It has a slightly faster computation time than Porter, with a fairly large community around it.

We use the Porter2 stemmer.
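Steps 1 to 4 above can be approximated in a few lines of Python. This is a minimal sketch under stated assumptions, not RapidMiner's implementation:

* the tokenizer splits on any character that is not an ASCII letter;
* `STOPWORDS` is a short hypothetical list standing in for a full English stopword list;
* stemming (step 5) is omitted. A Porter2 implementation is available in NLTK as `SnowballStemmer("english")`.

```python
import re

# Hypothetical, abbreviated English stopword list for illustration only.
STOPWORDS = {
    "a", "an", "the", "and", "or", "but", "is", "are", "was", "were",
    "i", "you", "he", "she", "it", "we", "they", "me", "my", "this", "that",
    "in", "on", "at", "to", "of", "for", "with", "from", "by",
}
MIN_TOKEN_LENGTH = 3  # step 4: tokens shorter than this are dropped


def tokenize(text):
    """Step 1: split on runs of non-letter characters, discarding punctuation."""
    return [token for token in re.split(r"[^A-Za-z]+", text) if token]


def preprocess(text):
    tokens = tokenize(text)
    tokens = [t.lower() for t in tokens]                        # step 2: lowercase
    tokens = [t for t in tokens if t not in STOPWORDS]          # step 3: stopwords
    tokens = [t for t in tokens if len(t) >= MIN_TOKEN_LENGTH]  # step 4: length filter
    return tokens


print(preprocess("I LOVE the new phone, but the battery is bad!!"))
# ['love', 'new', 'phone', 'battery', 'bad']
```

Note that hyphenated words such as "well-known" become two tokens here, consistent with hyphens being treated as separators.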

Improving accuracy

One way to improve the accuracy of the model is to remove words that appear infrequently within the given set of documents. Removing these words ensures that the remaining words used for classification are mentioned a significant number of times. The challenge, however, is to determine how many occurrences a word needs before it is taken into account for classification. Note that the higher the threshold, the smaller the resulting word list.

We experimented with multiple values to determine how many words to prune, bearing in mind that we need a sizeable word list that still yields a high enough accuracy.

- Percentage pruned refers to the words removed from the word list because they occur in fewer than the given percentage of documents. For example, with 1% pruned from a set of 1000 documents, words that appear in fewer than 10 documents are removed from the word list.

| Percentage Pruned | Percentage Accuracy | Deviation | Size of resulting word list |
|---|---|---|---|
| 0% | 39.3% | 5.24% | 3833 |
| 0.5% | 44.2% | 4.87% | 153 |
| 1% | 42.2% | 2.68% | 47 |
| 2% | 45.1% | 1.66% | 15 |
| 5% | 43.3% | 2.98% | 1 |

From the results, we can infer that a large number of words (3680) appear in fewer than 5 documents: the size of the word list falls from 3833 to 153 when the percentage pruned is set at 0.5%.
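The percentage-pruning rule defined above can be sketched in Python. This illustrates the rule as described here, not RapidMiner's code. A word is kept only if it appears in at least the given percentage of documents, and each document counts at most once per word. The documents are assumed to be already tokenized, and the function names are hypothetical.

```python
from collections import Counter


def document_frequencies(docs):
    """Count, for each word, the number of documents it appears in."""
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))  # set(): count a word once per document
    return df


def prune_vocabulary(docs, percent):
    """Keep words that appear in at least `percent`% of the documents."""
    min_docs = percent * len(docs) / 100  # e.g. 1% of 1000 documents -> 10.0
    df = document_frequencies(docs)
    return sorted(word for word, count in df.items() if count >= min_docs)


docs = [["good", "phone"], ["good", "battery"], ["bad", "phone"], ["good"]]
print(prune_vocabulary(docs, 50))  # ['good', 'phone']
```

With 1000 documents, `prune_vocabulary(docs, 0.5)` keeps only words found in at least 5 documents, which matches the 0.5% row of the table.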

Pitfalls of using conventional text analysis on social media data

Multiple languages

Misspelled words and abbreviations

Length of status

Other media types

Improving the effectiveness of sentiment analysis of social media data

Allowing the user to tag their feelings to their status

One way in which Facebook may make such analysis easier is by allowing users to specify how they are feeling at the moment of posting a status. With this option, Facebook has effectively increased the probability of determining the user's actual sentiment at that point in time. This reduces the risk of sarcasm or other implied sentiments within the post being misinterpreted.

Future extension

References

1. Wiebe, J., Wilson, T., & Cardie, C. (2005). Annotating Expressions of Opinions and Emotions in Language. Language Resources and Evaluation, 165-210. Retrieved from http://people.cs.pitt.edu/~wiebe/pubs/papers/lre05.pdf

2. Tyranus, S. (2012, June 26). What are the major differences and benefits of Porter and Lancaster Stemming algorithms? Retrieved from http://stackoverflow.com/questions/10554052/what-are-the-major-differences-and-benefits-of-porter-and-lancaster-stemming-alg