Social Media & Public Opinion - Project Overview

Introduction and Project Background

Related Work

The paper Sentiment Analysis of Twitter Audiences: Measuring the Positive or Negative Influence of Popular Twitterers (Bae & Lee, 2012)[7] analysed over 3 million tweets mentioning, retweeting or replying to the 13 most influential users and investigated whether popular users influence the sentiment changes of their audiences positively or negatively. The results show that most popular users, such as celebrities, have larger positive audiences than negative ones, while news media such as CNN Breaking News and BBC Breaking News have larger negative audiences. The reason given was that Western news agencies mostly report the downside of human nature rather than upbeat and hopeful happenings, whereas social media outlets like Mashable and TechCrunch have more positive audiences because they publish useful information and news. In Singapore, the top 20 accounts with the most followers consist of a mix of notable people such as JJ Lin and Lee Hsien Loong and organisations like The Straits Times and Singapore Airlines (Chin, 2014)[8]. We can check whether the same pattern holds in Singapore by looking at specific peaks in the trend graph and determining whether each peak is caused by an influential Twitter user, such as a news agency, a prominent blogger or celebrity, or a government leader.

Based on retweets of more than 165 000 tweets, the paper Emotions and Information Diffusion in Social Media—Sentiment of Microblogs and Sharing Behavior (Stieglitz & Dang-Xuan, 2013)[9] examines whether sentiment occurring in social media content is associated with a user’s information sharing behaviour. The results show that emotionally charged tweets, in particular those containing political content, are more likely to be disseminated than neutral ones. In our project, we can examine whether political news or messages from news agencies and politicians cause emotions to run high and create conversation among Twitter users. In A Sentiment Analysis of Singapore Presidential Election 2011 using Twitter Data with Census Correction (Choy et al., 2011)[10], sentiment analysis translated into rather accurate information about the political landscape in Singapore. It correctly predicted the top two contenders in a four-cornered fight and that there would be a thin margin between them. This suggests that sentiment analysis has value in anticipating future events.

A real-time, text-based Hedonometer was built to measure the happiness of over 63 million Twitter users over 33 months, as recorded in the paper Temporal Patterns of Happiness and Information in a Global Social Network: Hedonometrics and Twitter (Dodds et al., 2011)[11]. It shows how a highly robust and tunable metric can be constructed with a word list chosen solely by frequency of usage.

Motivation & Project Scope

High-Level Requirements

The system will include the following:

- A timeline based on the tweets provided

- The timeline will display the level of happiness as well as the volume of tweets.

- Each point on the timeline will provide additional information like the overall happiness scores, the level of sentiments for each specific category etc.

- Linked graphical representations based on the timeline

- Graphs to represent the aggregated user attributes (gender, age groups etc.)

- Comparison between two different user-defined time periods

- Optional toggling of volume of tweets with sentiment timeline graph

Work Scope

- Data Collection – Collect Twitter data to be analysed from LARC

- Data Preparation – Clean and transform the data into a readable CSV for upload

- Application Calculations and Filtering – Perform calculations and filters on the data in the app

- Dashboard Construction – Build the application’s dashboard and populate with data

- Dashboard Calibration – Finalize and verify the accuracy of dashboard visualizations

- Stress Testing and Refinement – Test whether the software’s performance meets the clients’ minimum requirements and perform any optimizations needed to meet them.

- Literature Study – Understand sentiment and text analysis in social media

- Software Learning – Learn how to use and integrate various D3.js / Highcharts libraries, and the dictionary word search provided by the client.

Deliverables

- Project Proposal

- Mid-term presentation

- Mid-term report

- Final presentation

- Final report

- Project poster

- A web-based platform hosted on OpenShift.

Dashboard Prototype

Methodology

Dashboard

The interactive visual prototype should let the user view past tweets around certain significant events and draw conclusions from the results shown. To achieve this, we propose the following methodology. The user will provide the tweet data by uploading a CSV file containing the tweets in JSON format.

First, we will display an overview of the tweets under analysis. Tweets will be aggregated into intervals based on the time span covered by the tweets in the uploaded file. Each tweet will be tagged with a “happiness” score derived from the study at Hedonometer.org (please refer to the study to find out how the score is derived). Of the 10,100 words that have a score, some may not be applicable to the language used on Twitter. Such words will not be used to calculate a tweet’s score and will be treated as stop/neutral words in the application.
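As a minimal sketch of the scoring step, the snippet below averages the dictionary scores of the words in a tweet and skips stop/neutral words. The dictionary entries, the excluded-word list, the tokenizer and the function names are all illustrative assumptions, not the actual Hedonometer data or the client's word search.

```javascript
// Hypothetical excerpt of a word-to-happiness dictionary.
// The words and values are illustrative placeholders, not real Hedonometer scores.
const happinessDictionary = new Map([
  ["happy", 8.3],
  ["love", 8.4],
  ["rain", 5.0],
  ["sad", 2.4],
  ["hate", 2.3],
]);

// Words treated as stop/neutral in the application (assumed list).
const excludedWords = new Set(["rain"]);

// Lowercase the text and split it into word tokens (letters and apostrophes only).
function tokenize(text) {
  return text.toLowerCase().match(/[a-z']+/g) || [];
}

// Average the scores of all scored, non-excluded words in a tweet.
// Repeated words count each time they occur.
// Returns null when no word in the tweet can be scored.
function scoreTweet(text, dictionary, excluded) {
  let total = 0;
  let count = 0;
  for (const word of tokenize(text)) {
    if (excluded.has(word) || !dictionary.has(word)) continue;
    total += dictionary.get(word);
    count += 1;
  }
  return count === 0 ? null : total / count;
}

const example = scoreTweet("Sad rain, but love!", happinessDictionary, excludedWords);
console.log(example); // average of the scores for "sad" and "love"
```

Returning null rather than a default score keeps unscorable tweets out of the averages while still letting them count towards tweet volume.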

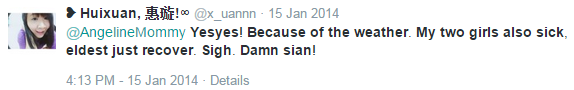

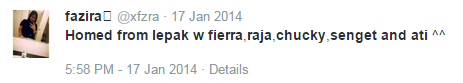

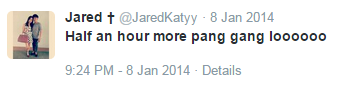
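The interval aggregation behind the timeline could look roughly like the sketch below. It assumes each tweet has already been reduced to a millisecond timestamp and a score (or null when unscorable). The rule for picking the bucket width from the upload's time span is a hypothetical placeholder, as are all function and field names.

```javascript
const HOUR_MS = 60 * 60 * 1000;
const DAY_MS = 24 * HOUR_MS;

// Hypothetical rule: hourly buckets for uploads spanning up to two days, daily otherwise.
function chooseIntervalMs(tweets) {
  let earliest = Infinity;
  let latest = -Infinity;
  for (const tweet of tweets) {
    earliest = Math.min(earliest, tweet.timestamp);
    latest = Math.max(latest, tweet.timestamp);
  }
  return latest - earliest <= 2 * DAY_MS ? HOUR_MS : DAY_MS;
}

// Group tweets into fixed-width buckets and report volume and average happiness per bucket.
// `tweets` is an array of { timestamp: <ms since epoch>, score: <number or null> }.
function aggregateByInterval(tweets, intervalMs) {
  const buckets = new Map();
  for (const tweet of tweets) {
    const start = Math.floor(tweet.timestamp / intervalMs) * intervalMs;
    if (!buckets.has(start)) {
      buckets.set(start, { start, volume: 0, scoreSum: 0, scored: 0 });
    }
    const bucket = buckets.get(start);
    bucket.volume += 1; // every tweet counts towards volume
    if (tweet.score !== null) {
      bucket.scoreSum += tweet.score; // only scored tweets affect the average
      bucket.scored += 1;
    }
  }
  return [...buckets.values()]
    .sort((a, b) => a.start - b.start)
    .map((b) => ({
      start: b.start,
      volume: b.volume,
      averageHappiness: b.scored === 0 ? null : b.scoreSum / b.scored,
    }));
}
```

Each returned entry corresponds to one point on the timeline, carrying both the tweet volume and the happiness level for that interval.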

To visualise the words mentioned in these tweets, we will use a dynamically generated word cloud. A word cloud shows users which words are most commonly mentioned in the tweets: the more often a word is mentioned, the bigger it appears in the cloud. Stop/neutral words will be removed to ensure that only relevant words show up in the word cloud. One thing to note is that the text comes from Twitter, which means that, depending on the users, the tweets may contain localized words that are hard to filter out. The list of stop words that we will be using to filter will be based upon this list.
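The counting behind the word cloud can be sketched as follows. The stop-word list here is a short placeholder rather than the project's actual list, and the linear mapping from count to font size is an assumption made for illustration.

```javascript
// Placeholder stop-word list; the project's real list is not reproduced here.
const cloudStopWords = new Set(["the", "a", "is", "to", "and", "of"]);

// Count how often each non-stop word appears across all tweet texts.
function countWords(tweetTexts, stopWords) {
  const counts = new Map();
  for (const text of tweetTexts) {
    for (const word of text.toLowerCase().match(/[a-z']+/g) || []) {
      if (stopWords.has(word)) continue;
      counts.set(word, (counts.get(word) || 0) + 1);
    }
  }
  return counts;
}

// Turn counts into { text, count, size } entries, most frequent first.
// Font size grows linearly with the count between minSize and maxSize (assumed scaling).
function toCloudEntries(counts, minSize = 12, maxSize = 48) {
  const entries = [...counts].map(([text, count]) => ({ text, count }));
  if (entries.length === 0) return [];
  entries.sort((a, b) => b.count - a.count);
  const max = entries[0].count;
  const min = entries[entries.length - 1].count;
  return entries.map((entry) => ({
    ...entry,
    size:
      max === min
        ? maxSize
        : minSize + ((entry.count - min) / (max - min)) * (maxSize - minSize),
  }));
}
```

The resulting entries can then be handed to whichever word cloud renderer the dashboard uses. Localized words would pass through this filter unchanged unless they are added to the stop-word list.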

Secondly, there is a list of predicted user attributes that is provided by the client. Each line contains attributes of one user in JSON format. The information is shown below:

- id: the user’s Twitter ID

- gender

- ethnicity

- religion

- age_group

- marital_status

- sleep

- emotions

- topics

These predicted user attributes will be displayed in the second segment, where the application gives users a quick overview of the demographics of the Twitter users.
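Since each line of the attribute file holds one user as JSON, the demographic charts could be fed by a sketch like the one below. The sample values (for example "male" or "20-29") are invented for illustration, because the actual value formats in the client's file are not documented here.

```javascript
// Parse a file in which every non-empty line is one JSON object describing a user.
function parseUserLines(fileText) {
  return fileText
    .split(/\r?\n/)
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line));
}

// Count how many users share each value of a given attribute (e.g. "gender").
// Users without the attribute are grouped under "unknown".
function countByAttribute(users, attribute) {
  const counts = {};
  for (const user of users) {
    const raw = user[attribute];
    const value = raw === undefined || raw === null ? "unknown" : String(raw);
    counts[value] = (counts[value] || 0) + 1;
  }
  return counts;
}

// Hypothetical sample input; attribute values are made up for this example.
const sampleUserFile = [
  '{"id": 1, "gender": "male", "age_group": "20-29"}',
  '{"id": 2, "gender": "female", "age_group": "20-29"}',
  '{"id": 3, "gender": "male"}',
].join("\n");

const sampleUsers = parseUserLines(sampleUserFile);
console.log(countByAttribute(sampleUsers, "gender"));
console.log(countByAttribute(sampleUsers, "age_group"));
```

The per-attribute counts map directly onto the categories and values of a bar or pie chart.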

Third, we will also display the scores of the words mentioned, based upon their happiness level. This will allow the user to quickly identify the words that are contributing to the negativity or positivity of the set of tweets.
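One possible way to rank the words driving positivity or negativity is sketched below: each word's contribution is its frequency multiplied by how far its score lies from a neutral midpoint. The midpoint of 5 and this weighting are assumptions for illustration, not a method prescribed by the client.

```javascript
// Rank words by how strongly they pull the overall score up or down.
// `counts` maps word -> frequency; `dictionary` maps word -> happiness score.
// `neutral` is an assumed midpoint of the scoring scale.
function wordContributions(counts, dictionary, neutral = 5) {
  const rows = [];
  for (const [word, count] of counts) {
    if (!dictionary.has(word)) continue; // unscored words contribute nothing
    const score = dictionary.get(word);
    rows.push({ word, count, score, contribution: count * (score - neutral) });
  }
  // Largest absolute contribution first, whether positive or negative.
  return rows.sort((a, b) => Math.abs(b.contribution) - Math.abs(a.contribution));
}
```

A negative contribution marks a word that drags the set of tweets towards negativity, and a positive one marks a word that lifts it.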

The application will be entirely browser-based. Some of the benefits of doing so include:

- Client does not need to download any software to run the application

- It is clean and fast, as most people who own a computer already have a browser installed by default

- It is highly scalable. Work is done on the front-end rather than on the server which may be choked when handling too many requests.

HTML5 and CSS3 will be used primarily for the display. JavaScript will be used to manipulate the document objects on the front end. Some of the open-source plugins and services that we will be using include:

- Highcharts – a visualisation plugin to create charts quickly.

- jQuery – a cross-platform JavaScript library designed to simplify the client-side scripting of HTML

- OpenShift – a free online hosting platform for live deployment

- Moment.js – date manipulation plugin

Machine Learning

Lexical Affinity

Lexical Affinity assigns arbitrary words a probabilistic affinity for a particular topic or emotion. For example, ‘accident’ might be assigned a 75% probability of indicating a negative event, as in ‘car accident’ or ‘hurt in an accident’. There are a few lexical affinity types that share high co-occurrence frequency of their constituents [1]:

- grammatical constructs (e.g. “due to”)

- semantic relations (e.g. “nurse” and “doctor”)

- compounds (e.g. “New York”)

- idioms and metaphors (e.g. “dead serious”)

The way to do this is to first define the support and confidence thresholds that we are willing to accept before associating words with one another. As a rule of thumb, we will use 75%.
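The thresholding step can be sketched as follows, using the usual association-rule notions of support and confidence, which the next paragraph defines. The sketch assumes each tweet has already been reduced to an array of content words with stop words removed. The function names and the example thresholds are illustrative only.

```javascript
// All unordered pairs of distinct words in one tweet.
function uniquePairs(words) {
  const unique = [...new Set(words)];
  const pairs = [];
  for (let i = 0; i < unique.length; i += 1) {
    for (let j = i + 1; j < unique.length; j += 1) {
      pairs.push([unique[i], unique[j]]);
    }
  }
  return pairs;
}

// For every co-occurring pair, emit the rules first -> second in both directions.
// support    = tweets containing both words / all tweets
// confidence = tweets containing both words / tweets containing `first`
function associationRules(tweets) {
  const wordCounts = new Map(); // tweets containing each word
  const pairCounts = new Map(); // tweets containing each unordered pair
  const bump = (map, key) => map.set(key, (map.get(key) || 0) + 1);
  for (const words of tweets) {
    for (const word of new Set(words)) bump(wordCounts, word);
    for (const pair of uniquePairs(words)) bump(pairCounts, [...pair].sort().join("|"));
  }
  const rules = [];
  for (const [key, count] of pairCounts) {
    const [a, b] = key.split("|");
    const support = count / tweets.length;
    rules.push({ first: a, second: b, support, confidence: count / wordCounts.get(a) });
    rules.push({ first: b, second: a, support, confidence: count / wordCounts.get(b) });
  }
  return rules;
}

// Keep only the rules that meet both thresholds.
function selectRules(rules, minSupport, minConfidence) {
  return rules.filter((r) => r.support >= minSupport && r.confidence >= minConfidence);
}
```

For instance, `selectRules(associationRules(tweets), 0.75, 0.75)` would apply the 75% rule of thumb to both measures. Whether a single cut-off suits both support and confidence on real Twitter data would need to be checked during testing.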

The support of a bigram (a pair of words) is defined as the proportion of all tweets (word sets) that contain both words. Essentially, it checks whether the two words occur together often enough for the pairing to be considered significant. The confidence of a rule is defined as the number of tweets containing both words divided by the number of tweets containing the first of the two words. Each tweet may contain more than one pairing. For example, "It's a pleasant and wonderful experience" yields three pairings: [pleasant, wonderful], [pleasant, experience] and [wonderful, experience]. Once we have determined the support and confidence of each of these pairings, we will be able to generate a new dictionary containing these pairings to be run on new data.

Testing our New Dictionary

Limitations & Assumptions

What Hedonometer Cannot Detect

Negation Handling

Abbreviations, Smileys/Emoticons & Special Symbols

Local Languages & Slangs (Singlish)

Ambiguity

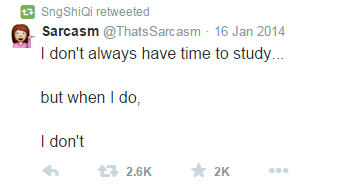

Sarcasm

Project Overall

| Limitations | Assumptions |
| --- | --- |
| Insufficient predicted information on the users (location, age etc.) | Data given by LARC is sufficiently accurate for the user |
| Fake Twitter users | LARC will determine whether the users are real |
| Ambiguity of the emotions | Emotions given by the dictionary (as instructed by LARC) are conclusive for the tweets provided |
| Dictionary words limited to the ones instructed by LARC | A comprehensive study has been done to come up with the dictionary |

ROI Analysis

Future Work

- Scale to larger sets of data without degrading response time and performance

- Accommodate real-time data to provide instantaneous analytics on the go

References

- About Twitter. (2014, December). Retrieved from https://about.twitter.com/company

- Kemp, S. (2015, January 21). Digital, Social & Mobile in 2015. Retrieved from http://wearesocial.sg/blog/2015/01/digital-social-mobile-2015/

- Yap, J. (2014, June 4). How many Twitter users are there in Singapore? Retrieved April 22, 2015, from https://vulcanpost.com/10812/many-twitter-users-singapore/

- Gaza takes Twitter by storm. (2014, August 20). Retrieved April 22, 2015, from http://www.vocfm.co.za/gaza-takes-twitter-by-storm/

- MasterMineDS. (2014, August 6). 2014 Israel – Gaza Conflict: Twitter Sentiment Analysis. Retrieved April 22, 2015, from http://www.wesaidgotravel.com/2014-israel-gaza-conflict-twitter-sentiment-analysis-mastermineds

- Thelwall, M., Buckley, K., & Paltoglou, G. (2011). Sentiment in Twitter events. Journal of the American Society for Information Science and Technology, 62(2), 406-418.

- Bae, Y., & Lee, H. (2012). Sentiment analysis of twitter audiences: Measuring the positive or negative influence of popular twitterers. Journal of the American Society for Information Science and Technology, 63(12), 2521-2535.

- Chin, D. (2014, December 10). The Straits Times tops Twitter's list for news in Singapore. Retrieved from http://www.straitstimes.com/news/singapore/more-singapore-stories/story/the-straits-times-trumps-twitter’s-list-news-singapore-2

- Stieglitz, S., & Dang-Xuan, L. (2013). Emotions and Information Diffusion in Social Media—Sentiment of Microblogs and Sharing Behavior. Journal of Management Information Systems, 29(4), 217-248.

- Choy, M., Cheong, M. L., Laik, M. N., & Shung, K. P. (2011). A sentiment analysis of Singapore Presidential Election 2011 using Twitter data with census correction. arXiv preprint arXiv:1108.5520.

- Dodds, P. S., Harris, K. D., Kloumann, I. M., Bliss, C. A., & Danforth, C. M. (2011). Temporal Patterns of Happiness and Information in a Global Social Network: Hedonometrics and Twitter. PLoS ONE, 6(12), e26752. doi:10.1371/journal.pone.0026752

- Clifton, J. (2012, November 21). Singapore Ranks as Least Emotional Country in the World. Retrieved from http://www.gallup.com/poll/158882/singapore-ranks-least-emotional-country-world.aspx

- Clifton, J. (2012, December 19). Latin Americans Most Positive in the World, Singaporeans are the least positive worldwide. Retrieved February 9, 2015, from http://www.gallup.com/poll/159254/latin-americans-positive-world.aspx