Difference between revisions of "ANLY482 AY2017-18T2 Group03 Project Overview"

Jytay.2014 (talk | contribs) |

|||

| (77 intermediate revisions by 3 users not shown) | |||

| Line 4: | Line 4: | ||

<br/> | <br/> | ||

<!--Main Navigation--> | <!--Main Navigation--> | ||

| − | |||

<!--Insert Group Image later--> | <!--Insert Group Image later--> | ||

{|style="background-color:#ffffff; color:#000000; width="100%" cellspacing="0" cellpadding="10" border="0" | | {|style="background-color:#ffffff; color:#000000; width="100%" cellspacing="0" cellpadding="10" border="0" | | ||

| Line 12: | Line 11: | ||

|style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width="13%" | [[ANLY482_AY2017-18T2_Group03 About Us|<font color="#FFFFFF" size=3><b>ABOUT US</b></font>]] | |style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width="13%" | [[ANLY482_AY2017-18T2_Group03 About Us|<font color="#FFFFFF" size=3><b>ABOUT US</b></font>]] | ||

| − | |style="font-size:88%; border-left:1px solid #149de7; border-right:1px solid #149de7; text-align:center; border-bottom:1px solid #149de7; border-top:1px solid #149de7;" width=" | + | |style="font-size:88%; border-left:1px solid #149de7; border-right:1px solid #149de7; text-align:center; border-bottom:1px solid #149de7; border-top:1px solid #149de7;" width="15%" |[[ANLY482_AY2017-18T2_Group03 Project Overview |<font color="#149de7" size=3><b>PROJECT OVERVIEW</b></font>]] |

|style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width="18%" |[[ANLY482_AY2017-18T2_Group03 Data Analysis |<font color="#FFFFFF" size=3><b>DATA ANALYSIS </b></font>]] | |style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width="18%" |[[ANLY482_AY2017-18T2_Group03 Data Analysis |<font color="#FFFFFF" size=3><b>DATA ANALYSIS </b></font>]] | ||

| − | |style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width=" | + | |style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width="15%" |[[ANLY482_AY2017-18T2_Group03 Project Management |<font color="#FFFFFF" size=3><b>PROJECT MANAGEMENT</b></font>]] |

|style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width="17%" |[[ANLY482_AY2017-18T2_Group03 Documentation | <font color="#FFFFFF" size=3><b>DOCUMENTATION</b></font>]] | |style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width="17%" |[[ANLY482_AY2017-18T2_Group03 Documentation | <font color="#FFFFFF" size=3><b>DOCUMENTATION</b></font>]] | ||

| + | |||

| + | |style="font-size:88%; border-left:1px solid #ffffff; border-right:1px solid #ffffff; text-align:center; background-color:#149de7; " width="17%" |[[ANLY482_AY2017-18_Term_2 | <font color="#FFFFFF" size=3><b>MAIN PAGE</b></font>]] | ||

|} | |} | ||

| − | |||

| − | < | + | <!---------------START of sub menu ----------------------> |

| + | {| style="background-color:#ffffff; margin: 3px auto 0 auto" width="55%" | ||

| + | |- | ||

| + | ! style="font-size:15px; text-align: center; border-top:solid #ffffff; border-bottom:solid #ffffff" width="150px"| [[ANLY482_AY2017-18T2_Group03 Project Overview Old| <span style="color:#149de7">Previous</span>]] | ||

| + | ! style="font-size:15px; text-align: center; border-top:solid #ffffff; border-bottom:solid #ffffff" width="20px"| | ||

| + | |||

| + | ! style="font-size:15px; text-align: center; border-top:solid #ffffff; border-bottom:solid #149de7" width="150px"| [[ANLY482_AY2017-18T2_Group03 Project Overview| <span style="color:#149de7">Current</span>]] | ||

| + | ! style="font-size:15px; text-align: center; border-top:solid #ffffff; border-bottom:solid #ffffff" width="20px"| | ||

| − | <div style="border-style: solid; border-width:0; background: #149de7; padding: 7px; font-weight: bold; text-align:left; line-height: wrap_content; text-indent: 20px; font-size:20px; font-family:Trebuchet MS;border-bottom:5px solid white; border-top:5px solid black"><font color= #FFFFFF>Motivation</font></div> | + | |} |

| + | <!---------------END of sub menu ----------------------> | ||

| + | <br/> | ||

| + | ==<div style="border-style: solid; border-width:0; background: #149de7; padding: 7px; font-weight: bold; text-align:left; line-height: wrap_content; text-indent: 20px; font-size:20px; font-family:Trebuchet MS;border-bottom:5px solid white; border-top:5px solid black"><font color= #FFFFFF>Motivation</font></div>== | ||

| − | + | The company has stayed ahead of their competition by embracing technology in the early days and now, they are starting to dabble into analytics in order to help make use of the large amount of data that they have generated throughout the course of their business. Currently, these information is being stored in their proprietary Warehouse Management System (WMS) which captures transaction information whenever goods are moving in, out or within the warehouse. | |

| − | |||

| − | |||

| − | |||

| − | + | The amount of information being managed by the system is massive and it is very complicated, thus managers have to spend time to use pivot tables in excel to better visualize the information being generated in order to get a better view of how the current operations is being run. This uses up valuable time which could have been used for other more important operational matters. | |

| − | + | One important information that is very useful to a warehouse manager is an ABC analysis which is an inventory categorization technique. The purpose of this analysis is to classify the inventory into 3 categories. | |

| − | + | The most general way of categorization is: | |

| + | *‘A’ items – 20% of the items accounts for 80% of the annual consumption value of the items. | ||

| + | *‘B’ items - 30% of the items accounts for 15% of the annual consumption value of the items. | ||

| + | *‘C’ items - 50% of the items accounts for 5% of the annual consumption value of the items. | ||

| − | + | Not only that, in order to provide even more better insight for their customers, they would also like to be able to have a better view of the <b>seasonality</b> patterns of the products, as well as an <b>affinity analysis</b> of the products to further improve the way the products can be organized in the warehouse to increase the efficiency of the warehouse operations. | |

| − | </font></div> | + | =<div style="border-style: solid; border-width:0; background: #149de7; padding: 7px; font-weight: bold; text-align:left; line-height: wrap_content; text-indent: 20px; font-size:20px; font-family:Trebuchet MS;border-bottom:5px solid white; border-top:5px solid black"><font color= #FFFFFF>Objective and Goals</font></div>= |

| − | + | The main objectives of the project would be to do the following: | |

| − | + | 1. Create a Operations Dashboard to visualize the following information | |

| − | + | *Inbound / Outbound Volume Time Series Chart | |

| − | + | **The purpose of this chart is to visualize the operations performance at various time slices so as to allow operations to have a clear view of the inventory movement patterns for the various SKUs | |

| + | **X-axis: Time unit (day, week, month, quarter, year) | ||

| + | **Y-axis: No. of units of product | ||

| + | **Lines: Each line would symbolize the trend for a different SKU. | ||

| − | + | *Product Ranking Chart | |

| − | * | + | **The purpose of this chart is to find out which are the best-selling items and which are the least popular items. |

| − | * | + | **Treemap view of the products filtered by month / year |

| − | |||

| − | |||

| − | |||

| − | + | *Product Seasonality Chart | |

| + | **The purpose of this chart is to see the seasonality factors of the different products. | ||

| + | **X-axis: Month of the year | ||

| + | **Y-axis: Number of units of product | ||

| + | **Lines: Each line would symbolize the trend for a different SKU. | ||

| − | + | 2. Conduct Associative Rule learning so as to attempt to see if there are affinities within the products within the warehouse so that inventory can be better organized within the warehouse. | |

| − | |||

| − | <div style="border-style: solid; border-width:0; background: #149de7; padding: 7px; font-weight: bold; text-align:left; line-height: wrap_content; text-indent: 20px; font-size:20px; font-family:Trebuchet MS;border-bottom:5px solid white; border-top:5px solid black"><font color= #FFFFFF> | + | =<div style="border-style: solid; border-width:0; background: #149de7; padding: 7px; font-weight: bold; text-align:left; line-height: wrap_content; text-indent: 20px; font-size:20px; font-family:Trebuchet MS;border-bottom:5px solid white; border-top:5px solid black"><font color= #FFFFFF>Provided Data</font></div>= |

| + | The data provided by our sponsor are obtained from their proprietary Warehouse Management System (WMS) which is developed in-house. The Warehouse Management System is used to keep track of the movement of the goods coming in, moving out and within the warehouse itself. The process of goods coming into the warehouse is known as the Inbound process while the process of goods moving out of the warehouse is known as the Outbound process. | ||

| + | |||

| + | For this project, our sponsor has provided us with data for one of their client, which is a company selling cables and connectors. We will be provided with 3 years worth of data from year 2015 to 2017. There are 2 Excel workbooks provided, one for the Inbound goods and the other for the Outbound goods. A more in-depth explanation of the Excel workbooks will be explained below. | ||

| − | < | + | ===Inbound Report<br/>=== |

| − | + | There is a total of 308,730 rows of data spanning across January 2015 to December 2017 for the Inbound orders for this client. In 2015, there were 99,586 rows of data and this increased in 2016 to 102,379. In 2017, the number of rows of data was 106,765. | |

| − | + | Upon further clarifications with our sponsor, we realized that they have moved warehouse at the beginning of September 2016 and this lasted for around 2 weeks. Therefore, for the analysis on the products’ locations in the warehouse, we can only use the data from October 2016 to December 2017. However, for seasonality analysis, we will still use the data from January 2015 to December 2017 to find out trends in the volume of goods. From October 2016 to December 2017, there are 133,620 rows of data. | |

| − | + | The image below shows a Sample Inbound Report Excel spreadsheet. | |

| + | [[Image:Sample Inbound Report.png|800px]] | ||

| − | + | <b><i>Metadata Dictionary</i></b> | |

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Terminology !! Description | ||

| + | |- | ||

| + | | Doc_Num || Unique identifier for an inbound order. | ||

| + | |- | ||

| + | | ASN Date || Advanced Shipping Notice. ASN date represents the date when the ASN is downloaded. | ||

| + | |- | ||

| + | | GRN Date || Goods Received Note. The date when the GRN job is created. | ||

| + | |- | ||

| + | | GRN Post Date || The date when the GRN job is completed. | ||

| + | |- | ||

| + | | PA Created Date || The date when the putaway job is created. | ||

| + | |- | ||

| + | | PA Post Date || The date when the putaway job is completed. | ||

| + | |- | ||

| + | | Owner_Short_Name || The short name of the client. | ||

| + | |- | ||

| + | | Loc_Short_Name || The identifier of the location which the product is placed at. | ||

| + | |- | ||

| + | | Product_Code || Unique identifier of a product. | ||

| + | |- | ||

| + | | Sum (D.Actual_Movement_Qty) || The quantity of products received. | ||

| + | |- | ||

| + | | Conv_Factor || The number of products in each carton. | ||

| + | |- | ||

| + | | Full Carton || The number of full cartons received. | ||

| + | |- | ||

| + | | Loose Carton || The number of loose cartons received (if any). | ||

| + | |- | ||

| + | | Remarks || Remarks to inform of any loose cartons and how they are handled. | ||

| + | |} | ||

| − | + | ===Outbound Report<br/>=== | |

| + | The number of Outbound orders for this client from January 2015 to December 2017 is approximately 657,578 rows of data. In 2015, there were 220,387 rows of data and this increased in 2016 to 221,498. In 2017, the number of rows of data was 215,667. | ||

| − | + | From October 2016 to December 2017, there are 270,815 rows of data. | |

| − | + | The image below shows a Sample Outbound Report Excel spreadsheet. | |

| − | + | [[Image:Sample Outbound Report.png|800px]] | |

| − | < | + | <b><i>Metadata Dictionary</i></b> |

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Terminology !! Description | ||

| + | |- | ||

| + | | Doc_Num || Unique identifier for an outbound order. | ||

| + | |- | ||

| + | | Trans_Code || There are several trans codes: | ||

| + | * OSA (Outbound Stock Adjustment – Usually when there is a shortage or excess physical goods in the warehouse, we need to perform this in the system to ensure that the quantity tallies between system and physical) | ||

| + | * OSO (For Outbound Shipment) | ||

| + | * OOT (Outbound Ownership Transfer - When there is a change of plant code (example: from plant code 1201 to plant code 1402)) | ||

| + | * OTA (Outbound Transaction Adjustment - When there is a data entry error and this transaction code is used to reverse the wrong transaction out) | ||

| + | * OVT (Outbound Vendor Transfer - When there is short shipment) | ||

| − | + | However, we will only focus on OSO. | |

| − | + | |- | |

| + | | Product_Code || Unique identifier of a product. | ||

| + | |- | ||

| + | | Qty || The total quantity of products needed for the order. | ||

| + | |- | ||

| + | | No of CTN || Number of cartons for the order. | ||

| + | |- | ||

| + | | SO Created Date || The date when the sales order is downloaded. | ||

| + | |- | ||

| + | | SO Post Date || It refers to the date when the SO is posted. Most SO Created Dates = SO Post Date. However, when users go into WMS to make changes, the SO Post Dates will change accordingly. | ||

| + | |- | ||

| + | | Expected Delivery Date || The date which the goods is expected to be shipped out. | ||

| + | |- | ||

| + | | PlannedPGIDate || Planned DP post date. | ||

| + | |- | ||

| + | | DP Creation Date || The date when the picking list is printed for the picker to pick the goods in warehouse. | ||

| + | |- | ||

| + | | DP Commit Date || The date when the picker has finished picking. | ||

| + | |- | ||

| + | | DO Created Date || The date which the delivery order is created. It indicates that we are ready for shipment and are waiting for the transporter to load our goods. | ||

| + | |- | ||

| + | | DO Commit || The date when the delivery order is committed. This happens after the goods has left the warehouse. | ||

| + | |- | ||

| + | | LocName || The name of the location in our warehouse. | ||

| + | * Locations like 2R15C13 are the locations of our previous warehouse which has a different layout as the current warehouse. | ||

| + | |- | ||

| + | | Ship_To / TP_Full_Name || Outbound customer name. | ||

| + | |} | ||

| − | + | =<div style="border-style: solid; border-width:0; background: #149de7; padding: 7px; font-weight: bold; text-align:left; line-height: wrap_content; text-indent: 20px; font-size:20px; font-family:Trebuchet MS;border-bottom:5px solid white; border-top:5px solid black"><font color= #FFFFFF>Limitations</font></div>= | |

| + | As with any other real life projects, there are limitations which we face especially with regards to the availability of data. | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! S/N || Limitation !! Conclusion | ||

| + | |- | ||

| + | | 1 || There is no way to accurate link an Outbound shipment to an Inbound shipment. We can only link the Outbound product and Inbound product but not the exact shipment where the product is being brought into the warehouse. || We will assume a First In First Out (FIFO) order of shipment out of the warehouse as that is usually how logistics company operates. This means that the first Outbound in 2015 will be linked to the first Inbound in 2015, unless there are excess goods brought over from 2014. If so, calculations will be done based on the quantity to determine the number of each products left after Outbound shipment. | ||

| + | |- | ||

| + | | 2 || The current data provided only consists of information from 1 company, which cables and connectors, that our sponsor company is handling. || We assume that the same analysis can be replicated for the logistic warehousing of other companies’ products which are similar in nature. | ||

| + | |- | ||

| + | | 3 || The data provided from WMS might not contain all the transactions that happened due to human errors such as missed barcode scanning. || With a large enough dataset, it can be assumed that the results found by our team can be representative and considered accurate. | ||

| + | |} | ||

| − | + | =<div style="border-style: solid; border-width:0; background: #149de7; padding: 7px; font-weight: bold; text-align:left; line-height: wrap_content; text-indent: 20px; font-size:20px; font-family:Trebuchet MS;border-bottom:5px solid white; border-top:5px solid black"><font color= #FFFFFF>Important Information about the Business Process</font></div>= | |

| + | The image below shows the business workflow for the Inbound process of inventory. It also states when each date attribute is collected throughout the entire process. | ||

| + | [[Image:Inbound Business Process.png|800px]] | ||

| − | + | The image below shows the business workflow for the Outbound process of inventory. It also states when each date attribute is collected throughout the entire process. | |

| + | [[Image:Outbound Business Process.png|800px]] | ||

| − | + | =<div style="border-style: solid; border-width:0; background: #149de7; padding: 7px; font-weight: bold; text-align:left; line-height: wrap_content; text-indent: 20px; font-size:20px; font-family:Trebuchet MS;border-bottom:5px solid white; border-top:5px solid black"><font color= #FFFFFF>Project Scope</font></div>= | |

| + | '''<big>1. Data Gathering and Scoping</big>'''<br/> | ||

| + | Our sponsor has given us various excel spreadsheets, both Inbound and Outbound, with data from 2015 to 2017, for a client selling cables and connectors. | ||

| − | + | Our project scope includes analysing the products ranking, products seasonality and products inbound and outbound volumes. Additionally, we will also look at the affinity between products to see if there are trends of any products frequently being shipped together. With the data, we will perform Exploratory Data Analysis using Python. | |

| − | '''<big> | + | '''<big>2. Tools to be Used</big>'''<br/> |

| + | We will be utilising several tools to aid us in the development of our dashboard. This includes: | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Technology !! Description | ||

| + | |- | ||

| + | | d3.js, dc.js and JavaScript || d3.js and dc.js are simple-to-use charting library written in JavaScript which allows us to add interactive charts to our web application for the dashboard. It will be used to develop our dashboard for the various visualizations mentioned above. | ||

| + | |- | ||

| + | | Python and Jupyter || Python is a programming language which we will use to create a script using Jupyter meant for cleaning the dataset when it is uploaded. It is also used to handle the missing and duplicated data. | ||

| + | |- | ||

| + | | Tableau Software || Tableau will be utilized for Exploratory Data Analysis. It will also be used to create a dashboard for our sponsor. | ||

| + | |- | ||

| + | | PostgreSQL || PostgreSQL will be used as the database for the dashboard and web service. | ||

| + | |} | ||

| − | + | '''<big>3. Data Cleaning, Wrangling and Restructuring</big>'''<br/> | |

| + | Our team aims to combine the 3 years data for Inbound products into 1 excel spreadsheet. This will be the same for the Outbound report. We will also try to combine the Inbound and Outbound reports by using their unique identifier to link the data together. Data cleaning will be done using Python to handling missing values, duplicate data and non-uniform data, after integrating the data. | ||

| − | + | However, as the company shifted their warehouse in September 2016 and this lasted for 2 weeks, we will be using another dataset which only consists of data from October 2016 to December 2017 for both Inbound and Outbound. | |

| + | |||

| + | The team will also use Python to write a script which will combine the various excel spreadsheets and extract the relevant fields before consolidating them into a single spreadsheet that will be used as an input for the analysis and the Operations Dashboard which will be done using Tableau or as a web application using JavaScript. | ||

| − | The | + | '''<big>4. Data Visualization and Reporting</big>'''<br/> |

| + | Once the data is cleaned and combined, we will extract the data and create a dashboard with various visualizations as mentioned earlier. This will be done using d3.js, dc.js and JavaScript. The interactive dashboard will allow managers to have easy access to visualizations showing the performance of the current operations as compared to past operations. For the visualisations, we are also considering to use Tableau to do up the dashboard. Both options have been communicated to our sponsor, but the final deliverable format has yet to be finalised. | ||

| − | + | On top of the visualizations, we will also do Market Basket Analysis to find out the affinity between products. Also, we will report all our findings to our sponsor and propose how the goods should be arranged in the warehouse based on their categories (A, B or C). | |

| + | <!--End of Navigation Bar--> | ||

| − | + | ===Software Used<br/>=== | |

| + | We used Python as a tool for the data cleaning, as well as loading of data into the database after certain processing. Specific python libraries used can be seen from the table below. | ||

| − | + | {| class="wikitable" | |

| + | |- | ||

| + | ! Python Library !! Purpose | ||

| + | |- | ||

| + | | pandas || pandas is an open source, BSD-licensed library providing high-performance, easy-to-use data structures and data analysis tools for the Python programming language. It helps to convert data that is being uploaded into dataframes for easier processing. | ||

| + | |- | ||

| + | | datetime || The datetime module supplies classes for manipulating dates and times in both simple and complex ways. It helped to convert certain data into the correct date and time format for creation of new variables that deals with date and time. | ||

| + | |- | ||

| + | | math || It provides access to the mathematical functions defined by the C standard. Particularly in our project, it is used to round values off. | ||

| + | |- | ||

| + | | glob || The glob module finds all the pathnames matching a specified pattern according to the rules used by the Unix shell. It is used during file reading when files are being uploaded onto the database. | ||

| + | |- | ||

| + | | re || This module provides regular expression matching operations similar to those found in Perl. It is used for file reading when files are being uploaded onto the database. | ||

| + | |- | ||

| + | | configparser || This module provides the ConfigParser class which implements a basic configuration language which provides a structure similar to what’s found in Microsoft Windows INI files. You can use this to write Python programs which can be customized by end users easily. In this case, it is used to help connect the python scripts to the postgres database by setting the configurations in the ini files. | ||

| + | |- | ||

| + | | psycopg2 || Psycopg is a PostgreSQL database adapter for the Python programming language. | ||

| + | |- | ||

| + | | os || This module provides a portable way of using operating system dependent functionality. For our project, it is used by the scripts to delete files after they are being uploaded onto the database. | ||

| + | |} | ||

| − | + | For the operational dashboard, we made use of various JavaScript libraries including d3.js, dc.js, crossfilter.js and sigma.js. It is a node.js application which is a server used to host the Angular web dashboard application and serves as the web service layer where the front-end communicates with the back-end to retrieve data from the database. Python programming language is also used for bootstrapping of data and the data cleaning process. | |

| − | |||

| − | |||

| − | |||

Latest revision as of 19:46, 11 April 2018

| HOME | ABOUT US | PROJECT OVERVIEW | DATA ANALYSIS | PROJECT MANAGEMENT | DOCUMENTATION | MAIN PAGE |

| Previous | Current |

|---|

Contents

Motivation

The company has stayed ahead of their competition by embracing technology in the early days and now, they are starting to dabble into analytics in order to help make use of the large amount of data that they have generated throughout the course of their business. Currently, these information is being stored in their proprietary Warehouse Management System (WMS) which captures transaction information whenever goods are moving in, out or within the warehouse.

The amount of information being managed by the system is massive and it is very complicated, thus managers have to spend time to use pivot tables in excel to better visualize the information being generated in order to get a better view of how the current operations is being run. This uses up valuable time which could have been used for other more important operational matters.

One important information that is very useful to a warehouse manager is an ABC analysis which is an inventory categorization technique. The purpose of this analysis is to classify the inventory into 3 categories. The most general way of categorization is:

- ‘A’ items – 20% of the items accounts for 80% of the annual consumption value of the items.

- ‘B’ items - 30% of the items accounts for 15% of the annual consumption value of the items.

- ‘C’ items - 50% of the items accounts for 5% of the annual consumption value of the items.

Not only that, in order to provide even more better insight for their customers, they would also like to be able to have a better view of the seasonality patterns of the products, as well as an affinity analysis of the products to further improve the way the products can be organized in the warehouse to increase the efficiency of the warehouse operations.

Objective and Goals

The main objectives of the project would be to do the following:

1. Create a Operations Dashboard to visualize the following information

- Inbound / Outbound Volume Time Series Chart

- The purpose of this chart is to visualize the operations performance at various time slices so as to allow operations to have a clear view of the inventory movement patterns for the various SKUs

- X-axis: Time unit (day, week, month, quarter, year)

- Y-axis: No. of units of product

- Lines: Each line would symbolize the trend for a different SKU.

- Product Ranking Chart

- The purpose of this chart is to find out which are the best-selling items and which are the least popular items.

- Treemap view of the products filtered by month / year

- Product Seasonality Chart

- The purpose of this chart is to see the seasonality factors of the different products.

- X-axis: Month of the year

- Y-axis: Number of units of product

- Lines: Each line would symbolize the trend for a different SKU.

2. Conduct Associative Rule learning so as to attempt to see if there are affinities within the products within the warehouse so that inventory can be better organized within the warehouse.

Provided Data

The data provided by our sponsor are obtained from their proprietary Warehouse Management System (WMS) which is developed in-house. The Warehouse Management System is used to keep track of the movement of the goods coming in, moving out and within the warehouse itself. The process of goods coming into the warehouse is known as the Inbound process while the process of goods moving out of the warehouse is known as the Outbound process.

For this project, our sponsor has provided us with data for one of their client, which is a company selling cables and connectors. We will be provided with 3 years worth of data from year 2015 to 2017. There are 2 Excel workbooks provided, one for the Inbound goods and the other for the Outbound goods. A more in-depth explanation of the Excel workbooks will be explained below.

Inbound Report

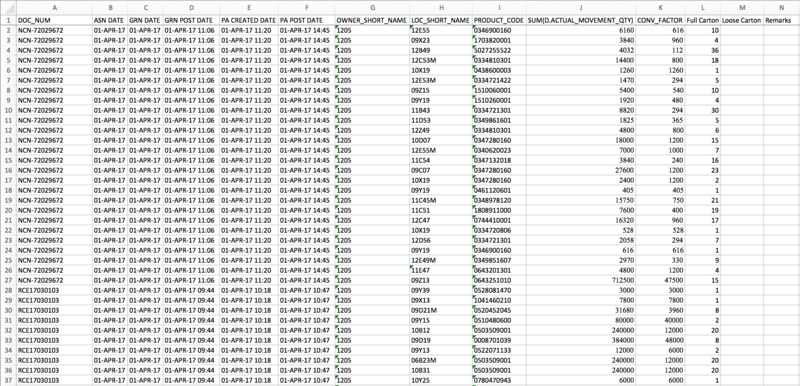

There is a total of 308,730 rows of data spanning across January 2015 to December 2017 for the Inbound orders for this client. In 2015, there were 99,586 rows of data and this increased in 2016 to 102,379. In 2017, the number of rows of data was 106,765.

Upon further clarifications with our sponsor, we realized that they have moved warehouse at the beginning of September 2016 and this lasted for around 2 weeks. Therefore, for the analysis on the products’ locations in the warehouse, we can only use the data from October 2016 to December 2017. However, for seasonality analysis, we will still use the data from January 2015 to December 2017 to find out trends in the volume of goods. From October 2016 to December 2017, there are 133,620 rows of data.

The image below shows a Sample Inbound Report Excel spreadsheet.

Metadata Dictionary

| Terminology | Description |

|---|---|

| Doc_Num | Unique identifier for an inbound order. |

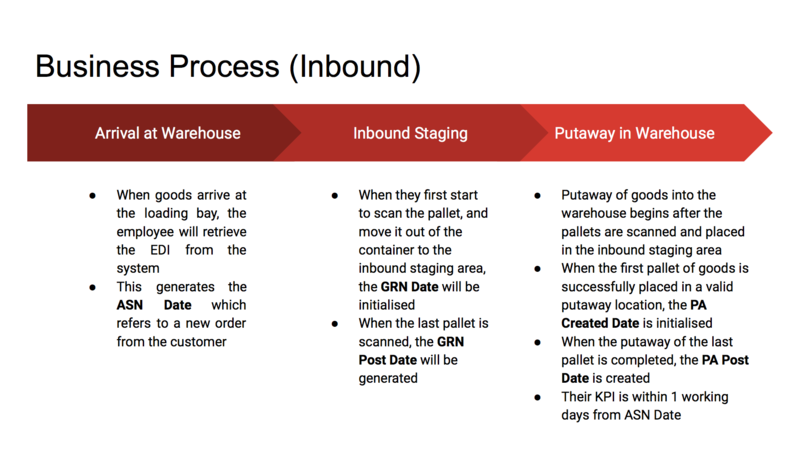

| ASN Date | Advanced Shipping Notice. ASN date represents the date when the ASN is downloaded. |

| GRN Date | Goods Received Note. The date when the GRN job is created. |

| GRN Post Date | The date when the GRN job is completed. |

| PA Created Date | The date when the putaway job is created. |

| PA Post Date | The date when the putaway job is completed. |

| Owner_Short_Name | The short name of the client. |

| Loc_Short_Name | The identifier of the location which the product is placed at. |

| Product_Code | Unique identifier of a product. |

| Sum (D.Actual_Movement_Qty) | The quantity of products received. |

| Conv_Factor | The number of products in each carton. |

| Full Carton | The number of full cartons received. |

| Loose Carton | The number of loose cartons received (if any). |

| Remarks | Remarks to inform of any loose cartons and how they are handled. |

Outbound Report

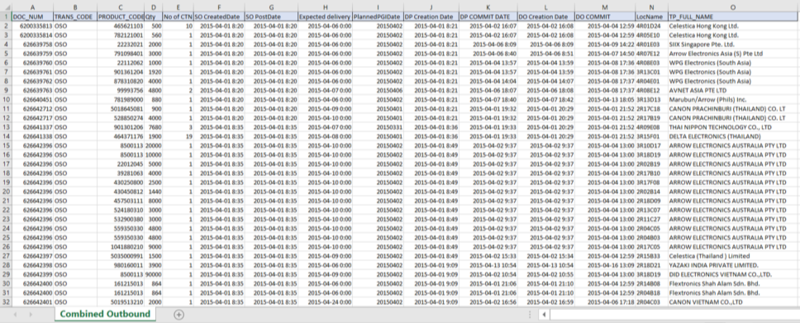

The number of Outbound orders for this client from January 2015 to December 2017 is approximately 657,578 rows of data. In 2015, there were 220,387 rows of data and this increased in 2016 to 221,498. In 2017, the number of rows of data was 215,667.

From October 2016 to December 2017, there are 270,815 rows of data.

The image below shows a Sample Outbound Report Excel spreadsheet.

Metadata Dictionary

| Terminology | Description |

|---|---|

| Doc_Num | Unique identifier for an outbound order. |

| Trans_Code | There are several trans codes:

However, we will only focus on OSO. |

| Product_Code | Unique identifier of a product. |

| Qty | The total quantity of products needed for the order. |

| No of CTN | Number of cartons for the order. |

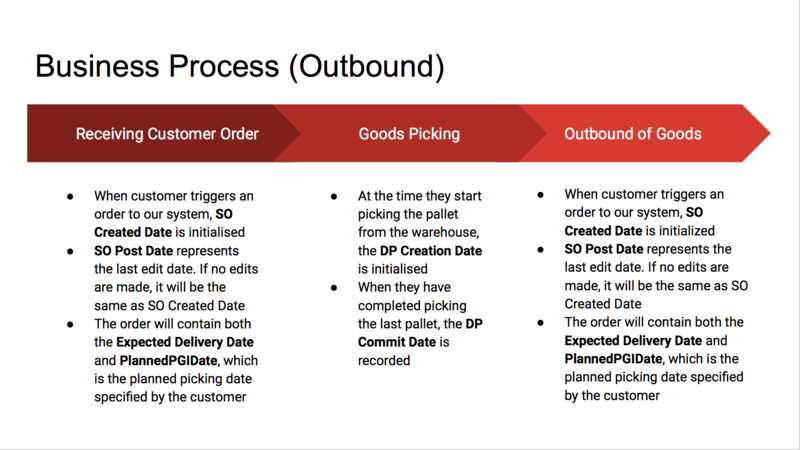

| SO Created Date | The date when the sales order is downloaded. |

| SO Post Date | It refers to the date when the SO is posted. Most SO Created Dates = SO Post Date. However, when users go into WMS to make changes, the SO Post Dates will change accordingly. |

| Expected Delivery Date | The date which the goods is expected to be shipped out. |

| PlannedPGIDate | Planned DP post date. |

| DP Creation Date | The date when the picking list is printed for the picker to pick the goods in warehouse. |

| DP Commit Date | The date when the picker has finished picking. |

| DO Created Date | The date which the delivery order is created. It indicates that we are ready for shipment and are waiting for the transporter to load our goods. |

| DO Commit | The date when the delivery order is committed. This happens after the goods has left the warehouse. |

| LocName | The name of the location in our warehouse.

|

| Ship_To / TP_Full_Name | Outbound customer name. |

Limitations

As with any other real life projects, there are limitations which we face especially with regards to the availability of data.

| S/N | Limitation | Conclusion |

|---|---|---|

| 1 | There is no way to accurate link an Outbound shipment to an Inbound shipment. We can only link the Outbound product and Inbound product but not the exact shipment where the product is being brought into the warehouse. | We will assume a First In First Out (FIFO) order of shipment out of the warehouse as that is usually how logistics company operates. This means that the first Outbound in 2015 will be linked to the first Inbound in 2015, unless there are excess goods brought over from 2014. If so, calculations will be done based on the quantity to determine the number of each products left after Outbound shipment. |

| 2 | The current data provided only consists of information from 1 company, which cables and connectors, that our sponsor company is handling. | We assume that the same analysis can be replicated for the logistic warehousing of other companies’ products which are similar in nature. |

| 3 | The data provided from WMS might not contain all the transactions that happened due to human errors such as missed barcode scanning. | With a large enough dataset, it can be assumed that the results found by our team can be representative and considered accurate. |

Important Information about the Business Process

The image below shows the business workflow for the Inbound process of inventory. It also states when each date attribute is collected throughout the entire process.

The image below shows the business workflow for the Outbound process of inventory. It also states when each date attribute is collected throughout the entire process.

Project Scope

1. Data Gathering and Scoping

Our sponsor has given us various excel spreadsheets, both Inbound and Outbound, with data from 2015 to 2017, for a client selling cables and connectors.

Our project scope includes analysing the products ranking, products seasonality and products inbound and outbound volumes. Additionally, we will also look at the affinity between products to see if there are trends of any products frequently being shipped together. With the data, we will perform Exploratory Data Analysis using Python.

2. Tools to be Used

We will be utilising several tools to aid us in the development of our dashboard. This includes:

| Technology | Description |

|---|---|

| d3.js, dc.js and JavaScript | d3.js and dc.js are simple-to-use charting library written in JavaScript which allows us to add interactive charts to our web application for the dashboard. It will be used to develop our dashboard for the various visualizations mentioned above. |

| Python and Jupyter | Python is a programming language which we will use to create a script using Jupyter meant for cleaning the dataset when it is uploaded. It is also used to handle the missing and duplicated data. |

| Tableau Software | Tableau will be utilized for Exploratory Data Analysis. It will also be used to create a dashboard for our sponsor. |

| PostgreSQL | PostgreSQL will be used as the database for the dashboard and web service. |

3. Data Cleaning, Wrangling and Restructuring

Our team aims to combine the 3 years data for Inbound products into 1 excel spreadsheet. This will be the same for the Outbound report. We will also try to combine the Inbound and Outbound reports by using their unique identifier to link the data together. Data cleaning will be done using Python to handling missing values, duplicate data and non-uniform data, after integrating the data.

However, as the company shifted their warehouse in September 2016 and this lasted for 2 weeks, we will be using another dataset which only consists of data from October 2016 to December 2017 for both Inbound and Outbound.

The team will also use Python to write a script which will combine the various excel spreadsheets and extract the relevant fields before consolidating them into a single spreadsheet that will be used as an input for the analysis and the Operations Dashboard which will be done using Tableau or as a web application using JavaScript.

4. Data Visualization and Reporting

Once the data is cleaned and combined, we will extract the data and create a dashboard with various visualizations as mentioned earlier. This will be done using d3.js, dc.js and JavaScript. The interactive dashboard will allow managers to have easy access to visualizations showing the performance of the current operations as compared to past operations. For the visualisations, we are also considering to use Tableau to do up the dashboard. Both options have been communicated to our sponsor, but the final deliverable format has yet to be finalised.

On top of the visualizations, we will also do Market Basket Analysis to find out the affinity between products. Also, we will report all our findings to our sponsor and propose how the goods should be arranged in the warehouse based on their categories (A, B or C).

Software Used

We used Python as a tool for the data cleaning, as well as loading of data into the database after certain processing. Specific python libraries used can be seen from the table below.

| Python Library | Purpose |

|---|---|

| pandas | pandas is an open source, BSD-licensed library providing high-performance, easy-to-use data structures and data analysis tools for the Python programming language. It helps to convert data that is being uploaded into dataframes for easier processing. |

| datetime | The datetime module supplies classes for manipulating dates and times in both simple and complex ways. It helped to convert certain data into the correct date and time format for creation of new variables that deals with date and time. |

| math | It provides access to the mathematical functions defined by the C standard. Particularly in our project, it is used to round values off. |

| glob | The glob module finds all the pathnames matching a specified pattern according to the rules used by the Unix shell. It is used during file reading when files are being uploaded onto the database. |

| re | This module provides regular expression matching operations similar to those found in Perl. It is used for file reading when files are being uploaded onto the database. |

| configparser | This module provides the ConfigParser class which implements a basic configuration language which provides a structure similar to what’s found in Microsoft Windows INI files. You can use this to write Python programs which can be customized by end users easily. In this case, it is used to help connect the python scripts to the postgres database by setting the configurations in the ini files. |

| psycopg2 | Psycopg is a PostgreSQL database adapter for the Python programming language. |

| os | This module provides a portable way of using operating system dependent functionality. For our project, it is used by the scripts to delete files after they are being uploaded onto the database. |

For the operational dashboard, we made use of various JavaScript libraries including d3.js, dc.js, crossfilter.js and sigma.js. It is a node.js application which is a server used to host the Angular web dashboard application and serves as the web service layer where the front-end communicates with the back-end to retrieve data from the database. Python programming language is also used for bootstrapping of data and the data cleaning process.