Difference between revisions of "Social Media & Public Opinion - Final"

| Line 40: | Line 40: | ||

<!--Content Start--> | <!--Content Start--> | ||

| + | |||

| + | |||

| + | <div align="left"> | ||

| + | ==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Abstract</font></div>== | ||

| + | <div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | ||

| + | Sentiment analysis on social media provides organisations an opportunity to monitor their reputation and brands by extracting and analysing online comments posted by Internet users about them or the market in general. There are various existing methods to carry out sentiment analysis on social media data, with varying results. In this paper, we show how to use Twitter as a corpus for sentiment analysis and investigate the effectiveness of sentiment text analysis on tweets. We adopt a supervised approach, by labelling the training data and using it to train a model for predicting the responses on the unlabelled testing data. | ||

| + | </div> | ||

| + | |||

<div align="left"> | <div align="left"> | ||

| Line 45: | Line 53: | ||

<div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | <div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | ||

| − | Having consulted with our professor, we have decided to shift our focus away from developing a dashboard and delve deeper into the subject of text analysis of social media data, specifically Twitter data. Social media has changed the way consumers give feedback on the products they consume. Much social media data can be mined, analysed and turned into value propositions that change the way companies brand themselves. | + | <div style="text-align: justify;">Having consulted with our professor, we have decided to shift our focus away from developing a dashboard and delve deeper into the subject of text analysis of social media data, specifically Twitter data. Social media has changed the way consumers give feedback on the products they consume. Much social media data can be mined, analysed and turned into value propositions that change the way companies brand themselves. |

Although anyone can easily obtain such data, certain challenges can hamper the effectiveness of such analysis. | Although anyone can easily obtain such data, certain challenges can hamper the effectiveness of such analysis. | ||

| Line 52: | Line 60: | ||

# What are some of the unique features of social media that we need to take note of when doing text analysis on them? | # What are some of the unique features of social media that we need to take note of when doing text analysis on them? | ||

| − | Through this project, we are going to explore what some of these challenges are and ways in which we can overcome them. | + | Through this project, we are going to explore what some of these challenges are and ways in which we can overcome them.</div> |

</div> | </div> | ||

<div align="left"> | <div align="left"> | ||

| + | ==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Related Work</font></div>== | ||

| + | <div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px;"> | ||

| + | |||

| + | <div style="text-align: justify;">With the proliferation of blogs and social networks, opinion mining and sentiment analysis have become a field of interest for many researchers. Sentiment analysis of tweets is considered more challenging than that of conventional text such as review documents because of the nature of tweets: short length, frequent use of informal and irregular words, and the rapid evolution of language on Twitter. | ||

| + | |||

| + | A real-time text-based hedonometer was built to measure happiness of over 63 million Twitter users over 33 months, as recorded in the paper Temporal Patterns of Happiness and Information in a Global Social Network: Hedonometrics and Twitter (Dodds et al., 2011)<ref>Dodds PS, Harris KD, Kloumann IM, Bliss CA, Danforth CM (2011) Temporal Patterns of Happiness and Information in a Global Social Network: Hedonometrics and Twitter. PLoS ONE 6(12): e26752. doi:10.1371/journal.pone.0026752</ref>. It shows how a highly robust and tunable metric can be constructed with the word list chosen solely by frequency of usage. | ||

| + | |||

| + | In the paper Twitter as a Corpus for Sentiment Analysis and Opinion Mining (Pak & Paroubek, 2010)<ref>Pak, A., & Paroubek, P. (2010). Twitter as a Corpus for Sentiment Analysis and Opinion Mining.</ref>, the authors show how to use Twitter as a corpus for sentiment analysis and opinion mining, perform linguistic analysis of the collected corpus and build a sentiment classifier that is able to determine positive, negative and neutral sentiments for a document. The classifier is a multinomial Naive Bayes classifier that uses N-grams and part-of-speech tags as features; it yielded better results than Support Vector Machine (SVM) and Conditional Random Field (CRF) classifiers. We will be using the Naive Bayes classifier too. | ||

| + | |||

| + | In the paper Twitter Sentiment Analysis: The Good the Bad and the OMG! (Kouloumpis et al., 2011)<ref>Kouloumpis, E., Wilson, T., & Moore, J. (2011). Twitter Sentiment Analysis: The Good the Bad and the OMG!. In International AAAI Conference on Weblogs and Social Media. Retrieved from https://www.aaai.org/ocs/index.php/ICWSM/ICWSM11/paper/view/2857/3251</ref>, the authors investigate the usefulness of linguistic features for detecting the sentiment of tweets. The results show that part-of-speech features may not be useful for sentiment analysis in the microblogging domain. | ||

| + | In the paper Exploiting Emoticons in Sentiment Analysis (Hogenboom et al., 2013)<ref>Hogenboom, A. and Bal, D. and Frasincar, F. and Bal, M. and de Jong, F.M.G. and Kaymak, U. (2013) Exploiting emoticons in sentiment analysis. In: Proceedings of the 28th Annual ACM Symposium on Applied Computing, SAC 2013, 18-22 Mar 2013, Lisbon, Portugal. pp. 703-710. ACM. ISBN 978-1-4503-1656-9</ref>, the authors created an emoticon sentiment lexicon in order to improve a state-of-the-art lexicon-based sentiment classification method. They demonstrated that people typically use emoticons in natural language text in order to express, stress, or disambiguate their sentiment in particular text segments, thus rendering them potentially better local proxies for people’s intended overall sentiment than textual cues. We will analyse emoticons too, in order to improve the accuracy of our model. | ||

| + | |||

| + | In the paper Tokenization and Filtering Process in RapidMiner (Verma et al., 2014)<ref>Verma, T., & Renu, D. G. (2014). Tokenization and Filtering Process in RapidMiner. International Journal of Applied Information Systems (IJAIS)–ISSN, 2249-0868.</ref>, the authors show how text mining is implemented in RapidMiner through tokenisation, stopword elimination, stemming and filtering. We will be using RapidMiner too in our methodology.</div> | ||

| + | </div> | ||

| + | |||

| + | |||

| + | <div align="left"> | ||

==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Methodology: Text analytics using RapidMiner</font></div>== | ==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Methodology: Text analytics using RapidMiner</font></div>== | ||

| − | <div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | + | <div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px;"> |

| + | |||

'''''Download RapidMiner [https://rapidminer.com/signup/ here]''''' | '''''Download RapidMiner [https://rapidminer.com/signup/ here]''''' | ||

| + | |||

{| class="wikitable" style="margin-left: 10px;" | {| class="wikitable" style="margin-left: 10px;" | ||

|-! style="background: #0084b4; color: white; text-align: center;" colspan= "2" | |-! style="background: #0084b4; color: white; text-align: center;" colspan= "2" | ||

| Line 76: | Line 103: | ||

| [[File:Tweets Jso.JPG|center|400px]]|| | | [[File:Tweets Jso.JPG|center|400px]]|| | ||

===Data Preparation=== | ===Data Preparation=== | ||

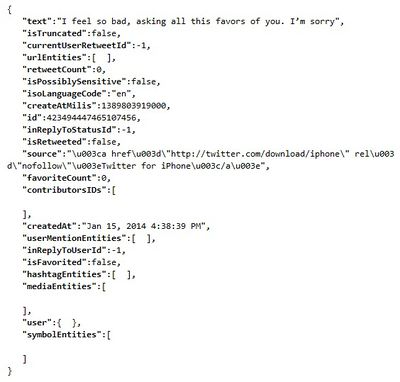

| − | In RapidMiner, there are a few ways to read a file or data from a database. In our case, we read tweets provided by the LARC team. The tweets were given in JSON format. RapidMiner can read JSON strings, but it is unable to read nested arrays within a string. Because of this restriction, we need to extract the text from each JSON string before we can use RapidMiner for text analysis. We did this by converting each JSON string into a JavaScript object, extracting only the Id and text of each tweet, and writing them to a comma-separated values (.csv) file to be processed later in RapidMiner. | + | <div style="text-align: justify;">In RapidMiner, there are a few ways to read a file or data from a database. In our case, we read tweets provided by the LARC team. The tweets were given in JSON format. RapidMiner can read JSON strings, but it is unable to read nested arrays within a string. Because of this restriction, we need to extract the text from each JSON string before we can use RapidMiner for text analysis. We did this by converting each JSON string into a JavaScript object, extracting only the Id and text of each tweet, and writing them to a comma-separated values (.csv) file to be processed later in RapidMiner.</div> |

|- | |- | ||

|} | |} | ||
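The extraction step described above can be sketched as follows. This is only an illustration in Python, not the JavaScript we actually used; it assumes one JSON object per line and assumes the fields are named <code>id</code> and <code>text</code>. The function name <code>tweets_to_csv</code> and the sample tweets are hypothetical.

```python
import csv
import io
import json


def tweets_to_csv(json_lines, out_file):
    """Write the id and text of each tweet (one JSON object per line) as CSV rows."""
    writer = csv.writer(out_file)
    writer.writerow(["Id", "Text"])  # header row, later read as column names
    count = 0
    for line in json_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        tweet = json.loads(line)
        # Keep only the two flat fields; nested arrays are simply ignored.
        writer.writerow([tweet["id"], tweet["text"]])
        count += 1
    return count


# Hypothetical sample input: two tweets, one per line.
sample = [
    '{"id": 1, "text": "Loving the new phone :)", "entities": {"hashtags": []}}',
    '{"id": 2, "text": "Worst service, ever", "entities": {"hashtags": []}}',
]
buffer = io.StringIO()
written = tweets_to_csv(sample, buffer)
```

The <code>csv</code> module quotes any tweet text that contains a comma, so the resulting file can still be split on "," when it is read back.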

===Defining a Standard=== | ===Defining a Standard=== | ||

| − | Before we can create a model for classifying tweets based on their polarity, we have to first define a standard for the classifier to learn from. In order to attain such a standard, we manually tagged a random sample of 1000 tweets with 3 categories: Positive (P), Negative (N) and Neutral (X) | + | <div style="text-align: justify;">Before we can create a model for classifying tweets based on their polarity, we have to first define a standard for the classifier to learn from. In order to attain such a standard, we manually tagged a random sample of 1000 tweets with 3 categories: Positive (P), Negative (N) and Neutral (X). One of the challenges is understanding irony, as even humans sometimes have difficulty recognising sarcasm. A University of Pittsburgh study reports that humans agree on whether a sentence has the correct sentiment only 80% of the time.<ref>Wiebe, J., Wilson, T., & Cardie, C. (2005). Annotating Expressions of Opinions and Emotions in Language. Language Resources and Evaluation, 165-210. Retrieved from http://people.cs.pitt.edu/~wiebe/pubs/papers/lre05.pdf</ref> |

| − | With the tweets and their respective classification, we were ready to create a model for machine learning of tweets' sentiments. | + | With the tweets and their respective classification, we were ready to create a model for machine learning of tweets' sentiments.</div> |

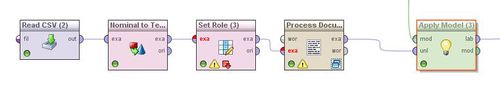

===Creating a Model=== | ===Creating a Model=== | ||

| Line 93: | Line 120: | ||

|[[File:ReadCsv.JPG|center|100px]]|| | |[[File:ReadCsv.JPG|center|100px]]|| | ||

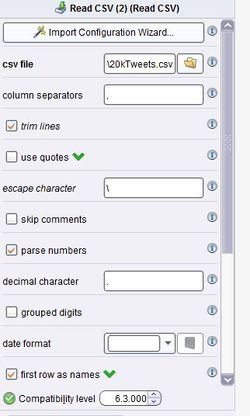

| − | + | <div style="text-align: justify;">We first used the "read CSV" operator to read the text from the CSV file prepared earlier. This can be configured via the "Import Configuration Wizard" or set manually.</div> | |

|- | |- | ||

|[[File:ReadCsv configuration.JPG|center|250px]]|| | |[[File:ReadCsv configuration.JPG|center|250px]]|| | ||

| − | + | Each column is separated by a ",".<br> | |

| − | + | Trim the lines to remove any white space before and after the tweet.<br> | |

| − | + | Check the "first row as names" option if the file has a header row. | |

|- | |- | ||

|[[File:Normtotext.JPG|center|100px]]|| | |[[File:Normtotext.JPG|center|100px]]|| | ||

| − | + | To check the results at any point of the process, right-click on any operator and add a breakpoint. | |

| − | + | To process the document, we convert the data from nominal to text. | |

|- | |- | ||

|[[File:DataToDoc.JPG|center|100px]]|| | |[[File:DataToDoc.JPG|center|100px]]|| | ||

| − | + | We convert the text data into documents. In our case, each tweet is converted into a document. | |

|- | |- | ||

|[[File:ProcessDocument.JPG|center|100px]]|| | |[[File:ProcessDocument.JPG|center|100px]]|| | ||

| − | + | <div style="text-align: justify;">The "process document" operator is a multi-step process that breaks each document down into single words. The frequency of each word and the number of documents in which it occurs are calculated and used when formulating the model.<br> | |

| − | + | To begin the process, double-click on the operator.</div> | |

|- | |- | ||

|[[File:Tokenize.JPG|center|500px]]|| | |[[File:Tokenize.JPG|center|500px]]|| | ||

| Line 115: | Line 142: | ||

1. '''Tokenizing the tweet by word''' | 1. '''Tokenizing the tweet by word''' | ||

| − | Tokenization is the process of breaking a stream of text up into words or other meaningful elements called tokens to explore words in a sentence. Punctuation marks as well as other characters like brackets, hyphens, etc. are removed. | + | <div style="text-align: justify;">Tokenization is the process of breaking a stream of text up into words or other meaningful elements called tokens to explore words in a sentence. Punctuation marks as well as other characters like brackets, hyphens, etc. are removed.</div> |

2. '''Converting words to lowercase''' | 2. '''Converting words to lowercase''' | ||

| Line 131: | Line 158: | ||

5. '''Stemming using Porter2’s stemmer''' | 5. '''Stemming using Porter2’s stemmer''' | ||

| − | Stemming is a technique for the reduction of words into their stems, base or root. When words are stemmed, we are keeping the core of the characters which convey effectively the same meaning. | + | <div style="text-align: justify;">Stemming is a technique for the reduction of words into their stems, base or root. When words are stemmed, we are keeping the core of the characters which convey effectively the same meaning. |

<u>Porter Stemmer vs Snowball (Porter2)</u><ref>Tyranus, S. (2012, June 26). What are the major differences and benefits of Porter and Lancaster Stemming algorithms? Retrieved from http://stackoverflow.com/questions/10554052/what-are-the-major-differences-and-benefits-of-porter-and-lancaster-stemming-alg</ref> | <u>Porter Stemmer vs Snowball (Porter2)</u><ref>Tyranus, S. (2012, June 26). What are the major differences and benefits of Porter and Lancaster Stemming algorithms? Retrieved from http://stackoverflow.com/questions/10554052/what-are-the-major-differences-and-benefits-of-porter-and-lancaster-stemming-alg</ref> | ||

| Line 137: | Line 164: | ||

''Porter'': Most commonly used stemmer without a doubt, also one of the gentlest stemmers. It is one of the most computationally intensive of the algorithms (granted, not by a very significant margin). It is also the oldest stemming algorithm by a large margin. | ''Porter'': Most commonly used stemmer without a doubt, also one of the gentlest stemmers. It is one of the most computationally intensive of the algorithms (granted, not by a very significant margin). It is also the oldest stemming algorithm by a large margin. | ||

| − | ''Snowball (Porter2)'': Nearly universally regarded as an improvement over Porter, and for good reason. Porter himself in fact admits that Snowball is better than his original algorithm. Has a slightly faster computation time than Porter, with a fairly large community around it. | + | ''Snowball (Porter2)'': Nearly universally regarded as an improvement over Porter, and for good reason. Porter himself in fact admits that Snowball is better than his original algorithm. Has a slightly faster computation time than Porter, with a fairly large community around it.</div> |

We use the Porter2 stemmer. | We use the Porter2 stemmer. | ||

| + | |- | ||

| + | |[[File:SMPO-Generate TFIDF.PNG|center|100px]]|| | ||

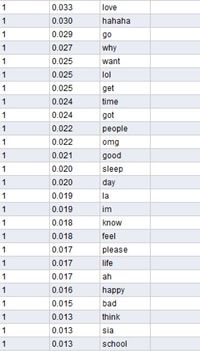

| + | <div style="text-align: justify;">We used TF-IDF (term frequency × inverse document frequency) to weight the importance of each word in each tweet. TF-IDF takes two things into account: if a term appears in many documents, each individual appearance is probably not very important. | ||

| + | Conversely, if a term appears in only a few documents, it is likely to be important in the documents where it does appear.</div> | ||

|- | |- | ||

|[[File:Setrole.JPG|center|100px]]|| | |[[File:Setrole.JPG|center|100px]]|| | ||

| − | + | <div style="text-align: justify;">Return to the main process. | |

| − | + | We need to add the "Set Role" operator to indicate the label for each tweet. Our "Classification" column holds that label.</div> | |

|- | |- | ||

|[[File:Validation.JPG|center|100px]]|| | |[[File:Validation.JPG|center|100px]]|| | ||

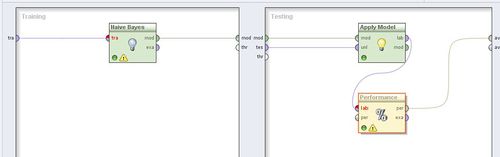

| − | + | <div style="text-align: justify;">The "X-validation" operator creates a model based on our manual classification which can later be used on another set of data. | |

| − | + | To begin, double click on the operator.</div> | |

|- | |- | ||

|[[File:ValidationX.JPG|center|500px]]|| | |[[File:ValidationX.JPG|center|500px]]|| | ||

| − | + | <div style="text-align: justify;">We carry out an X-validation using a Naive Bayes classifier, a simple probabilistic classifier based on applying Bayes' theorem (from Bayesian statistics) with strong (naive) independence assumptions. In simple terms, a Naive Bayes classifier assumes that the presence (or absence) of a particular feature of a class (i.e. attribute) is unrelated to the presence (or absence) of any other feature.</div> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

|- | |- | ||

|[[File:5000Data.JPG|center|500px]]|| | |[[File:5000Data.JPG|center|500px]]|| | ||

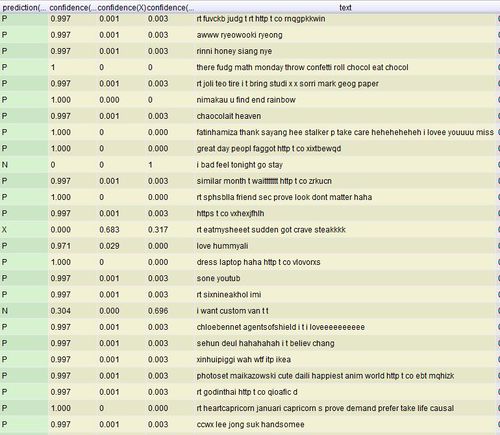

| − | + | <div style="text-align: justify;">To apply this model to a new set of data, we repeat the above steps of reading a CSV file, converting the input to text, setting the role and processing each document before applying the model to the new set of tweets.</div> | |

|- | |- | ||

|[[File:Prediction.JPG|center|500px]]|| | |[[File:Prediction.JPG|center|500px]]|| | ||

| − | + | <div style="text-align: justify;">From the performance output, we achieved 44.6% accuracy when the model was cross-validated with the original 1000 manually tagged tweets. To check this accuracy, we randomly extracted 100 tweets from the fresh set of 5000 tweets, manually tagged them and compared our tags with the values predicted by the model. The model did in fact have an accuracy of '''46%''', close to the 44.2% accuracy reported by the X-validation module.</div> | |

|} | |} | ||
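As a rough illustration of the TF-IDF weighting described in the table above, the sketch below computes the textbook form, term frequency multiplied by log(N / document frequency). This is an assumption for illustration only: RapidMiner's own TF-IDF output may be normalised differently, and the function names and sample tweets are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def tf_idf(documents):
    """Return one {term: weight} dict per document, weight = tf * log(N / df)."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))  # count each term once per document
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical sample tweets.
tweets = ["the phone is great", "the service is bad", "the phone is bad"]
weights = tf_idf(tweets)
```

In this sample, "the" appears in every tweet and so receives a weight of zero, while "great" appears in only one tweet and receives the highest weight in that tweet.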

| + | </div> | ||

| + | |||

| − | ===Improving | + | ==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Improving Accuracy</font></div>== |

| + | |||

| + | <div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | ||

| − | One of the ways to improve the accuracy of the model is to remove words that do not appear frequently within the given set of documents. By removing these words, we can ensure that the resulting words that are classified are mentioned a significant number of times. However, the challenge is to determine what the number of occurrences required is before a word can be taken into account for classification. It is important to note that the higher the threshold, the smaller the result and word list would be. Practical problems exist when modeling text statistically, since we require a reasonably sized corpus in order to overcome sparseness problems, but at the same time we face the challenge of irrelevant words exerting their weights on an independent set of test data when applying the model | + | ===Pruning=== |

| + | <div style="text-align: justify;">One of the ways to improve the accuracy of the model is to remove words that do not appear frequently within the given set of documents. By removing these words, we can ensure that the remaining words used for classification are mentioned a significant number of times. However, the challenge is to determine how many occurrences are required before a word is taken into account for classification. It is important to note that the higher the threshold, the smaller the resulting word list will be. Practical problems exist when modeling text statistically, since we require a reasonably sized corpus in order to overcome sparseness problems, but at the same time we face the challenge of irrelevant words exerting their weights on an independent set of test data when applying the model. | ||

We experimented with multiple values to determine the most appropriate number of words to be pruned off, bearing in mind that we need a sizeable number of words with a high enough accuracy yield. | We experimented with multiple values to determine the most appropriate number of words to be pruned off, bearing in mind that we need a sizeable number of words with a high enough accuracy yield. | ||

| − | *Percentage pruned refers to the minimum share of documents in which a word must occur to stay in the word list, e.g. for 1% pruned out of the set of 1000 documents, words that appeared in fewer than 10 documents are removed from the word list. | + | *Percentage pruned refers to the minimum share of documents in which a word must occur to stay in the word list, e.g. for 1% pruned out of the set of 1000 documents, words that appeared in fewer than 10 documents are removed from the word list.</div> |
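The pruning rule above can be sketched as follows. This is a minimal illustration, not RapidMiner's implementation; the function names and the sample documents are hypothetical.

```python
import math
from collections import Counter


def min_document_count(n_docs, percent):
    """Smallest number of documents a word must occur in to survive pruning."""
    return math.ceil(n_docs * percent / 100)


def prune_word_list(tokenized_docs, percent):
    """Keep the words that occur in at least `percent` % of the documents."""
    threshold = min_document_count(len(tokenized_docs), percent)
    doc_freq = Counter()
    for tokens in tokenized_docs:
        doc_freq.update(set(tokens))  # count each word once per document
    return sorted(word for word, df in doc_freq.items() if df >= threshold)


# Hypothetical sample: four already-tokenized tweets, pruned at 50%.
docs = [["good", "phone"], ["good", "day"], ["bad", "phone"], ["good"]]
kept = prune_word_list(docs, 50)
```

With 1000 documents, <code>min_document_count(1000, 1)</code> gives 10 and <code>min_document_count(1000, 0.5)</code> gives 5, matching the thresholds used in this section.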

| Line 206: | Line 221: | ||

|} | |} | ||

| − | From the results, we could infer that a large number of words (3680) appear in fewer than 5 documents, as the size of the word list falls from 3833 to 153 when we set the percentage pruned at 0.5%. | + | <div style="text-align: justify;">From the results, we could infer that a large number of words (3680) appear in fewer than 5 documents, as the size of the word list falls from 3833 to 153 when we set the percentage pruned at 0.5%.</div> |

| + | |||

| − | + | '''''Results''''' | |

| − | Click on the image to enlarge | + | |

| + | (Click on the image to enlarge) | ||

<gallery> | <gallery> | ||

File:Manual_tag_1000_Performance_pruning_no.JPG|<center>''0% pruned''</center> | File:Manual_tag_1000_Performance_pruning_no.JPG|<center>''0% pruned''</center> | ||

| Line 217: | Line 234: | ||

File:Manual_tag_1000_Performance_pruning(5%).JPG|<center>''5% pruned''</center> | File:Manual_tag_1000_Performance_pruning(5%).JPG|<center>''5% pruned''</center> | ||

</gallery> | </gallery> | ||

| + | |||

| + | |||

| + | ===Using Different Classifiers=== | ||

| + | |||

| + | ====Support vector machine==== | ||

| + | <div style="text-align: justify;">A support vector machine constructs a hyperplane or set of hyperplanes in a high- or infinite-dimensional space, which can be used for classification, regression, or other tasks. Intuitively, a good separation is achieved by the hyperplane that has the largest distance to the nearest training data points of any class (the so-called functional margin), since in general the larger the margin, the lower the generalization error of the classifier. Whereas the original problem may be stated in a finite-dimensional space, it often happens that the sets to discriminate are not linearly separable in that space. For this reason, it was proposed that the original finite-dimensional space be mapped into a much higher-dimensional space, presumably making the separation easier in that space.</div> | ||

| + | |||

| + | ====K-Nearest Neighbour==== | ||

| + | <div style="text-align: justify;">The k-Nearest Neighbour algorithm is based on learning by analogy, that is, by comparing a given test example with training examples that are similar to it. The training examples are described by the words that are contained within the document. Each example represents a point in an n-dimensional space, where n is the size of the word list. In this way, all of the training examples are stored in an n-dimensional pattern space. When given a new document with its features, a k-nearest neighbour algorithm searches the pattern space for the k training examples that are closest to the unknown example. These k training examples are the k "nearest neighbours" of the unknown example. "Closeness" is defined in terms of a distance metric, such as the Euclidean distance.</div> | ||
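The search described above can be sketched in a few lines. This is a toy illustration under our own assumptions (word-count vectors over a two-word list, majority vote, Euclidean distance), not the RapidMiner operator; all names and data are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_vectors, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training vectors."""
    neighbours = sorted(
        zip(train_vectors, train_labels),
        key=lambda pair: euclidean(pair[0], query),
    )[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical training data: counts of the words ("good", "bad") per tweet.
vectors = [(2, 0), (1, 0), (0, 2), (0, 1), (1, 1)]
labels = ["P", "P", "N", "N", "X"]
prediction = knn_predict(vectors, labels, (2, 0), k=3)
```

For the query <code>(2, 0)</code> the three nearest examples are labelled P, P and X, so the vote returns P.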

| + | |||

| + | ====Naive Bayes==== | ||

| + | <div style="text-align: justify;">A Naive Bayes classifier is a simple probabilistic classifier based on applying Bayes' theorem (from Bayesian statistics) with strong (naive) independence assumptions. A more descriptive term for the underlying probability model would be 'independent feature model'. In simple terms, a Naive Bayes classifier assumes that the presence (or absence) of a particular feature of a class (i.e. attribute) is unrelated to the presence (or absence) of any other feature. For example, a tweet or document is described by the words that are contained within it, and these words are treated as independent of one another. Even if these features depend on each other or upon the existence of the other features, a Naive Bayes classifier considers all of these properties to independently contribute to the probability that the tweet carries a given sentiment. | ||

| + | The advantage of the Naive Bayes classifier is that it only requires a small amount of training data to estimate the means and variances of the variables necessary for classification. Because independent variables are assumed, only the variances of the variables for each label need to be determined and not the entire covariance matrix.</div> | ||
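The independence assumption can be made concrete with a small sketch. It uses the multinomial variant on word counts with add-one smoothing, which is our own assumption for illustration (the description above mentions means and variances, which belong to the Gaussian variant); the function names and training tweets are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(tokenized_docs, labels):
    """Collect the per-label document counts and word counts used for prediction."""
    label_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for tokens, label in zip(tokenized_docs, labels):
        word_counts[label].update(tokens)
        vocabulary.update(tokens)
    return label_counts, word_counts, vocabulary


def predict(model, tokens):
    """Return the label with the highest log-posterior under the naive assumption."""
    label_counts, word_counts, vocabulary = model
    n_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / n_docs)  # log prior of the label
        total = sum(word_counts[label].values())
        for token in tokens:
            if token not in vocabulary:
                continue  # words never seen in training carry no evidence
            # Each word contributes independently; add-one smoothing avoids log(0).
            score += math.log(
                (word_counts[label][token] + 1) / (total + len(vocabulary))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical training tweets, already tokenized.
train_docs = [["love", "phone"], ["great", "phone"],
              ["hate", "service"], ["bad", "service"]]
train_labels = ["P", "P", "N", "N"]
model = train_naive_bayes(train_docs, train_labels)
result = predict(model, ["great", "phone"])
```

Because each word adds its own term to the score, the classifier never needs to model how words co-occur, which is why so little training data is required.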

</div> | </div> | ||

| + | |||

==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Deriving insights from emoticons</font></div>== | ==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Deriving insights from emoticons</font></div>== | ||

| Line 223: | Line 254: | ||

<div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | <div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | ||

| − | An emotion icon, better known as an emoticon, is a metacommunicative pictorial representation of a facial expression that, in the absence of body language and prosody, serves to draw a receiver's attention to the tenor or temper of a sender's nominal verbal communication, changing and improving its interpretation. It expresses a person's feelings or mood, usually by means of punctuation marks (though it can include numbers and letters); as emoticons have become more popular, some devices have provided stylized pictures that do not use punctuation. | + | <div style="text-align: justify;">An emotion icon, better known as an emoticon, is a metacommunicative pictorial representation of a facial expression that, in the absence of body language and prosody, serves to draw a receiver's attention to the tenor or temper of a sender's nominal verbal communication, changing and improving its interpretation. It expresses a person's feelings or mood, usually by means of punctuation marks (though it can include numbers and letters); as emoticons have become more popular, some devices have provided stylized pictures that do not use punctuation.</div> |

| − | + | '''Experiment''' | |

| − | The data that we had was in plain text. To view the emoticons, we needed a "translator" to convert them. This can be done in any browser with a plugin that converts emoticons. We carried out the following steps: | + | <div style="text-align: justify;">The data that we had was in plain text. To view the emoticons, we needed a "translator" to convert them. This can be done in any browser with a plugin that converts emoticons. We carried out the following steps: |

# Print the entire list of tweets that we have | # Print the entire list of tweets that we have | ||

| Line 236: | Line 267: | ||

# Calculate the percentage of matches between the 2 tagged values. | # Calculate the percentage of matches between the 2 tagged values. | ||

| − | We carried out this experiment on 100 random tweets with emoticons and compared the two sets of sentiment tags. We achieved an 82% match when using the emoticons to determine the sentiments of the tweets. | + | We carried out this experiment on 100 random tweets with emoticons and compared the two sets of sentiment tags. We achieved an 82% match when using the emoticons to determine the sentiments of the tweets.</div> |
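The final step of the experiment, calculating the percentage of matches between the two tagged values, can be sketched as follows. The function name and the sample tags are hypothetical; the tags use the same P/N/X scheme as our manual classification.

```python
def match_percentage(manual_tags, emoticon_tags):
    """Percentage of tweets whose manual tag equals the emoticon-based tag."""
    if len(manual_tags) != len(emoticon_tags):
        raise ValueError("both tag lists must cover the same tweets")
    if not manual_tags:
        raise ValueError("at least one tagged tweet is required")
    matches = sum(m == e for m, e in zip(manual_tags, emoticon_tags))
    return 100.0 * matches / len(manual_tags)


# Hypothetical sample of five tweets tagged both ways.
manual = ["P", "N", "P", "X", "N"]
by_emoticon = ["P", "N", "N", "X", "N"]
agreement = match_percentage(manual, by_emoticon)
```

In this sample four of the five tags agree, so <code>agreement</code> is 80.0.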

</div> | </div> | ||

| + | |||

==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Deriving word associations from tweets</font></div>== | ==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Deriving word associations from tweets</font></div>== | ||

<div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | <div style="border-left: #EAEAEA solid 12px; padding: 0px 30px 0px 18px; "> | ||

| − | Modeling word co-occurrence is important for many natural language applications, such as topic segmentation (Ferret, 2002), query expansion (Vechtomova et al., 2003), machine translation (Tanaka, 2002), language modeling (Dagan et al., 1999; Yuret, 1998), and term weighting (Hisamitsu and Niwa, 2002). We want to know if certain words within a set of tweets occur together more often than expected by chance | + | <div style="text-align: justify;">Modeling word co-occurrence is important for many natural language applications, such as topic segmentation (Ferret, 2002), query expansion (Vechtomova et al., 2003), machine translation (Tanaka, 2002), language modeling (Dagan et al., 1999; Yuret, 1998), and term weighting (Hisamitsu and Niwa, 2002). We want to know if certain words within a set of tweets occur together more often than expected by chance. |

| − | |||

| − | |||

| − | + | We highlight the process of getting word associations below.</div> | |

{| class="wikitable" style="margin-left: 10px;" | {| class="wikitable" style="margin-left: 10px;" | ||

| Line 263: | Line 293: | ||

# Out of the 20000 tweets, we were only able to draw 121 sets of word associations, of which 25 contain 2 words, 12 contain 3 words and 2 contain 1 word | # Out of the 20000 tweets, we were only able to draw 121 sets of word associations, of which 25 contain 2 words, 12 contain 3 words and 2 contain 1 word | ||

# The word with the highest support stands at 0.044, a far cry from the minimal support of 0.75, commonly used for associating words. | # The word with the highest support stands at 0.044, a far cry from the minimal support of 0.75, commonly used for associating words. | ||

| − | |||

| − | |||

|} | |} | ||

| − | With tweets holding at most 140 characters, it is no surprise that we are unable to derive high volumes of word associations from the data set. It is even harder to derive word associations when the data set is time-based rather than event-based. The topics discussed | + | <div style="text-align: justify;">We conclude that deriving word associations would be a huge challenge when it comes to Twitter data and may be deemed irrelevant when doing text analytics on it. With tweets holding at most 140 characters, it is no surprise that we are unable to derive high volumes of word associations from the data set. It is even harder to derive word associations when the data set is time-based rather than event-based. The topics discussed vary greatly, making it hard to formulate word associations. Furthermore, it is apparent that the tone and vocabulary used by Twitter users are casual, and with the likes of short forms and abbreviations, it is even harder to draw word associations from tweets.</div> |

| + | </div> | ||

| − | |||

| − | |||

==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Pitfalls of using conventional text analysis on social media data</font></div>== | ==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Pitfalls of using conventional text analysis on social media data</font></div>== | ||

| Line 277: | Line 304: | ||

===Multiple languages=== | ===Multiple languages=== | ||

| − | Singapore is a multilingual and multiracial community, which makes text analysis of the Singapore Twittersphere more challenging, as we have to take into account different languages. For each specific language, a dictionary is required to translate the text to the English language before any natural language processing can be done on the text. With advanced tools like RapidMiner not being able to accommodate Chinese, Malay or even Korean words, much work has to be done to come up with a localisation tool to analyse the social media data here. | + | <div style="text-align: justify;">Singapore is a multilingual and multiracial community, which makes text analysis of the Singapore Twittersphere more challenging, as we have to take into account different languages. For each specific language, a dictionary is required to translate the text to the English language before any natural language processing can be done on the text. With advanced tools like RapidMiner not being able to accommodate Chinese, Malay or even Korean words, much work has to be done to come up with a localisation tool to analyse the social media data here.</div> |

===Misspelled words and abbreviations=== | ===Misspelled words and abbreviations=== | ||

| − | With Twitter's limit of 140 characters, Twitter users are fond of using abbreviations and short forms in place of the words they want to convey. A huge challenge is to unravel misspelled words and to tell them apart from these abbreviations and short forms. This can be done using a more robust or aggressive stemmer that deciphers abbreviations, removes unnecessary repeated characters in a word and corrects short forms to their root words. | + | <div style="text-align: justify;">With Twitter's limit of 140 characters, Twitter users are fond of using abbreviations and short forms in place of the words they want to convey. A huge challenge is to unravel misspelled words and to tell them apart from these abbreviations and short forms. This can be done using a more robust or aggressive stemmer that deciphers abbreviations, removes unnecessary repeated characters in a word and corrects short forms to their root words.</div> |

===Length of status=== | ===Length of status=== | ||

| − | A status is at most 140 characters long, which makes it difficult to find word associations with strong support and confidence levels. Given such a short length, there may be insufficient space to substantiate a point, or the tweet may lack evidence of its true sentiment. | + | <div style="text-align: justify;">A status is at most 140 characters long, which makes it difficult to find word associations with strong support and confidence levels. Given such a short length, there may be insufficient space to substantiate a point, or the tweet may lack evidence of its true sentiment.</div> |

===Other media types=== | ===Other media types=== | ||

| − | Other media types (URLs, image URLs and video URLs) are common attachments that Twitter users use to convey a message. In certain cases, this media type makes up the entire tweet, which nullifies any textual analysis done on the tweet itself. Much more context could be derived if information about the link were embedded into the tweet itself. Unfortunately, such a feature is still not available, and this hinders the analysis of tweets. | + | <div style="text-align: justify;">Other media types (URLs, image URLs and video URLs) are common attachments that Twitter users use to convey a message. In certain cases, this media type makes up the entire tweet, which nullifies any textual analysis done on the tweet itself. Much more context could be derived if information about the link were embedded into the tweet itself. Unfortunately, such a feature is still not available, and this hinders the analysis of tweets.</div> |

</div> | </div> | ||

| + | |||

==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Improving the effectiveness of sentiment analysis of social media data</font></div>== | ==<div style="background: #c0deed; padding: 15px; font-family:Segoe UI; font-size: 18px; font-weight: bold; line-height: 1em; text-indent: 15px; border-left: #0084b4 solid 32px;"><font color="black">Improving the effectiveness of sentiment analysis of social media data</font></div>== | ||

| Line 297: | Line 325: | ||

=== Increasing size of training data=== | === Increasing size of training data=== | ||

| − | The larger the size of data, the more accurate the model would be. However, the time to process and apply the model may also increase. | + | <div style="text-align: justify;">The larger the size of data, the more accurate the model would be. However, the time to process and apply the model may also increase.</div> |

===Leveraging on Emoticons === | ===Leveraging on Emoticons === | ||

| − | Emoticons provide more insight into how the user is feeling with just a single character. In tweets, where the number of characters is a valuable resource, emoticons come into play quite frequently. By dissecting a tweet based on the emoticons in it and assigning a sentiment score to the emoticons used, we can get a more accurate depiction of the tweet's overall sentiment score as compared to analysing the text itself | + | <div style="text-align: justify;">Emoticons provide more insight into how the user is feeling with just a single character. In tweets, where the number of characters is a valuable resource, emoticons come into play quite frequently. By dissecting a tweet based on the emoticons in it and assigning a sentiment score to the emoticons used, we can get a more accurate depiction of the tweet's overall sentiment score as compared to analysing the text itself.</div> |

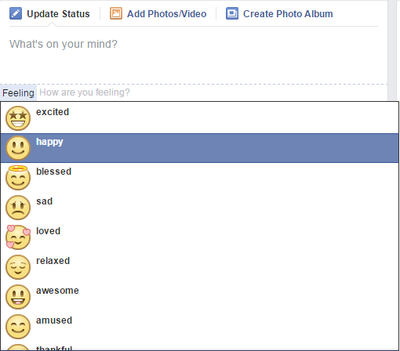

===Allowing the user to tag their feelings to their status === | ===Allowing the user to tag their feelings to their status === | ||

| − | One of the ways in which Facebook may make such analysis easier is by allowing the user to specify how he/she is feeling at the moment of posting a status. With this option, Facebook has effectively increased the probability of determining the right sentiment of the user at that point in time. This mitigates the possibility of sarcasm or other inferred sentiments within that post itself | + | <div style="text-align: justify;">One of the ways in which Facebook may make such analysis easier is by allowing the user to specify how he/she is feeling at the moment of posting a status. With this option, Facebook has effectively increased the probability of determining the right sentiment of the user at that point in time. This mitigates the possibility of sarcasm or other inferred sentiments within that post itself.</div> |

| + | |||

| + | |||

| + | [[File:Fb sentiment tagging.png|center|400px]] | ||

| − | |||

===Analyse data on an event/topic basis rather than on time=== | ===Analyse data on an event/topic basis rather than on time=== | ||

| − | The data that we used was within a given time frame of 1 month. Drilling down these tweets to a particular topic (hashtag) or an event would bring about more significant results. Brands which want to conduct sentiment analysis on social media data should make it specific to a particular campaign/event/initiative. | + | <div style="text-align: justify;">The data that we used was within a given time frame of 1 month. Drilling down these tweets to a particular topic (hashtag) or an event would bring about more significant results. Brands which want to conduct sentiment analysis on social media data should make it specific to a particular campaign/event/initiative.</div> |

</div> | </div> | ||

Revision as of 00:58, 22 April 2015

Contents

- 1 Abstract

- 2 Change in project scope

- 3 Related Work

- 4 Methodology: Text analytics using RapidMiner

- 5 Improving Accuracy

- 6 Deriving insights from emoticons

- 7 Deriving word associations from tweets

- 8 Pitfalls of using conventional text analysis on social media data

- 9 Improving the effectiveness of sentiment analysis of social media data

- 10 References

Abstract

Sentiment analysis on social media provides organisations an opportunity to monitor their reputation and brands by extracting and analysing online comments posted by Internet users about them or the market in general. There are various existing methods to carry out sentiment analysis on social media data, with varying results. In this paper, we show how to use Twitter as a corpus for sentiment analysis and investigate the effectiveness of sentiment text analysis on tweets. We adopt a supervised approach, by labelling the training data and using it to train a model for predicting the responses on the unlabelled testing data.

Change in project scope

Although anyone can easily obtain such data, there are certain challenges that can hamper the effectiveness of such analysis.

- Can conventional text analysis methods be done on social media data?

- How effective are these methods?

- What are some of the unique features of social media that we need to take note of when doing text analysis on them?

Related Work

A real-time text-based hedonometer was built to measure happiness of over 63 million Twitter users over 33 months, as recorded in the paper Temporal Patterns of Happiness and Information in a Global Social Network: Hedonometrics and Twitter (Dodds et al., 2011)[1]. It shows how a highly robust and tunable metric can be constructed with the word list chosen solely by frequency of usage.

In the paper Twitter as a Corpus for Sentiment Analysis and Opinion Mining (Pak & Paroubek, 2010)[2], the authors show how to use Twitter as a corpus for sentiment analysis and opinion mining, perform linguistic analysis of the collected corpus and build a sentiment classifier that is able to determine positive, negative and neutral sentiments for a document. The authors build a sentiment classifier using the multinomial Naïve Bayes classifier that uses N-gram and part-of-speech tags as features as it yielded the best results as compared to Support Vector Machines (SVMs) and Conditional Random Fields (CRFs) classifiers. We will be using the Naive Bayes classifier too.

In the paper Twitter Sentiment Analysis: The Good the Bad and the OMG! (Kouloumpis et al., 2011)[3], the authors investigate the usefulness of linguistic features for detecting the sentiment of tweets. The results show that part-of-speech features may not be useful for sentiment analysis in the microblogging domain.

The authors mentioned in the paper Exploiting Emoticons in Sentiment Analysis (Hogenboom et al., 2013)[4] created an emoticon sentiment lexicon in order to improve a state-of-the-art lexicon-based sentiment classification method. It demonstrated that people typically use emoticons in natural language text in order to express, stress, or disambiguate their sentiment in particular text segments, thus rendering them potentially better local proxies for people’s intended overall sentiment than textual cues. We will be analysing emoticons too to improve the accuracy of our model.

In the paper Tokenization and Filtering Process in RapidMiner (Verma et al., 2014)[5], the authors show how text mining is implemented in RapidMiner through tokenisation, stopword elimination, stemming and filtering. We will be using RapidMiner too in our methodology.

Methodology: Text analytics using RapidMiner

Download RapidMiner here

| Screenshots | Steps |

Setting up RapidMiner for text analysis: To carry out text processing in RapidMiner, we need to download the required plugin from RapidMiner's plugin repository. Click on Help > Managed Extensions and search for the text processing module. Once the plugin is installed, it should appear in the "Operators" window as seen below. | |

Data Preparation: In RapidMiner, there are a few ways in which we can read a file or data from a database. In our case, we will be reading from tweets provided by the LARC team. The tweets were given in JSON format. RapidMiner can read JSON strings, but it is unable to read nested arrays within the string. Due to this restriction, we need to extract the text from the JSON string before we can use RapidMiner to do the text analysis. We did this by converting each JSON string into a JavaScript object, extracting only the Id and text of each tweet, and writing them to a comma-separated values (.csv) file to be processed later in RapidMiner.

|
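The extraction step described above can be sketched as follows. The original work did this in JavaScript; this sketch uses Python instead, and it assumes one JSON object per line with top-level `id` and `text` fields. The function name `tweets_to_csv` and the sample records are hypothetical.

```python
import csv
import io
import json


def tweets_to_csv(json_lines):
    """Keep only the id and text of each tweet and return them as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["id", "text"])
    for line in json_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        tweet = json.loads(line)
        # Nested fields (user, entities, ...) are ignored; only the two
        # flat fields needed for text analysis are written out.
        writer.writerow([tweet["id"], tweet["text"]])
    return buffer.getvalue()


# Hypothetical sample records, not taken from the LARC data set.
sample = [
    '{"id": 1, "text": "Great service today!", "user": {"name": "a"}}',
    '{"id": 2, "text": "Stuck in traffic, again", "entities": {"urls": []}}',
]
csv_text = tweets_to_csv(sample)
```

Text that contains a comma is quoted by the CSV writer, so a reader that splits columns on "," and honours quotes will still see two columns per tweet.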

Defining a Standard

Creating a Model

| Screenshots | Steps |

|

We first used the "read CSV" operator to read the text from the prepared CSV file that was done earlier. This can be done via an "Import Configuration Wizard" or set manually.

| |

|

Each column is separated by a ",". | |

|

To check the results at any point of the process, right click on any operators and add a breakpoint. To process the document, we convert the data from nominal to text. | |

|

We convert the text data into documents. In our case, each tweet is converted in a document. | |

|

The "process document" operator is a multi-step process to break down each document into single words. The frequency of each word as well as the number of documents it occurs in are calculated and used when formulating the model.

To begin the process, double-click on the operator. | |

|

1. Tokenizing the tweet by word: Tokenization is the process of breaking a stream of text up into words or other meaningful elements called tokens to explore words in a sentence. Punctuation marks as well as other characters like brackets, hyphens, etc. are removed.

2. Converting words to lowercase: All words are transformed to lowercase as the same word would be counted differently if it was in uppercase vs. lowercase.

3. Eliminating stopwords: The most common words such as prepositions, articles and pronouns are eliminated as it helps to improve system performance and reduces text data.

4. Filtering tokens that are smaller than 3 letters in length: Filters tokens based on their length (i.e. the number of characters they contain). We set a minimum number of characters to be 3.

5. Stemming using Porter2’s stemmer: Stemming is a technique for the reduction of words into their stems, base or root. When words are stemmed, we are keeping the core of the characters which convey effectively the same meaning.

Porter Stemmer vs Snowball (Porter2)[7]

Porter: Most commonly used stemmer without a doubt, also one of the gentlest stemmers. It is one of the most computationally intensive of the algorithms (granted, not by a very significant margin). It is also the oldest stemming algorithm by a large margin.

Snowball (Porter2): Nearly universally regarded as an improvement over Porter, and for good reason. Porter himself in fact admits that Snowball is better than his original algorithm. It has a slightly faster computation time than Porter, with a fairly large community around it.

We use the Porter2 stemmer. | |
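As a rough illustration of steps 1 to 4 above, here is a minimal Python sketch. It is not what RapidMiner runs internally: the stopword list is a tiny hypothetical one, the tokenizer simply keeps runs of letters, and the stemming step is left out because Porter2 needs a third-party library (for example NLTK's `SnowballStemmer`).

```python
import re

# Tiny illustrative stopword list (an assumption); a real run would use a fuller one.
STOPWORDS = {"the", "and", "for", "with", "this", "that", "are", "was"}


def preprocess(tweet, min_length=3):
    """Apply tokenization, lowercasing, stopword removal and length filtering."""
    # 1. Tokenize: keep runs of letters, which drops punctuation and digits.
    tokens = re.findall(r"[A-Za-z]+", tweet)
    # 2. Convert every token to lowercase.
    tokens = [token.lower() for token in tokens]
    # 3. Remove stopwords.
    tokens = [token for token in tokens if token not in STOPWORDS]
    # 4. Drop tokens shorter than min_length characters.
    tokens = [token for token in tokens if len(token) >= min_length]
    # 5. Stemming would follow here; it is omitted in this sketch.
    return tokens


tokens = preprocess("The MRT was delayed AGAIN, so I am late for work!")
```

For the sample sentence, the surviving tokens are `mrt`, `delayed`, `again`, `late` and `work`; short words such as "so" and "am" are removed by the length filter rather than by the stopword list.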

|

We used TF-IDF (term frequency * inverse document frequency) to set the importance of each word to a particular label. TF-IDF takes into account 2 things: if a term appears in a lot of documents, then each occurrence of it in a document is probably not very important.

Conversely, if a term is seldom used in most of the documents, when it appears, the term is likely to be important.

| |
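The weighting idea can be made concrete with a small Python sketch. It assumes one common TF-IDF variant, relative term frequency multiplied by log(N / document frequency); RapidMiner's exact formula and normalisation may differ, and the sample documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per tokenized document."""
    n_docs = len(documents)
    # Document frequency: the number of documents each term appears in.
    doc_freq = Counter(term for doc in documents for term in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            # Relative term frequency times log inverse document frequency.
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical tokenized tweets.
docs = [["train", "late", "again"], ["train", "fast"], ["train", "late"]]
weights = tf_idf(docs)
```

Here "train" appears in every document, so its weight is zero everywhere, while "again" appears in only one document and receives the highest weight in the first tweet.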

|

Return to the main process.

We need to add the "Set Role" process to indicate the label for each tweet. We have a column called "Classification" to assign the label for that. | |

|

The "X-validation" operator creates a model based on our manual classification which can later be used on another set of data.

To begin, double click on the operator.

| |

|

We carry out an X-validation using the Naive Bayes model classification, a simple probabilistic classifier based on applying Bayes' theorem (from Bayesian statistics) with strong (naive) independence assumptions. In simple terms, a Naive Bayes classifier assumes that the presence (or absence) of a particular feature of a class (i.e. attribute) is unrelated to the presence (or absence) of any other feature.

| |
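To show what the independence assumption means in practice, here is a minimal Python sketch of a multinomial Naive Bayes classifier over word counts with add-one smoothing. This is an illustrative variant, not the implementation behind RapidMiner's operator, and the training tweets and the function names `train_naive_bayes` and `predict` are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(labelled_docs):
    """labelled_docs: list of (tokens, label) pairs."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for tokens, label in labelled_docs:
        class_counts[label] += 1
        word_counts[label].update(tokens)
        vocabulary.update(tokens)
    return class_counts, word_counts, vocabulary


def predict(model, tokens):
    """Return the label with the highest log-probability for the tokens."""
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, float("-inf")
    for label, n_docs in class_counts.items():
        # log P(label) plus the sum of log P(word | label); treating each
        # word as independent of the others is the "naive" assumption.
        score = math.log(n_docs / total_docs)
        total_words = sum(word_counts[label].values())
        for token in tokens:
            # Add-one smoothing keeps unseen words from zeroing the product.
            numerator = word_counts[label][token] + 1
            score += math.log(numerator / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical manually tagged tweets: P = positive, N = negative.
training = [
    (["love", "great", "service"], "P"),
    (["happy", "great", "day"], "P"),
    (["hate", "late", "train"], "N"),
    (["bad", "late", "service"], "N"),
]
model = train_naive_bayes(training)
```

With this toy model, `predict(model, ["great", "day"])` favours P and `predict(model, ["late", "train"])` favours N, because each word contributes its own evidence regardless of the words around it.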

|

To apply this model to a new set of data, we repeat the above steps of reading a CSV file, converting the input to text, setting the role and processing each document before applying the model to the new set of tweets.

| |

|

From the performance output, we achieved 44.6% accuracy when the model was cross validated with the original 1000 tweets that were manually tagged. To affirm this accuracy, we randomly extracted 100 tweets from the fresh set of 5000 tweets, manually tagged them and compared the tags with the values predicted by the model. The model did in fact have an accuracy of 46% on this sample, a close percentage to the 44.2% accuracy using the X-validation module.

|

Improving Accuracy

Pruning

We experimented with multiple values to determine the most appropriate amount of words to be pruned off, bearing in mind that we need a sizeable number of words with a high enough accuracy yield.

- Percentage pruned refers to the minimum share of documents in which a word must occur to stay in the word list. E.g. for 1% pruned out of the set of 1000 documents, words that appeared in fewer than 10 documents are removed from the word list.
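The pruning rule can be sketched in a few lines of Python. This is an illustration of the rule as described above, not RapidMiner's own code; the function name `prune_rare_terms` and the sample documents are hypothetical.

```python
from collections import Counter


def prune_rare_terms(documents, min_percent):
    """Keep terms that occur in at least min_percent percent of the documents."""
    n_docs = len(documents)
    # Count each term once per document, however often it repeats inside it.
    doc_freq = Counter(term for doc in documents for term in set(doc))
    min_docs = n_docs * min_percent / 100
    return {term for term, count in doc_freq.items() if count >= min_docs}


# Hypothetical tokenized tweets.
docs = [
    ["train", "late"],
    ["train", "fast"],
    ["train", "late", "again"],
    ["rain"],
]
kept = prune_rare_terms(docs, min_percent=50)
```

With 4 documents and a 50% threshold, a term must occur in at least 2 documents, so only "train" and "late" remain in the word list.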

| Percentage Pruned | Percentage Accuracy | Deviation | Size of resulting word list |

|---|---|---|---|

| 0% | 39.8% | 5.24% | 3833 |

| 0.5% | 44.2% | 4.87% | 153 |

| 1% | 42.2% | 2.68% | 47 |

| 2% | 45.1% | 1.66% | 15 |

| 5% | 43.3% | 2.98% | 1 |

Results


Using Different Classifiers

Support vector machine

K-Nearest Neighbour

Naive Bayes

Deriving insights from emoticons

Experiment

- Print the entire list of tweets that we have

- Identify the ones that have a converted emoticon tag (e.g. "😔")

- Get the list of emoticons from an emoticon library[8] and tag each emoticon as positive (P), negative (N) or neutral (X)

- Manually tag each tweet based on its sentiment.

- Cross validate that with the sentiments of the emoticons present in the tweet

- Calculate the percentage of matches between the 2 tagged values.
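The last two steps of the experiment can be sketched in Python as below. The emoticon lexicon and the tagged tweets are hypothetical, and the sketch assumes that a tweet counts as a match when any emoticon in it carries the same label as the manual tag; tweets without a known emoticon are skipped.

```python
def emoticon_match_rate(tagged_tweets, emoticon_sentiment):
    """Percentage of emoticon-bearing tweets whose manual label matches an emoticon label.

    tagged_tweets: list of (text, manual_label) pairs with labels P, N or X.
    emoticon_sentiment: dict mapping an emoticon to P, N or X.
    """
    matches = 0
    compared = 0
    for text, manual_label in tagged_tweets:
        # Labels of every known emoticon found in the tweet text.
        found = [label for emo, label in emoticon_sentiment.items() if emo in text]
        if not found:
            continue  # no known emoticon, so nothing to compare
        compared += 1
        if manual_label in found:
            matches += 1
    return 100 * matches / compared if compared else 0.0


# Hypothetical lexicon and manually tagged tweets.
lexicon = {"😊": "P", "😔": "N", "😐": "X"}
tweets = [
    ("Finally Friday 😊", "P"),
    ("Missed my bus 😔", "N"),
    ("Great, another delay 😊", "N"),  # sarcasm: emoticon and manual tag disagree
    ("No emoticon here", "P"),
]
rate = emoticon_match_rate(tweets, lexicon)
```

In this toy sample, three tweets contain a known emoticon and two of them agree with the manual tag, so the match rate is about 66.7%.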

Deriving word associations from tweets

| Screenshots | Steps |

| |

| |

|
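Support for a set of words is the share of tweets in which all of those words appear. A minimal Python sketch for word pairs is given below; the function name `pair_support` and the sample tweets are hypothetical, and the sketch does not reproduce the RapidMiner process used in this project.

```python
from collections import Counter
from itertools import combinations


def pair_support(documents):
    """Return {(word_a, word_b): support} for every pair that co-occurs in a document."""
    n_docs = len(documents)
    pair_counts = Counter()
    for doc in documents:
        # Sort the distinct words so each pair has a single canonical order.
        for pair in combinations(sorted(set(doc)), 2):
            pair_counts[pair] += 1
    return {pair: count / n_docs for pair, count in pair_counts.items()}


# Hypothetical tokenized tweets.
docs = [
    ["train", "late"],
    ["train", "late", "again"],
    ["train", "fast"],
    ["rain", "late"],
]
support = pair_support(docs)
```

Here the pair ("late", "train") appears in 2 of 4 tweets, giving a support of 0.5. Short tweets on scattered topics produce few repeated pairs, which keeps support values low.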

Pitfalls of using conventional text analysis on social media data

Multiple languages

Misspelled words and abbreviations

Length of status

Other media types

Improving the effectiveness of sentiment analysis of social media data

Increasing size of training data

Leveraging on Emoticons

Allowing the user to tag their feelings to their status

Analyse data on an event/topic basis rather than on time

References

- ↑ Dodds PS, Harris KD, Kloumann IM, Bliss CA, Danforth CM (2011) Temporal Patterns of Happiness and Information in a Global Social Network: Hedonometrics and Twitter. PLoS ONE 6(12): e26752. doi:10.1371/journal.pone.0026752

- ↑ Pak, A., & Paroubek, P. (2010). Twitter as a Corpus for Sentiment Analysis and Opinion Mining.

- ↑ Kouloumpis, E., Wilson, T., & Moore, J. (2011). Twitter Sentiment Analysis: The Good the Bad and the OMG!. In International AAAI Conference on Weblogs and Social Media. Retrieved from https://www.aaai.org/ocs/index.php/ICWSM/ICWSM11/paper/view/2857/3251

- ↑ Hogenboom, A. and Bal, D. and Frasincar, F. and Bal, M. and de Jong, F.M.G. and Kaymak, U. (2013) Exploiting emoticons in sentiment analysis. In: Proceedings of the 28th Annual ACM Symposium on Applied Computing, SAC 2013, 18-22 Mar 2013, Lisbon, Portugal. pp. 703-710. ACM. ISBN 978-1-4503-1656-9

- ↑ Verma, T., & Renu, D. G. (2014). Tokenization and Filtering Process in RapidMiner. International Journal of Applied Information Systems (IJAIS)–ISSN, 2249-0868.

- ↑ Wiebe, J., Wilson, T., & Cardie, C. (2005). Annotating Expressions of Opinions and Emotions in Language. Language Resources and Evaluation, 165-210. Retrieved from http://people.cs.pitt.edu/~wiebe/pubs/papers/lre05.pdf

- ↑ Tyranus, S. (2012, June 26). What are the major differences and benefits of Porter and Lancaster Stemming algorithms? Retrieved from http://stackoverflow.com/questions/10554052/what-are-the-major-differences-and-benefits-of-porter-and-lancaster-stemming-alg

- ↑ Emoticon - emotions library https://github.com/wooorm/emoji-emotion/blob/master/data/emoji-emotion.json