Group04 Final


Overview

In this section, we use nonparametric statistical tests and text analysis to understand the factors that affect content performance. A clear understanding of these factors will enable the company to shape its future strategy and continue to improve performance.

We will explore posting times and content as factors of performance and seek an appropriate methodology to analyze their effects on content performance. To capture a wide range of audiences, the company is currently active on Facebook and YouTube. We will thus be looking at data scraped from Facebook and YouTube.

For the Facebook Post dataset, the performance of posts will be compared across posting times to determine whether specific posting times affect performance. Text analysis will be performed on consumers’ comments from the Facebook Comment dataset and the YouTube dataset to identify whether the different topics that surface result in differing sentiments. After our literature review, we chose Topic Modeling and Sentiment Analysis as the preferred methodologies for text analysis, and the Median test to compare performance across different posting times.

Facebook Posts

The company is concerned that publishing content on Facebook on different days and at different times will affect the content’s engagement performance. However, it has yet to establish a methodology to study the impact of publishing day and time on performance.

Studies have shown that identifying the optimal time to reach an audience will drive social media engagement and traffic. Due to Facebook's algorithm-based feed, having a large audience does not necessarily translate to high viewership. Instead of viewership, Facebook uses reach as the metric for the number of people who have seen a particular piece of content.

The main objective is to identify the optimal time to publish a post, i.e. the time that results in the highest reach, as reach drives engagement. However, we were unable to scrape this metric as it is not publicly available. We considered combining the three performance metrics that were scraped (i.e. number of reactions, shares, and comments) into a single metric as a proxy for reach. However, this approach is infeasible because each metric accumulates over a different length of time. While we have determined that comments are no longer made on posts more than six days old, we do not have time-series data on reactions and shares to perform a similar analysis and determine when the last reaction or share occurs after a post is published.

Due to the limited scope of the scraped data, we used the number of comments as a proxy for reach and as our metric for performance. We acknowledge this as a limitation, as comments are not a true representation of viewership.

Facebook Comments

For the Facebook comments, we seek to understand how consumers perceive the respective Facebook posts. Popular topics identified within the comments and their sentiment scores will be explored using Latent Dirichlet Allocation (LDA) and Sentiment Analysis. According to our literature review, sentiment analysis allows us to identify positive and negative opinions and emotions. It will be performed using the TextBlob Python package, which was chosen because prior research has used it to perform sentiment analysis on social media. The objective is to identify possible insights and define actionable plans based on the Facebook Comment dataset.

YouTube

We will also analyze comments made on the YouTube videos through sentiment analysis, as this technique has been used for “analysis of user comments” from YouTube videos by other researchers. Performing sentiment analysis on YouTube comments is ideal as “mining the YouTube data makes more sense than any other social media websites as the contents here are closely related to the concerned topic [the video]”. We will perform sentiment analysis, using TextBlob, on scraped comments to understand the consumers’ sentiments, i.e. level of positivity, vis-a-vis the content of the published videos. These comments would be from videos published from 2017 onwards, ensuring that the analysis is still relevant given the fast-paced dynamic nature of YouTube channels.

Methods and Analysis

Facebook Posts

The number of comments, used as a proxy metric for reach, will be compared across publishing days and times using nonparametric statistical tests to determine the timing that attracts the most comments.

Methodology

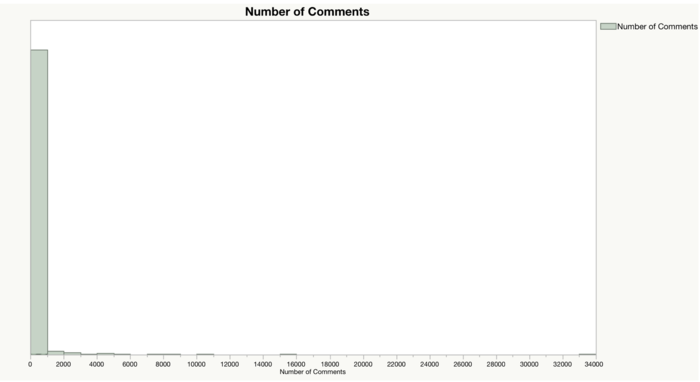

As the chosen performance indicator has a right-skewed distribution (Display 1), the nonparametric Median Test will be used to test the hypothesis that population medians are equal across categorical groups. This determines whether there are statistically significant differences between publishing days and times, or whether the differing levels of performance arise from random differences in the selected samples. The median was chosen as the measure of central tendency because the number of comments is right-skewed and the outliers within the dataset would significantly distort the mean.

The Median Test was chosen over the Kruskal-Wallis test, as it is more robust against outliers and is therefore preferred for datasets with extreme outliers. Furthermore, the Kruskal-Wallis test requires several assumptions to hold. In particular, the assumption that variances are approximately equal across groups does not hold for several of the time groups.

Specifically, the Median test will test the following hypotheses to compare performance across publishing days:

H0: The median number of comments is equal across all publishing days. H1: The median number of comments differs for at least one publishing day.

To compare performance across publishing time bins, the Median test will test the following hypotheses:

H0: The median number of comments is equal across all publishing time bins. H1: The median number of comments differs for at least one publishing time bin.

To determine which publishing day or time groups are significantly different from the others, pairwise Median Tests are carried out as post-hoc tests. Finally, the day or time group(s) with the highest medians will be identified as the optimal publishing day(s) or time(s).
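The omnibus test and its pairwise follow-up can be sketched in plain Python. This is a minimal illustration, not the JMP Pro procedure used in the analysis. It assumes values tied with the grand median are counted as "at or below", applies no continuity correction, and uses a Bonferroni adjustment for the pairwise tests, which the analysis above does not specify. The function names and any data passed in are hypothetical.

```python
import math
from itertools import combinations
from statistics import median


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df."""
    if x <= 0:
        return 1.0
    y = x / 2.0
    # Start from Q(1, y) for even df or Q(1/2, y) for odd df, then apply
    # the recurrence Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1).
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))
    while a < df / 2.0:
        q += y ** a * math.exp(-y) / math.gamma(a + 1.0)
        a += 1.0
    return q


def median_test(groups):
    """Median test on a dict mapping group name -> list of values.

    Returns (chi-square statistic, p-value, grand median).
    """
    pooled = [v for values in groups.values() for v in values]
    grand = median(pooled)
    n = len(pooled)
    above = {k: sum(v > grand for v in vals) for k, vals in groups.items()}
    total_above = sum(above.values())
    total_below = n - total_above
    if total_above == 0:
        raise ValueError("no value lies above the grand median")
    stat = 0.0
    for k, vals in groups.items():
        cells = ((above[k], total_above), (len(vals) - above[k], total_below))
        for observed, column_total in cells:
            expected = len(vals) * column_total / n
            stat += (observed - expected) ** 2 / expected
    return stat, chi2_sf(stat, len(groups) - 1), grand


def pairwise_median_tests(groups):
    """Post-hoc pairwise tests with Bonferroni-adjusted p-values (assumption)."""
    pairs = list(combinations(groups, 2))
    adjusted = {}
    for a, b in pairs:
        _, p, _ = median_test({a: groups[a], b: groups[b]})
        adjusted[(a, b)] = min(1.0, p * len(pairs))
    return adjusted
```

Calling `median_test` with one list of comment counts per publishing day (or per time bin) gives the omnibus result; `pairwise_median_tests` is only worth running when that result is significant.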

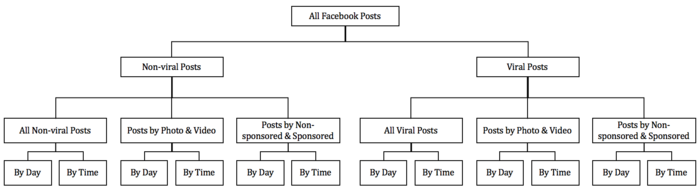

The dataset will be split into a non-viral post dataset and a viral post dataset, as viral posts share several common characteristics that non-viral posts do not have. Due to the different nature of the two types of posts, they should not be analyzed together, since posting time may be a determining factor in the eventual virality of a post. Viral posts are defined as posts whose performance is an outlier. Using the Quantile Range Outlier analysis in JMP Pro to identify outlier observations, we found 71 Facebook posts that are viral posts.
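The viral/non-viral split can be illustrated with a quantile-range rule. The sketch below assumes a tail quantile of 0.1 and a multiplier Q of 3, which we understand to be JMP's default settings; the settings actually used are not stated above. It also treats only high outliers as viral, since comment counts are right-skewed. The function name is hypothetical.

```python
from statistics import quantiles


def split_viral(comment_counts, tail=0.1, q=3.0):
    """Split posts into (non_viral, viral) using a quantile-range rule.

    A count is flagged as viral when it lies more than q times the
    inter-quantile range above the upper tail quantile. The tail and q
    values are assumptions, not settings taken from the analysis.
    """
    cuts = quantiles(comment_counts, n=100, method="inclusive")
    lower = cuts[round(tail * 100) - 1]          # e.g. 10th percentile
    upper = cuts[round((1 - tail) * 100) - 1]    # e.g. 90th percentile
    fence = upper + q * (upper - lower)
    non_viral = [c for c in comment_counts if c <= fence]
    viral = [c for c in comment_counts if c > fence]
    return non_viral, viral
```

Each of the two resulting lists would then be analyzed separately across publishing days and times.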

For each of the two datasets, the performance of all posts will be analyzed across publishing days and times. Next, each dataset will be further broken down into characteristic groups, such as photo posts, video posts, non-sponsored posts, and sponsored posts. Performance for each of these characteristic groups will then be analyzed across publishing days and times. The process is illustrated in Display 2.

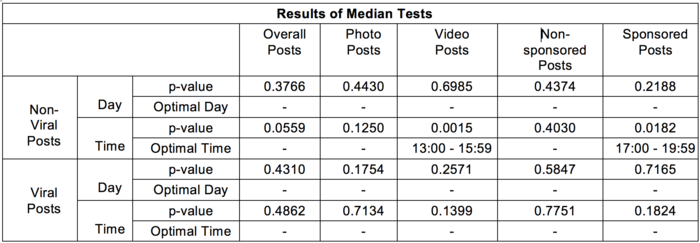

Results

For non-viral posts, only the median comments of Video posts and Sponsored posts across the time bins are significantly different. After performing the post-hoc test, we have identified that for Video posts, the optimal publishing time is from 1PM to 3:59PM as it has the highest median comments of 130. As for Sponsored posts, the optimal publishing time is from 5PM to 7:59PM as it has the highest median comments of 71.

As for viral posts, there are no significant differences in median comments across publishing days or times. There is therefore no optimal publishing time that may generate a viral post, as defined by the number of comments. This result is not unexpected, as the main drivers of viral posts typically revolve around the content of the posts. Content that generates buzz or is non-traditional is commonly found across viral content.

Business Insights

Further studies should be done to better understand the impact that publishing day and time may have on reach itself, instead of using comments as a proxy for reach. The company may wish to start collecting internal Facebook metrics regularly in order to reanalyze its performance across publishing days and times. Facebook Insights collects time-series and cross-sectional data on multiple metrics and allows them to be downloaded. With these data, the company may gain a better understanding of multiple performance metrics (e.g. reach and engagement), as well as of audience behavior over time.

Facebook Comments

Facebook comments were used to identify popular topics. We mimicked the methodology of research on Twitter, another social media platform, performing sentiment analysis on topics derived from LDA. “Topic sentiment analysis provides a more precise snapshot of the sentiment distribution”.

Methodology

The Facebook Comments dataset was cleaned to ensure that the results generated were reliable. Emojis had been scraped as symbols, so an iterative loop using Python's regular expression package removed these symbols. Next, stop words were removed, and the remaining words were stemmed and tokenized, as this is a requirement of natural language processing functions.
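The cleaning steps can be sketched as follows. This is an illustrative version only: it uses a single regular expression substitution rather than an iterative loop, the stop-word list is a tiny hypothetical stand-in for a full list, and stemming is left out (a stemmer such as NLTK's `PorterStemmer` could be applied to each token afterwards).

```python
import re

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"a", "and", "is", "of", "so", "the", "this", "to"}

# Anything that is not a lowercase letter or whitespace (emojis scraped as
# symbols, punctuation, digits) is replaced by a space.
NON_LETTERS = re.compile(r"[^a-z\s]")


def clean_comment(text, stop_words=STOP_WORDS):
    """Lowercase a comment, strip symbols, tokenize, and drop stop words."""
    text = NON_LETTERS.sub(" ", text.lower())
    tokens = text.split()  # whitespace tokenization
    return [t for t in tokens if t not in stop_words]
```

Note that this pattern also discards digits and non-English letters, which may or may not be desirable for comments containing local terms.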

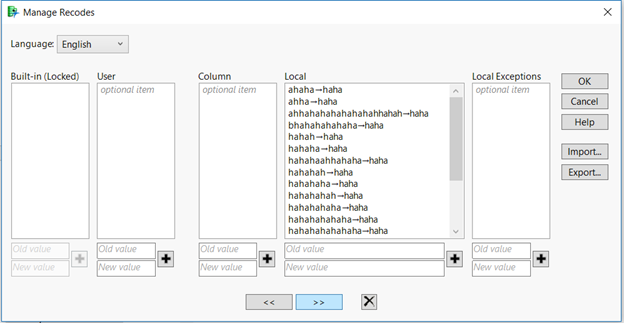

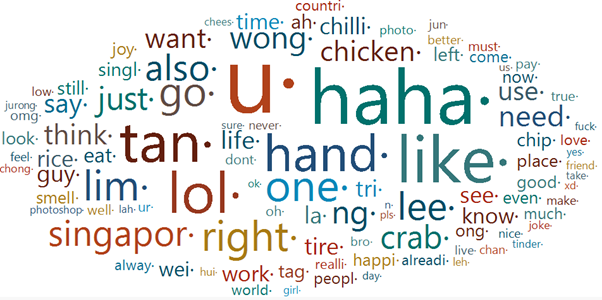

Next, using JMP Pro’s Text Explorer, we created a word cloud to explore the remaining text. As JMP Pro is unable to stem different versions of ‘haha’, we used the Manage Recodes function in JMP Pro to recode them to “haha” (Display 3). Since this recoding is done natively within JMP Pro, the different versions of ‘hahahaha’ are still present in the underlying dataset. Random words were also present (Display 4). These words are meaningless and would affect downstream analysis; hence, we required each word to have at least 3 characters.
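For readers working outside JMP Pro, the recoding and minimum-length rule could be approximated in Python. The sketch below is an assumption about how the variants look (repetitions of "ha" with optional extra letters), not a reproduction of the Manage Recodes list in Display 3.

```python
import re

# Matches laughter variants such as "haha", "hahaha", "hahahah", "ahaha".
# The exact set of variants in the dataset is an assumption.
HAHA_VARIANT = re.compile(r"a?(?:ha+){2,}h?")


def recode_and_filter(tokens, min_len=3):
    """Recode laughter variants to 'haha' and drop words shorter than min_len."""
    kept = []
    for token in tokens:
        if HAHA_VARIANT.fullmatch(token):
            token = "haha"
        if len(token) >= min_len:
            kept.append(token)
    return kept
```

Unlike recoding inside JMP Pro, applying this before export would also change the stored dataset, so the variants would no longer appear downstream.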

Next, LDA in JMP Pro was used to understand the topics raised by Facebook commentators. We selected 10 as the preferred number of topics. However, after the first round of LDA, some contextually ambiguous terms remained that might dilute the description of the topics, so we added these terms to the stop words.

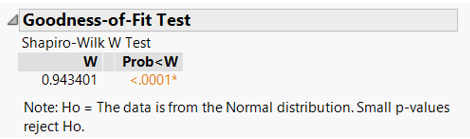

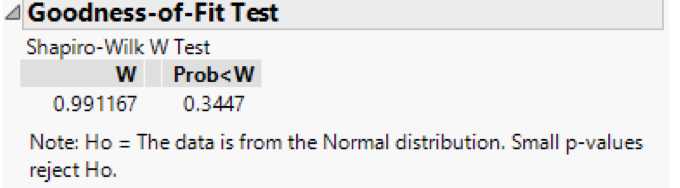

LDA was run again and 4 prominent topics were chosen for sentiment analysis, in order to understand the sentiment for each topic. Next, we examined the distribution of sentiment scores to decide on the appropriate measure of central tendency, i.e. mean or median. As the distribution of sentiment scores does not clearly show skewness, we used the Shapiro-Wilk test in JMP Pro to test for normality. The hypotheses are as follows:

H0: The sentiment scores are normally distributed. H1: The sentiment scores are not normally distributed.

The results of this test can be seen in Display 5. At the 95% confidence level, we reject the null hypothesis that the sentiment scores are normally distributed.

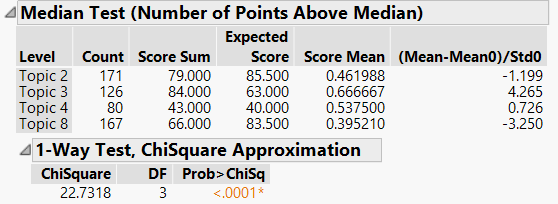

For confirmatory analysis, a nonparametric Median test was run in JMP Pro to test whether the median sentiment score should be used for further analysis. The dataset fulfils the Median test assumptions: the sentiment scores for each topic are independent of each other and their distributions have the same shape.

The hypotheses are:

The results of this test can be seen in Display 6. At the 95% confidence level, we reject the null hypothesis that the median sentiment scores for each topic should not be utilized for further analysis.

Results

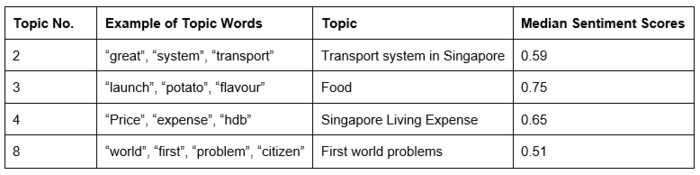

The table below summarises the median scores for each topic. As we have defined sentiment scores ≥ 0.5 as positive and < 0.5 as negative, commentators have positive sentiments when commenting on these 4 topics. However, topics like the transport system in Singapore and first-world problems are only borderline positive, which suggests that commentators dislike either the topic or the way the content was communicated (i.e. a humorous or serious tone). Topics 3 and 4 have clearly positive sentiment scores, which shows that commentators are generally positive about them.
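The labelling rule above (median score per topic, positive at ≥ 0.5) is simple enough to express directly. The sketch below is illustrative; the function name is hypothetical and any scores passed in would come from the sentiment analysis step.

```python
from statistics import median


def label_topics(scores_by_topic, threshold=0.5):
    """Return {topic: (median score, 'positive' or 'negative')}.

    A topic is positive when its median sentiment score is at or above
    the threshold, and negative otherwise.
    """
    labels = {}
    for topic, scores in scores_by_topic.items():
        m = median(scores)
        labels[topic] = (m, "positive" if m >= threshold else "negative")
    return labels
```

A separate "borderline" band is not coded here because the report does not define one numerically.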

Business Insights

The company wants to focus on positive topics to portray a cheerful image. However, being a new media platform, it is unable to avoid covering negative topics entirely. Hence, our proposal is to communicate such topics in a positive manner. As seen in Table 9, the company can now understand commentators’ sentiment towards these topics. Topics that have negative or borderline positive sentiments should be presented in a more humorous manner. For example, the company could pay more attention to the communication and tone used for the topics “first-world problems” and “transportation system in Singapore” to generate more positive consumer sentiments. Finally, the company can use consumer sentiments to identify successful and unsuccessful topics, understand what works and what does not, and apply these lessons to future content.

YouTube

The following analysis uses comments on YouTube videos to study consumer sentiments. As with the Facebook comments, we examined sentiments by topic. However, LDA was not performed, as we seek to understand sentiments in relation to each video’s topic. Instead, the tags of each video, which were created by the company, were used as a proxy for its topic and content. Closer inspection made it clear that tags represent a video's content better than its title.

Methodology

We removed emojis and stop words via NLTK. Positive sentiment scores were obtained using TextBlob with its NaiveBayesAnalyzer. Although the distribution of sentiment scores looked normal visually, a Shapiro-Wilk test was carried out to confirm whether the sentiment scores were normally distributed. The hypotheses are as follows:

H0: The sentiment distribution is normal. H1: The sentiment distribution is not normal.

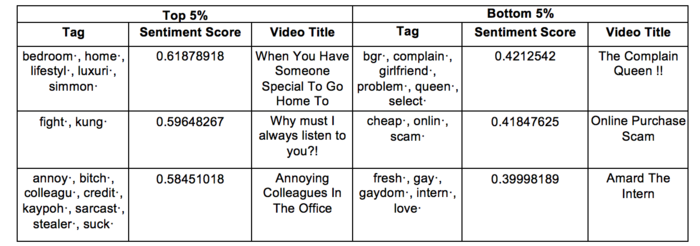

Using the web URL, we assigned a sentiment score to each video tag based on the appropriate measure of central tendency. For tags with more than 30 comments, a Shapiro-Wilk test was conducted. For tags with fewer than 30 comments, the absolute skew value was used. Finally, the tags of the top and bottom 5% of YouTube videos, ranked by sentiment score, were presented. We collapsed tags from the same video into one row and report the corresponding title.
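The per-tag scoring rule can be sketched as below. Several details are assumptions made for illustration: the skew cut-off of 1.0 and the 0.05 significance level are not stated in the methodology, the normality test is passed in as a function returning a p-value (for example a wrapper around `scipy.stats.shapiro`), and the skew rule is used as a fallback whenever no such function is supplied.

```python
import math
from statistics import mean, median, pstdev


def skewness(xs):
    """Population skewness (third standardized moment)."""
    m, s = mean(xs), pstdev(xs)
    if s == 0:
        return 0.0
    return sum((x - m) ** 3 for x in xs) / (len(xs) * s ** 3)


def tag_score(scores, normal_pvalue=None, alpha=0.05, skew_limit=1.0, min_n=30):
    """Mean if the scores look roughly normal, otherwise the median.

    normal_pvalue, alpha and skew_limit are hypothetical parameters.
    """
    if len(scores) > min_n and normal_pvalue is not None:
        looks_normal = normal_pvalue(scores) >= alpha
    else:
        looks_normal = abs(skewness(scores)) < skew_limit
    return mean(scores) if looks_normal else median(scores)


def top_and_bottom(score_by_item, frac=0.05):
    """Return (top, bottom) lists of (item, score), each holding frac of items."""
    ranked = sorted(score_by_item.items(), key=lambda kv: kv[1])
    k = max(1, math.ceil(len(ranked) * frac))
    return ranked[-k:][::-1], ranked[:k]
```

Because the cut-offs are assumptions, the ranking produced by such a sketch should be checked against the chosen thresholds before drawing conclusions about individual tags.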

Results

After conducting the Shapiro-Wilk test, at a 95% confidence level, we can conclude that most videos have an average sentiment score of 0.49, i.e. most consumers leave borderline negative comments. This can be seen in the display below:

After analyzing tags, the results are:

Most of the top and underperforming tags originated from the same video. The company should use these videos as case studies to understand how to obtain positive sentiment.

Business Insights

This methodology provides a systematic process to understand the sentiments of YouTube comments, and it shows that most consumers leave borderline negative comments. The company can also identify top-performing and underperforming videos to learn from. For example, although “HPB” has been tagged in multiple videos, only 1 had high sentiment scores while the rest had lower sentiment scores. The company needs to understand what kind of content combination works well with “HPB” and what does not. In summary, analyzing published consumer comments allows the company to understand what works and what does not. Future research could look at longitudinal studies, i.e. the perceptions of topics across time. The Naive Bayes classifier could be trained in a Singaporean context, i.e. with Singlish and local lingo, to improve the accuracy of sentiment scores. Furthermore, the use of tags as a proxy for YouTube video content could be studied further. Finally, due to the dynamic nature of the industry, such analysis must be re-run regularly to keep insights up to date.