Group04 Final


Revision as of 14:20, 8 April 2018


Overview

In this section, we will use nonparametric statistical tests and text analysis to understand the factors that affect content performance. A clear understanding of these factors will enable the company to determine its future strategy and continuously strive for better performance.

We will examine posting time and content as factors of performance and identify an appropriate methodology for analyzing their effects on content performance. To reach a wide range of audiences, the company is currently active on Facebook and YouTube, so we will analyze data scraped from both platforms.

For the Facebook Post dataset, the performance of posts will be compared across posting times to determine whether specific posting times affect performance. Text analysis will be performed on consumers’ comments from the Facebook Comment dataset and the YouTube dataset to identify whether different topics surfaced in the comments are associated with differing sentiments. Based on our literature review, we have chosen Topic Modeling and Sentiment Analysis as the preferred methodologies for text analysis, while the Median test will be used to compare performance across different posting times.

Facebook Posts

The company is concerned that publishing content on Facebook on different days and at different times affects its content’s engagement performance. However, it has yet to establish a methodology to study the impact of publishing day and time on performance.

Studies have shown that identifying the optimal time to reach an audience drives social media engagement and traffic. Because Facebook uses an algorithm-based feed, having a large audience does not necessarily translate into high viewership. Instead of viewership, Facebook uses reach, the number of people who have seen a particular piece of content, as its metric.

The main objective is to identify the optimal time to publish a post, i.e. the time that results in the highest reach, since reach drives engagement. However, we were unable to scrape this metric because it is not publicly available. We considered combining the three performance metrics that were scraped (i.e. number of reactions, shares, and comments) into a single metric as a proxy for reach. However, this approach is infeasible because each metric accumulates data over a different length of time. While we have determined that no comments are made on posts older than six days, we do not have time-series data on reactions and shares to perform a similar analysis and determine when the last reaction or share occurs after a post is published.

Due to the limited scope of the scraped data, we use the number of comments as a proxy for reach and as our performance metric. We acknowledge this as a limitation, as comments are not a true representation of viewership.
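The six-day finding for comments rests on comparing each comment's timestamp with its post's publication time. A minimal sketch of that check is shown below, assuming each scraped comment can be paired with its post's publication time as two `datetime` values; the input format and the function names are hypothetical and only illustrate the idea.

```python
from datetime import datetime, timedelta


def comment_lags_in_days(pairs):
    """Days elapsed between a post's publication and each of its comments.

    `pairs` is a list of (post_published, comment_created) datetime tuples
    (a hypothetical input format used only for this sketch).
    """
    return [(created - published) / timedelta(days=1) for published, created in pairs]


def last_comment_lag(pairs):
    """Longest observed delay, in days, between publication and a comment."""
    return max(comment_lags_in_days(pairs))


def share_within(pairs, days):
    """Fraction of comments made within `days` days of publication."""
    lags = comment_lags_in_days(pairs)
    return sum(1 for lag in lags if lag <= days) / len(lags)


if __name__ == "__main__":
    posted = datetime(2018, 4, 2, 10, 0)
    sample = [
        (posted, datetime(2018, 4, 2, 12, 0)),  # 2 hours after publication
        (posted, datetime(2018, 4, 4, 10, 0)),  # 2 days after publication
        (posted, datetime(2018, 4, 8, 10, 0)),  # 6 days after publication
    ]
    print(last_comment_lag(sample))      # 6.0
    print(share_within(sample, days=2))  # 0.6666666666666666
```

Running the same check over all posts and finding that the longest lag does not exceed six days is what allows comments to be treated as fully accumulated for posts older than that.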

Facebook Comments

For the Facebook comments, we seek to understand how consumers perceive the respective Facebook posts. Popular topics within the comments will be identified using Latent Dirichlet Allocation (LDA), and their sentiment will be explored using Sentiment Analysis. Based on our literature review, sentiment analysis will allow us to identify positive and negative opinions and emotions. It will be performed using the TextBlob Python package, which prior research has used to perform sentiment analysis on social media. The objective is to identify possible insights and define actionable plans based on the Facebook Comment dataset.
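Once each comment has a dominant LDA topic and a polarity score, comparing sentiment across topics reduces to grouping and averaging. The sketch below assumes both values were computed beforehand (for example, polarity from TextBlob's `TextBlob(text).sentiment.polarity`, which ranges from -1 to 1) so that it stays dependency-free; the function names and the zero-width neutral band are illustrative choices, not the group's implementation.

```python
from collections import defaultdict
from statistics import mean


def polarity_label(polarity, neutral_band=0.0):
    """Map a polarity score in [-1, 1] to a coarse label.

    The neutral band is a hypothetical threshold chosen for illustration.
    """
    if polarity > neutral_band:
        return "positive"
    if polarity < -neutral_band:
        return "negative"
    return "neutral"


def sentiment_by_topic(scored_comments, neutral_band=0.0):
    """Average polarity, comment count and label for each topic.

    `scored_comments` holds (topic_id, polarity) pairs, where topic_id is the
    dominant LDA topic of a comment and polarity is its sentiment score,
    both computed beforehand.
    """
    by_topic = defaultdict(list)
    for topic_id, polarity in scored_comments:
        by_topic[topic_id].append(polarity)
    summary = {}
    for topic_id, scores in sorted(by_topic.items()):
        avg = mean(scores)
        summary[topic_id] = (avg, len(scores), polarity_label(avg, neutral_band))
    return summary


if __name__ == "__main__":
    scored = [(0, 0.5), (0, 0.1), (1, -0.4), (1, 0.0), (2, 0.0)]
    for topic_id, (avg, n, label) in sentiment_by_topic(scored).items():
        print(topic_id, round(avg, 2), n, label)
```

Reporting the comment count next to each average helps flag topics whose mean polarity rests on only a handful of comments.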

YouTube

We will also analyze comments on the company’s YouTube videos through sentiment analysis, as other researchers have used this technique for the “analysis of user comments” on YouTube videos. Sentiment analysis is well suited to YouTube comments because “mining the YouTube data makes more sense than any other social media websites as the contents here are closely related to the concerned topic [the video]”. We will use TextBlob to perform sentiment analysis on the scraped comments to understand consumers’ sentiments, i.e. their level of positivity, vis-à-vis the content of the published videos. Only comments on videos published from 2017 onwards will be analyzed, to ensure that the analysis remains relevant given the fast-paced, dynamic nature of YouTube channels.
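The 2017 cutoff and the per-video positivity measure can be sketched as a filter followed by a tally. This is a minimal illustration under assumptions: each comment is a dict with the hypothetical keys `video_id`, `video_published` and `polarity` (a precomputed score in [-1, 1], e.g. from TextBlob), and "positive" is taken to mean a polarity strictly above zero.

```python
from collections import defaultdict
from datetime import date

# Only videos published from 2017 onwards are analyzed.
CUTOFF = date(2017, 1, 1)


def positivity_by_video(comments, cutoff=CUTOFF):
    """Share of positive comments for each video published on or after `cutoff`.

    Each comment is a dict with the hypothetical keys "video_id",
    "video_published" (a date) and "polarity" (a score in [-1, 1]).
    """
    tallies = defaultdict(lambda: [0, 0])  # video_id -> [positive, total]
    for comment in comments:
        if comment["video_published"] < cutoff:
            continue  # skip comments on videos published before the cutoff
        tally = tallies[comment["video_id"]]
        tally[1] += 1
        if comment["polarity"] > 0:
            tally[0] += 1
    return {video: positive / total for video, (positive, total) in tallies.items()}


if __name__ == "__main__":
    sample = [
        {"video_id": "a", "video_published": date(2016, 11, 5), "polarity": 0.8},
        {"video_id": "b", "video_published": date(2017, 3, 1), "polarity": 0.4},
        {"video_id": "b", "video_published": date(2017, 3, 1), "polarity": -0.2},
        {"video_id": "c", "video_published": date(2018, 1, 9), "polarity": 0.1},
    ]
    print(positivity_by_video(sample))  # {'b': 0.5, 'c': 1.0}
```

Video "a" is dropped because it was published before 2017, so its comments never enter the tally.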

Methods and Analysis

Facebook Posts

The number of comments, used as a proxy for reach, will be compared across publishing days and times using nonparametric statistical tests to determine which timing yields the most comments.

Methodology

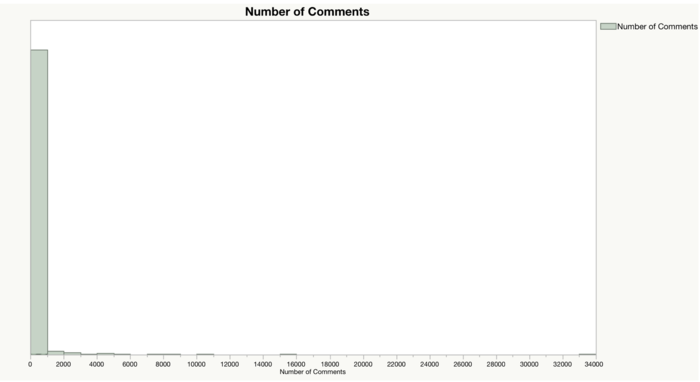

As the chosen performance indicator has a right-skewed distribution (Display 1), the nonparametric Median Test will be used to test the hypothesis that population medians are equal across categorical groups. This determines whether the differences in performance between publishing days and times are statistically significant, or whether they could be explained by random variation in the selected samples. The median has been chosen as the measure of central tendency because the distribution is right-skewed and the outliers within the dataset would significantly distort the mean.
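For reference, the standard form of Mood's median test, which is what "Median Test" usually denotes, can be written as follows. The pooled sample of all $N$ posts has grand median $M$, and group $j = 1, \dots, k$ (a publishing day or time bin) contains $n_j$ posts; $O_{1j}$ counts the posts in group $j$ with more comments than $M$ and $O_{2j}$ those at or below $M$. Counting ties in the "at or below" row is one convention among several (it is the default in SciPy's `median_test`).

$$
R_i = \sum_{j=1}^{k} O_{ij}, \qquad
E_{ij} = \frac{R_i \, n_j}{N}, \qquad
\chi^2 = \sum_{i=1}^{2} \sum_{j=1}^{k} \frac{(O_{ij} - E_{ij})^2}{E_{ij}}
$$

Under the null hypothesis of equal medians, $\chi^2$ approximately follows a chi-square distribution with $k - 1$ degrees of freedom. For the seven publishing days this gives 6 degrees of freedom, so at $\alpha = 0.05$ the null hypothesis would be rejected when $\chi^2 > 12.59$.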

The Median Test was chosen over the Kruskal-Wallis test because it is more robust against outliers, which makes it the more suitable choice for datasets with extreme outliers. Furthermore, the Kruskal-Wallis test requires several assumptions to hold. In particular, the assumption that variances are approximately equal across groups does not hold for several of the time groups.
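The robustness argument can be checked directly: the median test only records whether each observation lies above, or at or below, the grand median, so making a group's largest value far more extreme leaves the contingency table, and hence the statistic, unchanged. Below is a dependency-free sketch of Mood's median test without continuity correction, with the chi-square tail probability computed from the regularized incomplete gamma function. In practice `scipy.stats.median_test` offers the same test (note that by default it applies Yates' continuity correction when there are only two groups); the comment counts here are made up for illustration.

```python
import math
import statistics

_EPS = 1e-14
_FPMIN = 1e-300
_ITMAX = 1000


def _gamma_p_series(a, x):
    """Regularized lower incomplete gamma P(a, x) by its power series (used when x < a + 1)."""
    ap, term = a, 1.0 / a
    total = term
    for _ in range(_ITMAX):
        ap += 1.0
        term *= x / ap
        total += term
        if abs(term) < abs(total) * _EPS:
            break
    return total * math.exp(-x + a * math.log(x) - math.lgamma(a))


def _gamma_q_contfrac(a, x):
    """Regularized upper incomplete gamma Q(a, x) by a continued fraction (used when x >= a + 1)."""
    b = x + 1.0 - a
    c, d = 1.0 / _FPMIN, 1.0 / b
    h = d
    for i in range(1, _ITMAX):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        d = d if abs(d) >= _FPMIN else _FPMIN
        c = b + an / c
        c = c if abs(c) >= _FPMIN else _FPMIN
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < _EPS:
            break
    return h * math.exp(-x + a * math.log(x) - math.lgamma(a))


def chi2_sf(x, df):
    """P(X > x) for a chi-square random variable X with `df` degrees of freedom."""
    if x <= 0:
        return 1.0
    a, half_x = df / 2.0, x / 2.0
    if half_x < a + 1.0:
        return 1.0 - _gamma_p_series(a, half_x)
    return _gamma_q_contfrac(a, half_x)


def median_test(*groups):
    """Mood's median test without continuity correction.

    Returns (statistic, p_value, grand_median, (above, at_or_below)).
    """
    pooled = [value for group in groups for value in group]
    grand_median = statistics.median(pooled)
    above = [sum(1 for value in group if value > grand_median) for group in groups]
    at_or_below = [len(group) - n_above for group, n_above in zip(groups, above)]
    row_totals = (sum(above), sum(at_or_below))
    if 0 in row_totals:
        raise ValueError("all observations fall on one side of the grand median")
    statistic = 0.0
    for group, n_above, n_below in zip(groups, above, at_or_below):
        for observed, row_total in ((n_above, row_totals[0]), (n_below, row_totals[1])):
            expected = row_total * len(group) / len(pooled)
            statistic += (observed - expected) ** 2 / expected
    return statistic, chi2_sf(statistic, len(groups) - 1), grand_median, (above, at_or_below)


if __name__ == "__main__":
    # Hypothetical comment counts for two publishing groups.
    group_a, group_b = [1, 2, 3, 4], [5, 6, 7, 8]
    print(median_test(group_a, group_b)[:2])
    # Replacing 8 with an extreme outlier leaves the statistic unchanged.
    print(median_test(group_a, [5, 6, 7, 8000])[:2])
```

A mean-based comparison of the same two samples would shift dramatically when 8 becomes 8000, whereas the median test's statistic stays at 8.0 in both runs.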

Specifically, to compare performance across publishing days, the Median test will test the following hypotheses:

H0: The median number of comments is the same for all publishing days.

H1: The median number of comments differs for at least one publishing day.

To compare performance across publishing time bins, the Median test will test the following hypotheses:

H0: The median number of comments is the same for all publishing time bins.

H1: The median number of comments differs for at least one publishing time bin.
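Before these hypotheses can be tested, each post has to be assigned to a publishing-day group and a publishing time-bin group. The exact time bins are not specified here, so the six-hour bins below are a hypothetical choice, as is the (publication time, comment count) input format; weekday names are taken from `datetime.weekday()` rather than `strftime` so that they do not depend on the system locale.

```python
from collections import defaultdict
from datetime import datetime

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]

# Hypothetical six-hour publishing time bins, keyed by their starting hour.
TIME_BINS = [(0, "00:00-05:59"), (6, "06:00-11:59"), (12, "12:00-17:59"), (18, "18:00-23:59")]


def time_bin(published):
    """Return the label of the time bin containing the post's publishing hour."""
    label = TIME_BINS[0][1]
    for start_hour, name in TIME_BINS:
        if published.hour >= start_hour:
            label = name
    return label


def group_comment_counts(posts):
    """Split comment counts by publishing day and by publishing time bin.

    `posts` is a list of (published_datetime, comment_count) pairs.
    """
    by_day, by_bin = defaultdict(list), defaultdict(list)
    for published, comment_count in posts:
        by_day[DAY_NAMES[published.weekday()]].append(comment_count)
        by_bin[time_bin(published)].append(comment_count)
    return dict(by_day), dict(by_bin)


if __name__ == "__main__":
    sample = [
        (datetime(2018, 4, 2, 9, 30), 12),  # Monday morning
        (datetime(2018, 4, 2, 20, 0), 3),   # Monday evening
        (datetime(2018, 4, 8, 9, 0), 40),   # Sunday morning
    ]
    by_day, by_bin = group_comment_counts(sample)
    print(by_day)
    print(by_bin)
```

Each list of counts in `by_day` (or `by_bin`) forms one group, so the groups can then be passed to a median test, for example `scipy.stats.median_test(*by_day.values())`.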

Results

Business Insights

Facebook Comments

Methodology

Results

Business Insights

YouTube

Methodology

Results

Business Insights