Difference between revisions of "ANLY482 Enigma Methodology"

Xxwei.2013 (talk | contribs) |

Xxwei.2013 (talk | contribs) |

||

| Line 63: | Line 63: | ||

Furthermore, most of the cleaning procedures need to check a certain column’s value for each row of the data. For example, in “df_soc_vt” data frame, a record could be considered as ‘error’ if its value under “col_name_A” column is “XXX”, so the system will add a comment message in the “Verification” column. | Furthermore, most of the cleaning procedures need to check a certain column’s value for each row of the data. For example, in “df_soc_vt” data frame, a record could be considered as ‘error’ if its value under “col_name_A” column is “XXX”, so the system will add a comment message in the “Verification” column. | ||

| − | + | [[File:Nrow.PNG]]<br/> | |

<i>Figure 5. R code to check a column value and assign comment message if necessary</i> | <i>Figure 5. R code to check a column value and assign comment message if necessary</i> | ||

| Line 70: | Line 70: | ||

Another useful function for data cleaning is “grepl”, which helps you to pick out those records contain the target information. For instance, we need to check whether any row contains the “ABC” under “wclass” column. | Another useful function for data cleaning is “grepl”, which helps you to pick out those records contain the target information. For instance, we need to check whether any row contains the “ABC” under “wclass” column. | ||

| + | [[File:Grepl.PNG]]<br/> | ||

<i>Figure 6. R code to check the partial value of a column</i> | <i>Figure 6. R code to check the partial value of a column</i> | ||

Revision as of 10:14, 23 April 2017

|

HOME |

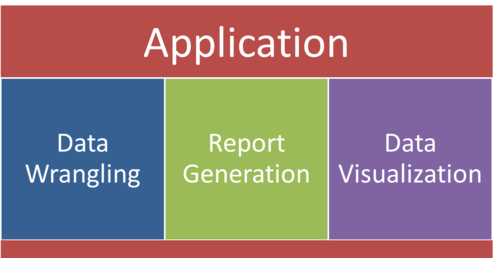

Application Overview

Data Wrangling

The data cleaning section aims to automate the current manual data verification process in the spreadsheet. The user should just upload the raw data file in excel format to our application portal respectively, and two data files will be generated automatically: one clean data set and one data set only contains those suspicious records with an additional column of the verify information. Additionally, once the error has been fixed, user is supposed to upload the “suspicious data set” to the same window again, the application will append these rows to the clean data file if the records could pass through the verification process. And this is an iterative process until all errors in the dataset have been cleaned and the dataset is readily available for report generation.

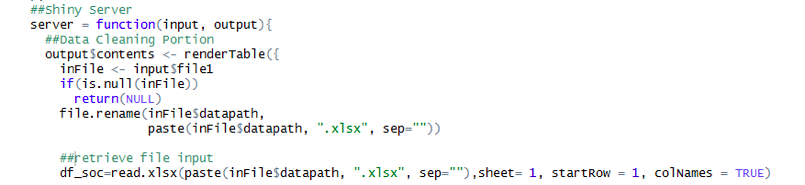

Firstly, as the raw data file’s size could be large, and to ensure the application performance, we get help from one R library “openxlsx”, which allows the system to read the massive data set in excel format more effectively than “xlse”:

Figure 1. R code to upload file from a relative path for data cleaning with “openxlsx”

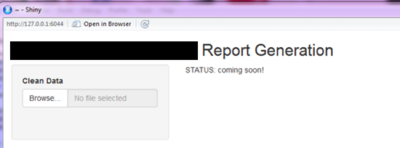

To have a better user experience, we use ShinyR as shown below to build a user interface for file uploading, and the ”read.xlsx” reads the relative path, which is more dynamic.

Figure 2. User interface for file uploading of data cleaning

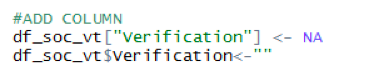

Once the data file has been uploaded successfully. We would perform data cleaning according to various business rules provided by KTPH. As required, we need to add a new column called “Verification” on existing data frame to store the comments for any suspicious record, and the default value is empty.

Figure 3.R code to create a new column of a data frame with default value as empty

Some of the procedures required to just keep a certain part of the data from the original raw data set, so we use a ‘filter’ function here from “dplyr” library. This function allows us to keep those records based on the particular values in the certain column only. For example, the R code below will give back a new data frame “df_soc_vt”, which contains records from “df_soc” with “visit_type” of AB, CD, EF or GH only.

Figure 4. R code to filter according to a column value

Furthermore, most of the cleaning procedures need to check a certain column’s value for each row of the data. For example, in “df_soc_vt” data frame, a record could be considered as ‘error’ if its value under “col_name_A” column is “XXX”, so the system will add a comment message in the “Verification” column.

Figure 5. R code to check a column value and assign comment message if necessary

This part of code will check through each row of “df_soc_vt” in a for-loop, and “nrow” is an effective way to calculate the total number of rows in the data frame; “paste” function is to write a content of the data frame cell.

Another useful function for data cleaning is “grepl”, which helps you to pick out those records contain the target information. For instance, we need to check whether any row contains the “ABC” under “wclass” column.

Figure 6. R code to check the partial value of a column

Lastly, the system will differentiate those clean and suspicious records, and save them in the different data frame accordingly to write them back in csv files. This could be done by checking the “Verification” column and R-built-in “write.table” function will write the data frame into a csv file effectively, as shown below:

Figure 12. R code to get clean data set and write back to a csv file

To summarize, these are the functions used frequently in data cleaning process. Depending on the business rules, we need to adjust them accordingly to meet various needs.