AY1516 T2 Team AP Data

Revision as of 02:02, 17 January 2016

Dataset provided by SGAG

Currently, SGAG relies only on the insights provided by Facebook Page Insights and SocialBakers to gauge the reception of its posts, and much of the data it has access to has not been analysed in depth.

SGAG has provided us with social media metric data extracted from its platforms, namely Facebook, Twitter and YouTube. These datasets give a generic, aggregated representation of SGAG's followers:

- Unique visitors, by day and month

- Post level insights: Total Impressions, Reach, Feedback

- Engagement Insights: Likes, Viewed, Commented

These aggregated metrics do not directly help us map out SGAG's social network, so we will need to crawl additional data through the API of each relevant social media platform.

Crawling

Initially, we planned to map out the social networks for SGAG's main platforms: Facebook, Twitter and Instagram. However, because very little user data can be extracted from Facebook, we decided to focus on Twitter and Instagram first, where social network data is much easier to obtain.

We will crawl the data through the Twitter and Instagram APIs. Using NodeXL, we are able to extract SGAG's Twitter social network data.

This gives us the following information:

- Follower/following relationships, represented as edges

- Names of Twitter accounts associated with SGAG and their followers

- Interactions with SGAG's posts (Favourites, Retweets and Replies)
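
To make this concrete, here is a minimal Python sketch of turning the crawled relationships into a weighted, directed edge list. The record shapes and the weighting rule (one point per follow, plus one per favourite, retweet or reply) are our own assumptions for illustration; NodeXL does not necessarily export the data in this form.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical record shapes, assumed for illustration only:
#   follows:      (follower, followed) pairs
#   interactions: (user, author, kind), kind in {"favourite", "retweet", "reply"}


def build_weighted_edges(
    follows: Iterable[Tuple[str, str]],
    interactions: Iterable[Tuple[str, str, str]],
) -> Dict[Tuple[str, str], int]:
    """Return {(source, target): weight}.

    Assumed weighting: each follow contributes 1, and each favourite,
    retweet or reply from source to target adds 1 more.
    """
    weights: Counter = Counter()
    for follower, followed in follows:
        weights[(follower, followed)] += 1
    for user, author, _kind in interactions:
        # The interaction kind is ignored here; all kinds count equally.
        weights[(user, author)] += 1
    return dict(weights)
```

For example, if `alice` follows SGAG and also retweets and replies to one of its posts, the edge `("alice", "SGAG")` ends up with weight 3. Counting all interaction kinds equally is the simplest choice; separate per-kind counts could be kept instead if retweets turn out to matter more than favourites.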

Due to the query rate limits of the Twitter and Instagram APIs, requesting the data will take some time. We have set aside one week for this.
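
A minimal sketch of how the crawl can pause instead of failing when the quota is used up, assuming a cursor-paginated endpoint and a fixed number of requests per time window. `fetch_page` is a hypothetical stand-in for the real API call, and the defaults of 15 requests per 15-minute window are assumptions that should be checked against each API's current documentation.

```python
import time
from typing import Callable, List, Optional, Tuple

# Hypothetical signature: given a cursor (None for the first page), return
# (items_on_this_page, next_cursor), where next_cursor is None on the last page.
FetchPage = Callable[[Optional[str]], Tuple[List[str], Optional[str]]]


def crawl_all(
    fetch_page: FetchPage,
    requests_per_window: int = 15,     # assumed quota per window
    window_seconds: float = 15 * 60,   # assumed window length
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> List[str]:
    """Follow cursors until exhausted, waiting out the current window
    whenever its request quota has been used up."""
    results: List[str] = []
    cursor: Optional[str] = None
    window_start = clock()
    used = 0
    while True:
        if used == requests_per_window:
            # Quota spent: sleep for whatever is left of this window,
            # then start counting a fresh window.
            remaining = window_seconds - (clock() - window_start)
            if remaining > 0:
                sleep(remaining)
            window_start = clock()
            used = 0
        items, cursor = fetch_page(cursor)
        used += 1
        results.extend(items)
        if cursor is None:
            return results
```

The `sleep` and `clock` parameters are injectable so that the pacing logic can be tested without actually waiting for fifteen minutes.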

After successfully crawling the data, we will load it into Gephi and begin our visualisation.
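
One way to hand the crawled network to Gephi is a CSV edge table, since Gephi's spreadsheet importer recognises the `Source`, `Target`, `Type` and `Weight` column headers. The sketch below assumes the edges are already held as a `{(source, target): weight}` mapping; the output file name in the comment is hypothetical.

```python
import csv
import io
from typing import Dict, TextIO, Tuple


def write_gephi_edges(weights: Dict[Tuple[str, str], int], out: TextIO) -> None:
    """Write a directed, weighted edge table using the Source/Target/Type/Weight
    headers that Gephi's spreadsheet (CSV) import understands."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["Source", "Target", "Type", "Weight"])
    # Sorting only makes the output deterministic; Gephi does not require it.
    for (source, target), weight in sorted(weights.items()):
        writer.writerow([source, target, "Directed", weight])


if __name__ == "__main__":
    # In-memory demo; in practice `out` could be a file opened with
    # open("edges.csv", "w", newline="") (hypothetical file name).
    buf = io.StringIO()
    write_gephi_edges({("alice", "SGAG"): 3, ("bob", "SGAG"): 1}, buf)
    print(buf.getvalue(), end="")
```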

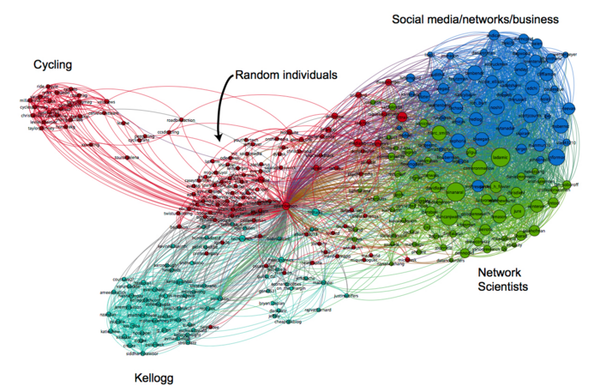

Above is an example of the kind of network visualisation we expect to produce for a social media platform.

Merging data

Since the data is provided per URL, we can match each URL in the data given by Skyscanner against the characteristics we crawled ourselves. This gives us a list of attributes mapped to the URL of each specific article.
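
The URL matching described above amounts to an inner join on the URL key. A minimal sketch, assuming both sources have already been loaded into dictionaries keyed by URL; the attribute names in the example are hypothetical.

```python
from typing import Any, Dict

Record = Dict[str, Any]


def merge_by_url(
    provided: Dict[str, Record],
    crawled: Dict[str, Record],
) -> Dict[str, Record]:
    """Inner-join two URL-keyed tables: keep only URLs present in both,
    combining their attributes into a single record per URL.

    If both sides share an attribute name, the crawled value wins.
    """
    merged: Dict[str, Record] = {}
    for url in provided.keys() & crawled.keys():
        merged[url] = {**provided[url], **crawled[url]}
    return merged
```

For example, a provided record `{"reach": 100}` and a crawled record `{"word_count": 300}` for the same URL merge into `{"reach": 100, "word_count": 300}`, while URLs that appear on only one side are dropped. Exact string matching assumes both sources write URLs identically; differences such as trailing slashes would need normalising first.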

Storing data

Our data needs to be saved in a convenient format so that we can use it as input for other analytic programs.

One option is to store the data in a database. This supports fast querying, easy export to formats that analytic software can read, and access both through a GUI and from code. Another option is to store the data in flat files, which are easy to move between systems. However, flat files are less accessible, because our code and programs would have to parse the information again each time.

Weighing these pros and cons, we will start with the database approach and make changes as the project continues.
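
As a sketch of the database approach, here is an example using SQLite through Python's built-in `sqlite3` module. The choice of SQLite and the `edges` table layout are assumptions for illustration. The export function shows how the same data can still be written out as a flat file when a tool needs one.

```python
import csv
import io
import sqlite3
from typing import Iterable, TextIO, Tuple


def store_edges(conn: sqlite3.Connection, edges: Iterable[Tuple[str, str, int]]) -> None:
    """Create the (hypothetical) edges table if needed and upsert the rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS edges ("
        " source TEXT NOT NULL,"
        " target TEXT NOT NULL,"
        " weight INTEGER NOT NULL,"
        " PRIMARY KEY (source, target))"
    )
    # Placeholders avoid quoting problems with unusual account names;
    # re-crawled edges replace the previously stored weight.
    conn.executemany(
        "INSERT OR REPLACE INTO edges (source, target, weight) VALUES (?, ?, ?)",
        edges,
    )
    conn.commit()


def export_edges_csv(conn: sqlite3.Connection, out: TextIO) -> None:
    """Dump the edges table to CSV for tools that cannot read SQLite directly."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["source", "target", "weight"])
    writer.writerows(
        conn.execute("SELECT source, target, weight FROM edges ORDER BY source, target")
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # a file path would persist the data
    store_edges(conn, [("alice", "SGAG", 3), ("bob", "SGAG", 1)])
    buf = io.StringIO()
    export_edges_csv(conn, buf)
    print(buf.getvalue(), end="")
```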