AY1617 T5 Team AP Methodology

Contents

- 1 DATA ANALYTICS LIFE CYCLE

- 2 LEADING BUSINESS DOMAIN (DISCOVERY)

- 3 BUSINESS PROBLEM (DISCOVERY)

- 4 DATA COLLECTION (DATA PREPARATION)

- 5 DATA PREPARATION

- 6 Initial Exploratory Data Analysis (Data Preparation)

- 7 DATA CLEANING (DATA PREPARATION)

- 8 DATA INTEGRATION (DATA PREPARATION)

- 9 INTERACTIVE EXPLORATORY DATA ANALYSIS (MODEL PLANNING)

- 10 ANALYSIS AND MODEL BUILDING (MODEL BUILDING)

- 11 MODEL VALIDATION (MODEL BUILDING)

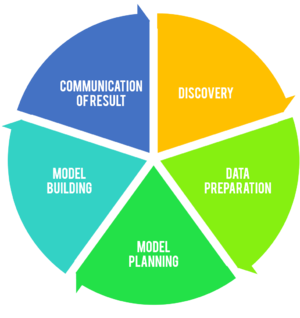

DATA ANALYTICS LIFE CYCLE

Our team adopts the data analytics life cycle as part of the planning process to guide us through the different stages of the project. The diagram below illustrates the key stages which our team has adopted.

LEADING BUSINESS DOMAIN (DISCOVERY)

Our team aims to understand SGAG by exploring the content it publishes across multiple channels, namely its social network services, website and media content.

BUSINESS PROBLEM (DISCOVERY)

Through interviews and conversations with the founder of the company, our team aims to understand SGAG’s business problems and translate them into analytics objectives.

DATA COLLECTION (DATA PREPARATION)

SGAG will be able to provide us with data on the uploaded video posts, generated from their Facebook and YouTube pages, with the variables listed above. Apart from what is given, we would also like to gather additional information about the videos, including the comments on the videos, the characters involved in each video, the video resolution, and the video type (original or reposted). As SGAG uploads videos of various categories and resolutions (for example, filmed using a phone versus a video camera), we would like to categorise them accurately to add further dimensions to the data set.

DATA PREPARATION

Some of the datasets provided are not structured in a format suitable for analysis (e.g. additional derived columns need to be added, tables need to be traversed).

Analysing the video specifications in relation to audience receptivity will additionally require open source tools such as ExifTool to extract EXIF data (camera metadata) such as video width and video height.
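The extraction step could be sketched as follows. This is a minimal sketch, assuming the ExifTool command-line program is installed and that the videos expose the `ImageWidth`, `ImageHeight` and `Duration` tags; the file name `clip.mp4` and the sample JSON are hypothetical and only illustrate the shape of ExifTool's `-json` output.

```python
import json
import subprocess

# Tag names as ExifTool reports them. Which tags a given video
# actually carries is an assumption, not something SGAG confirmed.
WANTED_TAGS = ("ImageWidth", "ImageHeight", "Duration")


def run_exiftool(path):
    """Call the exiftool CLI and return its JSON output as text."""
    cmd = ["exiftool", "-json"] + ["-" + tag for tag in WANTED_TAGS] + [path]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


def parse_exiftool_json(raw):
    """Turn ExifTool's JSON array into one small dict per file."""
    rows = []
    for entry in json.loads(raw):
        row = {"source_file": entry.get("SourceFile")}
        for tag in WANTED_TAGS:
            row[tag] = entry.get(tag)  # None when the tag is absent
        rows.append(row)
    return rows


# Hypothetical output for one file, used here instead of a real call.
sample = (
    '[{"SourceFile": "clip.mp4", "ImageWidth": 1280, '
    '"ImageHeight": 720, "Duration": "0:01:05"}]'
)
specs = parse_exiftool_json(sample)
```

Keeping the parser separate from the subprocess call means the parsing logic can be checked on saved output without ExifTool being present.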

Initial Exploratory Data Analysis (Data Preparation)

Before carrying out a more complete exploratory data analysis (EDA), we will first do a basic EDA to identify outliers that might later affect our analysis results.
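One common way to flag outliers in a basic EDA is the interquartile range (IQR) rule. The sketch below is an illustration only: the IQR rule, the multiplier `k=1.5` and the sample view counts are our assumptions, not a method or data confirmed for this project.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _median, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Made-up view counts with one obviously extreme video.
views = [1200, 1500, 1100, 1700, 1300, 1600, 1400, 98000]
flagged = iqr_outliers(views)
```

Flagged values are candidates for inspection rather than automatic removal, since an extreme value may be a genuine viral video.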

DATA CLEANING (DATA PREPARATION)

For data cleaning, we will go through the data sets to identify missing data, inconsistent values, inconsistencies between fields and duplicated data. For each row with missing value(s), we will check the number of missing fields to decide whether to omit the row completely or to predict a value for the missing field(s) using suitable techniques such as association rule mining and decision trees. Data extracted with ExifTool will also need its irrelevant fields removed. The required fields from the EXIF data of videos are:

- Video height

- Video length

- Video width
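The rule for rows with missing values, described above, can be sketched as a simple triage on the number of missing fields. This is a sketch under assumptions: the field names, the `max_missing=1` cut-off and the sample rows are hypothetical, and the actual imputation step (for example a decision tree) is not shown.

```python
# Hypothetical column names for the three required EXIF fields.
REQUIRED_FIELDS = ("video_height", "video_length", "video_width")


def count_missing(row, fields):
    """Count fields that are absent, None, or an empty string."""
    return sum(1 for f in fields if row.get(f) in (None, ""))


def triage_rows(rows, fields=REQUIRED_FIELDS, max_missing=1):
    """Sort rows into complete, to-impute, and to-drop buckets."""
    buckets = {"complete": [], "impute": [], "drop": []}
    for row in rows:
        missing = count_missing(row, fields)
        if missing == 0:
            buckets["complete"].append(row)
        elif missing <= max_missing:
            buckets["impute"].append(row)  # few gaps: predict the value
        else:
            buckets["drop"].append(row)    # too many gaps: omit the row
    return buckets


rows = [
    {"video_height": 720, "video_length": 65, "video_width": 1280},
    {"video_height": 720, "video_length": None, "video_width": 1280},
    {"video_height": None, "video_length": "", "video_width": 1280},
]
buckets = triage_rows(rows)
```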

DATA INTEGRATION (DATA PREPARATION)

For the videos uploaded on Facebook, we intend to collect 3 sets of data - the video data on Facebook, the data of the Facebook post that was used to share the video, and the data on video specifications (resolution, dimensions etc.). The Facebook video and post data can be integrated using the full ID (xxxxx_xxxxxx). The dataset on video specifications can be integrated using the last 16 digits of the ID (the digits after the underscore).
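The two join keys described above can be sketched as below. The column names (`id`, `video_key`, `plays`, `message`, `width`) and the sample ID are hypothetical; only the rule of joining on the full ID and on the digits after the underscore comes from the plan.

```python
def video_key(full_id):
    """Return the part of a full ID that follows the underscore."""
    _prefix, _sep, suffix = full_id.partition("_")
    return suffix


def integrate(videos, posts, specs):
    """Join video rows to post rows (full ID) and spec rows (ID suffix)."""
    posts_by_id = {p["id"]: p for p in posts}
    specs_by_key = {s["video_key"]: s for s in specs}
    merged = []
    for video in videos:
        row = dict(video)
        post = posts_by_id.get(video["id"], {})
        spec = specs_by_key.get(video_key(video["id"]), {})
        # Prefix post columns so they cannot overwrite video columns.
        row.update({"post_" + k: v for k, v in post.items() if k != "id"})
        row.update({k: v for k, v in spec.items() if k != "video_key"})
        merged.append(row)
    return merged


# Made-up ID in the xxxxx_xxxxxx shape, with 16 digits after the underscore.
videos = [{"id": "11111_2222222222222222", "plays": 50000}]
posts = [{"id": "11111_2222222222222222", "message": "New video"}]
specs = [{"video_key": "2222222222222222", "width": 1280, "height": 720}]
merged = integrate(videos, posts, specs)
```

Videos with no matching post or specification row are kept with only their own columns, which behaves like a left join on the video data.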

INTERACTIVE EXPLORATORY DATA ANALYSIS (MODEL PLANNING)

After integration, further analysis will be conducted on the data to surface the basic trends. This is to bring out insights which may not be clear from Facebook or YouTube individually but emerge when the two are viewed as a whole. It also includes summary statistics to highlight to our client the key high performers based on the available metrics.

Outliers in the data may also include viral videos which the client has curated. These videos may be grouped together for further analysis, along with videos that have interesting titles. One example of a pattern to look for is video posts that have higher video plays but lower “at least 30 seconds view” or “view to 95% length” counts.
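The pattern of high plays but low 30-second views could be flagged with a simple ratio, as sketched below. The column names, the `min_plays` and `max_ratio` thresholds and the sample figures are hypothetical and would need tuning against the real data.

```python
def low_retention(videos, min_plays=10000, max_ratio=0.2):
    """Flag videos with many plays but few views of at least 30 seconds."""
    flagged = []
    for video in videos:
        plays = video["plays"]
        if plays == 0 or plays < min_plays:
            continue  # too few plays for the ratio to be meaningful
        ratio = video["views_30s"] / plays
        if ratio <= max_ratio:
            flagged.append((video["title"], round(ratio, 3)))
    return flagged


# Made-up figures for three videos.
videos = [
    {"title": "A", "plays": 50000, "views_30s": 5000},
    {"title": "B", "plays": 40000, "views_30s": 20000},
    {"title": "C", "plays": 500, "views_30s": 10},
]
flagged = low_retention(videos)
```

The same function could be reused for the “view to 95% length” metric by swapping the numerator column.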

ANALYSIS AND MODEL BUILDING (MODEL BUILDING)

We will then proceed to analyse the various components and create analytical models using related variables. For our prediction models, suitable techniques, such as stepwise regression, can be carried out. We will then determine which variables are the best predictors using a threshold, and remove the other variables which do not produce the best results.
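As a rough illustration of selecting variables by a threshold, the sketch below screens candidate predictors by their Pearson correlation with the target. This is not stepwise regression itself but a simpler stand-in; the variable names, the `threshold=0.5` cut-off and the sample values are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (sd_x * sd_y)


def screen_predictors(columns, target, threshold=0.5):
    """Keep predictors whose absolute correlation meets the threshold."""
    kept = {}
    for name, values in columns.items():
        r = pearson(values, target)
        if abs(r) >= threshold:
            kept[name] = r
    return kept


# Made-up data: shares as the target, two candidate predictors.
shares = [10, 20, 30, 40, 50]
columns = {"length_s": [30, 60, 90, 120, 150], "upload_hour": [3, 1, 4, 1, 5]}
kept = screen_predictors(columns, shares)
```

A full stepwise procedure would instead add or remove variables one at a time while refitting the regression, typically judged by a criterion such as a p-value or AIC.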

MODEL VALIDATION (MODEL BUILDING)

Model validation ensures that the models meet the intended requirements with regard to the methods used and the results obtained. Ultimately, we aim to have models that address the business problem and provide results with fairly high accuracy. We will split our data sets into 2 parts - training data and testing data. After we have built our models using the training data (decision tree, linear/multilinear regression etc.), we need to check whether each model is overfitting or underfitting by running the test data through it and comparing the results on the test and training data. For regression models, we also need to check the R-squared values to assess how well the model fits the data.
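The split-and-compare check can be sketched with a one-variable linear regression. This is a minimal sketch under assumptions: the 70/30 split, the fixed seed, and the synthetic data are ours, and a real model would use more predictors and a statistics library.

```python
import random


def train_test_split(rows, test_fraction=0.3, seed=42):
    """Shuffle a copy of the rows and split it into train and test parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_line(points):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def r_squared(points, intercept, slope):
    """R-squared of the fitted line on the given points."""
    mean_y = sum(y for _, y in points) / len(points)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in points)
    ss_tot = sum((y - mean_y) ** 2 for _, y in points)
    return 1 - ss_res / ss_tot


# Synthetic data: a linear trend plus small deterministic noise.
points = [(x, 2 * x + 1 + ((x * 7) % 5 - 2)) for x in range(1, 21)]
train, test = train_test_split(points)
intercept, slope = fit_line(train)
r2_train = r_squared(train, intercept, slope)
r2_test = r_squared(test, intercept, slope)
```

A test R-squared far below the training R-squared suggests overfitting, while low values on both suggest underfitting.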