ISSS608 2018-19 T1 Assign Stanley Alexander Dion Data Preparation

Task 1

Dataset Background and Approach

The EEA data is available from 2013 until 2018 across 6 different observation stations. The first step is to join the observation data (the EEA files, which cover different year periods at different stations) with the station information (stored in metadata.csv). The join is made on AirQualityStationEoICode, the column common to both tables. This enables us to cross-check observation data across geographical locations using the longitude, latitude, and altitude of the stations. To achieve this, I am using the data wrangling packages of the tidyverse in R. An overview of the data is shown below.

`DateTimeEnd` rather than `DateTimeBegin` is chosen as the time indicator for our analysis, since I believe this timestamp marks the conclusion of the measuring period and therefore the true observation. To ensure consistent records throughout the data, we create a virtual data set containing the complete sequence of dates over the entire observation period for each station, onto which the observations are left outer joined. This allows us to detect the dates on which some or all stations have no observations.
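The two preparation steps described in this section can be sketched as follows. This is a minimal standard-library Python illustration, not the tidyverse code used in the analysis. The station codes, the `date` and `Concentration` fields, and both function names are hypothetical; only `AirQualityStationEoICode` comes from the data. The sketch also assumes one value per station per date, and it expands the calendar before joining the station information so that gap rows carry coordinates too.

```python
from datetime import date, timedelta

KEY = "AirQualityStationEoICode"  # join column named in the text

def expand_to_full_calendar(observations, start, end):
    """Left join observations onto a complete (station, date) grid.

    Dates without an observation are kept, with Concentration set to None.
    """
    days = [start + timedelta(days=i) for i in range((end - start).days + 1)]
    observed = {(o[KEY], o["date"]): o["Concentration"] for o in observations}
    codes = sorted({o[KEY] for o in observations})
    return [
        {KEY: code, "date": day, "Concentration": observed.get((code, day))}
        for code in codes
        for day in days
    ]

def join_station_info(rows, stations):
    """Left join: attach station metadata to every row by station code."""
    lookup = {s[KEY]: s for s in stations}
    return [{**row, **lookup.get(row[KEY], {})} for row in rows]

# Illustrative values only; real rows come from the EEA files and metadata.csv.
observations = [
    {KEY: "ST_A", "date": date(2018, 1, 1), "Concentration": 35.2},
    {KEY: "ST_A", "date": date(2018, 1, 3), "Concentration": 30.1},
    {KEY: "ST_B", "date": date(2018, 1, 2), "Concentration": 41.0},
]
stations = [
    {KEY: "ST_A", "Longitude": 23.30, "Latitude": 42.70, "Altitude": 550},
    {KEY: "ST_B", "Longitude": 23.40, "Latitude": 42.65, "Altitude": 580},
]

full = expand_to_full_calendar(observations, date(2018, 1, 1), date(2018, 1, 3))
joined = join_station_info(full, stations)

# Station/date pairs with no observation at all
gaps = [(r[KEY], r["date"]) for r in joined if r["Concentration"] is None]
```

Every station ends up with one row per date, so missing days appear as explicit rows rather than silently absent ones.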

Task 2

Dataset Background

The Citizen Air Quality data is available from January 2017 until September 2018. Unlike the official government data, it covers a huge number of pollution sensing stations. Each sensor is tagged with a geohash code, which is converted into longitude and latitude with the help of the R Geohash package.
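For readers unfamiliar with geohashes, the conversion is a short bit-interleaving procedure. The following is a minimal Python sketch of the standard decoding algorithm, not the R Geohash package used in the analysis; the function name `decode_geohash` is hypothetical.

```python
BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # standard geohash alphabet

def decode_geohash(code):
    """Return the (latitude, longitude) of the centre of a geohash cell."""
    lat_lo, lat_hi = -90.0, 90.0
    lon_lo, lon_hi = -180.0, 180.0
    is_lon = True  # bits alternate, starting with longitude
    for ch in code.lower():
        value = BASE32.index(ch)
        for shift in range(4, -1, -1):  # 5 bits per character, high bit first
            bit = (value >> shift) & 1
            if is_lon:
                mid = (lon_lo + lon_hi) / 2
                if bit:
                    lon_lo = mid
                else:
                    lon_hi = mid
            else:
                mid = (lat_lo + lat_hi) / 2
                if bit:
                    lat_lo = mid
                else:
                    lat_hi = mid
            is_lon = not is_lon
    return (lat_lo + lat_hi) / 2, (lon_lo + lon_hi) / 2

lat, lon = decode_geohash("ezs42")
```

For the commonly cited example code `ezs42`, this yields roughly latitude 42.605 and longitude -5.603. Longer codes narrow the cell further, so the decoded point is always the centre of a cell rather than an exact sensor position.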

To detect peculiar observations from the sensors, we expand the data into a complete time series, as we did for Task 1. Each sensor then has one row for each date.

Approach Introduction

To ease the pattern-finding process, we apply time-series clustering to find groups of sensors that share the same pollution pattern across the city. This way we can answer the question of which parts of the city show relatively high readings on certain occasions.

Since PM10 particles are larger, and since the official government data contains only PM10 observations, we focus on PM10 concentration. This allows a direct comparison between the two datasets. A further reason for omitting the PM2.5 observations is discussed in Task 3.

Approach Discussion

The clustering algorithm takes each time-series observation as a feature of each sensor location. As a result, the data has very high dimensionality: 8,249 columns. To reduce it, we employ the Mean Seasonal Time-Series representation, in which the data is represented by sets of moving averages, bringing the features down to 24 points.
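As an illustration of this kind of reduction, the sketch below computes a mean seasonal profile in plain Python: every value is averaged with the other values at the same position within the season. The season length of 24 (for example, hour of day) is an assumption made to match the 24 points mentioned above, and the function name is hypothetical; the analysis itself may have used a different routine.

```python
def mean_seasonal_profile(series, season_length=24):
    """Average all values that share the same position within the season.

    Missing readings (None) are skipped; a position with no data yields None.
    """
    sums = [0.0] * season_length
    counts = [0] * season_length
    for i, value in enumerate(series):
        if value is None:
            continue
        sums[i % season_length] += value
        counts[i % season_length] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]

# Synthetic example: three "days" of 24 readings, each day 10 units higher
series = [hour + 10 * day for day in range(3) for hour in range(24)]
profile = mean_seasonal_profile(series)
```

A series of any length collapses to `season_length` features per sensor, which is what makes clustering thousands of columns tractable.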

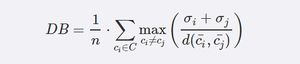

To choose the optimal number of clusters, we use the Davies-Bouldin Index, which relates the average distance of the elements of each cluster to their centroid (within-cluster scatter) to the distance between the centroids of two clusters (between-cluster separation). The lower the index, the better the clustering, since the clusters are more compact and better separated.
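To make the index concrete, here is a minimal Python sketch of the standard Davies-Bouldin definition with Euclidean distance. It takes clusters as lists of points and is meant only as an illustration; the function names and the toy clusters are hypothetical, and the analysis may have relied on a library implementation.

```python
import math

def centroid(points):
    """Coordinate-wise mean of a list of points."""
    n = len(points)
    return tuple(sum(p[d] for p in points) / n for d in range(len(points[0])))

def davies_bouldin(clusters):
    """Mean, over clusters, of the worst (scatter_i + scatter_j) / separation_ij."""
    centroids = [centroid(c) for c in clusters]
    # Scatter: average distance of each cluster's points to its own centroid
    scatter = [
        sum(math.dist(p, m) for p in c) / len(c)
        for c, m in zip(clusters, centroids)
    ]
    k = len(clusters)
    total = 0.0
    for i in range(k):
        total += max(
            (scatter[i] + scatter[j]) / math.dist(centroids[i], centroids[j])
            for j in range(k)
            if j != i
        )
    return total / k

# Two compact, well-separated clusters versus two spread-out, close clusters
tight = [[(0.0, 0.0), (0.0, 1.0)], [(10.0, 0.0), (10.0, 1.0)]]
loose = [[(0.0, 0.0), (0.0, 4.0)], [(2.0, 0.0), (2.0, 4.0)]]

db_tight = davies_bouldin(tight)
db_loose = davies_bouldin(loose)
```

The compact, well-separated pair scores lower than the spread-out pair. Choosing the number of clusters then amounts to running the clustering for several candidate values and keeping the one with the lowest index.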

Three clusters were chosen, since this gives the lowest value of the DB index. Judging from the seasonality of each cluster, the clusters formed are more or less homogeneous.

Task 3

In the third task, we join the meteorological information to the data from Task 1 and Task 2. This allows us to answer what kinds of factors affect PM concentrations within the city. Since each dataset has already been expanded to the full date range, we only need a left outer join between the corresponding data and the meteorological information.

Approach for Weather Correlation

In this task, we would like to find how meteorological information correlates with pollution concentration in Bulgaria. Because of the geographical nature of the data (the meteorological conditions of different regions of the city have different impacts on the concentration), we cannot use the global approach of ordinary regression for the analysis. Instead, we employ Geographically Weighted Regression (GWR) to learn local parameters fitted to the different regions of the city.
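The core idea of GWR can be sketched in a few lines: at each target location, fit a weighted least-squares regression in which nearby sites count more than distant ones. The Python example below is a simplified single-predictor version with a Gaussian kernel, written for illustration only. The field names (`east`, `north`, `x`, `y`), the bandwidth, and the synthetic data are all assumptions, and it is not the GWR implementation used in the analysis.

```python
import math

def local_fit(sites, target, bandwidth):
    """Weighted least squares of y on x, with Gaussian weights centred on target.

    Returns (intercept, slope) for the regression local to `target`.
    """
    weights = [
        math.exp(
            -0.5
            * (math.hypot(s["east"] - target[0], s["north"] - target[1]) / bandwidth) ** 2
        )
        for s in sites
    ]
    w_sum = sum(weights)
    x_bar = sum(w * s["x"] for w, s in zip(weights, sites)) / w_sum
    y_bar = sum(w * s["y"] for w, s in zip(weights, sites)) / w_sum
    sxy = sum(w * (s["x"] - x_bar) * (s["y"] - y_bar) for w, s in zip(weights, sites))
    sxx = sum(w * (s["x"] - x_bar) ** 2 for w, s in zip(weights, sites))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

# Synthetic sites: the predictor raises y in the west and lowers it in the east
sites = []
for k in range(4):
    x = float(k + 1)
    sites.append({"east": 0.0, "north": float(k), "x": x, "y": 2.0 * x + 5.0})
    sites.append({"east": 100.0, "north": float(k), "x": x, "y": -1.0 * x + 50.0})

west_intercept, west_slope = local_fit(sites, target=(0.0, 0.0), bandwidth=10.0)
east_intercept, east_slope = local_fit(sites, target=(100.0, 0.0), bandwidth=10.0)
```

A single global regression would blend the two opposite effects into one coefficient, whereas the local fits recover a slope close to 2 in the west and close to -1 in the east. Mapping such local coefficients is what lets the effect of a weather variable be compared across regions.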

The preparation steps also include removing observations with extremely high PM10 concentration readings (> 800 µg/m³), since we can assume the sensor was malfunctioning at the time. The learning algorithm then produces parameters that we can plot on the map to understand the effect of pressure, humidity, temperature, and wind speed in the various regions of Sofia.