ISSS608 2016-17 T1 Assign3 Linda Teo Kwee Ang Approach


APPROACH

Given both movement data and communication data, I will first analyse the movement data to identify any visitors of interest. Thereafter, the communication data of these visitors will be probed to draw links to other related persons.

Because of the sheer volume of data, data exploration is done on segments of the data, broken down by day, hour, location and specific ID groups. It is very important to track the development and analysis along the way so as not to miss any segments. Along the way, more and more data files (JMP, Tableau workbooks and extracts, and Gephi) were created. As part of housekeeping, I will list them in these wiki notes for my own reference.

Dataset

The dataset used comprised the following for the period of 6 to 8 Jun 2014 (Friday to Sunday):

- Movement data: this covered the movement and check-in records for users of the app devices. The movement data for all 3 days totalled 26,021,963 rows.

- In-app communication data: this covered communications between paying park visitors, communications between visitors and park services, and records of texts sent by users to external parties. The communication data for all 3 days totalled 4,153,329 rows.

Data processing required

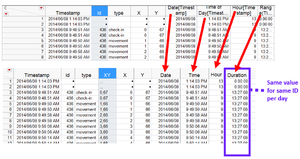

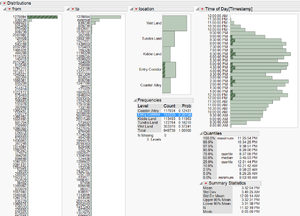

Three additional columns were added by right-clicking the Timestamp column and choosing New Formula Column\Date Time\[selection], with the selections Date, Time of Day and Hour. Another column was then created for the duration of visit per day for each ID, using Tables\Summary on Timestamp with Range (Timestamp) as the statistic, grouped by Date and ID. This creates a new worksheet. The Column Info of the Range column needs to be adjusted before it is merged back into the main data table using Tables\Update. Before this step, I usually lock the columns in the main data table to avoid unintended changes to the other columns. The X and Y columns were also combined by selecting them and using Cols\Utilities\Combine Cols. At this stage, the main data table looks like this (after sorting by Timestamp and then ID):
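As a cross-check outside JMP, the derived columns and the per-day visit duration can be sketched in Python. This is a minimal sketch rather than the JMP workflow itself. The record layout (visitor ID, timestamp string), the timestamp format and the function names are all assumptions made for illustration.

```python
from datetime import datetime

# Assumed timestamp format; adjust to match the actual movement files.
FMT = "%Y-%m-%d %H:%M:%S"


def add_date_parts(records):
    # Mirror the Date, Time of Day and Hour formula columns for (ID, timestamp) records.
    rows = []
    for vid, ts in records:
        t = datetime.strptime(ts, FMT)
        rows.append({"ID": vid, "Timestamp": t, "Date": t.date(),
                     "Time of Day": t.time(), "Hour": t.hour})
    return rows


def visit_duration_per_day(rows):
    # Equivalent of Range(Timestamp) grouped by Date and ID: last record minus first record.
    first, last = {}, {}
    for r in rows:
        key = (r["Date"], r["ID"])
        t = r["Timestamp"]
        first[key] = min(first.get(key, t), t)
        last[key] = max(last.get(key, t), t)
    return {key: last[key] - first[key] for key in first}


def update_main_table(rows, durations):
    # Join the summary back onto every row, similar in spirit to Tables\Update.
    for r in rows:
        r["Visit Duration"] = durations[(r["Date"], r["ID"])]
    return rows


# Hypothetical sample records
records = [(101, "2014-06-06 08:00:19"), (101, "2014-06-06 17:30:19"),
           (202, "2014-06-07 09:15:00")]
rows = add_date_parts(records)
rows = update_main_table(rows, visit_duration_per_day(rows))
```

Here visitor 101 gets a visit duration of 9 hours 30 minutes on 6 Jun, and visitor 202, with a single record, gets zero.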

Using Analyze\Tabulate, we can identify the IDs that appear on all 3 days, i.e. visitors who went to the park on all 3 days. For this purpose, the Date column is changed to the Ordinal modeling type. The result is then updated back into the main data table as the number of visit days.
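The same "number of visit days" logic can be sketched by counting the distinct dates on which each ID appears. The (ID, date) record layout and the function name are assumptions for this illustration.

```python
from datetime import date


def visit_days(rows):
    # rows: (visitor ID, date) pairs, possibly with many rows per ID per day.
    days = {}
    for vid, d in rows:
        days.setdefault(vid, set()).add(d)
    # Number of distinct days on which each ID was seen
    return {vid: len(ds) for vid, ds in days.items()}


# Hypothetical sample rows
rows = [(101, date(2014, 6, 6)), (101, date(2014, 6, 7)),
        (101, date(2014, 6, 8)), (101, date(2014, 6, 8)),
        (202, date(2014, 6, 8))]
counts = visit_days(rows)
all_three = sorted(vid for vid, n in counts.items() if n == 3)
```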

Duration is calculated for every movement record at each XY point for each visitor. This is done by sorting the movement rows chronologically within each visitor ID, then using the formula Dif([Time of day],1) to calculate the time difference between the current row and the previous row. Because this formula column is sensitive to sorting (re-sorting changes the row order and hence disrupts the calculation), its values were pasted into a new column, Individual timespan, and the formula Lag([Individual timespan],-1) was then applied to shift the values up by 1 row. In this way, the duration spent at each XY point is reflected in the same row as that point.
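The Dif-then-Lag(-1) step amounts to "next timestamp minus current timestamp" within each visitor. A minimal Python sketch, assuming rows of (ID, datetime, X, Y), is shown below. Unlike a plain column formula, it restarts at each visitor so that the last record of one ID is never differenced against the first record of the next. That last record has no following row and is left as None.

```python
from datetime import datetime
from itertools import groupby


def dwell_times(rows):
    # rows: (visitor ID, datetime, X, Y). Returns each row with the seconds spent at that point.
    out = []
    ordered = sorted(rows, key=lambda r: (r[0], r[1]))  # by ID, then chronologically
    for _, group in groupby(ordered, key=lambda r: r[0]):
        group = list(group)
        for cur, nxt in zip(group, group[1:] + [None]):
            # Same effect as Dif(...) followed by Lag(..., -1): next time minus current time
            secs = (nxt[1] - cur[1]).total_seconds() if nxt else None
            out.append((*cur, secs))
    return out


# Hypothetical sample rows
rows = [(1, datetime(2014, 6, 6, 8, 0, 0), 63, 99),
        (1, datetime(2014, 6, 6, 8, 0, 6), 63, 98),
        (1, datetime(2014, 6, 6, 8, 5, 6), 50, 80),
        (2, datetime(2014, 6, 6, 9, 0, 0), 0, 67)]
result = dwell_times(rows)
```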

Using Tableau for visualising movements

Tableau was used to visualise the movements of selected visitors in the park over time. This is done by importing the relevant data records and placing X on Columns and Y on Rows. The park map can be added in Tableau using Map\Background Images. When Timestamp is placed on the Pages shelf, a playback control appears on the right, and we can watch a "video" of the movement. It is recommended to set the Timestamp on Pages to minute level so that the playback is smooth. Different visitors can be viewed at the same time to test hypotheses such as:

- Are the visitors travelling together?

- Did the paths of the visitors cross? E.g. two seemingly unrelated visitors are suspected to be in cahoots.
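Besides watching the playback, the "did their paths cross?" question can be checked directly in the data. A minimal sketch, assuming rows of (ID, datetime, X, Y): timestamps are truncated to the minute, matching a minute-level Pages shelf, and the sketch lists the minutes in which two given visitors were recorded at the same XY point. The function names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def minute_positions(rows):
    # Map (ID, minute) -> set of XY points visited in that minute.
    pos = defaultdict(set)
    for vid, t, x, y in rows:
        pos[(vid, t.replace(second=0, microsecond=0))].add((x, y))
    return pos


def shared_points(rows, a, b):
    # Minutes in which visitors a and b were recorded at the same XY point.
    pos = minute_positions(rows)
    hits = []
    for (vid, minute), points in pos.items():
        if vid != a:
            continue
        common = points & pos.get((b, minute), set())
        if common:
            hits.append((minute, sorted(common)))
    return sorted(hits)


# Hypothetical sample rows
rows = [(1, datetime(2014, 6, 8, 11, 30, 5), 10, 20),
        (2, datetime(2014, 6, 8, 11, 30, 40), 10, 20),
        (1, datetime(2014, 6, 8, 11, 31, 0), 11, 20),
        (2, datetime(2014, 6, 8, 11, 31, 10), 12, 20)]
```

A long run of consecutive shared minutes would suggest the visitors were travelling together. An isolated hit suggests a brief crossing.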

I had tried to place the Tableau dashboards of movements for all 3 days together for comparison, but the timings could not be synchronised because Sunday, which contained many more movement records, played back more slowly than the other 2 days. This method was also tried using selected data; please refer to the Analysis section for details. https://www.youtube.com/watch?v=O78AJlH9RKQ (produced using Camtasia v9) <Ref: 3days.tscproj>

<Ref: DinoFunWorld_MovData\..>


Using JMP for Cluster Analysis on movement data

Additional columns were created using JMP to aggregate the movements at the various locations in the park. Only movement records where the time spent at a point exceeded the normal 6-second movement interval were considered. This differs from the alternative of counting check-ins at the attractions/rides, because the Park's website mentioned that check-ins may be used to check waiting times, so they may not reflect actual use of the attractions/rides. The only drawback of using movements > 6 seconds is that it would also count visitors who simply walked more slowly than average. Using JMP, I excluded rows where the time spent at an XY point was less than or equal to 0:00:06, then tabulated the remaining rows by ID and aggregated them by the ride type of each XY point.
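The filter-and-tabulate step can be sketched as follows. The sketch assumes rows of (ID, X, Y, seconds spent) and a hypothetical lookup from XY point to ride/facility type. It counts qualifying records per type. Summing the seconds instead would be an equally valid design choice, so the counting rule here is an assumption.

```python
from collections import Counter, defaultdict


def category_totals(rows, point_category, threshold=6):
    # rows: (ID, X, Y, seconds spent at that point); point_category: (X, Y) -> ride type
    totals = defaultdict(Counter)
    for vid, x, y, secs in rows:
        if secs is None or secs <= threshold:
            continue  # drop ordinary movement steps of 6 seconds or less
        category = point_category.get((x, y))
        if category is not None:
            totals[vid]["Total " + category] += 1
    return totals


# Hypothetical lookup and rows, for illustration only
cats = {(10, 10): "Thrill Rides", (20, 20): "Food"}
rows = [(1, 10, 10, 6.0), (1, 10, 10, 600.0), (1, 20, 20, 120.0),
        (2, 10, 10, 30.0), (2, 99, 99, 50.0)]
t = category_totals(rows, cats)
```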

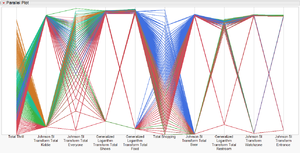

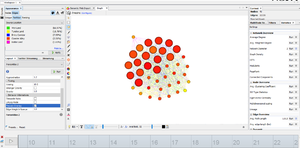

The aggregated variables comprised Total Thrill Rides, Total Kiddie Rides, Total Rides for Everyone, Total Beer, Total Food, Total Shopping, Total Restroom, Total Watchzone and Total Entrance. Pairwise correlations showed moderate positive correlation between Total Rides for Everyone and Total Thrill Rides (0.69), Total Restroom and Total Thrill Rides (0.61), and Total Restroom and Total Rides for Everyone (0.57). The remaining correlation coefficients were below 0.5. All variables other than Total Thrill Rides and Total Shopping were transformed using Johnson Sl or GLog. Of the clustering methods tried, complete-linkage hierarchical clustering gave the highest Cubic Clustering Criterion, at 92.16, with 5 clusters.

While cluster analysis is not specifically required for this assignment, it was performed to gain an understanding of the general preferences of the visitors in the park.
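To make the two techniques concrete, here is a minimal pure-Python sketch of Pearson correlation and complete-linkage agglomerative clustering. It is not a reproduction of the JMP analysis. It omits the Johnson Sl/GLog transformations, any standardisation and the Cubic Clustering Criterion, and the function names and sample points are hypothetical. In complete linkage, the distance between two clusters is the distance between their farthest members, and the two closest clusters are merged repeatedly.

```python
import math


def pearson(xs, ys):
    # Pearson correlation coefficient of two equal-length sequences.
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def complete_linkage(points, k):
    # Start with one cluster per point and merge until only k clusters remain.
    k = max(k, 1)
    clusters = [[i] for i in range(len(points))]

    def dist(c1, c2):
        # Complete linkage: farthest pair of members across the two clusters
        return max(math.dist(points[i], points[j]) for i in c1 for j in c2)

    while len(clusters) > k:
        pairs = ((i, j) for i in range(len(clusters)) for j in range(i + 1, len(clusters)))
        a, b = min(pairs, key=lambda p: dist(clusters[p[0]], clusters[p[1]]))
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]  # b > a, so index a is unaffected
    return clusters


# Hypothetical visitor profiles in 2 dimensions
points = [(0, 0), (0, 1), (10, 10), (10, 11), (50, 50)]
clusters = complete_linkage(points, 3)
```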

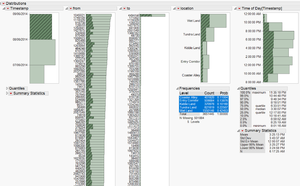

Using JMP and Tableau to process communication data

JMP and Tableau were used to process and preview the 3 days’ worth of communication data:

- To identify high-volume communications and understand their likely purpose or intent

- To tabulate the data into an aggregated format for visualisation in Gephi, for example by aggregating the interactions between IDs to derive edge weights. This can also be done inside Gephi itself, but the high volume of data slowed Gephi down, so the processing was done in JMP instead.

- To retrieve communication data of specific IDs for visualisation using Gephi.
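The aggregation into weighted edges can be sketched in Python. The sketch assumes one (Source, Target) pair per communication record. It writes node and edge tables using the Id/Label and Source/Target/Type/Weight column headers that Gephi's spreadsheet import recognises. The function name is hypothetical.

```python
import csv
import io
from collections import Counter


def gephi_tables(messages):
    # messages: (Source ID, Target ID) pairs, one per communication record.
    weights = Counter(messages)  # repeated pairs become the edge weight
    nodes = sorted({n for pair in weights for n in pair}, key=str)

    node_buf, edge_buf = io.StringIO(), io.StringIO()
    nw = csv.writer(node_buf)
    nw.writerow(["Id", "Label"])
    for n in nodes:
        nw.writerow([n, n])

    ew = csv.writer(edge_buf)
    ew.writerow(["Source", "Target", "Type", "Weight"])
    for (src, tgt), w in sorted(weights.items(), key=lambda kv: tuple(map(str, kv[0]))):
        ew.writerow([src, tgt, "Directed", w])
    return node_buf.getvalue(), edge_buf.getvalue()


# Hypothetical sample communications
messages = [("101", "202"), ("101", "202"), ("202", "external")]
nodes_csv, edges_csv = gephi_tables(messages)
```

The two strings can be saved as the nodes and edges CSV files and imported in Gephi's Data Laboratory.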

Initially, I used Tableau to visualise the sequence of the communication flow by tabulating Source and Target in a graph. Subsequently, I learnt that a timeline can be added in Gephi (the method is described below). However, I did not find it effective and went back to using Tableau.

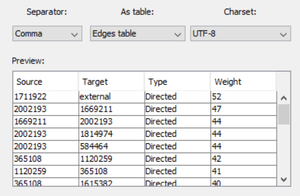

Using Gephi to visualise communication data

Gephi was used to visualise the communication data for individual days. To start, the data for 8 Jun (Sun) was visualised, since that was the eventful day. As the critical time is around 11:30am, I "shortlisted" the communication data to this timeframe. Note that Gephi (and the entire system) may hang if too much data is loaded, and a massive lump of nodes and edges is not meaningful to look at anyway.

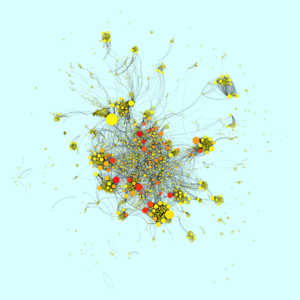

My first idea was to create 2 Gephi visualisations to compare the periods before and after the opening of Creighton Pavilion. Two data sets were prepared: 11:15am to 11:29am, and 11:30am to 11:45am. My aim was to look for a spike in communications in the 2nd set. Only communication data sent from Wet Land was used, both to test the hypothesis that visitors quickly disseminated the information (about the crime) after visiting the Pavilion, and to reduce the size of the data further. The respective node and edge files were prepared and uploaded in separate sessions. The Fruchterman Reingold layout (speed 50) was applied first, then ForceAtlas 2 (scaling 5), followed by running the Network Diameter statistic (which also computes Betweenness Centrality). Node size was then adjusted based on Degree, with a minimum of 5 and a maximum of 100. Node colour was adjusted using Betweenness Centrality, from yellow to red, and edge colour was adjusted using Weight. Thereafter, overlapping edges were moved manually so that the groups are distinct from one another. In Preview, edge opacity was set to 60 and edge colour to Original. The graph was then exported using the Sigma.js template. Note to self: the HTML file should be opened in Firefox instead of Chrome, because Chrome's browser security restrictions prevent it from displaying the chart.
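Preparing the two comparison sets can be sketched as a simple filter. The record layout (datetime, Source, Target, Location) and the function name are assumptions. "11:15am to 11:29am" and "11:30am to 11:45am" are read as inclusive of the whole final minute, which is also an assumption.

```python
from datetime import date, datetime, time


def split_windows(messages, location="Wet Land", day=date(2014, 6, 8)):
    # messages: (datetime, Source, Target, Location). Keep only those sent from `location` on `day`.
    pre, post = [], []
    for ts, src, tgt, loc in messages:
        if loc != location or ts.date() != day:
            continue
        t = ts.time()
        if time(11, 15) <= t < time(11, 30):    # 11:15am to 11:29am
            pre.append((src, tgt))
        elif time(11, 30) <= t < time(11, 46):  # 11:30am to 11:45am, read as up to 11:45:59
            post.append((src, tgt))
    return pre, post


# Hypothetical sample communications
msgs = [(datetime(2014, 6, 8, 11, 20), "1", "2", "Wet Land"),
        (datetime(2014, 6, 8, 11, 29, 59), "1", "3", "Wet Land"),
        (datetime(2014, 6, 8, 11, 30), "2", "external", "Wet Land"),
        (datetime(2014, 6, 8, 11, 45, 30), "3", "4", "Wet Land"),
        (datetime(2014, 6, 8, 11, 35), "5", "6", "Kiddie Land"),
        (datetime(2014, 6, 7, 11, 35), "7", "8", "Wet Land")]
pre, post = split_windows(msgs)
```

Comparing `len(pre)` with `len(post)` gives a quick check for the expected spike before the two sets are built into Gephi node and edge files.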

A rectangle selection can be used to select individual groups and copy them to a new workspace for further probing. This allows more specific checks and gives a clearer view, as the node labels can be seen at better resolution. The Drag tool is also useful, as its circle size can be increased to drag more nodes at the same time. Note that in Gephi, the right mouse button moves the page while the left mouse button selects.

Reference was made to this YouTube video on how to visualise Gephi with a timeline: https://www.youtube.com/watch?v=hKYku8b60Dc. The Datetime is first uploaded inside the edges CSV in String format. While still in the Data Laboratory view, click the Merge button and select Datetime. Use the Merge Strategy “Create time interval”, then click Enable Timeline. The Default End Time must be left blank. A small settings button at the bottom left of the screen can be used to change the time format. However, in my attempt the nodes remained on the screen and only the edges changed when I shifted the timeline. More research will be needed to understand how this works. As a result, I fell back on using Tableau.

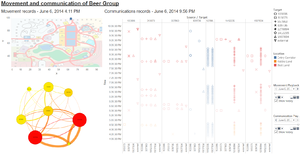

Using Tableau dashboards to visualise movement, communication (who/where/when) and network graph

I personally found it useful to have everything on one page, e.g. a Dashboard, so that I can "see all" concurrently for a more complete analysis. As Tableau Dashboards are fairly easy to build, I did my analysis of the different groups of interest in a dashboard. In the dashboard, I can run the playback to see the movement of the visitors, refer to their communications at the corresponding times, and also see their network graph (pasted as an image). Unfortunately, the dashboard is not interactive, as I have yet to match the IDs and timings across the different data sources effectively.
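One way to match IDs and timings across the two data sources is an "as-of" lookup. For each communication, it finds the sender's last recorded position at or before the message time. The sketch below is hypothetical and is not how the dashboard was built. It assumes movement rows of (ID, datetime, X, Y) and communication rows of (datetime, Source, Target).

```python
from bisect import bisect_right
from datetime import datetime


def build_tracks(movements):
    # movements: (ID, datetime, X, Y) -> per-ID sorted lists of times and positions
    tracks = {}
    for vid, t, x, y in sorted(movements, key=lambda r: (r[0], r[1])):
        times, points = tracks.setdefault(vid, ([], []))
        times.append(t)
        points.append((x, y))
    return tracks


def position_at(tracks, vid, when):
    # Last known XY of a visitor at or before `when`, or None if unknown.
    if vid not in tracks:
        return None
    times, points = tracks[vid]
    i = bisect_right(times, when)
    return points[i - 1] if i else None


def annotate_messages(messages, tracks):
    # messages: (datetime, Source, Target). Attach the sender's last known position.
    return [(ts, src, tgt, position_at(tracks, src, ts)) for ts, src, tgt in messages]


# Hypothetical sample movements
moves = [(1, datetime(2014, 6, 8, 11, 0), 10, 20),
         (1, datetime(2014, 6, 8, 11, 10), 15, 25),
         (2, datetime(2014, 6, 8, 11, 5), 50, 60)]
tracks = build_tracks(moves)
```

Once each message carries a position, the movement and communication views can be filtered on the same ID and time.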

Using a Sankey diagram in Power BI

I also tried different methods of visualising network data. In Power BI, custom visuals can be added from the Custom Visuals Gallery at https://app.powerbi.com/visuals/. In particular, the Sankey visual published by Brad Sarsfield was found to be applicable to the communication data, and a time filter can be added to it.

When using Power BI, remember to click Get Data, then go to Edit to adjust the format of each column, then click Close and Apply. To apply a custom visual, click on the … under Visualisations and select Import a custom visual. The Sankey with Labels custom visual is rather easy to use: drag Source and Target into the respective fields, add Location to Weight, then add Time to Report level filters. Under Format, select Link Labels, then turn Names on.