Teppei Syokudo - Improving Store Performance: ESK Data Analysis Methodology

| Home | Product Portfolio Analysis | Improving Store Performance | Project Management | Documentation | The Team |

Data Exploration

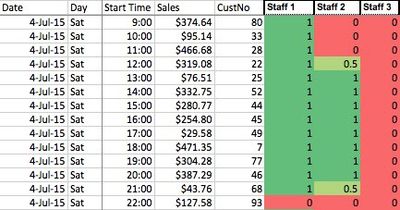

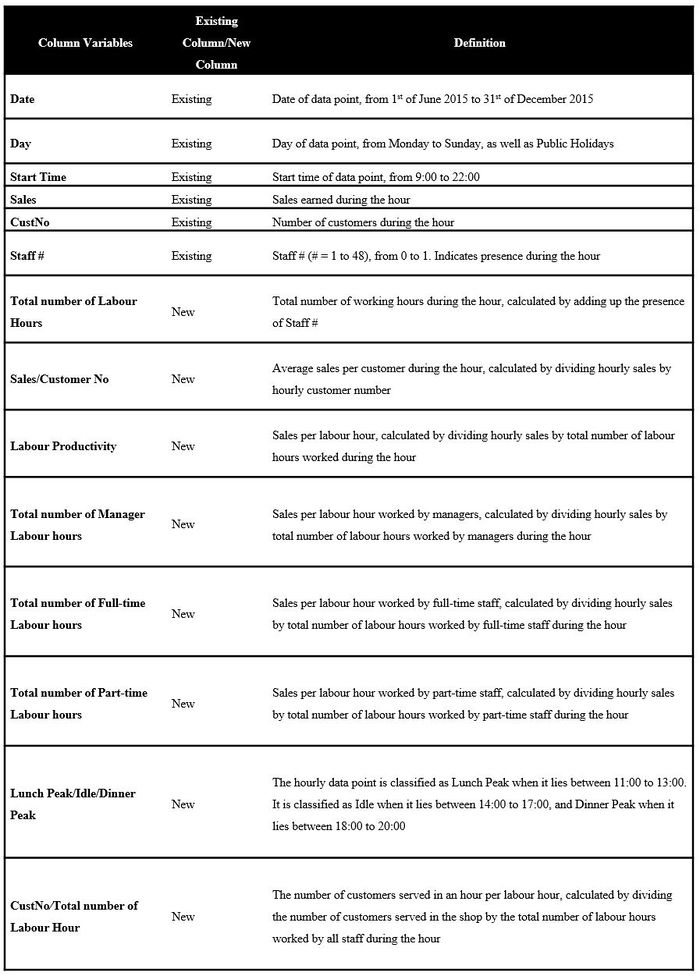

Our dataset comprises of 48 staff and their hourly labour records from 1st June 2015 to 31st December 2015. As the shop is opened seven days a week from 9:00am in the morning to 23:00 at night, we collected 2,996 hours’ worth of data over 214 days. The “Date” column indicates the date for each hourly data point, and the “Day” column indicates the corresponding day, from Monday to Sunday, as well as Public Holidays.

We allocated a number from 0 to 1 to each staff for his presence during each hour. For example, if the staff was present the full hour, he will be allocated a “1”. He will be allocated “0” for the hours that he was not present and “0.5” for the hours that he was present for only half the time.

Hourly store performance figures such as store sales (“Sales” column) and customer numbers (“CustNo” column) were also included after examining data from the POS system.

In addition, to test some of the hypotheses that we have thought of, we collected data on the staff that was manning the cash register for each hour (cashier data). The cashier data was then prepared in the same format as above. Cashier data was available from 20th October to 31st December.

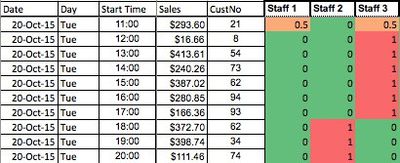

Both datasets were then imported into JMP using the “Open…” function.

After toggling the worksheet setting such that the column headers are correctly reflected, we can proceed with importing the data using the “Import” function.

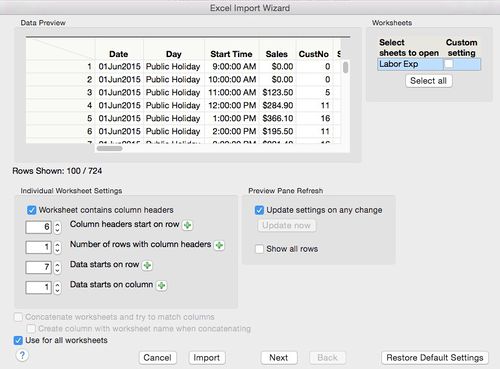

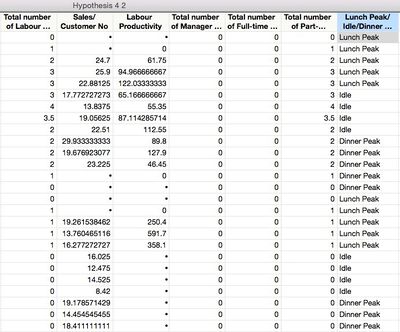

The following data table was then generated in JMP for further analysis.

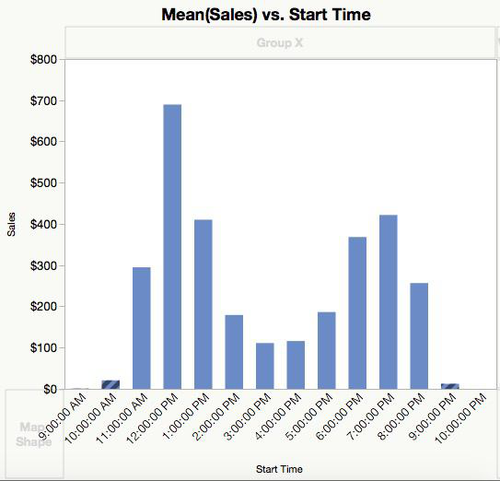

Time of Day Effect. We were provided with hourly and daily data for both sales and labour within a six-month period. In exploring the data given, we found that there was a bimodal pattern in the sales transactions.

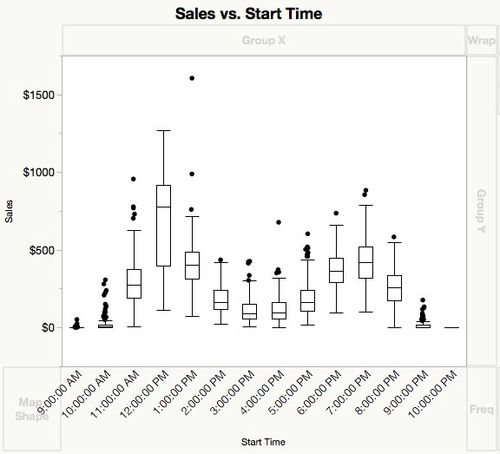

Mean sales per hour peaked at two times of the day namely, 11:00 to 13:00 and 18:00 to 20:00. We attribute the peak periods to the lunchtime and dinnertime crowds respectively. The other time periods are classified as idle time. We realise that this “Time of the Day” effect will have to be taken into account when evaluating shop productivity in our hypotheses so that sales toward a particular factor would not be over or under attributed. We label 11:00 to 13:00 as Lunch Peak, 14:00 to 17:00 as Idle, and 18:00 to 20:00 as Dinner Peak. As for time periods 9:00 to 10:00 and 21:00 to 22:00, we have decided to exclude them from our analysis due to their insignificant contribution to shop sales. Furthermore, with respect to Figure 2.1, the 75 percentile of sales for these timings are usually close to zero which implies that sales during the first two and last two hours of the day are usually zero.

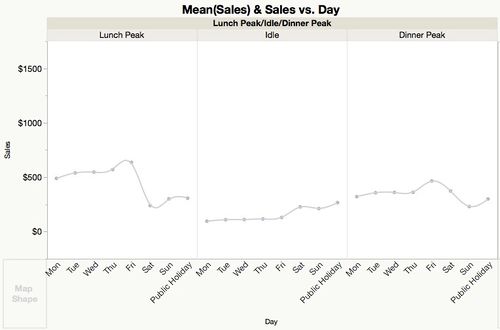

Day of Week Effect. We also found the mean sales for every day of the week. Accounting for the “Time of the Day” effect, we find the mean sales each day of the week. The day of the week also accounts for public holidays as crowds may be higher during public holidays. Similar to the “Time of the Day” effect, the chart below shows that there is also a “Day of the Week” effect.

During Lunch Peak and Dinner Peak, the store tends to achieve higher sales average on Fridays, with Saturdays experiencing lower mean sales. However during Idle periods, Saturdays and Public Holidays tend to experience relatively better mean sales compared to Idle periods on other days in the week.

Data Cleaning

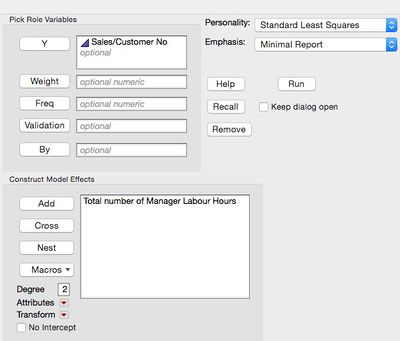

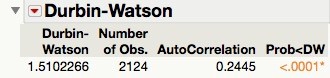

Removing Autocorrelation. The “Time of the Day” and the “Day of the Week” effects that we mentioned above indicated that the data might have significant autocorrelation due to its nature as a time-series. Significant autocorrelation would render the regression analysis inaccurate and hence we tested the dataset for autocorrelation using the Durbin-Watson test. We ran a sample regression analysis using the Fit Model function with the dependent variable Y as Sales/Customer Number and independent variable X as the Total number of Manager Labour Hours.

The results of the Durbin-Watson test is shown below:

With p-value less than 0.05, we know that autocorrelation is present in our data. The Durbin-Watson value of 1.51 tells us that there is some positive correlation between values of the dependent variable, Sales/Customer Number, across different time periods.

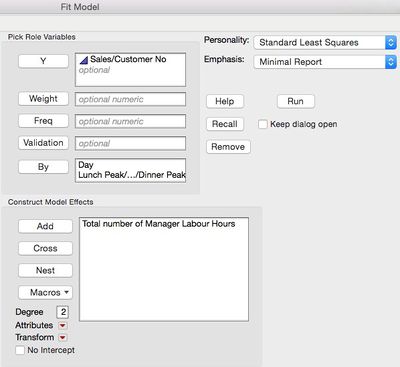

In order to remove autocorrelation, we opted to partition the dataset into eight different days (including Public Holidays) and three different time periods in a day so as to account for both the “Day of the Week” and the “Time of the Day” trends. To do this in the Fit Model, we used the By function with the variables Day and Lunch Peak/Idle/Dinner Peak.

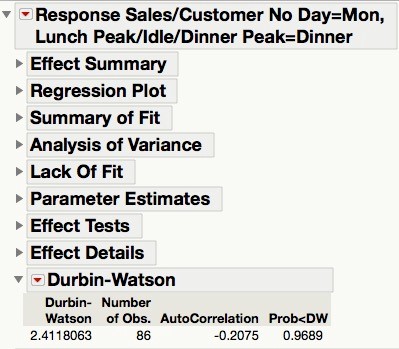

The results of the Durbin-Watson test this time is:

The p-value now is greater than 0.05, showing that the degree of autocorrelation in the partitioned data is no longer significant. Thus, for our subsequent analyses, we would run the analyses with the partitioned data instead of the full data set in order to account for the “Time of the Day” and “Day of the Week” effects.

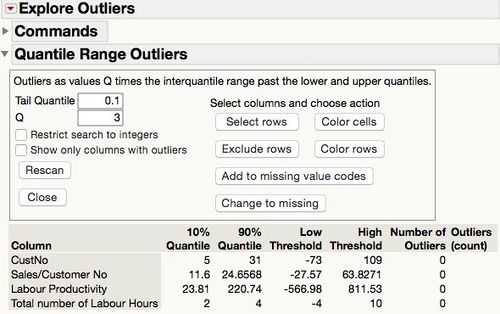

Removing Outliers. Before regression analysis can be done, we must also ensure that there are no outliers that might skew the results of the analysis. We used the JMP Remove Outlier function to search for outliers.

The definition of an outlier in this case is any point that is 3 times the interquartile range of a certain variable. We did not identify any outliers for the three constituent elements of shop productivity.

Adding Calculated Columns. In order to test our hypotheses, we had to create new columns that were not included in the data set. All the column variables are indicated in the table below.

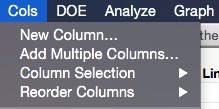

We added the new columns into the JMP table using the “Add new column” function. For example, we wanted to add a calculated column for “Total number of Labour Hours”

Step 1: Click Cols in the Menu Bar, then select New Column.

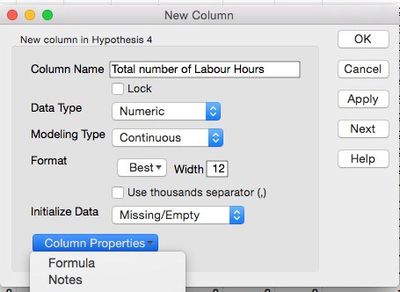

Step 2: Under Column Properties, select Formula.

Step 3: Under Statistical, click on Sum, and then select Staff 1 to Staff 5

Step 4: Click Apply

We repeated this process for all the new columns to obtain respective calculated columns.