ANLY482 AY2017-18 T2 Group 17 Project Overview Methodology


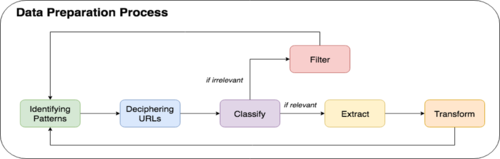

Data Preparation process

SMU Libraries provided us with data logs and their metadata, but little information about the URL column, which holds much of the information our analysis needs. We observed that the URL column encodes the database, the type of document, the document ID and the chapter of an e-book. Each database generates URLs for its pages in a different structure, and information available in one database's URLs may be missing from another's, so each database requires its own data retrieval method.
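Because each database needs its own retrieval method, one possible structure is to dispatch on the URL's hostname to a database-specific parser. The sketch below assumes JSTOR URLs are served from `www.jstor.org`; the parser functions, the `PARSERS` table and the returned field names are hypothetical illustrations, not the project's actual code.

```python
from urllib.parse import urlparse


def parse_jstor(path: str) -> dict:
    """Hypothetical JSTOR parser: take the last '/stable/' segment as the doc ID."""
    segments = [s for s in path.split("/") if s]
    fields = {"database": "JSTOR"}
    if segments and segments[0] == "stable":
        fields["doc_id"] = segments[-1]
    return fields


def parse_unknown(path: str) -> dict:
    """Fallback: keep the raw path segments for manual inspection."""
    return {"database": None, "segments": [s for s in path.split("/") if s]}


# Hypothetical mapping from hostname to the parser written for that database.
PARSERS = {
    "www.jstor.org": parse_jstor,
}


def parse_url(url: str) -> dict:
    """Route a logged URL to the parser for its database."""
    parsed = urlparse(url)
    parser = PARSERS.get(parsed.hostname, parse_unknown)
    return parser(parsed.path)
```

Adding a new database then means writing one parser and registering its hostname, without touching the others.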

In preparing the data, we formulated a systematic 5-step approach: identifying URL patterns, deciphering the URLs, classifying them by relevancy, extracting the data or filtering it out, and finally transforming the data to suit our analytical needs.

1. Identifying patterns

Using Charles Proxy, a web debugging proxy, we attempted to replicate how the proxy log data is generated: we browsed the database using the ‘Doc ID’ values taken from the dataset and clicked on the various links available in each e-book. In doing so, we formed expectations about the various user interactions and mapped each one to the type of URL it generates. When identifying common patterns in the URLs, we note the commonly used directories.
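Common directories can also be surfaced by counting the first path segment of every logged URL. This is a minimal sketch assuming the URLs are available as a list of strings; `first_directory` and `directory_counts` are hypothetical helper names.

```python
from collections import Counter
from urllib.parse import urlparse


def first_directory(url: str) -> str:
    """Return the first path segment, e.g. '/assets/' for '/assets/logo.png'."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return f"/{segments[0]}/" if segments else "/"


def directory_counts(urls):
    """Tally first-level directories; most_common() lists the frequent ones first."""
    return Counter(first_directory(u) for u in urls)
```

Calling `directory_counts(urls).most_common(10)` gives a shortlist of directories to examine with Charles Proxy.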

Non-Material Rows

Among the non-material rows, such as the example above, ‘/assets/’ was identified as a commonly used directory.

Material Rows

For the material rows above, ‘/stable/’ is the common directory. However, these URLs differ because users can either download the document or view the material online. For online viewing, the identifier is the directory after ‘10.XXXX/’, whereas for downloads, it is the directory after ‘/pdf/10.XXXX/’.
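The two material-row shapes can be told apart with one regular expression in which the ‘pdf/’ segment is optional. This sketch assumes the ‘10.XXXX’ prefix is always ‘10.’ followed by digits, and that download links may end in ‘.pdf’ (stripped so both access modes yield the same ID); `extract_material` is a hypothetical name.

```python
import re

# Assumed shapes, following the description above:
#   online view: .../stable/10.XXXX/<id>
#   download:    .../stable/pdf/10.XXXX/<id>
STABLE_RE = re.compile(r"/stable/(?P<pdf>pdf/)?10\.\d+/(?P<doc_id>[^/?#]+)")


def extract_material(url: str):
    """Return the document ID and access mode, or None for non-material URLs."""
    match = STABLE_RE.search(url)
    if match is None:
        return None
    doc_id = match.group("doc_id")
    # Assumption: download links may carry a '.pdf' suffix; drop it.
    if doc_id.endswith(".pdf"):
        doc_id = doc_id[: -len(".pdf")]
    access = "download" if match.group("pdf") else "online"
    return {"doc_id": doc_id, "access": access}
```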

2. Deciphering the URL

Each sample URL extracted is opened in a browser to see which page it loads.
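To keep the manual browser checks manageable, a few URLs can be drawn from each first-level directory. This is a sketch under the assumption that grouping by the first path segment is a reasonable proxy for URL pattern; `sample_per_directory`, `k` and `seed` are hypothetical.

```python
import random
from collections import defaultdict
from urllib.parse import urlparse


def sample_per_directory(urls, k=3, seed=0):
    """Pick up to k URLs from each first-level directory for manual checks."""
    groups = defaultdict(list)
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        key = f"/{segments[0]}/" if segments else "/"
        groups[key].append(url)
    rng = random.Random(seed)  # fixed seed so the same sample can be re-checked
    return {
        key: rng.sample(members, min(k, len(members)))
        for key, members in groups.items()
    }
```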

Non-Material Rows

Material Rows

3. Classifying by relevancy

Based on the content displayed, each URL accessed in the log is deemed either relevant or irrelevant to our analysis. For the non-material row above, the JSTOR logo is displayed, suggesting that the ‘/assets/’ directory holds images and front-end files (CSS, JavaScript), which we do not need; such rows are therefore classified as irrelevant. For the material row above, the content shown is the actual PDF file of the e-book, which is useful for our analysis.
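Once a directory has been checked in the browser, the verdict can be recorded as a rule so every log row is classified the same way. This is a minimal sketch; the `RULES` table, the labels and the ‘unreviewed’ fallback are hypothetical choices, not the project's actual scheme.

```python
from urllib.parse import urlparse

# Rules distilled from the manual checks: directory prefix -> label.
# '/assets/' served logos, CSS and JavaScript; '/stable/' served document content.
RULES = {
    "/assets/": "irrelevant",
    "/stable/": "material",
}


def classify(url: str) -> str:
    """Label a logged URL by the first rule whose prefix matches its path."""
    path = urlparse(url).path
    for prefix, label in RULES.items():
        if path.startswith(prefix):
            return label
    return "unreviewed"  # no rule yet: send back for a manual browser check
```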

4. Extracting or filtering data

Based on data relevancy, we either extract the information into a specific column or filter the entire record out of our analysis. For non-material rows, since no URL in the ‘/assets/’ directory is needed for our analysis, these rows are filtered out programmatically. The material rows, in contrast, are extracted for further transformation and analysis.
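A sketch of this step, assuming each log record is a dict with a `url` key (a hypothetical representation; the real pipeline may use a DataFrame or SQL table). ‘/assets/’ rows are dropped and material rows gain a `doc_id` column; rows matching neither pattern are also dropped here, although in practice they would go back for review.

```python
import re
from urllib.parse import urlparse

# Assumed '/stable/' URL shape; the 'pdf/' (download) segment is optional.
STABLE_RE = re.compile(r"/stable/(?:pdf/)?10\.\d+/(?P<doc_id>[^/?#]+)")


def filter_and_extract(rows):
    """Drop '/assets/' rows and add a 'doc_id' column to material rows."""
    cleaned = []
    for row in rows:
        path = urlparse(row["url"]).path
        if path.startswith("/assets/"):
            continue  # image/CSS/JS request: irrelevant to the analysis
        match = STABLE_RE.search(path)
        if match:
            # Copy the record and add the extracted column.
            cleaned.append({**row, "doc_id": match.group("doc_id")})
    return cleaned
```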

5. Transforming data

Fields such as the timestamp are transformed into SQL’s DATETIME data type so that SQL’s datetime functions can be applied to them. Once data transformation is complete, sample checks are conducted on the cleaned data to ensure that it is coherent before it proceeds to exploratory data analysis (EDA).
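The log's timestamp format is not stated here, so this sketch assumes the common web-log style `10/Oct/2017:13:55:36 +0800`, optionally wrapped in square brackets, and converts it to the `YYYY-MM-DD HH:MM:SS` literal that SQL DATETIME columns accept. `to_sql_datetime` and both format constants are hypothetical and should be adjusted to the actual log.

```python
from datetime import datetime

# Assumed input format (common web-log style); adjust to the actual log.
LOG_FORMAT = "%d/%b/%Y:%H:%M:%S %z"
SQL_FORMAT = "%Y-%m-%d %H:%M:%S"  # SQL DATETIME literal


def to_sql_datetime(raw: str) -> str:
    """Convert '[10/Oct/2017:13:55:36 +0800]' to '2017-10-10 13:55:36'.

    The UTC offset is parsed but dropped because DATETIME stores no time
    zone; the local wall-clock time is kept.
    """
    parsed = datetime.strptime(raw.strip("[]"), LOG_FORMAT)
    return parsed.strftime(SQL_FORMAT)
```

Dropping the offset is only safe if every log entry shares the same time zone; otherwise convert to a common zone before formatting.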