ANLY482 AY2017-18 Group9: Project Overview/ Methodology

DATA COLLECTION / PREPARATION

After understanding the problems faced by KOI and identifying potential solutions, we requested the datasets required to perform our analysis. In particular, we will focus on sales and wastage data to optimize inventory reordering.

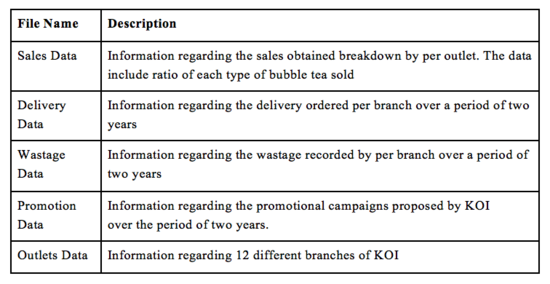

To facilitate our analysis, KOI has kindly provided our team with data from 15-20 outlets, covering 1-2 years (Jan 2016 - Dec 2017). The client wishes to focus on the latest fiscal year, so we will be provided with the most recent data available. The types of data obtained are summarized in the table below.

Data Summary

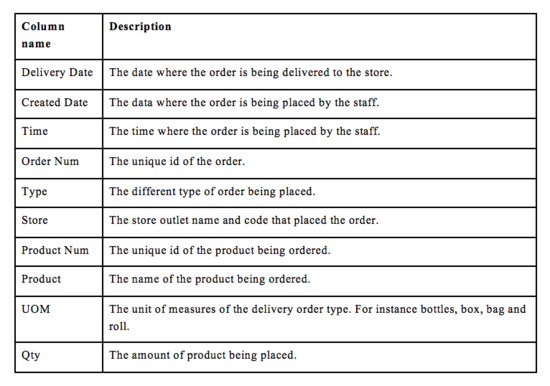

Delivery Data

Each row in this table represents a specific delivery ordered by a branch. The main columns in this table are described below:

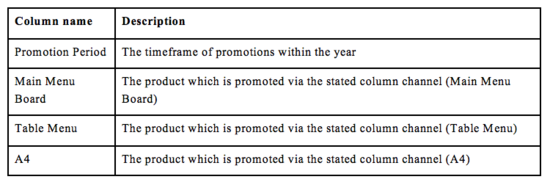

Promotion Data

Each row in this table represents a specific promotional campaign held during a given period. The main columns in this table are described below:

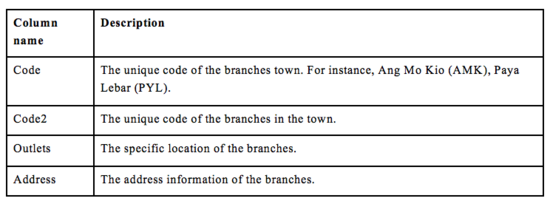

Outlet Data

Each row in this table holds the information for one KOI branch outlet. The main columns in this table are described below:

Sales Data

We do not yet have the Sales Data, as our sponsor has requested more time to retrieve it from their cloud storage.

EXPLORATORY DATA ANALYSIS

Our team will examine the sales trend, delivery and wastage data to determine whether there are any significant correlations among these three factors. Our main project aim is to optimize the inventory reorder point with a 15-20% safety surplus while minimizing wastage. Using EDA, we will identify seasonality through sales analysis and look for trends in the delivery and wastage records. We will then look for correlations among these factors.
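As a rough illustration of the correlation check described above, the sketch below computes a Pearson correlation coefficient between two daily series. The figures are made up for illustration and are not KOI data. Treating wastage as delivered quantity minus sold quantity is also an assumption made only for this example.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length, at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical daily figures for one outlet (Mon-Sun), for illustration only.
sales = [120, 135, 128, 160, 210, 250, 230]
delivered = [150, 160, 150, 190, 250, 300, 270]
# Assumption: wastage approximated as delivered minus sold.
wastage = [d - s for d, s in zip(delivered, sales)]

print("sales vs delivered:", round(pearson(sales, delivered), 3))
print("sales vs wastage:  ", round(pearson(sales, wastage), 3))
```

A coefficient close to 1 or -1 suggests a strong linear relationship, while a value near 0 suggests little linear relationship. It does not by itself establish cause.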

DATA CLEANING

Missing values and outliers observed during our exploratory data analysis may introduce inaccuracy and skew our analysis. To handle missing values, we will review each missing value or blank and decide whether to replace it with an estimate or remove the record. For outliers, our team will investigate the underlying cause of each occurrence and decide whether it is significant enough to be retained in our analysis.
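One possible way to carry out these two steps is sketched below. Replacing blanks with the median and flagging outliers with the 1.5 x IQR rule are illustrative choices assumed for this example, not decisions the team has made. The sample values are invented.

```python
from statistics import median, quantiles


def fill_missing(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to estimate from")
    fill = median(observed)
    return [fill if v is None else v for v in values]


def flag_outliers(values, k=1.5):
    """Return the indices of values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]


# Hypothetical daily wastage readings; None marks a blank entry.
daily_wastage = [10, None, 11, 13, 12, 11, 100]
filled = fill_missing(daily_wastage)
suspects = flag_outliers(filled)
print(filled)
print("indices to review:", suspects)
```

Flagged indices are only candidates for review. Whether to keep or drop each one still depends on the underlying reason for the unusual value.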

MODEL SELECTION

Next, seasonality analysis will be carried out to determine the optimal restock amount for each day using past data. We will use quantitative forecasting, an approach that uses historical data to predict future demand for goods. The more data is available, the more accurate the picture of historical demand will be. Although this approach provides a basis for forecasting, demand could be affected by seasonality. Our team is therefore considering two time series models: the Naive Approach, which uses the most recent observation as the forecast, and the Seasonal Naive Approach, which uses the observation from the same point in the previous season. In our opinion, the Seasonal Naive Approach is the more appropriate model for our analysis. However, because of the limited data provided, we are also considering the more generic Naive Approach to ensure that we take into account the full picture available.
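The two approaches can be sketched as follows. This is a minimal illustration that assumes a weekly season of 7 days and uses invented demand figures. The function names are hypothetical, and `with_safety_surplus` applies the 15% lower bound of the safety surplus mentioned in the project aim purely as an example.

```python
def naive_forecast(history, horizon):
    """Naive approach: repeat the most recent observation."""
    if not history:
        raise ValueError("history must not be empty")
    return [history[-1]] * horizon


def seasonal_naive_forecast(history, season_length, horizon):
    """Seasonal naive approach: repeat the last full season of observations."""
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    last_season = history[-season_length:]
    return [last_season[h % season_length] for h in range(horizon)]


def with_safety_surplus(forecast, surplus=0.15):
    """Scale each forecast value up by a safety surplus (0.15 means 15%)."""
    return [qty * (1 + surplus) for qty in forecast]


# Hypothetical daily demand for two weeks (Mon-Sun, Mon-Sun).
demand = [100, 105, 98, 120, 150, 200, 180,
          102, 108, 101, 125, 155, 210, 185]

next_week_naive = naive_forecast(demand, horizon=7)
next_week_seasonal = seasonal_naive_forecast(demand, season_length=7, horizon=7)
restock = with_safety_surplus(next_week_seasonal, surplus=0.15)
print(next_week_naive)
print(next_week_seasonal)
```

With a weekly pattern like the one in this made-up series, the naive forecast carries the last Sunday's value into every day, while the seasonal naive forecast reproduces the weekday and weekend shape of the previous week.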

MODEL VALIDATION

To validate our model, we will split our data into two sets: 70% of the data will be used as the training set and the remaining 30% as the test set. After validating the model, we will modify it and validate it again, repeating this process until we are satisfied with its performance.
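A minimal sketch of the 70/30 split is shown below. It assumes the split is chronological (the earliest 70% for training, the latest 30% for testing), which is the usual choice for time series but is not stated above. Mean absolute error is used as an example metric only, and the series is invented.

```python
def split_train_test(series, train_fraction=0.7):
    """Split a time-ordered series into training and test parts, in order."""
    if not 0 < train_fraction < 1:
        raise ValueError("train_fraction must be between 0 and 1")
    cut = round(len(series) * train_fraction)
    return series[:cut], series[cut:]


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty series of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical daily demand, oldest first.
series = [100, 110, 105, 120, 130, 125, 140, 150, 145, 160]
train, test = split_train_test(series, train_fraction=0.7)

# Naive forecast: repeat the last training value across the test period.
predicted = [train[-1]] * len(test)
error = mean_absolute_error(test, predicted)
print("train:", train)
print("test: ", test)
print("MAE:  ", round(error, 2))
```

The same error figure can be computed for each candidate model on the same test set, so the models are compared on data they did not see during fitting.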