Difference between revisions of "AY1516 T2 Team AP Analysis PostInterimPlan"


Revision as of 19:08, 9 April 2016

| Data Retrieval & Manipulation (Pre Interim) | Pre interim findings | Post interim plan | Post interim findings |

|---|

Facebook Graph API

Besides analysing SGAG's Twitter presence, one of its popular social networks, we plan to leverage the Facebook Graph API. Drawing on our experience with the Twitter API, we aim to crawl Facebook in a similar fashion, retrieving and aggregating post-level data. We hope this process will yield conclusive results about SGAG's social network on Facebook (likes, shares, etc.).

Approach

| Step | Expected Result | Notes |
|---|---|---|
| 1 | Collect all post data | |
| 2 | All user objects for each like, for every post | |
| 3 | "Comment-Level" per post and number of shares on a "user-level" | |

Data Retrieval

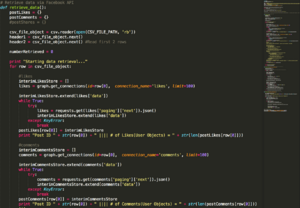

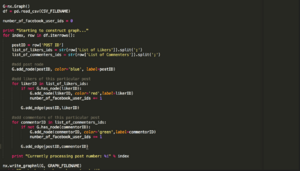

Constructing the graph from scratch involved using Python code to retrieve posts from SGAG's Facebook account dating back 10 months. The code connects to the Facebook Graph API programmatically and produces a CSV file with the structure shown below.

Each row corresponds to one post. The user IDs in List of Likers and List of Commenters are separated by semicolons and tagged to that post.

| Post ID | List of Likers | List of Commenters |

|---|---|---|

| 378167172198277_1187053787976274 | 10206930900524483;1042647259126948;10204920589409318; ... | 10153979571077290;955321504523847;1701864973403904; ... |

After crawling the Facebook API for about 4.5 hours, we obtained more than 1,600 posts dating back 10 months, in a CSV file of about 38 MB. The entire code can be viewed here.
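The crawl described above can be sketched roughly as follows. This is a minimal illustration and not the team's actual script: the API version in `GRAPH_URL`, the `limit` parameter, the helper names (`fetch_json`, `iter_paged`, `crawl_posts`) and the exact response fields (`data`, `paging.next`, `from.id`) are assumptions based on how the Graph API paged its `posts`, `likes` and `comments` edges at the time. Restricting the crawl to the last 10 months is left out.

```python
import csv
import json
from urllib.parse import urlencode
from urllib.request import urlopen

GRAPH_URL = "https://graph.facebook.com/v2.5"  # assumed API version


def fetch_json(url):
    """Fetch one Graph API page and decode its JSON body."""
    with urlopen(url) as response:
        return json.load(response)


def iter_paged(url, fetch=fetch_json):
    """Yield every item of a paged edge, following the assumed paging.next links."""
    while url:
        page = fetch(url)
        yield from page.get("data", [])
        url = page.get("paging", {}).get("next")


def crawl_posts(page_id, token, out_path, fetch=fetch_json):
    """Write one CSV row per post: post ID, likers, commenters."""
    query = urlencode({"access_token": token, "limit": 100})
    with open(out_path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["Post ID", "List of Likers", "List of Commenters"])
        for post in iter_paged(f"{GRAPH_URL}/{page_id}/posts?{query}", fetch):
            post_url = f"{GRAPH_URL}/{post['id']}"
            likers = [like["id"] for like in iter_paged(f"{post_url}/likes?{query}", fetch)]
            commenters = [
                comment["from"]["id"]
                for comment in iter_paged(f"{post_url}/comments?{query}", fetch)
            ]
            # User IDs are joined with semicolons, matching the table above.
            writer.writerow([post["id"], ";".join(likers), ";".join(commenters)])
```

Passing the fetcher in as a parameter keeps the paging logic separate from the network call, so it can be exercised with canned responses before spending hours on a real crawl.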

Subsequently, we wanted to visualize the data using Gephi. Additional Python code reads the CSV file row by row and attaches each post ID to its likers and commenters, so that we can construct a .graphml file, a graph format that Gephi can read. The entire code can be viewed here.

The resultant file (~211MB) is uploaded here for reference.
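The CSV-to-GraphML conversion can be sketched as below, using only the Python standard library. This is an illustration under assumptions, not the team's code: the function name `csv_to_graphml`, the `kind` node attribute and the `interaction` edge attribute are hypothetical choices, and a user who both likes and comments on a post gets two parallel edges here.

```python
import csv
import xml.etree.ElementTree as ET

GRAPHML_NS = "http://graphml.graphdrawing.org/xmlns"


def csv_to_graphml(csv_path, graphml_path):
    """Convert the post/likers/commenters CSV into a GraphML file."""
    csv.field_size_limit(10**9)  # long liker lists can exceed the default field limit
    root = ET.Element("graphml", xmlns=GRAPHML_NS)
    # Declare one node attribute (post or user) and one edge attribute (like or comment).
    ET.SubElement(root, "key", {"id": "kind", "for": "node",
                                "attr.name": "kind", "attr.type": "string"})
    ET.SubElement(root, "key", {"id": "interaction", "for": "edge",
                                "attr.name": "interaction", "attr.type": "string"})
    graph = ET.SubElement(root, "graph", edgedefault="undirected")
    seen = set()

    def add_node(node_id, kind):
        # Emit each node only once, however many rows mention it.
        if node_id not in seen:
            seen.add(node_id)
            node = ET.SubElement(graph, "node", id=node_id)
            ET.SubElement(node, "data", key="kind").text = kind

    with open(csv_path, newline="") as handle:
        for row in csv.DictReader(handle):
            post_id = row["Post ID"]
            add_node(post_id, "post")
            for column, interaction in (("List of Likers", "like"),
                                        ("List of Commenters", "comment")):
                for user_id in filter(None, row[column].split(";")):
                    add_node(user_id, "user")
                    edge = ET.SubElement(graph, "edge", source=user_id, target=post_id)
                    ET.SubElement(edge, "data", key="interaction").text = interaction

    ET.ElementTree(root).write(graphml_path, encoding="utf-8", xml_declaration=True)
    return len(seen), len(graph.findall("edge"))
```

The function returns the node and edge counts, which gives a quick sanity check against the figures Gephi reports on import.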

Gephi Analysis

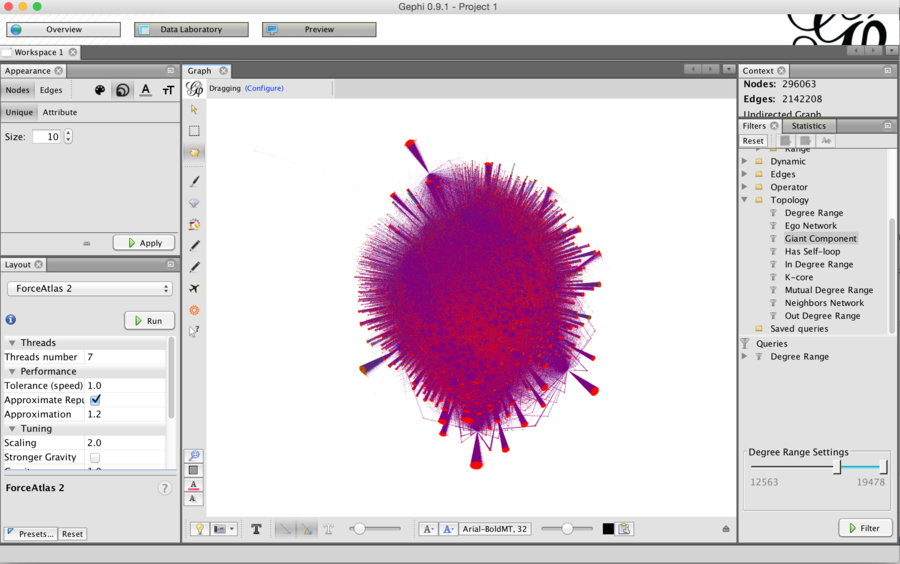

The initial import into Gephi constantly crashed on computers with less than 8 GB of RAM. We eventually used a computer with 16 GB of RAM to import the large .graphml file. The import report indicated 296,063 nodes and 2,142,208 edges.

After successfully loading the file and running the ForceAtlas 2 layout algorithm, we obtained the graph below.

As expected, the graph is unreadable. Furthermore, even on a high-performance machine, Gephi is extremely slow and painful to work with at this scale.

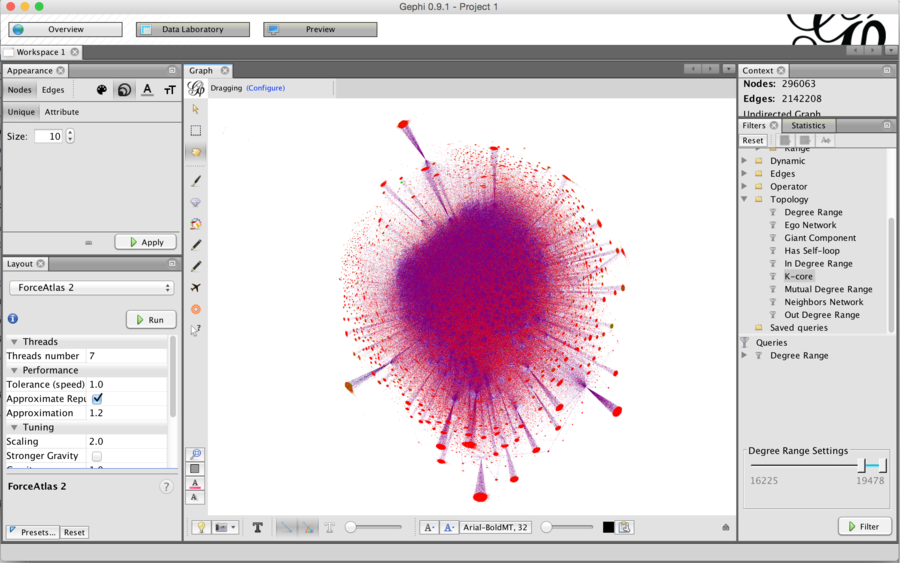

Hence, we filtered the graph by node degree to obtain the condensed version below.

From a high-level view of the graph, we noticed that some clusters (located at the sides of the graph) contain a mixture of both commenters (green) and likers (red), with a large proportion of the interactions being likes. However, even with the filter applied, Gephi remained too laggy to yield concrete findings, and thus we decided to look for alternatives in order to obtain additional insights.
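For reference, the degree filter mentioned above can also be approximated outside Gephi, before the import. The sketch below is an assumption-laden illustration: the function name `filter_by_degree` and the threshold value are hypothetical, since the threshold actually used in Gephi is not recorded here. It makes a single pass based on the original degrees and does not recompute degrees after removing nodes.

```python
from collections import Counter


def filter_by_degree(edges, min_degree):
    """Keep only edges whose two endpoints both have degree >= min_degree."""
    degree = Counter()
    for source, target in edges:
        degree[source] += 1
        degree[target] += 1
    keep = {node for node, count in degree.items() if count >= min_degree}
    return [(s, t) for s, t in edges if s in keep and t in keep]


# Toy example: users u2 and u3 each interact only once and are dropped.
edges = [("u1", "p1"), ("u2", "p1"), ("u1", "p2"), ("u3", "p2")]
condensed = filter_by_degree(edges, min_degree=2)
```

Applying such a filter to the edge list before writing the .graphml file would shrink the file that Gephi has to load, at the cost of discarding low-activity users.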