Difference between revisions of "AY1516 T2 Team SkyTrek Data"

m (Data Page Update for Mid Term) |

|||

| Line 45: | Line 45: | ||

<!-- INSERT GA IMG HERE --> | <!-- INSERT GA IMG HERE --> | ||

[[File:SkyTrek ga data.png|frameless|700px]] | [[File:SkyTrek ga data.png|frameless|700px]] | ||

| − | ==<div style="background: #95A5A6; line-height: 0.3em; font-family:helvetica; border-left: #6C7A89 solid 15px;"><div style="border-left: #FFFFFF solid 5px; padding:15px;font-size:15px;"><font color= "#ffffff"><strong>Crawling</strong></font></div></div>== | + | |

| + | |||

| + | ==<div style="background: #95A5A6; line-height: 0.3em; font-family:helvetica; border-left: #6C7A89 solid 15px;"><div style="border-left: #FFFFFF solid 5px; padding:15px;font-size:15px;"><font color= "#ffffff"><strong>Extraction from Data Sources</strong></font></div></div>== | ||

| + | |||

| + | Our final data cube was derived from 3 main raw data sources, each requiring a different method to pull and transform the raw data. | ||

| + | <ol> | ||

| + | <li> <b>Skyscanner News Site (Crawling):</b> This data is pulled from the Skyscanner news site, for each article, including attributes such as article text, number of links, published date etc. This data constitutes public information that is visible to the user and hence did not need to be requested for from the client. These attributes tell us about the nature of the actual content that is being seen by the user. | ||

| + | </li> | ||

| + | <li> <b>Google Analytics:</b> This data was pulled for each article being tracked via the Skyscanner Google analytics account. This contained metrics regarding the performance of each URL on the Skyscanner news site. These attributes tell us about the performance metrics of each article mainly through Unique Page Views and Average Time on Page. It also provides the different sources of traffic such as facebook paid media or google organic and the contribution of each of the different channels. | ||

| + | </li> | ||

| + | <li> <b>Social Media Shares:</b> This data was pulled via continuous scraping through a java program of a website called http://linktally.com that provides an API of social media shares for a given URL. These attributes tell us about how widely each article is shared across different social media platforms. | ||

| + | </li> | ||

| + | </ol> | ||

| + | |||

| + | ===<div style="background: #95A5A6; line-height: 0.3em; font-family:helvetica; border-left: #6C7A89 solid 15px;"><div style="border-left: #FFFFFF solid 5px; padding:15px;font-size:15px;"><font color= "#ffffff"><strong>Crawling</strong></font></div></div>=== | ||

<p>The data provided by Skyscanner is what they currently rely on to determine their content planning. In addition to the parameters mentioned above, our group also want to track various other characteristics of the articles, namely:<br> | <p>The data provided by Skyscanner is what they currently rely on to determine their content planning. In addition to the parameters mentioned above, our group also want to track various other characteristics of the articles, namely:<br> | ||

| Line 67: | Line 81: | ||

</p> | </p> | ||

<br> | <br> | ||

| − | |||

| − | <p> | + | |

| + | ==<div style="background: #95A5A6; line-height: 0.3em; font-family:helvetica; border-left: #6C7A89 solid 15px;"><div style="border-left: #FFFFFF solid 5px; padding:15px;font-size:15px;"><font color= "#ffffff"><strong>Transformation and Loading - Merging of 3 Raw Data Sources</strong></font></div></div>== | ||

| + | |||

| + | <p>Once these three raw datasets had been extracted, there was need to merge them so that the relationship between all of these attributes can be analysed in the next stage of our project. This required many of the ‘dirty’ URLs to be cleaned out as part of the merging process. The URL was used as a primary key in order to join all the attributes from the three datasets. The diagram below shows the entire ETL process showing how the three sources of data were extracted, transformed and then stored in a MySQL database. | ||

</p> | </p> | ||

| + | <br> | ||

| + | [[File:Data Transofmration and merging process.png|frameless|700px]] | ||

| + | |||

| + | ===<div style="background: #95A5A6; line-height: 0.3em; font-family:helvetica; border-left: #6C7A89 solid 15px;"><div style="border-left: #FFFFFF solid 5px; padding:15px;font-size:15px;"><font color= "#ffffff"><strong>Aggregation Of Data</strong></font></div></div>=== | ||

| + | <p> | ||

| + | The final dataset contains about 9650 rows at the most disaggregated level. The identifier for each row is the article URL as well as the ‘source/medium’ for each URL. In order to analyse different aspects of the business problems, this dataset has been divided into different aggregated levels. The most important levels would be based on the traffic source- mainly ‘organic’ (non-paid) VS ‘inorganic’ or ‘paid’ media. The main reason behind this division is that ‘paid’ traffic numbers tend to usually be higher than non-paid ones and hence skew the data in favour of articles that have been distributed through paid channels such as Facebook, Taboola and StumbleUpon. The diagram below shows the different levels of aggregation building up from the most disaggregated (10k rows) to the most aggregated level. | ||

| + | </p> | ||

| + | |||

| + | [[File:Levels of Aggregation of Data.png|frameless|300px]] | ||

==<div style="background: #95A5A6; line-height: 0.3em; font-family:helvetica; border-left: #6C7A89 solid 15px;"><div style="border-left: #FFFFFF solid 5px; padding:15px;font-size:15px;"><font color= "#ffffff"><strong>Storing data</strong></font></div></div>== | ==<div style="background: #95A5A6; line-height: 0.3em; font-family:helvetica; border-left: #6C7A89 solid 15px;"><div style="border-left: #FFFFFF solid 5px; padding:15px;font-size:15px;"><font color= "#ffffff"><strong>Storing data</strong></font></div></div>== | ||

| Line 78: | Line 103: | ||

Another option is to store data in flat files for easy transport between systems. However, it will reduce accessibility since our code and program need to parse the information again.</p> | Another option is to store data in flat files for easy transport between systems. However, it will reduce accessibility since our code and program need to parse the information again.</p> | ||

<p>With pros and cons in mind, we will proceed with the database approach initially, and make changes as the the project continues.</p> | <p>With pros and cons in mind, we will proceed with the database approach initially, and make changes as the the project continues.</p> | ||

| + | |||

| + | ==<div style="background: #95A5A6; line-height: 0.3em; font-family:helvetica; border-left: #6C7A89 solid 15px;"><div style="border-left: #FFFFFF solid 5px; padding:15px;font-size:15px;"><font color= "#ffffff"><strong>Data Dictionary</strong></font></div></div>== | ||

| + | |||

| + | {| class="wikitable" width="50%" | ||

| + | |- | ||

| + | !| Attribute Name !! Description !! Type | ||

| + | |- | ||

| + | | URL | ||

| + | || The original URL of the article on the Skyscanner news site | ||

| + | || Categorical | ||

| + | |- | ||

| + | |Source/ Medium | ||

| + | ||The source of the traffic to the website | ||

| + | ||Categorical | ||

| + | |- | ||

| + | |Unique Page Views | ||

| + | ||The total number of unique views for the given article | ||

| + | ||Numerical | ||

| + | |- | ||

| + | |Average Time on Page | ||

| + | ||The average time in seconds that a user spends on the given article | ||

| + | ||Numerical | ||

| + | |- | ||

| + | |No. of links | ||

| + | ||The number of out-links embedded in a given article | ||

| + | ||Numerical | ||

| + | |- | ||

| + | |No. of images | ||

| + | ||The number of images in a given article | ||

| + | ||Numerical | ||

| + | |- | ||

| + | |No. of shares | ||

| + | ||The number of social media shares for a given article | ||

| + | ||Numerical | ||

| + | |- | ||

| + | |Published Date | ||

| + | ||The date at which the article was published | ||

| + | ||Categorical | ||

| + | |- | ||

| + | |Article Text | ||

| + | ||The body of text of the entire news article | ||

| + | ||Categorical | ||

| + | |||

| + | |} | ||

| + | |||

<!--------------- Body End ----------------------> | <!--------------- Body End ----------------------> | ||

Latest revision as of 22:07, 28 February 2016

| Project Description | Data | Methodology |

|---|

Contents

Dataset provided by Skyscanner

Skyscanner provided our team access to their Google Analytics platform, allowing us to pull data about the performance of articles posted on their website. Tracking parameters include:

- Unique page views

- Page views

- Average time spent on page

- Exit rate

- Bounce rate, which measures the occurrences of people coming to Skyscanner website and exit after just reading one page.

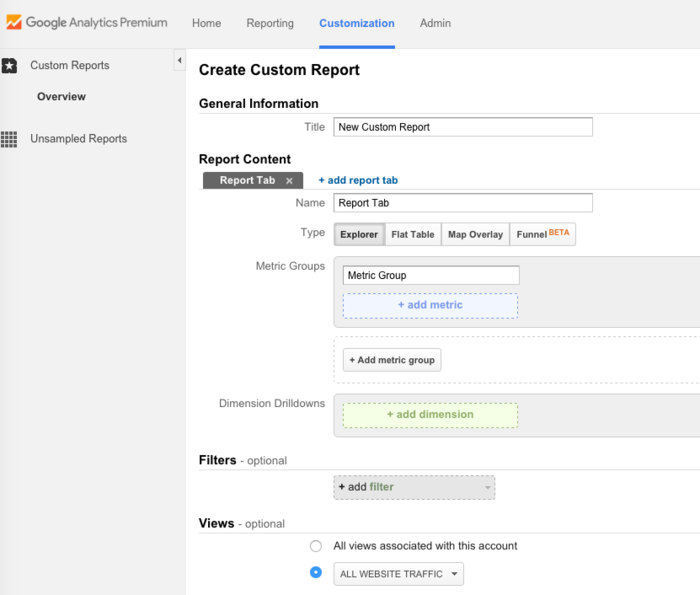

Skyscanner uses a Google Analytics premium account in order to track user behavior across all its webpages globally. The Singapore team has created an access account for this project, allowing us to pull all possible combinations of data from Google Analytics relating to the Skyscanner news site. This is also summarized in the form of different dashboard views available on Google Analytics premium. The method for querying any data is through creation of custom reports.

Extraction from Data Sources

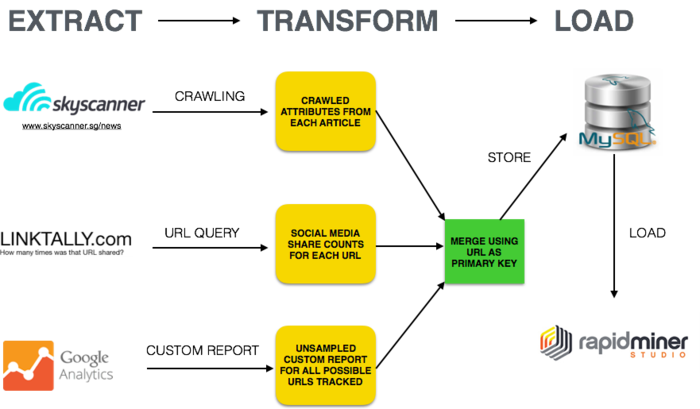

Our final data cube was derived from 3 main raw data sources, each requiring a different method to pull and transform the raw data.

- Skyscanner News Site (Crawling): This data is pulled from the Skyscanner news site, for each article, including attributes such as article text, number of links, published date etc. This data constitutes public information that is visible to the user and hence did not need to be requested for from the client. These attributes tell us about the nature of the actual content that is being seen by the user.

- Google Analytics: This data was pulled for each article being tracked via the Skyscanner Google analytics account. This contained metrics regarding the performance of each URL on the Skyscanner news site. These attributes tell us about the performance metrics of each article mainly through Unique Page Views and Average Time on Page. It also provides the different sources of traffic such as facebook paid media or google organic and the contribution of each of the different channels.

- Social Media Shares: This data was pulled via continuous scraping through a java program of a website called http://linktally.com that provides an API of social media shares for a given URL. These attributes tell us about how widely each article is shared across different social media platforms.

Crawling

The data provided by Skyscanner is what they currently rely on to determine their content planning. In addition to the parameters mentioned above, our group also want to track various other characteristics of the articles, namely:

- Number of words (stop words removed)

- Number of outbound links references

- Number of images

- Number of videos

- Number of article shares

The rationale for choosing these attributes will be mentioned in the methodology section of our proposal.

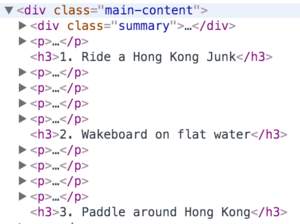

These attributes are not available for us. Thus we need to manually crawl the data from the website and merge it with the provided data set. We noticed that Skyscanner website use Javascript to add content to the DOM when the HTML finishes loading. Because of that, normal crawlers without ability to execute Javascript code will not be able to crawl for data within the page after the DOM is modified.

After some research, our group decided to employ the use of a headless browser, namely PhantomJS. It allows for Javascript code execution, DOM access, and programmatically interaction with websites without opening any real web browser. Another option is Selenium, but it needs to proxy through a browser installed on our machine.

Looking through the DOM structure of Skyscanner article, we found that the information we need is easily accessible. For example, the main article content is nested in a div block with CSS class “main-content”, and links to recommended other articles are provided in another div block with CSS class “addthis_recommended_vertical”. The repeating structure makes it easy for us to write code and scrap data from the website without too much trouble.

After successfully scraping the DOM data, we can clear out HTML tags easily using a regular expression /<(\/|).+?>/g, then proceed to compute the necessary attributes that we want to collect.

Transformation and Loading - Merging of 3 Raw Data Sources

Once these three raw datasets had been extracted, there was need to merge them so that the relationship between all of these attributes can be analysed in the next stage of our project. This required many of the ‘dirty’ URLs to be cleaned out as part of the merging process. The URL was used as a primary key in order to join all the attributes from the three datasets. The diagram below shows the entire ETL process showing how the three sources of data were extracted, transformed and then stored in a MySQL database.

Aggregation Of Data

The final dataset contains about 9650 rows at the most disaggregated level. The identifier for each row is the article URL as well as the ‘source/medium’ for each URL. In order to analyse different aspects of the business problems, this dataset has been divided into different aggregated levels. The most important levels would be based on the traffic source- mainly ‘organic’ (non-paid) VS ‘inorganic’ or ‘paid’ media. The main reason behind this division is that ‘paid’ traffic numbers tend to usually be higher than non-paid ones and hence skew the data in favour of articles that have been distributed through paid channels such as Facebook, Taboola and StumbleUpon. The diagram below shows the different levels of aggregation building up from the most disaggregated (10k rows) to the most aggregated level.

Storing data

Our data needs to be saved in a convenient format so that we can use it as input for other analytic programs.

An option for fast querying is storing the data in a database. This approach provides easy export to other formats that can work with analytic software, and access from both a GUI and code. Another option is to store data in flat files for easy transport between systems. However, it will reduce accessibility since our code and program need to parse the information again.

With pros and cons in mind, we will proceed with the database approach initially, and make changes as the the project continues.

Data Dictionary

| Attribute Name | Description | Type |

|---|---|---|

| URL | The original URL of the article on the Skyscanner news site | Categorical |

| Source/ Medium | The source of the traffic to the website | Categorical |

| Unique Page Views | The total number of unique views for the given article | Numerical |

| Average Time on Page | The average time in seconds that a user spends on the given article | Numerical |

| No. of links | The number of out-links embedded in a given article | Numerical |

| No. of images | The number of images in a given article | Numerical |

| No. of shares | The number of social media shares for a given article | Numerical |

| Published Date | The date at which the article was published | Categorical |

| Article Text | The body of text of the entire news article | Categorical |