Difference between revisions of "ANLY482 AY2017-18T2 Group14 Final"

Mq.wang.2014 (talk | contribs) |

Mq.wang.2014 (talk | contribs) |

||

| Line 52: | Line 52: | ||

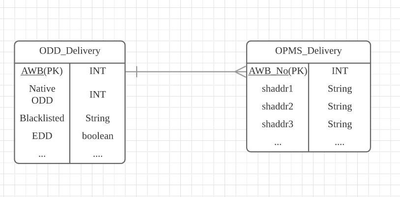

For the data to be migrated into a database, the construction of the database and its relevant tables are necessary steps to be completed. Two data tables are created within the single database that will be used, with their table structure following the metadata details that was present after the standardisation of data completed in the previous step. The partial structure of both tables, because the entire table would take up lots of space in this report to its massive amount of data fields, is being provided in Figure 5 with field “AWB” (Waybill Number) as the Primary Key identifying a single shipment. The Entity Relationship Diagram of the constructed database is shown as below. | For the data to be migrated into a database, the construction of the database and its relevant tables are necessary steps to be completed. Two data tables are created within the single database that will be used, with their table structure following the metadata details that was present after the standardisation of data completed in the previous step. The partial structure of both tables, because the entire table would take up lots of space in this report to its massive amount of data fields, is being provided in Figure 5 with field “AWB” (Waybill Number) as the Primary Key identifying a single shipment. The Entity Relationship Diagram of the constructed database is shown as below. | ||

[[Image: Anly4821718t2g14ERDiagramV.2.png|400px|center]] | [[Image: Anly4821718t2g14ERDiagramV.2.png|400px|center]] | ||

| + | |||

| + | <br> | ||

| + | The migration of the data from both OPMS and ODD on its different file sources (.mdb, .xlsx) was all done in the innate Export & Import Wizard by Microsoft SQL Server. This method was chosen because it supports various commonly file types used as data sources file types, requires no custom scripting and has no integration issues with the database (MSSQL). The variety of file types supported for importing data sources not only include sources from other SQL and Microsoft Database related servers, but also Microsoft Access Files (including Jet Engine), Excel Workbooks (.xlsx) and any kinds of Flat-file source which includes Comma-Separated Values (.csv), Tab-Separated Values (.tsv), Plain Text Files (.txt) and many more. The last advantage of the mentioned import method is that instructions are provided by Microsoft which means no in-depth training is required to perform importing, which is a benefit for many users who could be non-technical although database administrators are usually well trained in the field. | ||

| + | |||

| + | Migrating huge sizes of data from file-based sources into a single centralised database proved some challenge, its long migration time due to huge datasets, especially when data are stored in separate data files. On top of that, having to make multiple changes of the same type of issue because of data being stored into separate files makes the migration process extremely tedious. Another issue for importing data into Microsoft SQL Server is that if any issue happens during the data importing process, the server does not rollback the state of the database on its own, which means data rows inserted before the error occurrence would remain in the database. This issue could lead to potential problems of duplication of data, which could be avoided, by removing the already inserted columns manually or perform a database-wide data cleaning afterwards with the aim of removing duplicate data rows. | ||

</font> | </font> | ||

Revision as of 13:18, 12 April 2018

For the data to be migrated into a database, the construction of the database and its relevant tables are necessary steps to be completed. Two data tables are created within the single database that will be used, with their table structure following the metadata details that was present after the standardisation of data completed in the previous step. The partial structure of both tables, because the entire table would take up lots of space in this report to its massive amount of data fields, is being provided in Figure 5 with field “AWB” (Waybill Number) as the Primary Key identifying a single shipment. The Entity Relationship Diagram of the constructed database is shown as below.

The migration of the data from both OPMS and ODD on its different file sources (.mdb, .xlsx) was all done in the innate Export & Import Wizard by Microsoft SQL Server. This method was chosen because it supports various commonly file types used as data sources file types, requires no custom scripting and has no integration issues with the database (MSSQL). The variety of file types supported for importing data sources not only include sources from other SQL and Microsoft Database related servers, but also Microsoft Access Files (including Jet Engine), Excel Workbooks (.xlsx) and any kinds of Flat-file source which includes Comma-Separated Values (.csv), Tab-Separated Values (.tsv), Plain Text Files (.txt) and many more. The last advantage of the mentioned import method is that instructions are provided by Microsoft which means no in-depth training is required to perform importing, which is a benefit for many users who could be non-technical although database administrators are usually well trained in the field.

Migrating huge sizes of data from file-based sources into a single centralised database proved some challenge, its long migration time due to huge datasets, especially when data are stored in separate data files. On top of that, having to make multiple changes of the same type of issue because of data being stored into separate files makes the migration process extremely tedious. Another issue for importing data into Microsoft SQL Server is that if any issue happens during the data importing process, the server does not rollback the state of the database on its own, which means data rows inserted before the error occurrence would remain in the database. This issue could lead to potential problems of duplication of data, which could be avoided, by removing the already inserted columns manually or perform a database-wide data cleaning afterwards with the aim of removing duplicate data rows.

Although a dataset might come from the same source, but the underlying data could possess slight differences from each other. When performing data standardization and cleaning, it is unwise that any dataset from the same source is already cleaned and without problems. In data analytical work, there is still a requirement to explore and fully understand the data.

From this project, we learnt about the complexity of handling large datasets (standardizing, data insertion, data storage, database performance, data retrieval, data cleaning), as this is the first project that we handled datasets of this scale where in previous projects we have never encountered any dataset greater than 2 GB. This required us to not only think about getting the database, report or dashboard to run results as intended but also to ensure that it runs within a reasonable amount of time.

During the progress of the project, we have been hit with a reality that there are communication gaps between IT and business users when we faced issues sometimes to convey the value of our work to our sponsor, who is from a non-technical background. We realised that, without the ability to translate technical information into ways where non-technical personnel would be able to understand, all the work done will be in vain. As Analytics graduates in training, we need to display our multidisciplinary understanding and apply it into the work we perform.