ANLY482 AY2016-17 T2 Group16: HOME/Interim


Revision as of 00:29, 23 April 2017


Overview

The objective of this project is to provide insights on eBook databases and their users for the Li Ka Shing Library Analytics Team. The analysis is not limited to eBook databases but also studies the general traits of other databases. As much of the analysis is done on proxy server request logs, data cleaning is a major component of this project. The analysis results will help Li Ka Shing Library understand the usage patterns of its users and better serve the SMU community, with its increasing demand for professional knowledge.

Data Overview

The data we work with consists of request log data (a.k.a. digital trace) and student data. The request log is in NCSA Common Log Format (CLF), with billions of records captured by the library’s URL-rewriting proxy server. This dataset captures all user requests to external databases. The record attributes are user ID, request time, HTTP request line (method, URL, and protocol), response time, and user agent. The student data, specifying faculty, admission year, graduation year, and degree program, is provided to the team in CSV format. For non-disclosure reasons, the user identifiers (emails) are obfuscated by hashing into 64-digit hexadecimal strings. The hashed ID is used to link the two tables. Please refer to the appendix for the complete data dimensions and samples. The request log records are filed by month. The monthly number of records in the request log data varies from 3 million to 6 million, and the file sizes are around 2 GB. The student dataset contains 22,427 records, covering not only full-time students but also postgraduates and exchange students. There are users other than students (e.g. alumni, staff, visiting students and anonymous users), but the scope of this project is limited to students because of the availability and insightfulness of student data.
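As a rough illustration of how one log record could be read and how an email could be turned into a 64-digit hexadecimal ID, a minimal Python sketch follows. The exact field layout of the library's log files is not given here, so the pattern assumes the standard CLF fields plus a trailing quoted user agent. SHA-256 is also an assumption: it produces 64 hexadecimal digits, but the actual hashing scheme is not stated.

```python
import hashlib
import re
from datetime import datetime

# Assumed layout: host ident user [time] "method url protocol" status size "agent".
# The real proxy log may order or name its fields differently.
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\S+)'
    r'(?: "(?P<agent>[^"]*)")?'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    # CLF timestamps look like 01/Aug/2016:09:15:02 +0800
    record["time"] = datetime.strptime(record["time"], "%d/%b/%Y:%H:%M:%S %z")
    return record


def hash_email(email):
    """Hash an email to 64 hex digits (SHA-256 is an assumption, see above)."""
    return hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()


# Made-up sample line for illustration only.
sample = ('10.0.0.1 - 3f2a [01/Aug/2016:09:15:02 +0800] '
          '"GET http://example.com/book?id=1 HTTP/1.1" 200 512 "Mozilla/5.0"')
record = parse_line(sample)
```

Lines that do not fit the assumed layout return `None`, so they can be counted and inspected separately rather than silently dropped.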

Exploratory Data Analysis

Exploratory data analysis (EDA) was done on the student data and the request log data separately. Through EDA, we hope to:

- understand data volume and dimensions

- assess data quality, including completeness, validity, consistency and accuracy

- formulate hypotheses for analysis and

- determine proper analysis approaches

Student

- 1. Understanding the data :

The headers of the student data are not self-explanatory. We studied the values of each column and determined the meaning of the “statistical categories”, as shown in the table below.

- 2. Data quality :

By comparing the number of student IDs with the number of unique students, we confirmed that there are no duplicate identifiers. Besides, there are no missing values under faculty, degree name and admission year. We filled in the missing values in graduation year with “in process”.
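The checks described above could be sketched in Python roughly as follows. The column names (`hashed_id`, `faculty`, `degree`, `admission_year`, `graduation_year`) and the sample rows are hypothetical, since the real headers are not self-explanatory and are not reproduced here.

```python
import csv
import io

# Hypothetical column names and made-up rows, for illustration only.
SAMPLE_CSV = """hashed_id,faculty,degree,admission_year,graduation_year
aa,Business,BBM,2014,2018
bb,Law,LLB,2015,
cc,Others,International Exchange Programme,2016,2016
"""


def check_students(rows):
    """Count duplicate IDs and missing values, then fill missing graduation years."""
    ids = [row["hashed_id"] for row in rows]
    duplicate_ids = len(ids) - len(set(ids))

    missing = {}
    for column in ("faculty", "degree", "admission_year", "graduation_year"):
        missing[column] = sum(1 for row in rows if not row[column].strip())

    # Students without a graduation year are treated as still studying.
    for row in rows:
        if not row["graduation_year"].strip():
            row["graduation_year"] = "in process"

    return duplicate_ids, missing


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
duplicate_ids, missing = check_students(rows)
```

The missing-value counts are taken before the fill, so the report still shows how many graduation years were originally empty.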

- 3. Student program distribution :

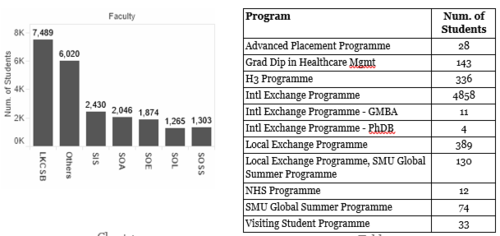

To find out the faculty distribution, we plotted a bar chart (Chart 1). From the bar chart, we observed that most of the students who visited the school library website for research are business students, followed by “Others”. We investigated who makes up the “Others” category by summarising the categories in the “Degree” column.

Based on Table 2, we found that most students in the “Others” category belong to the International Exchange Programme, followed by Local Exchange Programme students and H3 Program students.

- 4. Breakdown of students based on enrolment year :

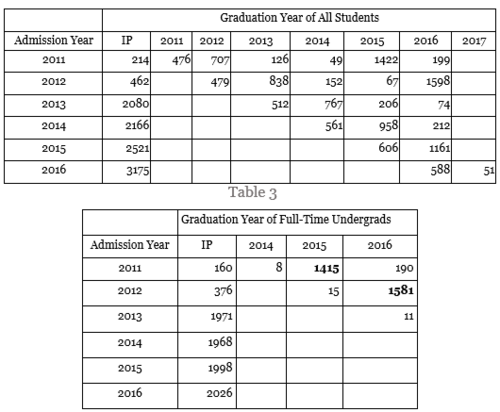

The table below shows the number of students by admission and graduation year. There are no records with a graduation year before the admission year. From Table 4 we learnt that most undergraduate students graduate within 4 years, which reflects reality. Comparing the two tables, the various postgraduate and non-degree programs reduce the average duration of study.

Request Logs

The sheer volume of data posed a challenge in the EDA phase. Thus, we started with samples of 1 million records and then extended to the full dataset. Many levels of data cleaning are necessary to discover database usage patterns.

- Dataset contains duplicate records

- Many requests are directed to web resources

- Requests created by users do not necessarily lead directly to a title; instead, they may be created while navigating through various pages

- Database name is not explicitly shown in the dataset, but has to be derived from URL domains

- User input and requested title can only be extracted from GET request parameters. Databases use different methods to encode the request information.
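To illustrate the last point, GET request parameters can be pulled out of a URL with Python's standard library, as in the sketch below. The URL and the parameter names `q` and `page` are made up. Each database uses its own parameter names and encoding, so a real extractor would need per-database rules.

```python
from urllib.parse import parse_qs, urlsplit


def query_parameters(url):
    """Return the GET parameters of a URL as a dict (first value of each name)."""
    query = urlsplit(url).query
    return {name: values[0] for name, values in parse_qs(query).items()}


# Hypothetical search URL; real databases use different parameter names.
params = query_parameters("http://books.example/search?q=singapore+law&page=2")
```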

- 1. Duplicated records :

Through observation, we discovered that the request records contain duplicate rows (defined as a user making multiple identical requests with the same URL in the same time frame). This is presumably caused by automatic or manual page refreshes, but we cannot ascertain the actual reason.

By and large, the number of duplicate lines correlates with the number of lines for each database, and duplicates amount to 5% of all requests, which is a reasonable amount to remove. To find out the relative number of duplicate lines by domain, we normalised the duplicate count against the total occurrences of each domain. The result shows that duplicates are fairly evenly distributed among most domains, with no single domain accounting for a disproportionate share of duplicate records.
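The duplicate removal and the per-domain normalisation can be sketched in Python as below. The sketch assumes that "the same time frame" means an identical timestamp string. The actual window used is not specified, and the sample records are made up.

```python
from collections import Counter
from urllib.parse import urlsplit


def drop_duplicates(records):
    """Remove repeated (user, timestamp, url) records and report duplicate rates.

    Returns the unique records and, per domain, the share of that domain's
    requests that were duplicates.
    """
    seen = set()
    unique = []
    duplicates_by_domain = Counter()
    totals_by_domain = Counter()

    for user, timestamp, url in records:
        domain = urlsplit(url).netloc
        totals_by_domain[domain] += 1
        key = (user, timestamp, url)
        if key in seen:
            duplicates_by_domain[domain] += 1
        else:
            seen.add(key)
            unique.append(key)

    # Normalise duplicate counts against the total requests of each domain.
    rates = {
        domain: duplicates_by_domain[domain] / total
        for domain, total in totals_by_domain.items()
    }
    return unique, rates


# Made-up records: (hashed user ID, timestamp, URL).
records = [
    ("u1", "2016-08-01 09:15:02", "http://a.example.com/x"),
    ("u1", "2016-08-01 09:15:02", "http://a.example.com/x"),
    ("u1", "2016-08-01 09:15:03", "http://a.example.com/x"),
    ("u2", "2016-08-01 09:15:02", "http://b.example.com/y"),
]
unique, rates = drop_duplicates(records)
```

Keeping the first occurrence and counting the rest as duplicates means each user action is still represented once after cleaning.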

- 2. Requests to web resources :

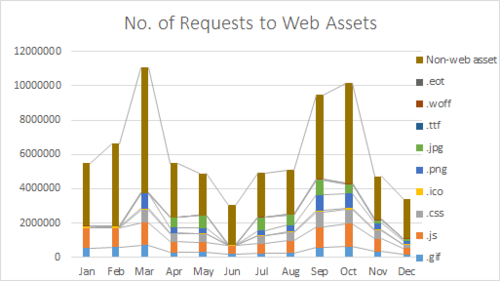

Even without duplicate records, the data is still noisy with requests to web assets. Web assets are used for page rendering and display. Typical ones are JavaScript (.js), Cascading Style Sheets (.css) and images (.img, .jpg). Such web assets are not helpful in understanding user behaviour, as the requests are not generated by the user but depend on the database. Requests for web assets are recognised by their file extensions. We came up with a list of web asset extensions that is as exhaustive as possible. Subsequent pattern extraction also uncovered more types of web assets that should be cleaned at this stage. Sites render pages differently, so the pattern of requests to web assets varies.

Unsurprisingly, GIF, JavaScript, and CSS files take up a fair proportion of the overall number of requests. In reality, page rendering typically requires many web resource files, which explains the large percentage of web asset requests. This is backed up by our experiment with the Ebrary database viewing page for the ebook “Singapore Perspective: Singapore Perspective 2013”. During the page rendering process, 13 CSS style sheets, 24 JS scripts, 31 images and 1 font file were captured. All these files have to go through the library proxy before being downloaded and used by the browser for page rendering (as the asset URLs have been rewritten to point to libproxy).

The requests to web assets have to be removed from analysis on two grounds:

- Inflation in intra-domain analysis, as web assets arbitrarily increase the number of requests within a domain

- Distortion in inter-domain analysis, as the number of web assets used to render pages varies from site to site.
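Recognising web asset requests by file extension could look like the Python sketch below. The extension set is a short illustrative subset, not the project's full list, and the URLs are made up.

```python
import posixpath
from urllib.parse import urlsplit

# Illustrative subset only; the project's actual list is longer.
ASSET_EXTENSIONS = {".js", ".css", ".gif", ".jpg", ".jpeg", ".png", ".ico", ".woff"}


def is_web_asset(url):
    """Return True if the URL path ends with a known web asset extension."""
    # Look at the path only, so query strings such as ?v=3 do not hide the extension.
    path = urlsplit(url).path
    extension = posixpath.splitext(path)[1].lower()
    return extension in ASSET_EXTENSIONS


urls = [
    "http://x.example.com/static/app.JS?v=3",
    "http://x.example.com/reader?docID=10",
    "http://x.example.com/theme/main.css",
]
page_requests = [url for url in urls if not is_web_asset(url)]
```

Matching on the path rather than the whole URL avoids misclassifying page requests whose query string happens to contain an asset-like suffix.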

- 3. Deriving database name from URL :

The relationship between databases and URL domains is not a simple one-to-one mapping, and the domain names do not necessarily reflect the database names. To study the characteristics of databases, we have to transform domain names, which can be extracted from request URLs, into database names.
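One way to express such a transformation is a lookup table from domain suffixes to database names, as in the Python sketch below. The domains and database names are hypothetical placeholders. The real mapping has to be compiled by hand, and several domains may map to the same database.

```python
from urllib.parse import urlsplit

# Hypothetical mapping; several domains can belong to one database.
DOMAIN_TO_DATABASE = {
    "books.example": "Example eBooks",
    "ebooks-cdn.example": "Example eBooks",
    "journals.example": "Example Journals",
}


def database_for(url):
    """Map a request URL to a database name via its host, or "Unknown"."""
    host = (urlsplit(url).hostname or "").lower()
    # Try longer domains first so the most specific entry wins.
    for domain in sorted(DOMAIN_TO_DATABASE, key=len, reverse=True):
        if host == domain or host.endswith("." + domain):
            return DOMAIN_TO_DATABASE[domain]
    return "Unknown"


name = database_for("http://reader.books.example/view?id=1")
```

Requests that fall into "Unknown" indicate domains that still need to be added to the table.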

Data Analysis

User Group Analysis

After the data has been prepared and is ready for analysis, we want to observe the patterns that appear in a single semester. Hence we chose 4 months of common log data, from Aug to Nov, which corresponds to the first semester of academic year 2016/2017.

Before diving into the analysis, we first verified in Diagram 2 below that the number of sessions requested by students from each faculty is indeed aligned with the exploratory analysis, namely that the law school and business school have the most active user groups. Apart from that, breaking down the requesters by admission year helps us identify students in different years of study, and graduation year tells us whether the student is a current student or a graduate.

- 1. Understanding the data :