Difference between revisions of "ANLY482 AY2016-17 T2 Group7: Methodology"

Yx.lim.2013 (talk | contribs) m |

Yx.lim.2013 (talk | contribs) (edited content) |

||

| Line 49: | Line 49: | ||

''Figure 2. Original Data Set''<br/> | ''Figure 2. Original Data Set''<br/> | ||

| − | The original log data file was given to us in textual representation of the URL for each session for the various databases, as seen from Figure 2. The aim of the | + | The original log data file was given to us in textual representation of the URL for each session for the various databases, as seen from Figure 2. The aim of the preparation phase is to transform these textual data into a curated table useful for further analyses. <br/> |

A full example of a row of the original data is as shown below:<br/> | A full example of a row of the original data is as shown below:<br/> | ||

| Line 81: | Line 81: | ||

|} | |} | ||

| − | After the identification of variables, we carry out our textual | + | After the identification of variables, we carry out our textual data preparation using Python 3.0.1 in Jupyter Notebook. Many software was considered in the process and our team narrowed our options down to using either Python or R, as these are the 2 software that could handle our entire data preparation phase in a single software. Python was eventually picked due to its faster processing speed for our large dataset of 10.1GB. The following are the steps taken to achieving the curated tables in Jupyter Notebook.<br/> |

[[File:Pre-processing flow.png|700px]] | [[File:Pre-processing flow.png|700px]] | ||

| Line 107: | Line 107: | ||

|} | |} | ||

| − | After the above | + | There are over 200 databases which EZproxy serves and almost all of them have different keywords to identify different actions on each respective sites. Thus, we have identified 11 search keywords which covers a total of 13 databases. This project could potentially be scaled if more keywords are identified to incorporate more databases in the future. Next, we iterate through every row to validate if the row falls into the identified 11 keywords which covers the 13 databases. As the project sponsors gave us separate log files for each of the 12 months in the year 2016, we appended them into a single .csv file after the identification of the relevant rows. To prepare the dataset for cleaning, we appended the data into a pandas data frame. |

| + | |||

| + | For the data cleaning step, we remove the duplicates due to the EZproxy server recording multiple repeated search queries for each unique session ID. We then grouped them by libsession_id and search_database to filter out the final actual searches for each session in each database. Next, we decoded the URL and converted the search queries into lowercase letter to standardize it for use in textual analytics subsequently. We then identified some rows which consists of queries that are irrelevant to a normal search query (eg. ‘nan, ‘()’) and removed them. After all that is completed, we then write the final curated pandas data frame table into a .csv file. | ||

| + | |||

| + | After the above data preparation and cleaning phase, the final curated ‘2016 log file’ table contains 688,511 records, and is ready for merge with the ‘Student User List’ table. This is as per Figure 1, where the selection of table was decided.<br/> | ||

[[File:Relational data table.png|700px]] <br/> | [[File:Relational data table.png|700px]] <br/> | ||

| Line 121: | Line 125: | ||

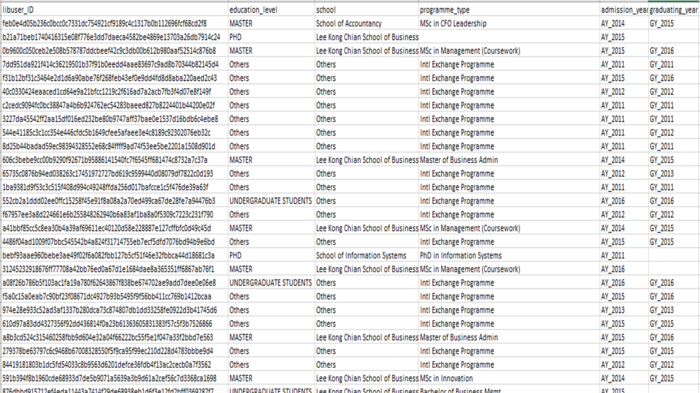

''Figure 7. ‘Student User List’ Table''<br/> | ''Figure 7. ‘Student User List’ Table''<br/> | ||

| − | + | Figure 6 and 7 shows the curated data tables, which makes it possible for further analysis to be performed. We have decided to test it out against 2 test cases, namely the data analysis of the search counts and the alternate test case being the text analytics of 3 databases, Euromonitor, Lawnet and Marketline, which can be found in Appendix E, F & G. The first test case is as follows in the next tab. <br/> | |

| − | |||

| − | |||

| − | |||

</div> | </div> | ||

[[http://wiki.smu.edu.sg/ANLY482/ANLY482_AY2016-17_Term_2 <font color="Blue">Back To Project Page</font>]] | [[http://wiki.smu.edu.sg/ANLY482/ANLY482_AY2016-17_Term_2 <font color="Blue">Back To Project Page</font>]] | ||

<!-- End Information --> | <!-- End Information --> | ||

Revision as of 18:27, 20 April 2017

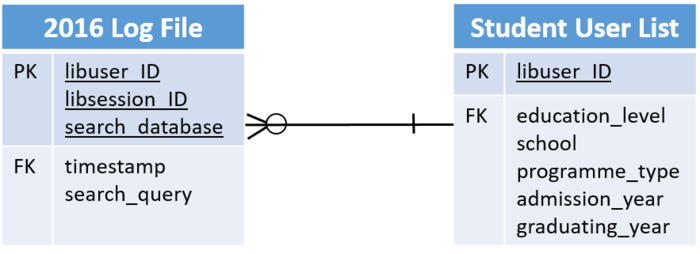

Two tables (2016 Log file and Student User List) were selected.

The 2016 Log File table contains 71,245,770 records which consists of search queries over a period of 12 months. Its primary keys are libuser_ID, libsession_ID, search_database. Its foreign keys are timestamp and search_query.

For table of Student User List, its primary keys are libuser_ID. Its foreign keys are education_level, school, programme_type, admission_year and graduating_year.

The two tables are joined by libuser_ID.

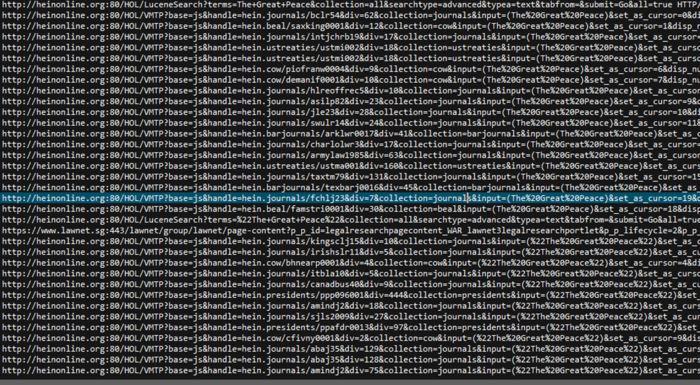

The original log data file was given to us in textual representation of the URL for each session for the various databases, as seen from Figure 2. The aim of the preparation phase is to transform these textual data into a curated table useful for further analyses.

A full example of a row of the original data is as shown below:

| A single row of the original data set |

|---|

| 59.189.71.33 tDU1zb0CaV2B8qZ 65ff93f70ca7ceaabcca62de3882ed1633bcd14ecdebbe95f9bd826bd68609ba [01/Jan/2016:00:01:36 +0800]

"GET http://heinonline.org:80/HOL/VMTP?base=js&handle=hein.journals/bclr54&div=62&collection=journals&input=(The%20Great%20Peace)&set_as_cursor=0&disp_num=1&viewurl=SearchVolumeSOLR%3Finput%3D%2528The%2520Great%2520Peace%2529%26div %3D62%26f_size%3D600%26num_results%3D10%26handle%3Dhein.journals%252Fbclr54%26collection%3Djournals%26set_as_cursor %3D0%26men_tab%3Dsrchresults%26terms%3D%2528The%2520Great%2520Peace%2529 HTTP/1.1" 200 2291 "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2526.106 Safari/537.36" |

Figure 3. A single row of the original data set

From Figure 3, we look to identify potential variables (in bold) to be included in the final table. Hence, to achieve analyses of search queries based on various factors, we have derived with the following variables.

| Parameter/Variable | Description | Example |

|---|---|---|

| libuser_ID | Student ID hashed by the SMU Library so as to protect the identity of users |

65ff93f70ca7ceaabcca62de3882ed1633bcd14ecdebbe95f9bd826bd68609ba |

| libsession_ID | Each session is identified by an unique ID, which corresponds to 1 session by a single user | tDU1zb0CaV2B8qZ |

| search_database | The e-resources database which the search query is searched on | heinonline |

| timestamp | Date and time when the search query is executed by the user in the format: DD/MMM/YYYY HH:MM:SS | 01/Jan/2016:00:01:36 |

| search_query | Search query that was being searched by the user | (The%20Great%20Peace) |

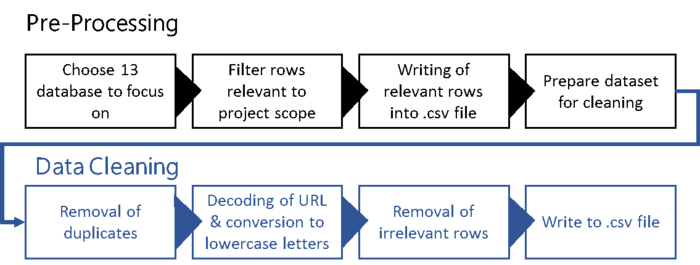

After the identification of variables, we carry out our textual data preparation using Python 3.0.1 in Jupyter Notebook. Many software was considered in the process and our team narrowed our options down to using either Python or R, as these are the 2 software that could handle our entire data preparation phase in a single software. Python was eventually picked due to its faster processing speed for our large dataset of 10.1GB. The following are the steps taken to achieving the curated tables in Jupyter Notebook.

Figure 4. Pre-Processing & Data Cleaning Overview

Figure 4. Pre-Processing & Data Cleaning Overview

| Step | Description of Steps |

|---|---|

| 1 | Choose 13 databases to focus on |

| 2 | Filtering of rows relevant to the scope of project |

| 3 | Writing of relevant rows into .csv file |

| 4 | Prepare dataset for cleaning |

| 5 | Removal of duplicates |

| 6 | Decoding of URL and conversion to lowercase letters |

| 7 | Removal of irrelevant rows |

| 8 | Write to .csv file |

There are over 200 databases which EZproxy serves and almost all of them have different keywords to identify different actions on each respective sites. Thus, we have identified 11 search keywords which covers a total of 13 databases. This project could potentially be scaled if more keywords are identified to incorporate more databases in the future. Next, we iterate through every row to validate if the row falls into the identified 11 keywords which covers the 13 databases. As the project sponsors gave us separate log files for each of the 12 months in the year 2016, we appended them into a single .csv file after the identification of the relevant rows. To prepare the dataset for cleaning, we appended the data into a pandas data frame.

For the data cleaning step, we remove the duplicates due to the EZproxy server recording multiple repeated search queries for each unique session ID. We then grouped them by libsession_id and search_database to filter out the final actual searches for each session in each database. Next, we decoded the URL and converted the search queries into lowercase letter to standardize it for use in textual analytics subsequently. We then identified some rows which consists of queries that are irrelevant to a normal search query (eg. ‘nan, ‘()’) and removed them. After all that is completed, we then write the final curated pandas data frame table into a .csv file.

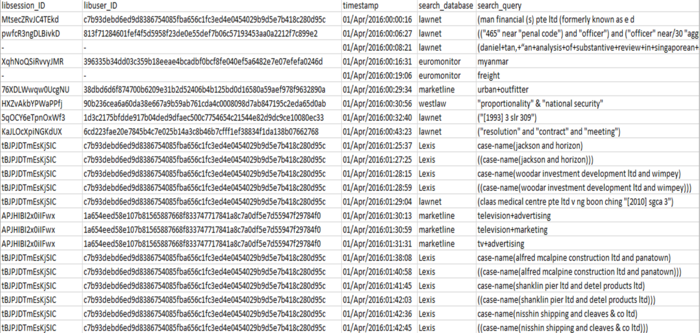

After the above data preparation and cleaning phase, the final curated ‘2016 log file’ table contains 688,511 records, and is ready for merge with the ‘Student User List’ table. This is as per Figure 1, where the selection of table was decided.

Figure 5 shows the relationship between the ‘2016 Log File’ table and ‘Student User List’ table, merged with variable “libuser_ID”. The following are examples of the both tables:

The following are snippets of the curated '2016 Log File' table and the "Student User List' table:

Figure 6. ‘2016 Log File’ Table

Figure 7. ‘Student User List’ Table

Figure 6 and 7 shows the curated data tables, which makes it possible for further analysis to be performed. We have decided to test it out against 2 test cases, namely the data analysis of the search counts and the alternate test case being the text analytics of 3 databases, Euromonitor, Lawnet and Marketline, which can be found in Appendix E, F & G. The first test case is as follows in the next tab.