Difference between revisions of "ANLY482 AY2016-17 T2 Group7: Methodology"

Yx.lim.2013 (talk | contribs) m |

Yx.lim.2013 (talk | contribs) m |

||

| Line 88: | Line 88: | ||

{| class="wikitable" | {| class="wikitable" | ||

|- | |- | ||

| − | ! Step !! Description of | + | ! Step !! Description of Steps |

|- | |- | ||

| 1 || Choose 13 databases to focus on | | 1 || Choose 13 databases to focus on | ||

Revision as of 09:23, 7 April 2017

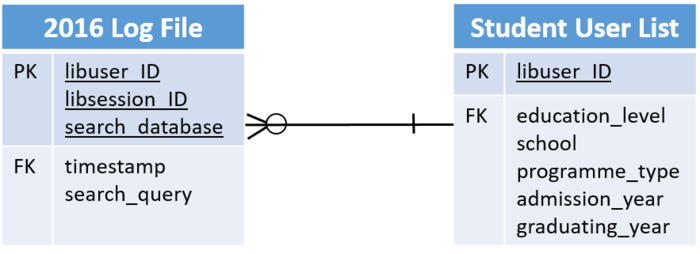

Two tables (2016 Log file and Student User List) were selected.

The 2016 Log File table contains 71,245,770 records, where each role represents a unique search query on the library online database. This dataset contains search queries over twelve months including primary keys libuser_ID, libsession_ID, search_database, and foreign keys are timestamp and seach_query. For table of Student User List, it has primary key libuser_ID and foreign keys including education_level, school, programme_type, admission_year and graduating_year. The two tables are joined by libuser_ID.

Pre-processing

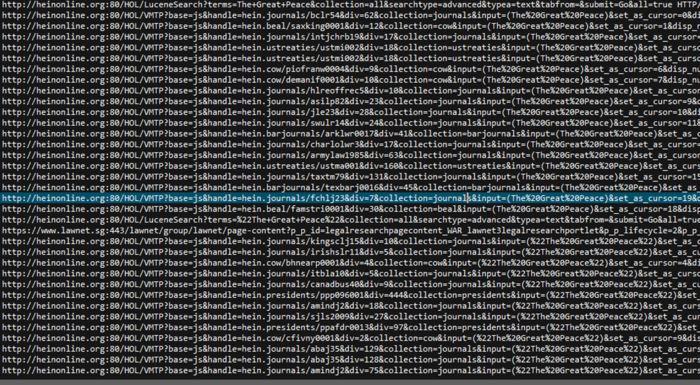

Figure 2. Original Data Set

The original log data file was given to us in textual representation of the url for each session for the various databases, as seen from Figure 2. The aim of the pre-processing phase is to transform these textual data into a curated table useful for further analyses.

A full example of a row of the original data is as shown below:

| A single row of the original data set |

|---|

| 59.189.71.33 tDU1zb0CaV2B8qZ 65ff93f70ca7ceaabcca62de3882ed1633bcd14ecdebbe95f9bd826bd68609ba [01/Jan/2016:00:01:36 +0800]

"GET http://heinonline.org:80/HOL/VMTP?base=js&handle=hein.journals/bclr54&div=62&collection=journals&input=(The%20Great%20Peace)&set_as_cursor=0&disp_num=1&viewurl=SearchVolumeSOLR%3Finput%3D%2528The%2520Great%2520Peace%2529%26div %3D62%26f_size%3D600%26num_results%3D10%26handle%3Dhein.journals%252Fbclr54%26collection%3Djournals%26set_as_cursor %3D0%26men_tab%3Dsrchresults%26terms%3D%2528The%2520Great%2520Peace%2529 HTTP/1.1" 200 2291 "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2526.106 Safari/537.36" |

Figure 3. A single row of the original data set

From the above, we look to identify potential variables to be included in the final table. Hence, to achieve analyses of search queries based on various factors, we have derived with the following variables.

| Parameter/Variable | Description | Example |

|---|---|---|

| libuser_ID | Each session is identified by an unique ID, which corresponds to 1 session by a single user |

65ff93f70ca7ceaabcca62de3882ed1633bcd14ecdebbe95f9bd826bd68609ba |

| libsession_ID | Student ID hashed by the SMU Library so as to protect the identity of users | tDU1zb0CaV2B8qZ |

| search_database | The e-resources database which the search query is searched on | heinonline |

| timestamp | Date and time when the search query is executed by the user in the format: DD/MMM/YYYY HH:MM:SS | 01/Jan/2016:00:01:36 |

| search_query | Search query that was being searched by the user | (The%20Great%20Peace) |

After the identification of variables, we carry out our textual pre-processing using Python 3.0.1 in Jupyter Notebook. The following are the steps taken to achieving the curated tables in Jupyter Notebook.

| Step | Description of Steps |

|---|---|

| 1 | Choose 13 databases to focus on |

| 2 | Filtering of rows relevant to the scope of projec |

| 3 | Writing of relevant rows into .csv file |

| 4 | Prepare dataset for cleaning |

| 5 | Removal of duplicates |

| 6 | Decoding of URL and conversion to lowercase letters |

| 7 | Removal of irrelevant rows |

| 8 | Write to .csv file |

After the above pre-processing phase, the final curated ‘2016 log file’ table contains 688,511 records, and is ready for merge with the ‘Student User List’ table.

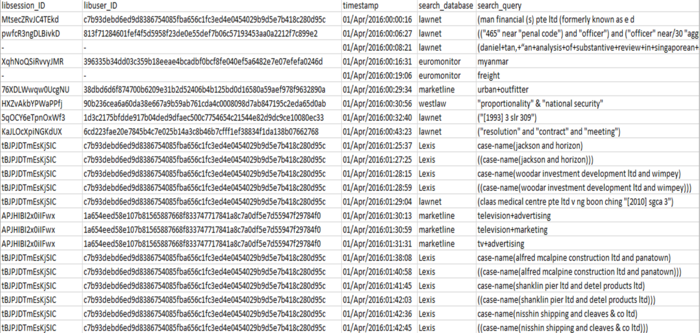

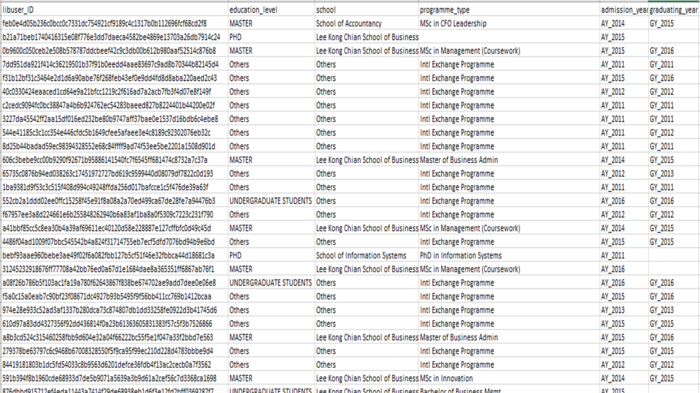

The following are snippets of the curated '2016 log file' table and the "Student User List' table:

Figure 4. ‘2016 Log File’ Table

Figure 5. ‘Student User List’ Table