AY1516 T2 Team AP Analysis PostInterimFindings


Revision as of 20:32, 13 April 2016


Data Retrieval

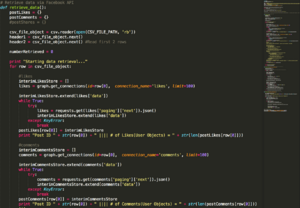

Constructing the graph from scratch involved using Python code to retrieve posts from SGAG's Facebook account dating back 10 months. This meant connecting to the Facebook Graph API programmatically to produce a CSV file with the following structure:

| Post ID | Message | Type | List of Likers | List of Commenters |
|---|---|---|---|---|
| 378167172198277_1187053787976274 | Got take plane go holiday before, sure got kena one of these! | Link/Photo | 10206930900524483;1042647259126948; ... | 10153979571077290;955321504523847; ... |
| ... | ... | ... | ... | ... |

In the List of Likers and List of Commenters columns, user IDs are separated by semicolons, so each post's row carries every user who liked or commented on it.
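A minimal sketch of writing and reading rows in this layout with Python's standard `csv` module. The helper names (`write_posts`, `read_posts`) and the per-post dictionary keys are hypothetical and not taken from the project's code.

```python
import csv
import io

# Column headers matching the table above.
FIELDS = ["Post ID", "Message", "Type", "List of Likers", "List of Commenters"]


def write_posts(posts, stream):
    """Write one row per post, joining liker/commenter IDs with semicolons.

    Each post is a dict with hypothetical keys: id, message, type,
    likers (list of IDs) and commenters (list of IDs).
    """
    writer = csv.writer(stream)
    writer.writerow(FIELDS)
    for post in posts:
        writer.writerow([
            post["id"],
            post["message"],  # csv quotes messages that contain commas
            post["type"],
            ";".join(post["likers"]),
            ";".join(post["commenters"]),
        ])


def read_posts(stream):
    """Yield one dict per row, splitting the semicolon-separated ID lists."""
    for row in csv.DictReader(stream):
        yield {
            "id": row["Post ID"],
            # Drop empty strings so a post with no likers gives [] rather than [""].
            "likers": [u for u in row["List of Likers"].split(";") if u],
            "commenters": [u for u in row["List of Commenters"].split(";") if u],
        }


if __name__ == "__main__":
    buf = io.StringIO()
    write_posts([{
        "id": "378167172198277_1187053787976274",
        "message": "Got take plane go holiday before, sure got kena one of these!",
        "type": "Photo",
        "likers": ["10206930900524483", "1042647259126948"],
        "commenters": ["10153979571077290"],
    }], buf)
    buf.seek(0)
    print(list(read_posts(buf)))
```

Keeping each ID list in a single field keeps the file at one row per post. The trade-off is that a very popular post produces a very long field.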

After crawling the Facebook Graph API for ~4.5 hours, we obtained 1,600+ posts dating back 10 months, in a CSV file of ~38 MB. The entire code can be viewed here.
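Graph API listings are paginated: each response carries a `data` list and, while more results remain, a `paging.next` URL. The loop below is a minimal sketch of following those links. It assumes a caller-supplied `fetch_json(url)` function (hypothetical; in practice this could be something like `requests.get(url).json()` with an access token in the URL). Endpoint paths, requested fields and rate limiting are left out and would need checking against the Graph API version in use.

```python
from typing import Any, Callable, Dict, List


def collect_all(fetch_json: Callable[[str], Dict[str, Any]], first_url: str) -> List[Any]:
    """Gather every item across all pages of a Graph API-style listing.

    `fetch_json` is a hypothetical callable that performs the HTTP GET and
    returns the decoded JSON body as a dict.
    """
    items: List[Any] = []
    url = first_url
    while url:
        page = fetch_json(url)
        items.extend(page.get("data", []))
        # The last page has no "next" link, which ends the loop.
        url = page.get("paging", {}).get("next")
    return items


if __name__ == "__main__":
    # Two fake pages standing in for real HTTP responses.
    pages = {
        "page1": {"data": [{"id": "a"}, {"id": "b"}], "paging": {"next": "page2"}},
        "page2": {"data": [{"id": "c"}], "paging": {}},
    }
    print(collect_all(pages.__getitem__, "page1"))
```

Because the fetcher is passed in, the paging logic can be tested without network access. Each page is still a separate HTTP round trip, which helps explain why a full crawl takes hours.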

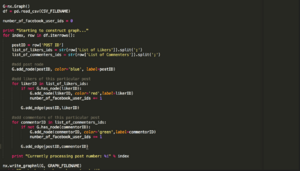

Next, we wanted to visualize the data using Gephi. We therefore wrote additional Python code to read the CSV file row by row and attach each post ID to its likers and commenters, so that we could construct a .graphml file that Gephi is able to read. The entire code can be viewed here.

The resultant file (~211MB) is uploaded here for reference.
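A minimal sketch of writing such a file with only the standard library's `xml.etree.ElementTree`. It assumes rows shaped as dicts with hypothetical `id`, `likers` and `commenters` keys. Like the first version of the project's graph, it places posts and users in one undifferentiated node set and labels each edge as a like or a comment through a GraphML edge attribute named `type` (an illustrative choice).

```python
import io
import xml.etree.ElementTree as ET

NS = "http://graphml.graphdrawing.org/xmlns"


def build_graphml(rows):
    """Build a GraphML tree with one node per post/user ID and one edge per
    like or comment, labelled through the "type" edge attribute.

    `rows` are dicts with hypothetical keys: id, likers, commenters.
    """
    ET.register_namespace("", NS)  # emit the GraphML namespace without a prefix
    root = ET.Element(f"{{{NS}}}graphml")
    ET.SubElement(root, f"{{{NS}}}key", {
        "id": "d0", "for": "edge", "attr.name": "type", "attr.type": "string",
    })
    graph = ET.SubElement(root, f"{{{NS}}}graph", {"id": "G", "edgedefault": "undirected"})

    seen = set()

    def add_node(node_id):
        # Posts and users share one node set here (a unimodal graph).
        if node_id not in seen:
            seen.add(node_id)
            ET.SubElement(graph, f"{{{NS}}}node", {"id": node_id})

    for row in rows:
        add_node(row["id"])
        for kind, users in (("like", row["likers"]), ("comment", row["commenters"])):
            for user in users:
                add_node(user)
                edge = ET.SubElement(graph, f"{{{NS}}}edge", {"source": user, "target": row["id"]})
                ET.SubElement(edge, f"{{{NS}}}data", {"key": "d0"}).text = kind
    return ET.ElementTree(root)


if __name__ == "__main__":
    tree = build_graphml([{"id": "p1", "likers": ["u1", "u2"], "commenters": ["u1"]}])
    buf = io.BytesIO()
    tree.write(buf, encoding="utf-8", xml_declaration=True)
    print(buf.getvalue().decode("utf-8"))
```

To produce a file for Gephi, pass a path such as `"posts.graphml"` to `tree.write` instead of the in-memory buffer. A user who both likes and comments on a post gets two parallel edges in this sketch.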

High Level Analysis

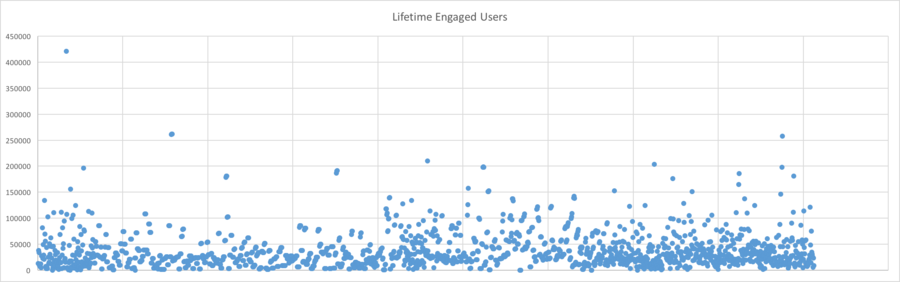

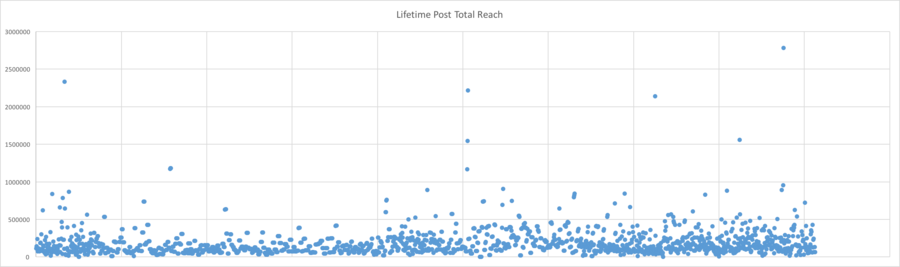

Before diving into the specific data about the users, we performed a general high-level analysis of the number of unique users reached across all the posts from the past 10 months.

As illustrated,
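Counting unique users is a set union over every post's liker and commenter lists. The sketch below is a minimal version of that count. It assumes rows shaped as dicts with hypothetical `likers` and `commenters` lists, and it treats "reached" as "liked or commented", since the crawled CSV does not record users who only viewed a post.

```python
def unique_users(rows):
    """Return (number of unique users, total number of likes and comments).

    Each row is a dict with hypothetical keys `likers` and `commenters`,
    both lists of user IDs.
    """
    reached = set()
    interactions = 0
    for row in rows:
        users = row["likers"] + row["commenters"]
        interactions += len(users)
        reached.update(users)  # a user counted once, however many posts they touch
    return len(reached), interactions
```

Comparing the two numbers gives a rough sense of how concentrated engagement is. A large gap means the same users keep coming back across posts.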

Gephi Analysis

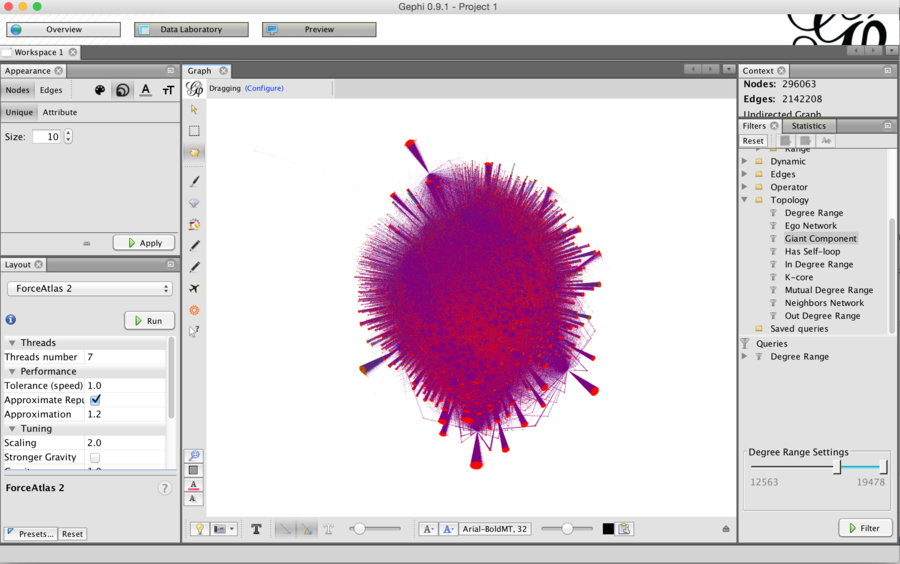

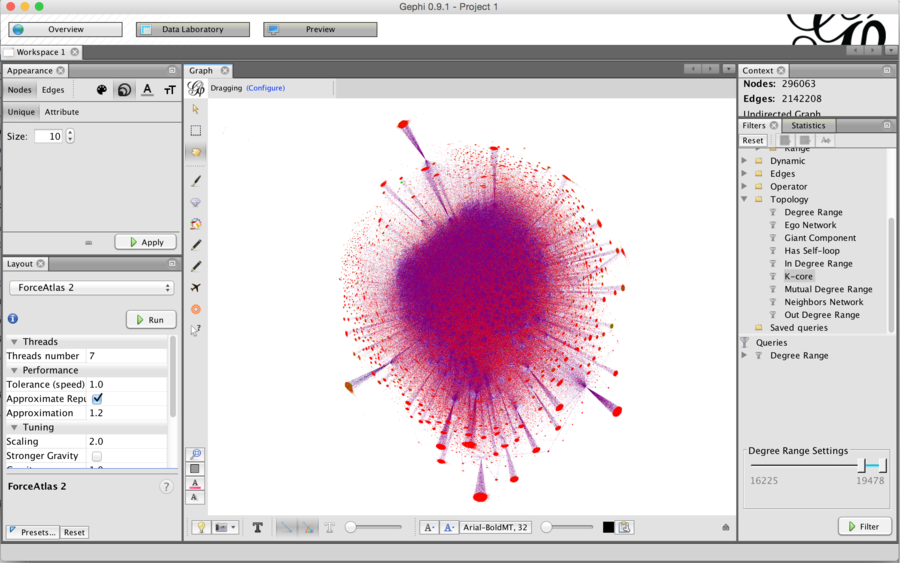

The initial import into Gephi crashed constantly on computers with less than 8 GB of RAM. We eventually used a computer with 16 GB of RAM to import the huge .graphml file. The initial import report showed 296,063 nodes and 2,142,208 edges.

After the file loaded successfully, we ran the ForceAtlas 2 layout algorithm and obtained the graph below.

As expected, the graph is unreadable. Furthermore, even on a high-performance machine, Gephi is extremely slow and painful to work with at this scale.

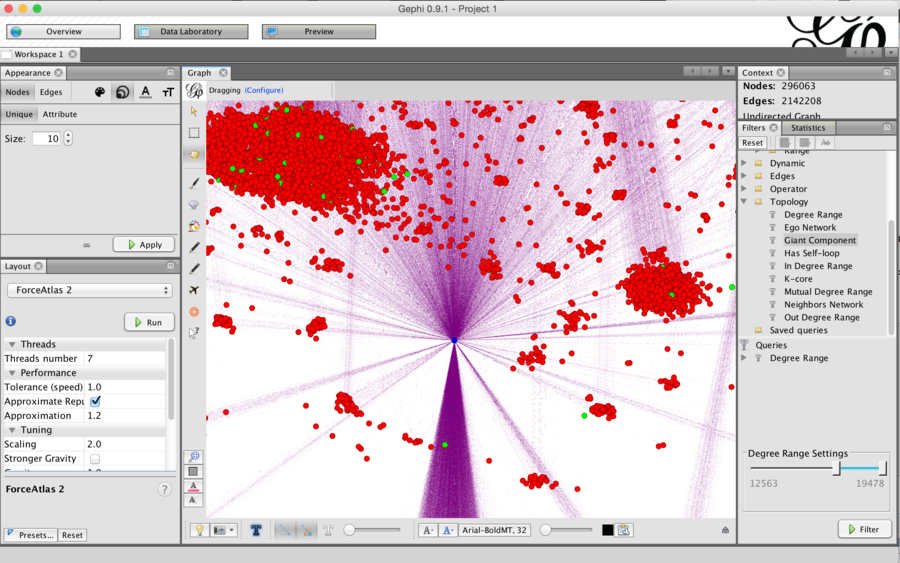

Hence, we filtered the graph by node degree to obtain the condensed version below.

From a high-level view of the graph, we noticed that some clusters (located at the sides of the graph) contain a mixture of commenters (green) and likers (red), with a large proportion of the interactions being likes. Zooming into the graph, we notice that if the post was forced into isolation by the ForceAtlas algorithm:

However, even with the filter applied, Gephi was too laggy to yield concrete findings, so we looked for alternatives to obtain additional insights.
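The degree filter was applied interactively in Gephi. The same idea can be sketched in Python with NetworkX, the library used in the next section. The version below is a minimal one that assumes a simple undirected graph and a hand-picked threshold. The threshold used in Gephi is not recorded here.

```python
import networkx as nx


def degree_filter(graph: nx.Graph, min_degree: int) -> nx.Graph:
    """Keep nodes whose degree in the original graph is at least `min_degree`.

    Degrees are computed once before filtering, so a surviving node may end
    up with a lower degree afterwards (unlike a k-core, which re-checks).
    """
    keep = [node for node, deg in graph.degree() if deg >= min_degree]
    return graph.subgraph(keep).copy()


if __name__ == "__main__":
    g = nx.star_graph(5)  # hub 0 linked to five leaves
    print(sorted(degree_filter(g, 2).nodes))
```

If the goal is a subgraph where every remaining node still meets the threshold, `nx.k_core(graph, k)` repeats the pruning until that holds.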

NetworkX approach

To resolve this issue, we turned to NetworkX to see how we could build the graph programmatically and study the network more effectively.

After studying the code, we noticed an error in the initial CSV that we generated for all the posts (with their respective likers and commenters). This happened because we saved the data through Excel, which limits each cell to 32,767 characters. Each user ID is about 20 characters long, so the liker and commenter lists of posts with well over 1,000 likes or comments were truncated.

Therefore we revised the crawling code that polls the Facebook API. It can be found here.

As before, after ~4 hours of crawling, the resulting data is available here.
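Excel caps a single cell at 32,767 characters. The arithmetic below shows where truncation starts. It assumes fixed-length IDs joined by semicolons. The sample IDs in the table above are 15 to 17 digits long, and the 20-character figure is the estimate from the text. `max_ids_per_cell` and `would_truncate` are hypothetical helper names.

```python
EXCEL_CELL_LIMIT = 32_767  # maximum number of characters an Excel cell holds


def max_ids_per_cell(id_length: int, sep: str = ";") -> int:
    """Largest number of fixed-length IDs whose joined string fits in one cell."""
    # n IDs joined by a separator take n * id_length + (n - 1) * len(sep) characters.
    return (EXCEL_CELL_LIMIT + len(sep)) // (id_length + len(sep))


def would_truncate(user_ids, sep: str = ";") -> bool:
    """True if the joined ID list is longer than one Excel cell allows."""
    return len(sep.join(user_ids)) > EXCEL_CELL_LIMIT


if __name__ == "__main__":
    for length in (17, 20):
        print(length, max_ids_per_cell(length))
```

Under these assumptions, a cell fits roughly 1,560 to 1,820 IDs, so posts past that point lose part of their lists. Python's `csv` reader has its own per-field cap, `csv.field_size_limit()`, which defaults to 131,072 characters. Reading the revised CSV back may require raising that limit for the most popular posts.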

Besides Excel's per-cell limit, we also found an error in how we were constructing the GraphML file. We had used a unimodal approach to plot the network, in which users and posts were modelled as the same kind of node, so the graph did not represent our dataset well.

Hence, we modelled the network as a bipartite graph instead, expecting it to give a better representation of the network. The revised code can be found here. To distinguish likes from comments, we assigned likes a weight of 1 and comments a weight of 2, since a comment represents a "heavier" interaction with the post than simply liking it.
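A minimal NetworkX sketch of this bipartite construction, using the 1/2 weights described above. It assumes rows shaped as dicts with hypothetical `id`, `likers` and `commenters` keys. It also assumes that a user who both likes and comments on the same post gets the summed weight, since the text does not say how the project's code handles that case.

```python
import networkx as nx

LIKE_WEIGHT = 1
COMMENT_WEIGHT = 2


def build_bipartite(rows):
    """Bipartite graph: posts in one node set (bipartite=0), users in the other (bipartite=1).

    `rows` are dicts with hypothetical keys: id, likers, commenters.
    """
    g = nx.Graph()
    for row in rows:
        post = row["id"]
        g.add_node(post, bipartite=0, kind="post")
        for users, weight in ((row["likers"], LIKE_WEIGHT), (row["commenters"], COMMENT_WEIGHT)):
            for user in users:
                g.add_node(user, bipartite=1, kind="user")
                if g.has_edge(user, post):
                    # Assumption: each further interaction with the same post adds its weight.
                    g[user][post]["weight"] += weight
                else:
                    g.add_edge(user, post, weight=weight)
    return g


if __name__ == "__main__":
    g = build_bipartite([{"id": "p1", "likers": ["u1", "u2"], "commenters": ["u1"]}])
    print(sorted(g.edges(data="weight")))
```

`nx.write_graphml(g, "posts_bipartite.graphml")` would then save the graph with its `bipartite`, `kind` and `weight` attributes for Gephi.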

Before running the revised code on all the posts, we first generated a small sample of 5 posts, because we expected the full network to take more than 8 hours to build. The 5-post bipartite GraphML file can be found here.
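One way to cut such a sample from an existing bipartite graph is sketched below with NetworkX. This is not necessarily how the 5-post file was produced. It keeps the chosen posts plus every user linked to them. Which five posts were used is not stated, so `post_ids` is left to the caller.

```python
import networkx as nx


def sample_posts(graph: nx.Graph, post_ids):
    """Induced subgraph of the chosen posts plus every user linked to them.

    Users keep only their edges to the sampled posts.
    """
    nodes = set(post_ids)
    for post in post_ids:
        nodes.update(graph.neighbors(post))
    return graph.subgraph(nodes).copy()


if __name__ == "__main__":
    g = nx.Graph([("p1", "u1"), ("p1", "u2"), ("p2", "u2"), ("p3", "u3")])
    print(sorted(sample_posts(g, ["p1", "p2"]).nodes))
```

Because the subgraph is induced, a user shared by two sampled posts stays connected to both. This sharing is what makes overlapping audiences visible in a small sample.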

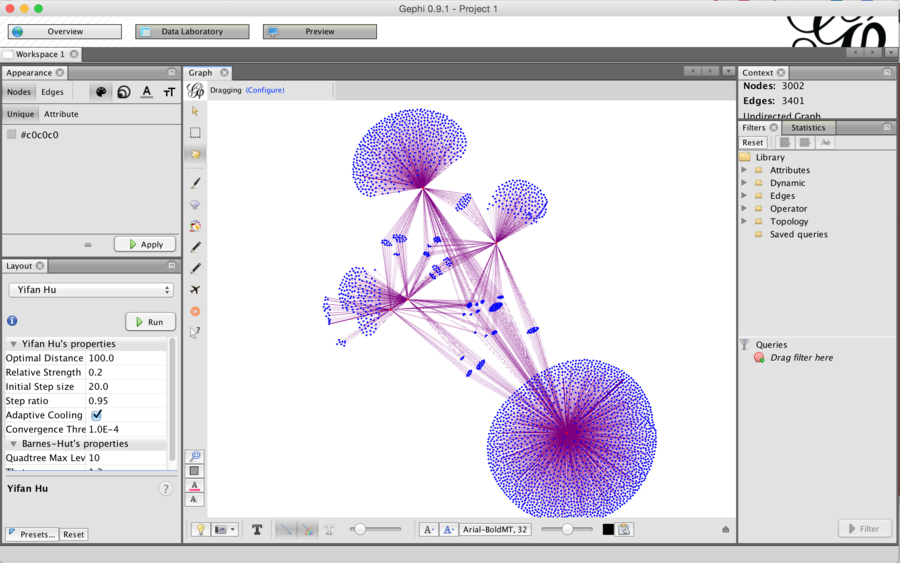

Loading the 5-post file into Gephi and running the Yifan Hu layout algorithm, we get this result:

As shown, users (blue nodes) who are active on multiple posts (red nodes) sit between the large clusters. Each post's popularity also varies, as seen from the size of the cluster around each red node. One particularly interesting observation is that some users interact with all 5 posts, suggesting that these users might be SGAG's biggest fans.
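Such "fans" can be listed directly instead of spotted visually. The sketch below is a minimal NetworkX version. It assumes user nodes carry a hypothetical `kind="user"` attribute and that the graph is a simple (non-multi) bipartite graph. In that kind of graph, a user's unweighted degree equals the number of distinct posts they interacted with.

```python
import networkx as nx


def active_users(graph: nx.Graph, min_posts: int):
    """User nodes linked to at least `min_posts` distinct posts, most active first.

    Ties are broken by node name so the output order is deterministic.
    """
    counts = {
        node: graph.degree(node)
        for node, data in graph.nodes(data=True)
        if data.get("kind") == "user"
    }
    return sorted((u for u, c in counts.items() if c >= min_posts), key=lambda u: (-counts[u], u))


if __name__ == "__main__":
    g = nx.Graph()
    g.add_nodes_from(["p1", "p2"], kind="post")
    g.add_nodes_from(["u1", "u2"], kind="user")
    g.add_edges_from([("u1", "p1"), ("u1", "p2"), ("u2", "p1")])
    print(active_users(g, 2))
```

With the 5-post sample, `active_users(g, 5)` would return the users who interacted with every post.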

We aim to conduct a more in-depth analysis once the full network is constructed (an ~8-hour wait).

Gephi Analysis Part 2

The GraphML file (~320 MB) for the entire network, generated with the new code, can be found here.

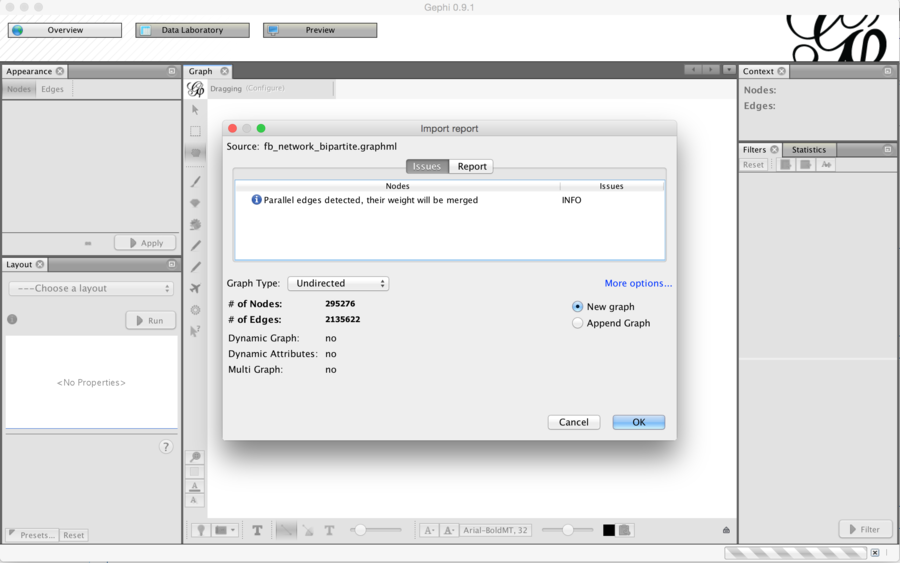

Once loaded, the graph has 295,276 nodes and 2,135,622 edges.

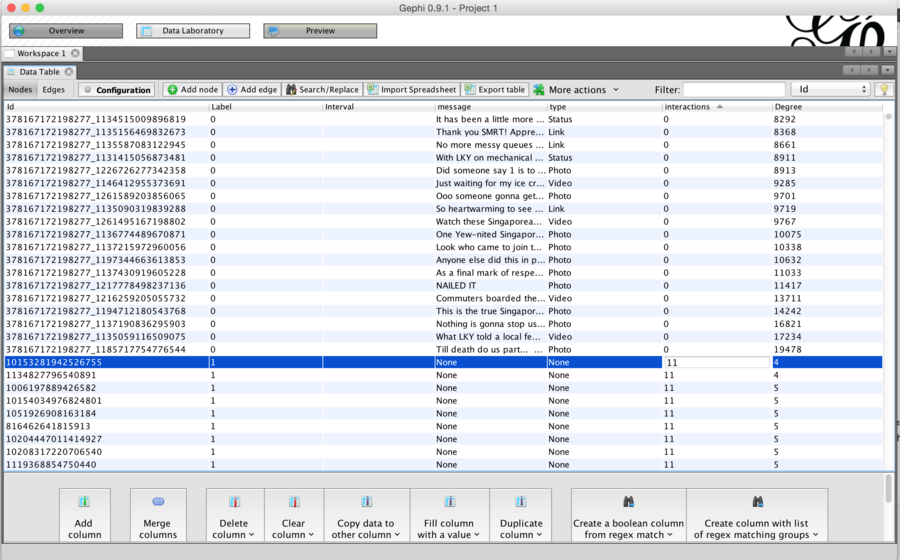

Because SGAG's Facebook network is so large, the Gephi analysis remained very laggy despite the changes we made to our code to optimise the graph. Gephi did not even respond when we tried to apply filters, so we instead edited the data rows individually in the Data Laboratory to shrink the dataset.

In this process, we filtered the dataset down to the 10 posts with the highest degree and the 10 posts with the lowest degree.
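The same selection can be scripted rather than done by hand in the Data Laboratory. The sketch below is a minimal NetworkX version. It assumes post nodes carry a hypothetical `kind="post"` attribute. It returns the top-k and bottom-k posts by degree, plus the subgraph containing those posts and their users. If there are fewer than 2k posts, the two lists overlap.

```python
import networkx as nx


def extreme_posts(graph: nx.Graph, k: int = 10):
    """Return (top-k posts, bottom-k posts, filtered subgraph), ranked by degree.

    Post nodes are those with kind == "post"; ties keep insertion order.
    """
    posts = [n for n, d in graph.nodes(data=True) if d.get("kind") == "post"]
    ranked = sorted(posts, key=lambda p: graph.degree(p), reverse=True)
    top, bottom = ranked[:k], ranked[-k:]
    nodes = set(top) | set(bottom)
    for post in list(nodes):
        nodes.update(graph.neighbors(post))  # keep each selected post's users
    return top, bottom, graph.subgraph(nodes).copy()
```

Working on the subgraph instead of the full ~2.1 million-edge network is what makes the layout step manageable.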

Limitations and Future Work

While APIs such as the Facebook and Twitter APIs let us retrieve specific data about each post, such as its content and attached 'tags', the resulting volume of data can be too much for regular computers to handle. This is especially true when analysing posts from multiple categories, where the posts from all categories need to be analysed together. For future work, we could use algorithms such as XGBoost or logistic regression to predict the number of unique visitors per post, trained on existing posts and their respective unique visitor counts.
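Predicting a count such as unique visitors is a regression task. Logistic regression outputs class probabilities, so it would suit a reframed question such as "will this post exceed N visitors?". The sketch below uses a deliberately simple stand-in for XGBoost: ordinary least squares on a single feature. It is not the planned method. The feature (for example, likes per post) and any visitor counts are hypothetical, since unique-visitor data is not part of the dataset described here.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx  # requires at least two distinct x values
    return mean_y - slope * mean_x, slope


def predict(model, x):
    """Apply a fitted (intercept, slope) model to a new feature value."""
    intercept, slope = model
    return intercept + slope * x
```

A gradient-boosted model such as XGBoost would replace `fit_line` with a model that handles many features and non-linear effects. The train-on-existing-posts, predict-on-new-posts workflow stays the same.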