Twitter Analytics: Documentation

<font size =3 face=Georgia >

<p>Data is collected from Twitter with Python and stored in an SQLite database. Several keywords were tried, such as “#ippt”, “#gaza” and “#MH17”. However, the data collected for “#ippt” and “#MH17” is deemed unrepresentative because it is seasonal: tweet volume spikes sharply over a short period of time. On the other hand, the “#gaza” keyword returns many tweets within a short period of time, which makes for better data. Even so, more data may need to be gathered, over a longer time frame and at a suitable granularity, to identify a suitable forecasting approach.</p>


<p>Hence, the data chosen are iPhone 6 and Samsung tweets from 6 October 2014, 12:00 to 00:00.</p>
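The following minimal sketch makes "granularity" concrete. It counts tweets per fixed time bucket from their `Created_at` values. A seasonal keyword shows up as one or two very full buckets, while a steadier keyword spreads across many. The sketch assumes `Created_at` follows Twitter's classic timestamp format (e.g. `Mon Oct 06 12:00:00 +0000 2014`). The helper `count_per_bucket` and the sample timestamps are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta

# Assumed format of Twitter's classic created_at field, e.g. "Mon Oct 06 12:00:00 +0000 2014"
TWITTER_TIME_FORMAT = "%a %b %d %H:%M:%S %z %Y"


def count_per_bucket(created_at_values, bucket_minutes=60):
    """Count tweets per fixed-width time bucket (hypothetical helper).

    A smaller bucket_minutes gives finer granularity. Buckets are aligned to
    midnight of each timestamp's own day and keyed by their start time.
    """
    width = timedelta(minutes=bucket_minutes)
    counts = Counter()
    for raw in created_at_values:
        ts = datetime.strptime(raw, TWITTER_TIME_FORMAT)
        day_start = ts.replace(hour=0, minute=0, second=0, microsecond=0)
        # Floor the timestamp to the start of its bucket
        bucket_start = day_start + ((ts - day_start) // width) * width
        counts[bucket_start] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    sample = [
        "Mon Oct 06 12:05:00 +0000 2014",
        "Mon Oct 06 12:40:00 +0000 2014",
        "Mon Oct 06 13:10:00 +0000 2014",
    ]
    for start, n in count_per_bucket(sample).items():
        print(start.isoformat(), n)
```

Re-running the same data with `bucket_minutes=15` or `bucket_minutes=240` is a cheap way to compare how spiky a keyword looks at different granularities before choosing a forecasting approach.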


<p>Because of the processing-speed limitations of R, this project will analyze only 10,000 rows of data. More data could be analyzed if time were not a constraint on the project.

Various attributes are collected from the gathered data; this project focuses on the following:</p>

* Id
* Created_at
* In_reply_to
* In_reply_to_status_id
* In_reply_to_user_id
* Iso_language
* Source
* Text
* User_id
* User_screen_name
* Search_id
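The sketch below shows one way the listed attributes could be stored, and the analysis capped at 10,000 rows, using Python's built-in `sqlite3` module. Several details are assumptions for illustration, not the project's actual schema: the table name `tweets`, the lowercase column names, the all-`TEXT` column types and the sample row.

```python
import sqlite3

# Hypothetical column names mirroring the attributes listed above
COLUMNS = (
    "id", "created_at", "in_reply_to", "in_reply_to_status_id",
    "in_reply_to_user_id", "iso_language", "source", "text",
    "user_id", "user_screen_name", "search_id",
)

# Every column stored as TEXT for simplicity; the tweet id is the primary key
SCHEMA = "CREATE TABLE IF NOT EXISTS tweets ({})".format(
    ", ".join(f"{col} TEXT PRIMARY KEY" if col == "id" else f"{col} TEXT"
              for col in COLUMNS)
)


def store_tweets(conn, rows):
    """Insert tweet dicts; a tweet id that is already stored is silently skipped."""
    placeholders = ", ".join("?" for _ in COLUMNS)
    sql = f"INSERT OR IGNORE INTO tweets ({', '.join(COLUMNS)}) VALUES ({placeholders})"
    conn.executemany(sql, [tuple(row.get(col) for col in COLUMNS) for row in rows])
    conn.commit()


def load_sample(conn, limit=10_000):
    """Return up to `limit` (id, created_at, text) rows in insertion order."""
    cur = conn.execute(
        "SELECT id, created_at, text FROM tweets ORDER BY rowid LIMIT ?", (limit,)
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # a file path such as "tweets.db" would persist
    conn.execute(SCHEMA)
    store_tweets(conn, [
        {"id": "1", "created_at": "Mon Oct 06 12:05:00 +0000 2014",
         "text": "new iphone6 looks great", "iso_language": "en"},
    ])
    print(load_sample(conn))
```

`INSERT OR IGNORE` keeps repeated runs of a collection script from duplicating tweets that were already stored.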

<h3> Data Cleansing Methodology </h3>

Following data exploration, the following data-cleansing steps are proposed:

* Select English-language tweets only
* Remove all links
* Remove retweet entries
* Convert all letters to lowercase
* Remove punctuation
* Remove numbers
* Define stopwords: the English stopword library plus additional words
* Stem the documents
* Create a document-term matrix
* Remove sparse terms that do not help to distinguish the documents
** Sparse terms are terms that occur in only very few documents. Removing them normally reduces the matrix dramatically without losing significant relations inherent in the matrix.
** In addition to the package's sparse-term removal, further elimination is done by:
*** Finding the sum of words in each document
*** Removing all documents without words
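The steps above can be sketched end to end in Python using only the standard library. This is an illustrative analogue, not the project's implementation, which relies on a package for the document-term matrix and sparse-term removal. The stopword sets are tiny samples, and `crude_stem` is a toy suffix stripper standing in for a real stemmer such as Porter's. Retweets are assumed to start with `RT @`. All function names and sample tweets are hypothetical.

```python
import re
from collections import Counter

# Tiny sample stopword list; a real run would use a full English list
STOPWORDS = {"the", "a", "an", "and", "is", "it", "in", "my", "this", "to", "of"}
# Hypothetical project-specific additions (e.g. the search keywords themselves)
EXTRA_STOPWORDS = {"iphone", "samsung"}

LINK_RE = re.compile(r"https?://\S+")
RETWEET_RE = re.compile(r"^RT @\w+")  # assumption: retweets start with "RT @user"


def crude_stem(word):
    """Toy suffix stripper standing in for a real stemmer (e.g. Porter)."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def clean(tweets):
    """Turn (language, text) pairs into token lists following the steps above."""
    stop = STOPWORDS | EXTRA_STOPWORDS
    docs = []
    for lang, text in tweets:
        if lang != "en" or RETWEET_RE.match(text):  # English only, no retweets
            continue
        text = LINK_RE.sub(" ", text).lower()       # remove links, lowercase
        text = re.sub(r"[^\w\s]", " ", text)        # remove punctuation
        text = re.sub(r"\d+", " ", text)            # remove numbers
        # drop stopwords, then stem what is left
        docs.append([crude_stem(w) for w in text.split() if w not in stop])
    return docs


def document_term_matrix(docs):
    """Return (terms, rows) where rows[i][j] counts terms[j] in document i."""
    terms = sorted({t for doc in docs for t in doc})
    rows = []
    for doc in docs:
        counts = Counter(doc)
        rows.append([counts[t] for t in terms])
    return terms, rows


def remove_sparse_terms(terms, rows, sparse=0.99):
    """Keep terms whose sparsity (share of documents lacking them) is below `sparse`."""
    if not rows:
        return terms, rows
    n_docs = len(rows)
    keep = [j for j in range(len(terms))
            if sum(1 for row in rows if row[j] == 0) / n_docs < sparse]
    return [terms[j] for j in keep], [[row[j] for j in keep] for row in rows]


def drop_empty_documents(rows):
    """Remove documents whose word sum is zero."""
    return [row for row in rows if sum(row) > 0]


SAMPLE = [
    ("en", "Loving my new #iPhone6! http://t.co/abc"),
    ("en", "RT @someone: iPhone6 bends in pocket"),
    ("fr", "J'adore mon Samsung"),
    ("en", "Samsung Galaxy battery lasts 2 days, loving it"),
    ("en", "the iPhone6 is in my pocket"),
    ("en", "Is this the iPhone6? http://t.co/xyz"),
]

if __name__ == "__main__":
    docs = clean(SAMPLE)
    terms, rows = document_term_matrix(docs)
    terms, rows = remove_sparse_terms(terms, rows, sparse=0.7)
    print(terms, drop_empty_documents(rows))  # prints ['lov'] [[1], [1]]
```

Removing sparse terms can leave some documents with no words at all, which is why the empty-document check runs last.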

<h3> Data Exploration Findings </h3>

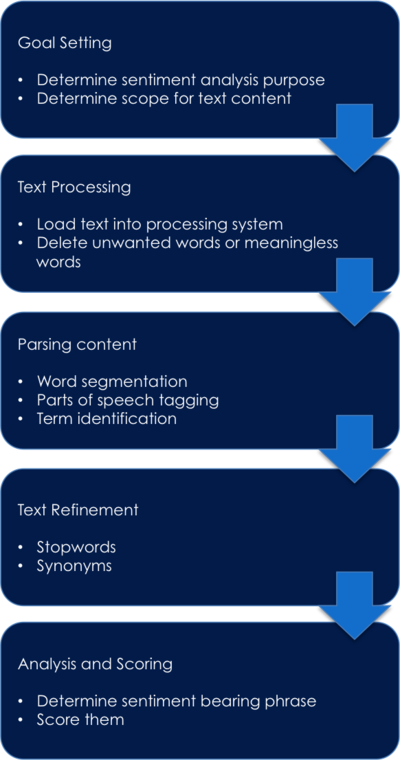

==<div style="background: #000033; padding: 13px; font-weight: bold; text-align:center; line-height: 0.3em; text-indent: 20px;font-size:26px; font-family:Britannic Bold"><font color= #ffffff>Sentiment Analysis Approach</font></div>==

<div style="margin:20px; padding: 10px; background: #ffffff; font-family: Trebuchet MS, sans-serif; font-size: 95%;-webkit-border-radius: 15px;-webkit-box-shadow: 7px 4px 14px rgba(176, 155, 121, 0.96); -moz-box-shadow: 7px 4px 14px rgba(176, 155, 121, 0.96);box-shadow: 7px 4px 14px rgba(176, 155, 121, 0.96);">

<font size =3 face=Georgia >

Latest revision as of 15:14, 12 October 2014


Tools

Python

Python is a widely used high-level programming language that emphasizes code readability and scalability. Its syntax allows programmers to write programs in fewer lines of code than C. Python is also well suited to text mining, web scraping, file manipulation and XML processing. Features such as generators make it easier to process a large number of files than in many other languages.
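As a sketch of the generator point, the function below yields one line at a time from a list of files. Only one line is held in memory at a time, however many files are processed. The helper names, the keyword and the temporary files in the usage example are hypothetical.

```python
import os
import tempfile
from typing import Iterable, Iterator


def iter_lines(paths: Iterable[str]) -> Iterator[str]:
    """Lazily yield lines (without trailing newline) from each file in turn."""
    for path in paths:
        with open(path, encoding="utf-8") as handle:
            for line in handle:
                yield line.rstrip("\n")


def count_matching(paths: Iterable[str], keyword: str) -> int:
    """Count lines containing keyword (case-insensitive) without loading whole files."""
    keyword = keyword.lower()
    return sum(1 for line in iter_lines(paths) if keyword in line.lower())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        paths = []
        for i, content in enumerate(["#gaza update\nother\n", "news on #Gaza\n"]):
            path = os.path.join(tmp, f"tweets_{i}.txt")
            with open(path, "w", encoding="utf-8") as f:
                f.write(content)
            paths.append(path)
        print(count_matching(paths, "#gaza"))  # prints 2
```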

SQLite

SQLite is a free, in-process library that implements a portable, serverless database engine: it reads and writes directly to ordinary disk files. It works well with both R and Python and is scalable enough for the needs of the project and the client, more so than a plain CSV file. Moreover, data security is easier to monitor than with a cloud solution.

R

R is an open-source programming language developed by statisticians and researchers for statistical analysis. R is also compatible with other tools such as SAS, SPSS, Oracle and SQLite. Available packages meet the client's requirements, such as sentiment analysis and time-series forecasting.

NodeXL

NodeXL is an extensible toolkit for network overview and exploration, installed as an add-in for the Microsoft Excel spreadsheet software. It combines analysis and visualization functions with the familiar spreadsheet layout for data handling. NodeXL is explored to gain a better understanding of the open-source tools available on the market for social network analysis.
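A network tool works from an edge list, and one can be prepared from the collected attributes. The sketch below assumes each reply links `User_id` (the replier) to `In_reply_to_user_id` (the account replied to). It builds a weighted directed edge list and counts how many distinct users reply to each account. It is not NodeXL's own import format. The function names and sample rows are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple

Row = Tuple[str, Optional[str]]  # (user_id, in_reply_to_user_id)


def reply_edges(rows: Iterable[Row]) -> Counter:
    """Weighted directed edges: (replier, replied_to) -> number of replies."""
    edges = Counter()
    for user_id, reply_to in rows:
        if reply_to and reply_to != user_id:  # skip non-replies and self-replies
            edges[(user_id, reply_to)] += 1
    return edges


def in_degree(edges: Counter) -> Dict[str, int]:
    """Number of distinct users who replied to each account."""
    repliers = defaultdict(set)
    for src, dst in edges:
        repliers[dst].add(src)
    return {user: len(srcs) for user, srcs in repliers.items()}


if __name__ == "__main__":
    sample = [("u1", "u2"), ("u3", "u2"), ("u1", "u2"), ("u2", None), ("u4", "u4")]
    edges = reply_edges(sample)
    for (src, dst), weight in edges.items():
        print(src, dst, weight)
    print(in_degree(edges))
```

Dropping self-replies is a design choice: they often come from threads a user writes to themselves and add loops that say little about interaction between users.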