Three horrible guys Data


Revision as of 01:50, 22 November 2019

Contents

- 1. Geometry and Coordinate Reference System

- 2. Extracting each POI from the different shp files

- 3. Formation of polygons for each branch to align to ppt slides' trade area

- 4. Creation of competitors' POI

- 5. Aggregation of count of each POI to each trade area (Script)

- 7. Extracting Sales Data

- Comments

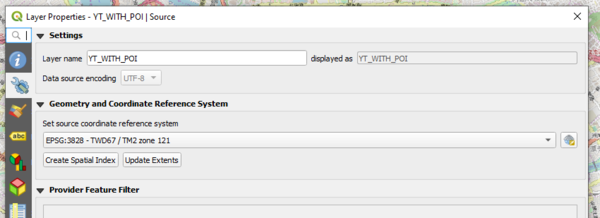

1. Geometry and Coordinate Reference System

Before any data transformation is performed, all data layers must share the coordinate reference system used for Taiwan so that they use the same unit of measurement. Hence, all data layers use the EPSG:3828 reference system.

Information on the EPSG:3828 reference system: https://epsg.io/3828

2. Extracting each POI from the different shp files

We are given the following POIs to extract:

- ATM

- Bank

- Bar or Pub

- Bookstore

- Bowling Centre

- Bus Station

- Business Facility

- Cinema

- Clothing Store

- Coffee Shop

- Commuter Rail Station

- Consumer Electronics Store

- Convenience Store

- Department Store

- Government Office

- Grocery Store

- Higher Education

- Hospital

- Hotel

- Medical Service

- Pharmacy

- Residential Area/ Building

- Restaurant

- School

- Shopping

- Sports Centre

- Sports Complex

- Train Station

- Night Life

- Industrial Zone

- Speciality Store

- Performing Arts

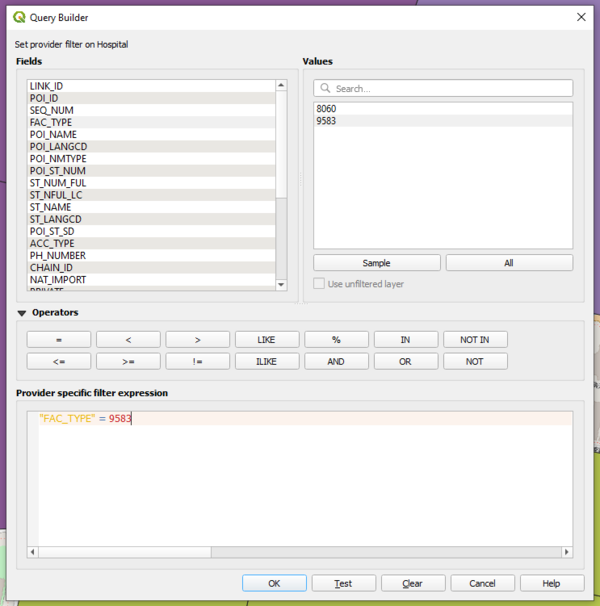

These POIs are extracted by finding their respective Facility Type codes in the shp files provided.

For example, features with Facility Type 9583 were selected using the filter function in QGIS and exported as a layer to the geopackage.

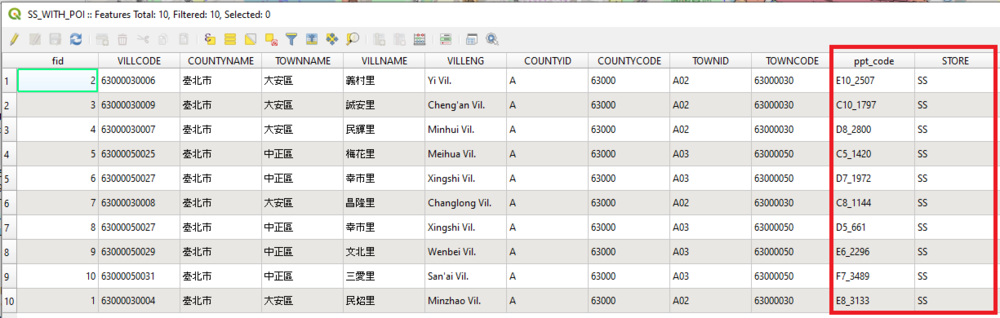

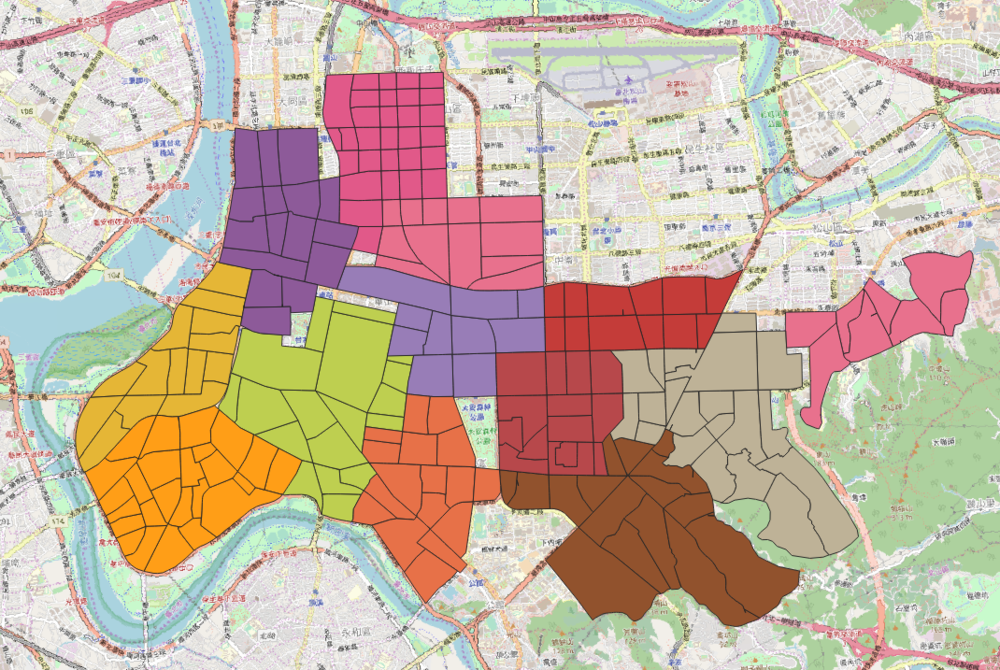
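The same filter-and-export step can also be scripted rather than done through the QGIS interface. The sketch below is not the workflow used above: it only builds an `ogr2ogr` command line that copies one Facility Type into a geopackage layer. The field name `FAC_TYPE`, the file names and the layer name are hypothetical placeholders, and the field is assumed to be numeric.

```python
def build_extract_command(shp_path, gpkg_path, layer_name, facility_type,
                          field="FAC_TYPE"):
    """Return an ogr2ogr argument list that copies one facility type
    from a shapefile into a named GeoPackage layer.

    `field` is a hypothetical attribute name; check the shapefile's
    attribute table for the real one.
    """
    return [
        "ogr2ogr",
        "-f", "GPKG",                                 # output driver
        "-append",                                    # add to the geopackage if it exists
        "-where", f"{field} = {int(facility_type)}",  # attribute filter
        "-nln", layer_name,                           # name of the output layer
        gpkg_path,                                    # destination
        shp_path,                                     # source
    ]


# Hypothetical example: export Facility Type 9583 as its own layer.
command = build_extract_command("poi.shp", "Taiwan_poi.gpkg", "poi_9583", 9583)
print(" ".join(command))
```

The argument list can be passed to `subprocess.run(command, check=True)` from a shell where GDAL is installed, such as the OSGeo4W Shell.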

3. Formation of polygons for each branch to align to ppt slides' trade area

As the trade areas are predefined in the ppt slides, we had to manually digitise the trade area for each of the outlets. The QGIS tools used in the process were Split Features and Merge Features, along with our artistic skills. We also added the store codes and area codes given in the ppt slides so that these polygons can be identified easily.

Below are the polygons generated for all of our trade areas.

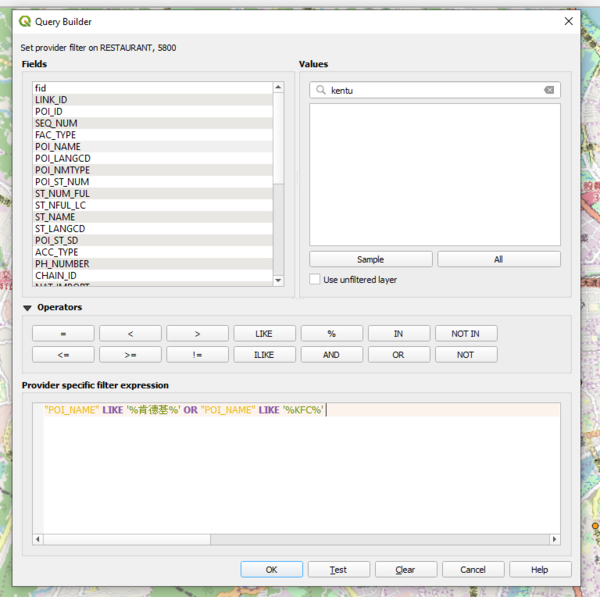

4. Creation of competitors' POI

Five competitors are identified in the PowerPoint slides: Domino's Pizza, Napolean, McDonald's, Kentucky Fried Chicken (KFC) and MOS Burger. These data points are crucial to our analysis as they may be correlated with the sales data. Hence, the competitors' store locations must be extracted from the “Restaurant” SHP file. After extraction, they are exported as layers in the geopackage to be used for aggregation.

For example, KFC is filtered from “Restaurant” as shown below:

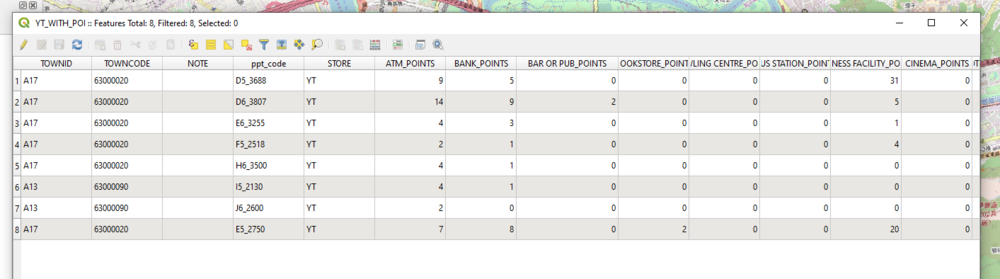

5. Aggregation of count of each POI to each trade area (Script)

After all the POIs have been extracted from their respective SHP files, the number of points for each POI must be aggregated per trade area using the “Count Points in Polygon” tool in QGIS.

However, the batch processing tool is unable to append each newly created column to an existing GeoPackage. Hence, a Python script was written and run in QGIS’s Python Console. It mainly uses the “qgis:countpointsinpolygon” function. An example of the script is shown below:

Learn more about the function here: https://docs.qgis.org/2.8/en/docs/user_manual/processing_algs/qgis/vector_analysis_tools/countpointsinpolygon.html
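As a rough illustration of how such a script could accumulate one count column per POI, the sketch below feeds the output of each “qgis:countpointsinpolygon” run into the next run as the polygon layer. It is a minimal sketch, not the script used in this project. The parameter names (`POLYGONS`, `POINTS`, `WEIGHT`, `CLASSFIELD`, `FIELD`, `OUTPUT`) and the `"memory:"` output are assumptions based on the QGIS 3 Processing framework, and the runner is passed in as an argument so that the loop does not depend on a QGIS installation.

```python
def count_pois_per_trade_area(trade_areas, poi_layers, run):
    """Add one count column per POI layer to the trade-area polygons.

    trade_areas: polygon layer (or path) holding the trade areas
    poi_layers:  dict mapping a new column name to a point layer (or path)
    run:         callable with the same signature as processing.run
    """
    current = trade_areas
    for column, points in poi_layers.items():
        result = run(
            "qgis:countpointsinpolygon",
            {
                "POLYGONS": current,   # polygons carrying the columns added so far
                "POINTS": points,      # POI layer to count
                "WEIGHT": None,
                "CLASSFIELD": None,
                "FIELD": column,       # name of the new count column
                "OUTPUT": "memory:",   # assumed: temporary in-memory layer
            },
        )
        # Chain the output so the next POI count is added to the same layer.
        current = result["OUTPUT"]
    return current


# In the QGIS Python Console (layer variables are hypothetical):
#   import processing
#   merged = count_pois_per_trade_area(areas, {"ATM": atm, "Bank": bank}, processing.run)
```

Chaining the output this way keeps all count columns on one layer, which can then be saved once instead of appending a column to the GeoPackage after every run.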

After the POIs have been aggregated into each trade area, the screenshot below shows the columns for a particular trade area.

Since each set of aggregated POIs is stored in its own geopackage, we need a way to merge these geopackages into one. Fortunately, the OSGeo4W Shell lets us run shell commands to merge them.

First, open the OSGeo4W Shell and cd to the directory that has the master geopackage (Taiwan_stores0x.gpkg) we want to merge into.

cd C:\Users\.......\Project\data\GeoPackage

Because the layer in every geopackage is named Taiwan_stores37, each layer is first renamed after its respective area, using the file name of its geopackage. The test folder contains all the geopackages to be merged.

for %f in (./test/*.gpkg); do ogrinfo ./test/%f -sql "ALTER TABLE Taiwan_stores37 RENAME TO %~nf"

Lastly, the command below appends the layers of every geopackage in the test folder to Taiwan_stores0x.gpkg.

for %f in (./test/*.gpkg); do ogr2ogr -f "format" -append ./Taiwan_stores0x.gpkg ./test/%f

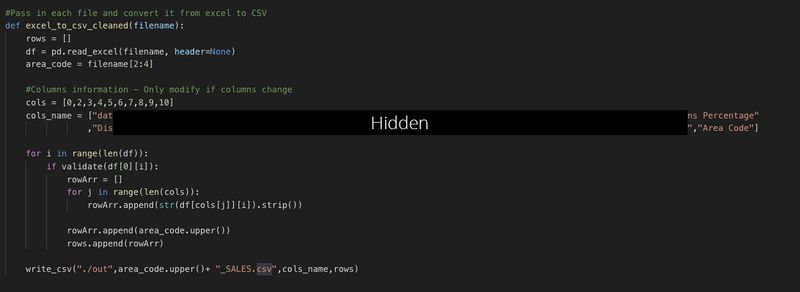
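The rename-then-append steps can also be driven from Python, which avoids shell-specific loop syntax. The sketch below is an alternative under stated assumptions, not the commands used above: it builds the same `ogrinfo` and `ogr2ogr` calls for a list of geopackages, and it assumes the output driver is GPKG. The file name `test/Taipei.gpkg` is a hypothetical placeholder.

```python
import subprocess
from pathlib import Path


def build_merge_commands(master_gpkg, gpkg_paths, old_layer="Taiwan_stores37"):
    """Build the rename and append commands for each geopackage.

    Every layer called `old_layer` is renamed to the file name of its
    geopackage (without extension) and then appended to `master_gpkg`.
    """
    commands = []
    for path in gpkg_paths:
        new_layer = Path(path).stem  # e.g. "test/Taipei.gpkg" -> "Taipei"
        commands.append(
            ["ogrinfo", path, "-sql",
             f"ALTER TABLE {old_layer} RENAME TO {new_layer}"]
        )
        commands.append(["ogr2ogr", "-f", "GPKG", "-append", master_gpkg, path])
    return commands


def run_commands(commands):
    """Run each command in order and stop at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)


# Hypothetical example: print the commands instead of running them.
for command in build_merge_commands("Taiwan_stores0x.gpkg", ["test/Taipei.gpkg"]):
    print(" ".join(command))
```

Building the commands separately from running them makes it easy to inspect them first. `run_commands` needs to be called from an environment where the GDAL tools are on the path, such as the OSGeo4W Shell.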

7. Extracting Sales Data

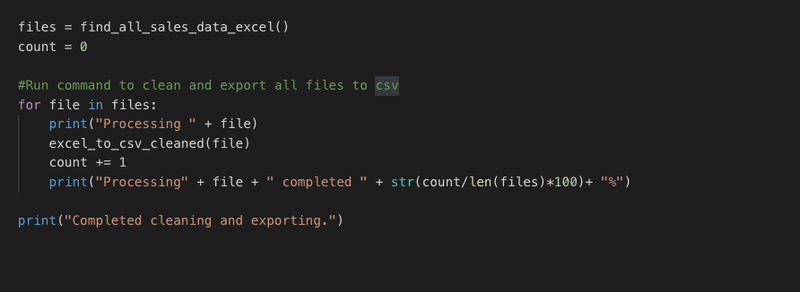

We obtained raw sales data generated by the company's sales system. It was provided to us in a Report format that could not be imported directly into QGIS. There were reports for more than 200 outlets, and it would have been impractical to process them manually one by one. Therefore, we wrote 2 scripts that extract the key information from a Report and automate the output for the whole directory.

Although the report could not be imported into QGIS directly, each report followed a consistent pattern that allowed us to parse it easily and obtain the key information. This information is written to two types of output: Daily Sales Data and Regional Sales Data for a Year.

Both scripts were written in Python. For the Daily Sales Data, we found that valid rows have a date in the leftmost column, so we made use of this pattern to export the daily sales data into a separate CSV. In the second, similar script, we identified that once a certain word is encountered in the first column, all rows after it contain Regional Sales data, and we made use of that fact to produce a different output.
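The two row-selection rules described above can be sketched as follows. This is a minimal illustration rather than the project's scripts: the date format `%Y/%m/%d` and the marker word `Region` are hypothetical, since the actual report layout is not shown here, and the rows are assumed to be lists of strings such as those produced by `csv.reader`.

```python
from datetime import datetime


def is_date(text, date_format="%Y/%m/%d"):
    """Return True if `text` parses as a date in the assumed format."""
    try:
        datetime.strptime(text.strip(), date_format)
        return True
    except ValueError:
        return False


def extract_daily_rows(rows, date_format="%Y/%m/%d"):
    """Keep only the rows whose first column is a date."""
    return [row for row in rows if row and is_date(row[0], date_format)]


def extract_regional_rows(rows, marker="Region"):
    """Keep the non-empty rows that come after the row whose first
    column equals `marker` (a hypothetical marker word)."""
    found = False
    regional = []
    for row in rows:
        if found:
            if any(cell.strip() for cell in row):
                regional.append(row)
        elif row and row[0].strip() == marker:
            found = True
    return regional


# Hypothetical report content.
report = [
    ["Sales Report", ""],
    ["2019/01/01", "100"],
    ["Subtotal", "100"],
    ["2019/01/02", "150"],
    ["Region", ""],
    ["North", "250"],
    [],
    ["South", "0"],
]
print(extract_daily_rows(report))
print(extract_regional_rows(report))
```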

This method is then called in both scripts so that all the Reports in the directory are processed and each is output as a separate CSV in another directory. This allowed us to easily distribute the Regional Sales Data to the other groups, who were working on different regions.
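A directory-level driver of this kind could look like the sketch below. It is an illustration under assumptions, not the method used in the scripts: it assumes the Reports are CSV-like text files matched by `*.csv`, and `extract` stands for any function that takes the rows of one Report and returns the rows to keep.

```python
import csv
from pathlib import Path


def process_directory(source_dir, output_dir, extract, pattern="*.csv"):
    """Apply `extract` to every report in `source_dir` and write the
    result to a file of the same name in `output_dir`.

    Returns the list of files written.
    """
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for report in sorted(Path(source_dir).glob(pattern)):
        # Read the whole report as a list of rows.
        with report.open(newline="", encoding="utf-8") as handle:
            rows = list(csv.reader(handle))
        # Write only the rows selected by `extract`.
        target = out / report.name
        with target.open("w", newline="", encoding="utf-8") as handle:
            csv.writer(handle).writerows(extract(rows))
        written.append(target)
    return written
```

Passing the extraction function as an argument lets the same driver serve both outputs, with one call per script and a different output directory for each.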

Comments

Feel free to leave comments / suggestions!