IS428 AY2019-20T1 Assign Kelvin Chia Sen Wei

Contents

- 1 MC1: Problem & Motivation

- 2 Dataset Analysis & Transformation Process

- 3 Dataset Import Structure & Process

- 4 Interactive Visualization

- 5 Observations

- 5.1 Q1: Emergency responders will base their initial response on the earthquake shake map. Use visual analytics to determine how their response should change based on damage reports from citizens on the ground. How would you prioritize neighborhoods for response? Which parts of the city are hardest hit?

- 5.2 Q2: Use visual analytics to show uncertainty in the data. Compare the reliability of neighborhood reports. Which neighborhoods are providing reliable reports? Provide a rationale for your response.

- 5.3 Q3: How do conditions change over time? How does uncertainty in data change over time? Describe the key changes you see.

- 6 References

- 7 Comments

MC1: Problem & Motivation

St. Himark has been hit by an earthquake, leaving officials scrambling to determine the extent of the damage and dispatch limited resources to the areas in most need. They quickly receive seismic readings and use those for an initial deployment but realize they need more information to make sure they have a realistic understanding of the true conditions throughout the city. In a prescient move of community engagement, the city had released a new damage reporting mobile application shortly before the earthquake. This app allows citizens to provide more timely information to the city to help them understand damage and prioritize their response. In this mini-challenge, use app responses in conjunction with shake maps of the earthquake strength to identify areas of concern and advise emergency planners.

With emergency services stretched thin, officials are relying on citizens to provide them with much needed information about the effects of the quake to help focus recovery efforts.

By combining seismic readings of the quake, responses from the app, and background knowledge of the city, help the city triage their efforts for rescue and recovery.

Tasks and Questions:

- Emergency responders will base their initial response on the earthquake shake map. Use visual analytics to determine how their response should change based on damage reports from citizens on the ground. How would you prioritize neighborhoods for response? Which parts of the city are hardest hit? Limit your response to 1000 words and 10 images.

- Use visual analytics to show uncertainty in the data. Compare the reliability of neighborhood reports. Which neighborhoods are providing reliable reports? Provide a rationale for your response. Limit your response to 1000 words and 10 images.

- How do conditions change over time? How does uncertainty in data change over time? Describe the key changes you see. Limit your response to 500 words and 8 images.

Dataset Analysis & Transformation Process

Some of the given data had to be processed before it could support accurate display and analysis.

Data Manipulation for given dataset: mc1-reports-data.csv

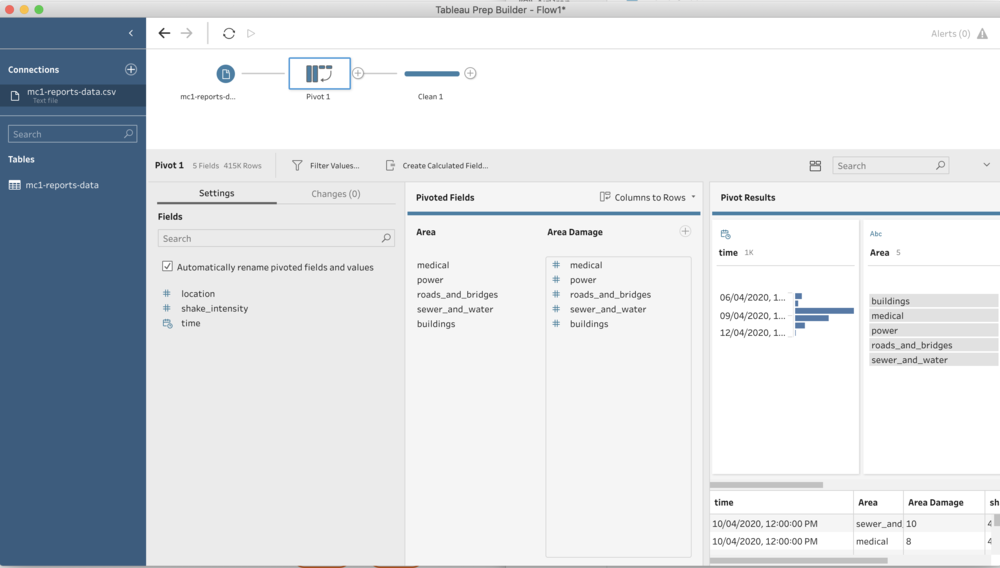

Pivoting for damage categories

Issue: The damage categories are stored as separate columns. Aggregating them gives a more concise dataset and allows them to be displayed as a filter.

Solution: The Medical, Power, Road_And_Bridges, Sewer_And_Water and Buildings columns are pivoted into rows so that the category can be used as a filter on the Tableau dashboard.

These columns are pivoted in Tableau Prep Builder into two fields: "Area", which holds the category name, and "Area Damage", which holds its value.
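For readers without Tableau Prep Builder, the pivot can be sketched in plain Python. This is a minimal sketch, not the Prep flow itself: it assumes each report is a dict keyed by the CSV header, and the exact header spelling of the damage columns in mc1-reports-data.csv is an assumption.

```python
# Damage columns as named in this write-up; the exact header spelling in
# mc1-reports-data.csv is an assumption and may differ in case.
DAMAGE_COLUMNS = ["Medical", "Power", "Road_And_Bridges", "Sewer_And_Water", "Buildings"]


def pivot_damage(rows):
    """Pivot wide report rows into long rows with "Area" and "Area Damage" fields."""
    long_rows = []
    for row in rows:
        # Non-damage columns (time, location, shake intensity, ...) are copied
        # unchanged onto every pivoted row.
        kept = {key: value for key, value in row.items() if key not in DAMAGE_COLUMNS}
        for category in DAMAGE_COLUMNS:
            long_rows.append({**kept, "Area": category, "Area Damage": row.get(category)})
    return long_rows
```

Each input report becomes five output rows, one per category, so filtering on "Area" in Tableau selects a single damage category.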

Binning of Shake Intensity:

Issue: The provided data description groups shake intensity into categories (as shown above), so the numeric readings have to be aligned to those categories.

Solution: In Tableau Prep Builder, a calculated field bins each numeric reading into its respective category.

Below is a screenshot of the calculation statement:

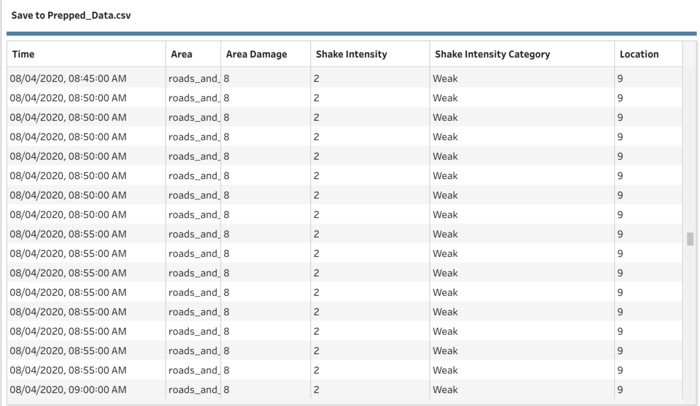
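The same binning logic can be sketched in Python as a lookup over sorted bin boundaries. The thresholds and labels below are hypothetical placeholders, loosely borrowing the USGS "perceived shaking" wording; the real values are the ones in the Tableau Prep calculation shown in the screenshot.

```python
from bisect import bisect_right

# HYPOTHETICAL bins, for illustration only:
# [0, 1) Not felt, [1, 3) Weak, [3, 5) Light, [5, 7) Moderate, [7, 9) Strong, [9, ...) Severe
UPPER_BOUNDS = [1, 3, 5, 7, 9]
LABELS = ["Not felt", "Weak", "Light", "Moderate", "Strong", "Severe"]


def bin_shake(intensity):
    """Map a numeric shake intensity reading to a category label."""
    if intensity is None or intensity != intensity:  # missing value or NaN
        return None
    # bisect_right returns how many upper bounds are <= intensity,
    # which is exactly the index of the bin the reading falls into.
    return LABELS[bisect_right(UPPER_BOUNDS, intensity)]
```

Keeping the boundaries in a single sorted list makes it easy to adjust a threshold without rewriting a chain of IF/ELSEIF conditions.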

Below is the generated CSV from Tableau Prep Builder after data manipulation:

Data Manipulation for given dataset: StHimarkNeighborhoodShapefile/StHimark.shp

To overlay the neighborhood outlines on the map, I used the shapefile provided for the MC2 challenge.

Issue: After the MC2 shapefile was added as a background overlay, the polygons collapsed into centroid points.

Solution: After some research, I found that the cause is that "Tableau just drops each Geometry at the centroid (where the generated lat/lon points would be rendered if you were using a point map instead of a filled map). Since there isn't anything that specifies to Tableau how the polygons rendered by the Geometry are to be scaled they seem to just be defaulting to a small size." Hence, I had to follow the steps in the guide to transform the SHP file into polygon coordinates.

- Refer to the VA Discussion Forum for the implementation guide and steps.

- Online Link explaining the transformation process: https://community.tableau.com/thread/116369

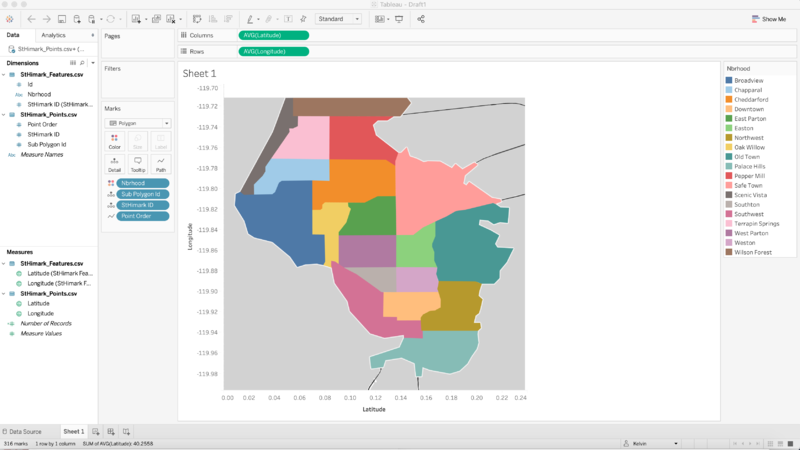

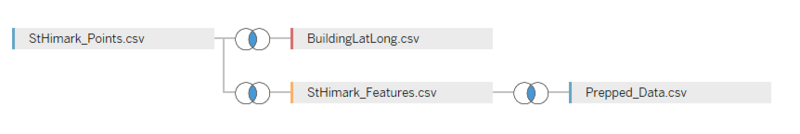

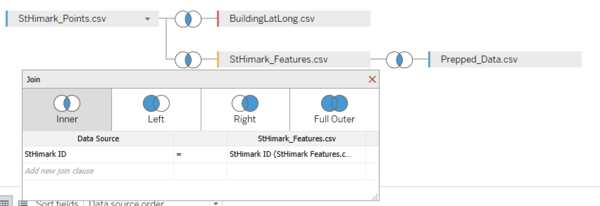

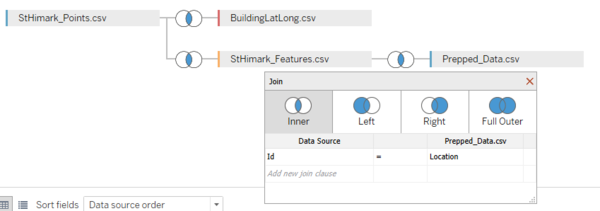

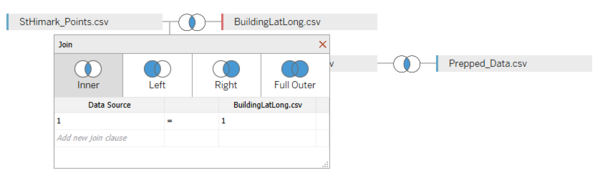
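The idea behind the polygon transformation can be sketched in Python: each polygon is split into one feature row plus one row per vertex, with a running point order. This is a sketch under assumptions: the field names (ShapeId, Name, PointOrder, Longitude, Latitude) are hypothetical and may not match the guide's CSV headers, and the vertices are assumed to be already extracted from the shapefile (for example with a shapefile reader such as pyshp).

```python
def polygons_to_rows(shapes):
    """Flatten polygons into a features table and an ordered points table.

    shapes: list of (shape_id, name, ring), where ring is a list of (lon, lat)
    vertices for a single outer boundary. Multi-part polygons and holes would
    need an extra sub-polygon id, which this sketch omits.
    """
    features, points = [], []
    for shape_id, name, ring in shapes:
        features.append({"ShapeId": shape_id, "Name": name})
        # PointOrder tells Tableau in which order to connect the vertices
        # when it is placed on the Path shelf of a Polygon mark.
        for order, (lon, lat) in enumerate(ring, start=1):
            points.append({"ShapeId": shape_id, "PointOrder": order,
                           "Longitude": lon, "Latitude": lat})
    return features, points
```

Both lists can be written out with `csv.DictWriter` and joined in Tableau on the shape id, which is what lets the Polygon mark draw full outlines instead of centroids.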

- After the transformation into polygon coordinates, StHimark_Features.csv and StHimark_Points.csv are generated, imported into Tableau and displayed as follows:

Dataset Import Structure & Process

With the dataset analysis and transformation phase complete, the following files are imported into Tableau for analysis:

- Prepped Data from Tableau Prep Builder

- StHimark_Points and StHimark_Features from the polygon transformation

- BuildingLatLong (latitude and longitude of the hospitals and the nuclear plant)

The following relations are formed within the data files:

Interactive Visualization

The interactive visualization can be accessed here: https://public.tableau.com/profile/kelvin8400#!/vizhome/AS1-CloroplethWIndows/HomePage?publish=yes

Every dashboard provides guides and tips to help users navigate its filters and actions so that they can perform their analysis smoothly. The following interactive elements are used across all dashboards for consistency:

| Interactive Technique | Rationale | Brief Implementation Steps |
|---|---|---|

The following sections describe the additional interactive techniques integrated into each individual dashboard.

Home Dashboard

The following shows the Home Dashboard:

The following interactive techniques have been employed in this dashboard:

| Interactive Technique | Rationale | Example |
|---|---|---|

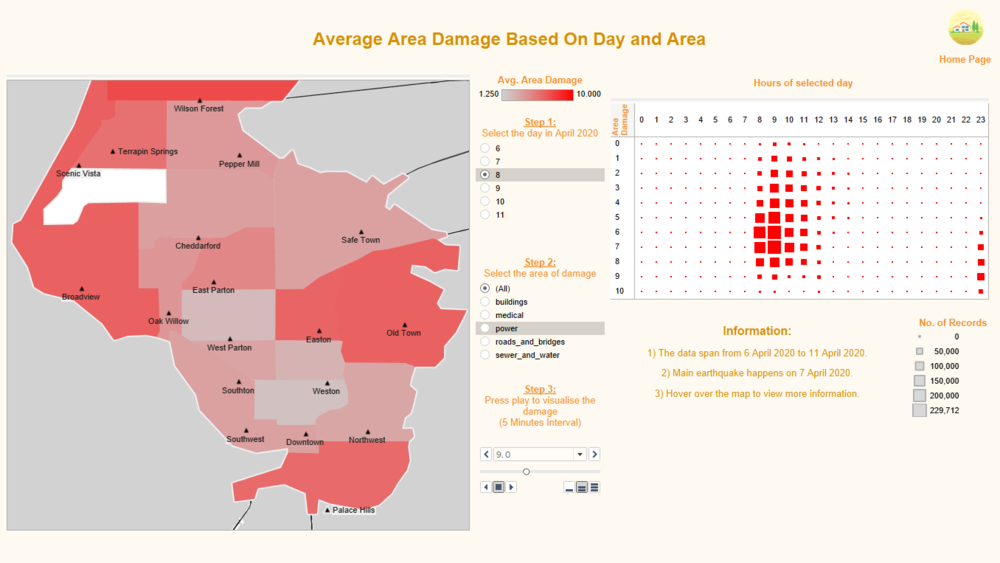

Earthquake Damage Dashboard

The following shows the Earthquake Damage Dashboard:

The following interactive visualisations have been employed in this dashboard:

| Interactive Visualisations | Rationale | Example |
|---|---|---|

Data Uncertainty Dashboard

The following shows the Data Uncertainty Dashboard:

The following interactive visualisations have been employed in this dashboard:

| Interactive Visualisation | Rationale | Example |
|---|---|---|

Observations

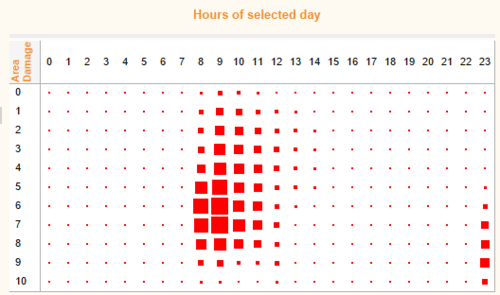

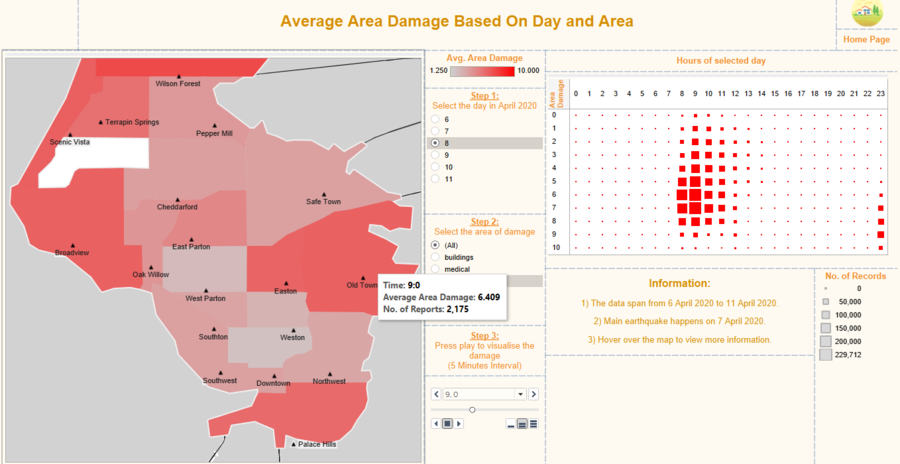

Q1: Emergency responders will base their initial response on the earthquake shake map. Use visual analytics to determine how their response should change based on damage reports from citizens on the ground. How would you prioritize neighborhoods for response? Which parts of the city are hardest hit?

| Index | Analysis | Evidence |

|---|---|---|

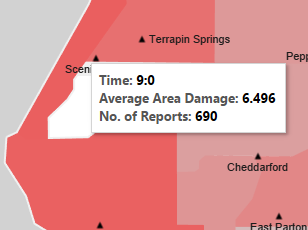

| As Pepper Mill, Old Town and Safe Town were located close to the epicentre of the quake, they were hit the hardest. From the map, it shows high amount of reports on 8th April and most damage reports are coming in from these neighborhoods. | ||

| Upon further analysis, Old Town received a large amount of reports immediately after the first quake and high intensity of damage across all categories. Responders should prioritise Old Town first before handling other neighborhoods. | ||

| According to the actual readings, Scenic Vista and Broadview should feel the shake lightly as they are further away from the quake epicenter. However, the damage reports in all categories were higher than the neighborhoods around them after the quakes. The damage reports reported higher readings after the first missing data period. The cause of these phenomenons might be due to the elite nature of the residents in Scenic Vista. | ||

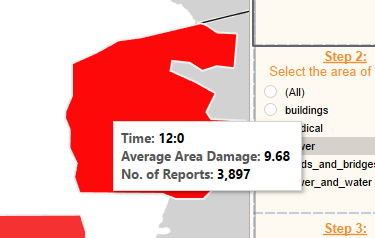

| On 10th April, there is a spike in reports which reported severe power damage with a mean of 9.68 at Old Town neighborhood. This outrage will lead to the loss of information in the subsequent hours. | ||

| Wilson Forest received the least amount of reports throughout the quake period and it might be due to the low population of the neighborhood. |

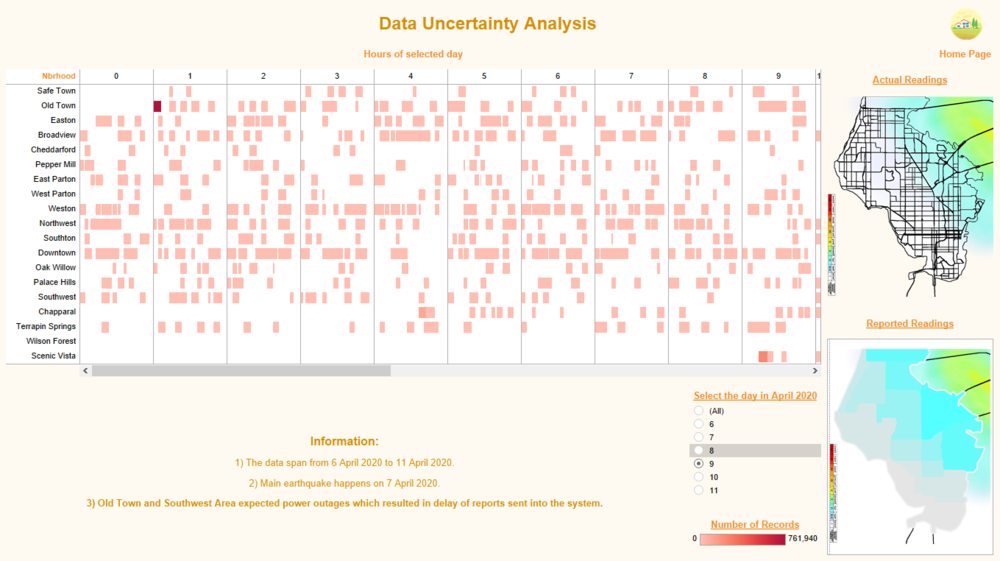

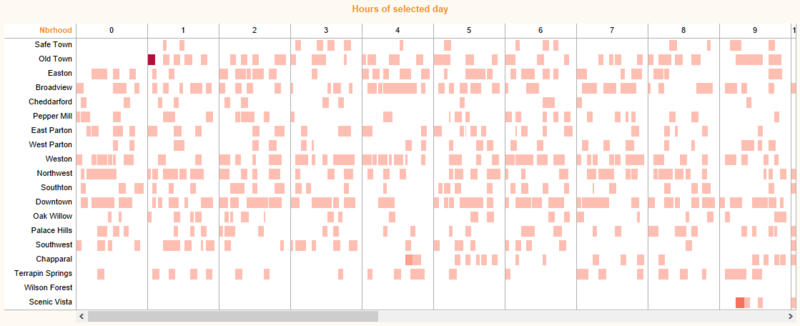
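To make a prioritisation like the one above repeatable, neighborhoods could be ranked by their mean reported damage, with ties broken by report volume. This is a hypothetical sketch of one such ranking, not the method used to build the dashboards; the input is assumed to be (neighborhood, damage) pairs such as the pivoted "Area Damage" values.

```python
from collections import defaultdict
from statistics import mean


def rank_neighborhoods(reports):
    """Rank neighborhoods by mean damage rating, then by number of ratings.

    reports: iterable of (neighborhood, damage) pairs; None ratings are skipped,
    so a neighborhood with no valid ratings does not appear in the result.
    """
    by_hood = defaultdict(list)
    for hood, damage in reports:
        if damage is not None:
            by_hood[hood].append(damage)
    summary = [(hood, mean(vals), len(vals)) for hood, vals in by_hood.items()]
    # Highest mean damage first; ties broken by the larger number of reports.
    return sorted(summary, key=lambda s: (-s[1], -s[2]))
```

A ranking like this only reflects what citizens report, so it should be read alongside the shake map and the reliability concerns discussed in Q2.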

Q2: Use visual analytics to show uncertainty in the data. Compare the reliability of neighborhood reports. Which neighborhoods are providing reliable reports? Provide a rationale for your response.

| Index | Analysis | Evidence |

|---|---|---|

| When the first major quake struck the city on 8th April, the reports were consistent with the shake map, where the north-eastern neighbourhoods felt the shake more strongly than the western neighbourhoods. | ||

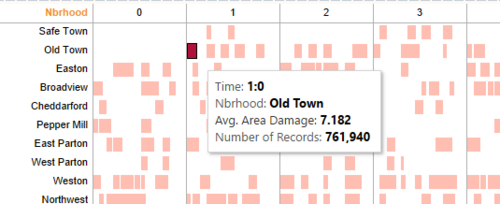

| However, when compared to the prequake shake map on 6th April, the reports were not consistent with the shake map, where Old Town reported a weak shake. | ||

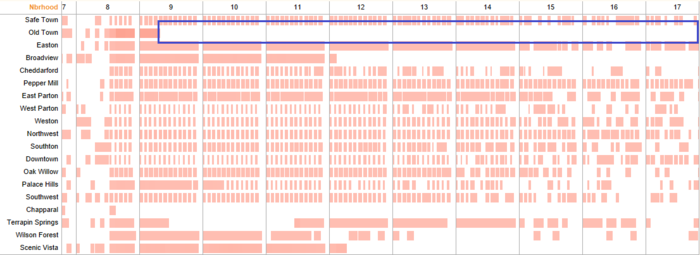

| There are two periods of data missing from Old Town after the quakes and it is likely due to the expected power outage from the electrical works. However, the recovery of power takes longer than expected and responders should investigate into this situation. | ||

| Moreover, when the power resumes, there is a huge spike in reports from Old Town as the responds are queued during the power outage. This contributes to the unreliability and uncertainty of the data which may provide a false alarm to the responders. | ||

| There are also multiple missing data from various neighborhoods like Chapparal, Terrapin Springs and Scenic Vista and for a considerable amount of time. Responders might want to investigate into it as there are no known ongoing power works for these neighborhoods. | ||

| The spike in power damage reports before a power outage can serve as a pre-empt for the missing data in the following hours. With that, engineers can prioritise these neighborhoods to restore the power. Hence, these data uncertainties can be eliminated by discovering data patterns. |
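Missing-data periods like those described above can be located by looking for long gaps between consecutive reports from one neighborhood. The sketch below assumes report timestamps have already been parsed into `datetime` objects; the one-hour threshold is an assumption and would need tuning against normal reporting rates.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, min_gap=timedelta(hours=1)):
    """Return (start, end) pairs where consecutive reports are more than min_gap apart.

    min_gap is an assumed threshold, not a value taken from the analysis.
    """
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > min_gap]
```

Running this per neighborhood and comparing the gaps against known power works separates expected outages (as in Old Town) from unexplained ones.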

Conflicting reports further increase the uncertainty of the data. Hours after the main quake, some residents in Cheddarford reported that no shaking was felt, while others reported shaking that in fact referred to the earlier quake. The delay between an event and its report should therefore be considered when analysing the data.

To conclude, population size, demographics, socio-economic status and other factors also affect how often each neighborhood reports, which in turn affects the quality of the data. Some residents may also be spamming reports. Further investigation and measures are therefore needed to curb such cases and reduce data uncertainty.
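One way to compare reliability across neighborhoods is the standard error of each neighborhood's mean rating, which grows when reports disagree and shrinks as more reports arrive. This is a sketch of that swapped-in statistic, not the measure used in the dashboards; note that spammed reports would also shrink the standard error, so it does not address the spam concern by itself.

```python
from math import sqrt
from statistics import mean, stdev


def report_uncertainty(values):
    """Summarise one neighborhood's damage ratings.

    Returns (mean, sample standard deviation, standard error of the mean),
    or None when fewer than two valid ratings are available.
    """
    ratings = [v for v in values if v is not None]
    if len(ratings) < 2:
        return None
    spread = stdev(ratings)
    # The standard error falls as reports agree more (smaller spread)
    # and as more reports arrive (larger n).
    return mean(ratings), spread, spread / sqrt(len(ratings))
```

Neighborhoods with a small standard error can be treated as more reliable, while those returning None or a large value warrant the further investigation suggested above.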

Q3: How do conditions change over time? How does uncertainty in data change over time? Describe the key changes you see.

| Index | Analysis |
|---|---|
| 1 | Conditions vary significantly across the days. The pre-quakes produce a gradual increase in reports across all neighborhoods. After the first major quake on 8th April, there is an immediate spike in incoming reports, after which the number of reports declines over time. |
| 2 | Data uncertainty during the pre-quake period is relatively low, with damage reports increasing gradually across all neighborhoods. When the major quake struck on 8th April, uncertainty increased significantly, driven by the spike in reports caused by power damage, along with other unidentified causes that led to data loss over multiple periods in different neighborhoods. After the quake, uncertainty decreased as reports stabilised, with minimal data loss as power was restored and building damage repaired. |
| 3 | In Downtown and Broadview, the ratings for some categories were relatively certain before the pre-quake. However, the reports also indicate issues in categories other than the ongoing road works in both neighborhoods, which adds to the uncertainty of the data. |
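The report spikes described above, such as the surge after 8th April or queued reports arriving once power returns, can be flagged by counting reports per hour and comparing each hour with a typical level. This sketch uses the median hourly count as the baseline and a factor of 3 as the threshold; both choices are assumptions.

```python
from collections import Counter
from datetime import datetime
from statistics import median
from typing import Iterable


def hourly_counts(timestamps: Iterable[datetime]) -> Counter:
    """Count reports per hour by truncating each timestamp to the start of its hour."""
    return Counter(ts.replace(minute=0, second=0, microsecond=0) for ts in timestamps)


def find_spikes(counts: Counter, factor: float = 3) -> list:
    """Return hours whose count exceeds factor times the median hourly count.

    Hours with no reports are absent from counts, so the median is taken over
    non-empty hours only. The factor of 3 is an assumed threshold.
    """
    if not counts:
        return []
    baseline = median(counts.values())
    return sorted(hour for hour, n in counts.items() if n > factor * baseline)
```

Hours flagged this way right after a missing-data gap are candidates for queued reports rather than new damage.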

References

Many thanks to the following references, which aided in the creation of the visualisations.

https://www.tableau.com/learn/tutorials/on-demand/getting-started-tableau-prep

https://wiki.smu.edu.sg/1617t1IS428g1/IS428_2016-17_Term1_Assign3_Gwendoline_Tan_Wan_Xin#Interactive_Visualization

Comments

Please input your comments here!