ISSS608 2018-19 T1 Assign Chen Jingyi Data preparation

|

|

|

|

|

|

| Data source | Data preperation | |||||

|---|---|---|---|---|---|---|

| Official air quality measurements (5 stations in the city) (EEA Data.zip) |

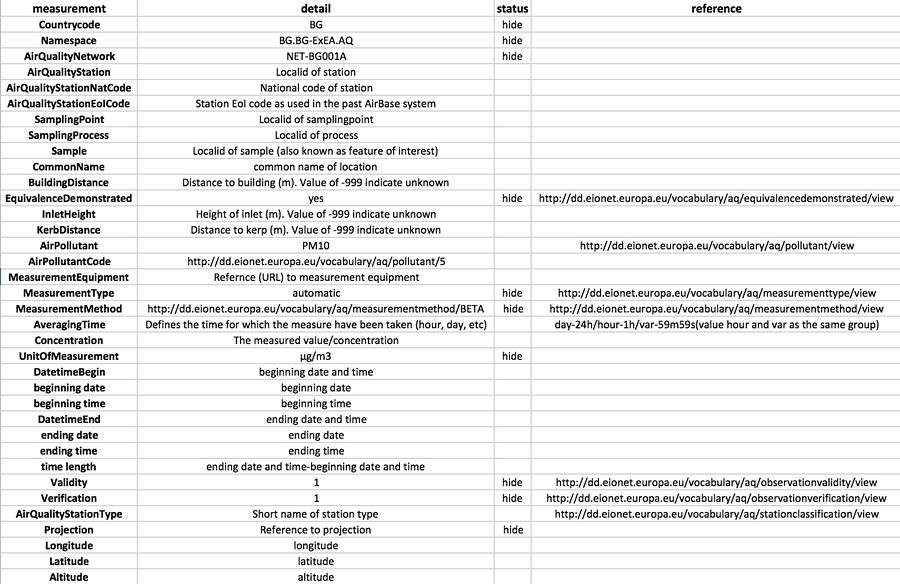

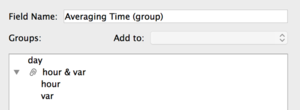

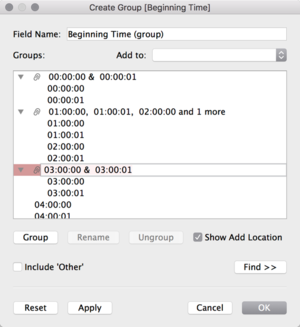

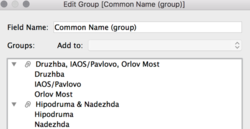

1. Use vlookup to match data from 5 stations with information in metadata.xlsx, and here we don't take the data of station BG0079A into consideration since it only has records of 2018. The following graph shows how to handle each column. 2. Use tableau to create fields. 2.2 Similarly, group time together. 3. Create new field called “weekday” to generate day information of the week. 4. Group by air quality station type(background=Druzhba +Hipodruma+Nadezhda, traffic=IAOS+Orlov Most) | |||||

| Citizen science air quality measurements (Air Tube.zip) |

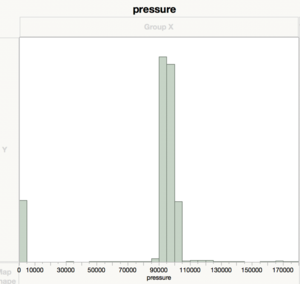

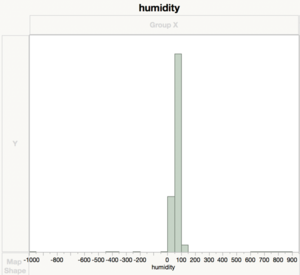

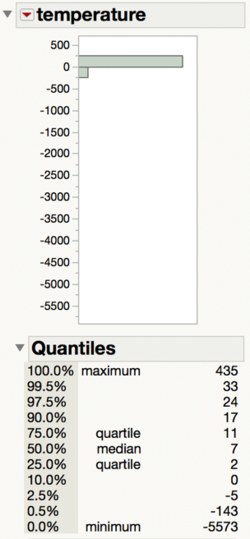

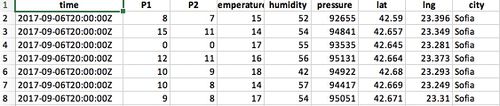

1.Use gh_decode from geohash package in R to turn geohash coordination into longitude and latitude. 2.Use reverse_geocode package in Python to transfer coordinations into a new column with city names. 3.Concatenate 2017 and 2018 data in JMP, and get about 1,700,000 rows of data for exploration and data cleaning. 4.For 'pressure',statistics should be more than 0,but there exists 195390 records=0, which is impossible. 5.For 'humidity',should be within the range of 0 to 100, need to remove ouliers. 6.For 'temperature',according to data, the temperature of Bulgaria is usually between -10 to 40, but here we want to leave some allowances for measurement, so expand the range to -25~57°C. 7.Filter out anormal data from step 4,5,6, transfer all of them into a new sheet, so left with near 1,400,000 records of clean data.Then filter by city name of 'Sofia',and get around 800,000 rows,after that we bring them into Tableau for furthur analysis and visualization. The format of final dataset is as below: | |||||

| Meteorological measurements (1 station) (METEO-data.zip) |

1.Delete all missing values 2.Change name of each col according to readme_IP.txt 3.Combine 'Day','Month','Year' attribute into a column named 'Date' in excel. | |||||

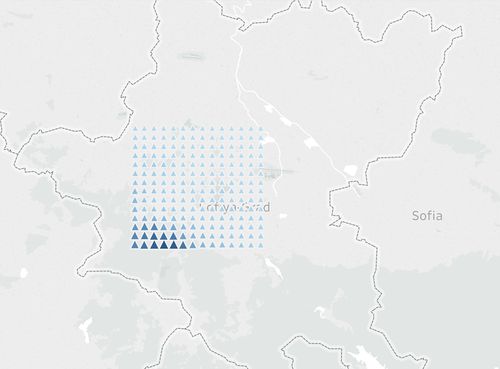

| Topography data (TOPO-DATA) |

1.Nothing special should be done for this data set. 2.The distribution of data shows that there is a gradient transformation of altitude in sofia city: the elevation is the lowest in the north-east side, and goes higher to the south-west part. Here we choose not to match coordinations with former datasets since there are a lot of mismatches, and only take this acknowledge as a common sense. |