NeighbourhoodWatchDocs


GROUP MEMBERS

PROJECT DESCRIPTION

DATA COLLECTION

| No. | Dataset | Description | Source(s) |
|---|---|---|---|
| 1. | Singapore Planning Subzone (MP14_SUBZONE_WEB_PL) | Required to plot the map of Singapore at the planning subzone level. | Included in our In-Class and Take-Home exercises |
| 2. | Singapore Population by Planning Area/Subzone | Required to filter out the elderly population and then join it with the subzone attributes of the other datasets. | Included in our In-Class and Take-Home exercises |
| 3. | Singapore Population by Type of Dwelling | Required to find the total elderly population in a given subzone, so that we can locate the mature estates in Singapore. | Included in our In-Class and Take-Home exercises |
| 4. | Total Number of Neighbourhood Clinics in Singapore | Required to locate all the neighbourhood clinics in Singapore and identify the subzone each one belongs to, which supports our proximity analysis with the dwellings. As this data is not readily available, we scrape it from the YellowPages Singapore website using a Python script, whose steps are outlined below. | YellowPages Singapore (web scraping) |

| Code Snippet 1 | Code Snippet 2 |
|---|---|

1. Loop through each results page of YellowPages Singapore's clinic listings.
2. Use XPath expressions to retrieve each clinic's name, address, latitude and longitude from the specific HTML attributes where they are located.
3. Check whether the retrieved clinic is a dental clinic. If it is, skip the result; otherwise, save it.
4. Once all pages have been visited, parse the retrieved clinic information and store it in a .CSV file with the corresponding columns.
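The four steps above can be sketched in Python as follows. This is a minimal offline sketch under stated assumptions, not the team's actual script. The page markup (`div class='listing'`, `data-lat`/`data-lng`), the name-based dental check and all helper names are hypothetical. Python's built-in ElementTree stands in for a full HTML/XPath parser; it supports only a subset of XPath and needs well-formed markup. `fetch_page` is a placeholder for whatever downloads page N of the results and returns `None` once there are no more pages.

```python
import csv
import io
import xml.etree.ElementTree as ET
from typing import Callable, Dict, List, Optional

FIELDS = ["name", "address", "latitude", "longitude"]

# HYPOTHETICAL markup: each clinic is a <div class="listing"> carrying its
# coordinates in data-lat/data-lng attributes. The real YellowPages page
# structure is not documented here, so these paths are placeholders.
LISTING_XPATH = ".//div[@class='listing']"
NAME_XPATH = "h2"
ADDRESS_XPATH = "p[@class='address']"


def parse_page(html: str) -> List[Dict[str, str]]:
    """Step 2: extract clinic records from one results page.

    ElementTree only accepts well-formed XML and a subset of XPath;
    real-world HTML would normally need an HTML-tolerant parser instead.
    """
    root = ET.fromstring(html)
    clinics = []
    for node in root.findall(LISTING_XPATH):
        clinics.append({
            "name": (node.findtext(NAME_XPATH) or "").strip(),
            "address": (node.findtext(ADDRESS_XPATH) or "").strip(),
            "latitude": node.get("data-lat", ""),
            "longitude": node.get("data-lng", ""),
        })
    return clinics


def is_dental(clinic: Dict[str, str]) -> bool:
    """Step 3 (assumed heuristic): treat a clinic as dental if its name says so."""
    return "dental" in clinic["name"].lower()


def scrape(fetch_page: Callable[[int], Optional[str]]) -> List[Dict[str, str]]:
    """Step 1: visit pages 1, 2, ... until fetch_page returns None."""
    kept = []
    page = 1
    while True:
        html = fetch_page(page)
        if html is None:  # no more result pages
            break
        for clinic in parse_page(html):
            if is_dental(clinic):
                continue  # skip dental clinics
            kept.append(clinic)
        page += 1
    return kept


def write_csv(clinics: List[Dict[str, str]], out) -> None:
    """Step 4: store all retrieved clinics as CSV, one column per field."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(clinics)


# Offline demo: made-up pages standing in for real HTTP responses.
FAKE_PAGES = {
    1: ("<html><body>"
        "<div class='listing' data-lat='1.35' data-lng='103.82'>"
        "<h2>Sunrise Family Clinic</h2><p class='address'>1 Example Road</p></div>"
        "<div class='listing' data-lat='1.30' data-lng='103.85'>"
        "<h2>Bright Smile Dental</h2><p class='address'>2 Example Road</p></div>"
        "</body></html>"),
}

if __name__ == "__main__":
    buffer = io.StringIO()
    write_csv(scrape(FAKE_PAGES.get), buffer)
    print(buffer.getvalue())
```

Keeping the fetcher as a parameter means the loop, filter and CSV steps can be checked offline. A real fetcher can be swapped in later, as long as it returns `None` after the last results page.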

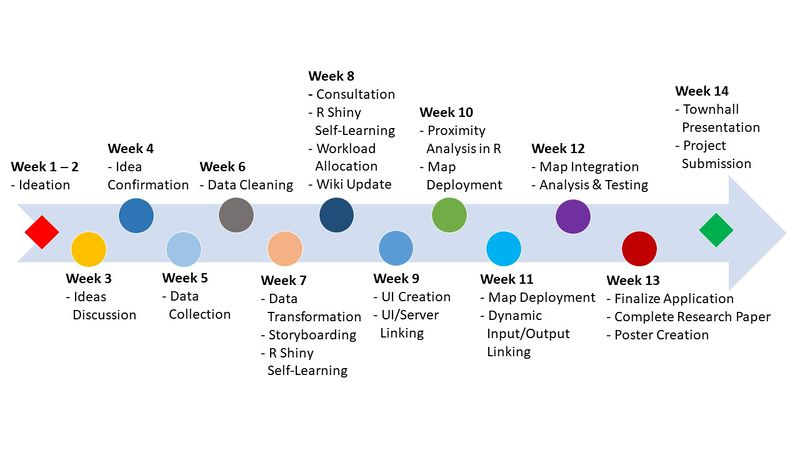
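Datasets 2 and 3 in the table above are used to count the elderly residents in each subzone before joining the result to the subzone map. A minimal Python sketch of that filter-and-sum step follows, under several assumptions:

- The population CSV has columns `PA`, `SZ`, `AG`, `Pop` and `Time`.
- Age groups are labelled like `65_to_69` and `90_and_over`.
- "Elderly" means aged 65 and above.

The sample rows are invented for illustration and are not real population figures.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, Iterable

# ASSUMPTIONS: column names PA, SZ, AG, Pop and Time follow the population
# CSV used in class; "elderly" means aged 65 and above.
ELDERLY_MIN_AGE = 65


def lower_age_bound(age_group: str) -> int:
    """Lower bound of an age-group label such as '65_to_69' or '90_and_over'."""
    return int(age_group.split("_", 1)[0])


def elderly_by_subzone(rows: Iterable[Dict[str, str]], year: str) -> Dict[str, int]:
    """Sum the elderly population per subzone for one year."""
    totals: Dict[str, int] = defaultdict(int)
    for row in rows:
        if row["Time"] != year:
            continue
        if lower_age_bound(row["AG"]) >= ELDERLY_MIN_AGE:
            # Upper-case the key so it can be matched against the subzone
            # layer's (assumed upper-case) SUBZONE_N attribute when joining.
            totals[row["SZ"].upper()] += int(row["Pop"])
    return dict(totals)


# Toy rows for illustration only; these are not real population figures.
SAMPLE_CSV = """PA,SZ,AG,Sex,TOD,Pop,Time
Ang Mo Kio,Ang Mo Kio Town Centre,60_to_64,Males,HDB 3-Room Flats,100,2020
Ang Mo Kio,Ang Mo Kio Town Centre,65_to_69,Males,HDB 3-Room Flats,80,2020
Ang Mo Kio,Ang Mo Kio Town Centre,90_and_over,Females,HDB 3-Room Flats,20,2020
Bedok,Bedok North,70_to_74,Females,HDB 4-Room Flats,50,2019
"""

if __name__ == "__main__":
    rows = csv.DictReader(io.StringIO(SAMPLE_CSV))
    print(elderly_by_subzone(rows, "2020"))
```

On the toy rows this prints `{'ANG MO KIO TOWN CENTRE': 100}`. Only the `65_to_69` and `90_and_over` rows for 2020 are counted. Grouping additionally by the `TOD` column would give the per-dwelling-type breakdown that dataset 3 provides.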

PROJECT TIMELINE

PROJECT CHALLENGES

| No. | Key Technical Challenges | Description | Proposed Solution | Outcome |
|---|---|---|---|---|
| 1. | Unfamiliarity with R packages and R Shiny | Our team may need additional R resources that were not taught in class. | Independent learning of R packages and R Shiny | We managed to solve this challenge with the following resources: |
| 2. | Data Cleaning and Transformation | As we collect data from various sources, the datasets may differ in attributes such as the Coordinate Reference System (CRS) and units of measurement. | Adopt a standardized data-cleaning process that keeps only what we need. Most of the datasets used in our project come from our Hands-On or Take-Home exercises, so we can rely on that existing data. | We managed to solve this challenge with the following: |
| 3. | Limitations & Constraints in Datasets | We need to make certain assumptions based on the context and purpose of our project, such as the average number of doctors per clinic, which cannot be derived from our datasets. | Work together as a team to arrive at reasonable and valid assumptions, supported by adequate online research and consultation with Prof. Kam. | We managed to solve this challenge with the following: |
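Challenge 2 becomes concrete when each scraped clinic (dataset 4) has to be placed in a planning subzone. A point-in-polygon test is only meaningful once the clinic points and the subzone polygons share the same CRS. The clinic coordinates are latitude/longitude, while the subzone layer is assumed here to be in SVY21 (`EPSG:3414`), so the points would need reprojecting first.

The sketch below is a swapped-in, plain-Python illustration rather than the team's R workflow. It uses even-odd ray casting on single polygon rings without holes. CRS labels are plain strings, and the reprojection itself is left to a proper GIS library. The function names are hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float]


def point_in_ring(pt: Point, ring: Sequence[Point]) -> bool:
    """Even-odd ray casting: cast a ray to the right and count edge crossings."""
    x, y = pt
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges straddling the horizontal line through pt can be crossed;
        # this also guarantees y1 != y2, so the division below is safe.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def assign_subzone(pt: Point, pt_crs: str,
                   subzones: Dict[str, Sequence[Point]],
                   subzone_crs: str) -> Optional[str]:
    """Return the name of the subzone containing pt, refusing mismatched CRSs."""
    if pt_crs != subzone_crs:
        raise ValueError(
            f"CRS mismatch ({pt_crs} vs {subzone_crs}); reproject before joining")
    for name, ring in subzones.items():
        if point_in_ring(pt, ring):
            return name
    return None  # point falls outside every subzone
```

For example, with two adjacent 10×10 squares named `A` (x from 0 to 10) and `B` (x from 10 to 20) in the same CRS, `assign_subzone((15, 5), "EPSG:3414", zones, "EPSG:3414")` returns `"B"`. Passing `"EPSG:4326"` for the point raises a `ValueError` instead of silently producing a wrong match. Points lying exactly on a shared boundary are assigned to whichever ring the even-odd rule happens to pick.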