IS428 2018-19 Term1 Assign Aaron Poh Weixin


Background & Objectives

Air quality in Bulgaria is a serious concern: measurements show that citizens all over the country breathe air that is considered harmful to health. For example, concentrations of PM2.5 and PM10 are much higher than the limits that the EU and the World Health Organization (WHO) have set to protect health.

Bulgaria had the highest PM2.5 concentrations in urban areas of all EU-28 member states over a three-year average. For PM10, Bulgaria also tops the list of most polluted countries, with 77 μg/m³ for the daily mean concentration (the EU limit value is 50 μg/m³). According to the WHO, 60 percent of the urban population in Bulgaria is exposed to dangerous (unhealthy) levels of particulate matter (PM10).

The objective of this project is to first understand..... (continue)

Task 1: Spatio-temporal Analysis of Official Air Quality

Data Preparation

Before diving into the data cleaning process, it is worth detailing some findings and anomalies I noticed from simply scanning the data:

- The given dataset only covers PM10, which will therefore be the main focus of our analysis
- Stations 60881 and 9484 have serious data quality issues:
  - Station 60881 only has data for 2018
  - Station 9484 only has data up to 2015
  - Station 9484 is missing data for October to December 2015
  - Station 9484 is missing data for August to September 2013
- The averaging format is inconsistent across time periods:
  - 2013-2014: daily averages
  - 2015: daily averages, except for 31 December, which is classified as hourly
  - 2016: mostly daily averages, with some hourly averages
  - 2017: hourly averages, with data only for November and December; there is also a 'var' averaging type whose meaning is unclear
  - 2018: mostly hourly averages, with some daily averages
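The averaging inconsistencies listed above can be surfaced with a simple cross-tabulation of year against averaging type. This is a minimal pandas sketch, not the exact script used here; it assumes the raw files follow the EEA e-reporting layout, with an 'AveragingTime' column holding values such as 'day', 'hour' or 'var', and 'DatetimeBegin' strings that start with 'YYYY-MM-DD'. The function name and toy rows are hypothetical.

```python
import pandas as pd


def averaging_summary(df: pd.DataFrame) -> pd.DataFrame:
    """Count rows per year and averaging type.

    Assumes an 'AveragingTime' column (e.g. 'day', 'hour', 'var') and
    'DatetimeBegin' strings whose first four characters are the year.
    """
    years = df["DatetimeBegin"].str[:4].rename("Year")
    return pd.crosstab(years, df["AveragingTime"])


# Toy rows for illustration only.
raw = pd.DataFrame({
    "DatetimeBegin": [
        "2015-12-30 00:00:00 +01:00",
        "2015-12-31 00:00:00 +01:00",
        "2015-12-31 01:00:00 +01:00",
        "2016-01-01 00:00:00 +01:00",
    ],
    "AveragingTime": ["day", "day", "hour", "day"],
})
print(averaging_summary(raw))
```

A year with a non-zero count in more than one column is a year whose averaging format needs special handling during cleaning.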

Since the task requires analysing both the past and the most recent air quality situation in Sofia City, the large amount of missing data for Station 9484 is unacceptable, so I decided to exclude it from the analysis. Furthermore, since Bulgaria is not a big country, 4 stations should be sufficient to explain the air quality situation.

Note: the 2017 data is severely incomplete in the original dataset, but I managed to download the latest version, which captures most of the daily data.

With the above information in mind, I decided on the following cleaning procedure (in Python):


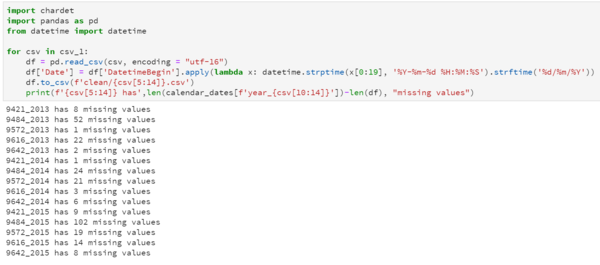

I started by cleaning the data for 2013-2015 and 2017 (updated), because they had the cleanest data format. Cleaning simply involved converting 'DatetimeBegin' into a suitable date format ("dd/mm/yyyy").
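A minimal sketch of this conversion in pandas, assuming 'DatetimeBegin' holds strings such as '2013-01-01 00:00:00 +01:00' whose first ten characters are the date. The function name clean_daily and the toy values are hypothetical.

```python
import pandas as pd


def clean_daily(df: pd.DataFrame) -> pd.DataFrame:
    """Rewrite 'DatetimeBegin' as a dd/mm/yyyy date string.

    Assumes values look like '2013-01-01 00:00:00 +01:00', i.e. the first
    ten characters are the calendar date in YYYY-MM-DD form.
    """
    out = df.copy()
    dates = pd.to_datetime(out["DatetimeBegin"].str[:10], format="%Y-%m-%d")
    out["DatetimeBegin"] = dates.dt.strftime("%d/%m/%Y")
    return out


toy = pd.DataFrame({
    "DatetimeBegin": ["2013-01-01 00:00:00 +01:00", "2013-01-02 00:00:00 +01:00"],
    "Concentration": [85.2, 60.1],
})
print(clean_daily(toy)["DatetimeBegin"].tolist())  # ['01/01/2013', '02/01/2013']
```

Dropping the time and UTC offset is reasonable for daily averages, where only the calendar date matters. Keeping the column as a datetime instead of a string would make later grouping by month or year easier.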


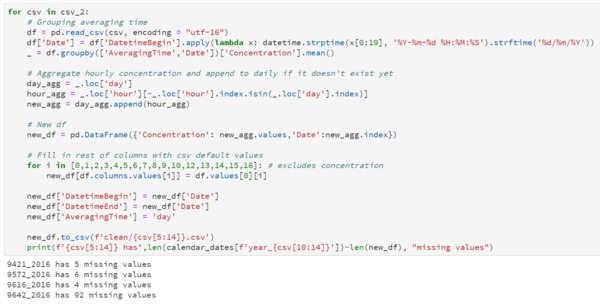

Years 2016 and 2018 were slightly more complicated because they contained both hourly and daily averages.
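One plausible way to handle these mixed files, sketched in pandas: average the hourly rows into one value per station and day, keep reported daily values where they exist, and drop other averaging types such as 'var'. The column names ('AirQualityStation', 'DatetimeBegin', 'AveragingTime', 'Concentration') are assumed from the EEA e-reporting format; to_daily and the station label 'STA-X' are hypothetical.

```python
import pandas as pd


def to_daily(df: pd.DataFrame) -> pd.DataFrame:
    """Collapse a mix of daily and hourly rows into one value per station-day.

    Assumed columns: 'AirQualityStation', 'DatetimeBegin', 'AveragingTime'
    ('day' / 'hour'; anything else, e.g. 'var', is dropped) and 'Concentration'.
    """
    df = df.copy()
    df["Date"] = pd.to_datetime(df["DatetimeBegin"].str[:10], format="%Y-%m-%d")
    keys = ["AirQualityStation", "Date"]

    daily = df.loc[df["AveragingTime"] == "day", keys + ["Concentration"]]
    hourly = df.loc[df["AveragingTime"] == "hour"]
    # Mean of the hourly readings for each station and calendar day.
    hourly_mean = hourly.groupby(keys, as_index=False)["Concentration"].mean()

    # Daily rows come first, so keep="first" prefers a reported daily value
    # over a computed hourly mean when both exist for the same station-day.
    combined = pd.concat([daily, hourly_mean], ignore_index=True)
    combined = combined.drop_duplicates(subset=keys, keep="first")
    return combined.sort_values(keys).reset_index(drop=True)


toy = pd.DataFrame({
    "AirQualityStation": ["STA-X"] * 5,
    "DatetimeBegin": [
        "2016-01-01 00:00:00 +01:00",  # daily value
        "2016-01-01 05:00:00 +01:00",  # hourly, ignored because a daily value exists
        "2016-01-02 00:00:00 +01:00",  # hourly
        "2016-01-02 01:00:00 +01:00",  # hourly
        "2016-01-03 00:00:00 +01:00",  # 'var', dropped
    ],
    "AveragingTime": ["day", "hour", "hour", "hour", "var"],
    "Concentration": [40.0, 99.0, 30.0, 50.0, 10.0],
})
print(to_daily(toy))
```

Preferring the reported daily value is a design choice; a stricter version might also require a minimum number of hourly readings before trusting a computed daily mean.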


This step is the simplest: it merges all the cleaned datasets into one dataset to be loaded into the relevant visualisation tools.
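The merge amounts to stacking the yearly tables. A small sketch, assuming every cleaned frame shares the hypothetical columns 'AirQualityStation', 'Date' and 'Concentration'; merge_years is an illustrative name.

```python
import pandas as pd


def merge_years(frames: list[pd.DataFrame]) -> pd.DataFrame:
    """Stack per-year cleaned tables into one long table, sorted by station and date."""
    if not frames:
        raise ValueError("no yearly frames to merge")
    cols = set(frames[0].columns)
    for frame in frames[1:]:
        # Stacking frames with different columns would silently create NaN columns.
        if set(frame.columns) != cols:
            raise ValueError("all yearly frames must have the same columns")
    merged = pd.concat(frames, ignore_index=True)
    return merged.sort_values(["AirQualityStation", "Date"]).reset_index(drop=True)


y2013 = pd.DataFrame({
    "AirQualityStation": ["STA-B", "STA-A"],
    "Date": pd.to_datetime(["2013-01-01", "2013-01-01"]),
    "Concentration": [50.0, 70.0],
})
y2014 = pd.DataFrame({
    "AirQualityStation": ["STA-A"],
    "Date": pd.to_datetime(["2014-01-01"]),
    "Concentration": [65.0],
})
print(merge_years([y2014, y2013]))
```

The merged frame can then be written out with DataFrame.to_csv for loading into Tableau or JMP.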

Functions used:


Data Visualisation

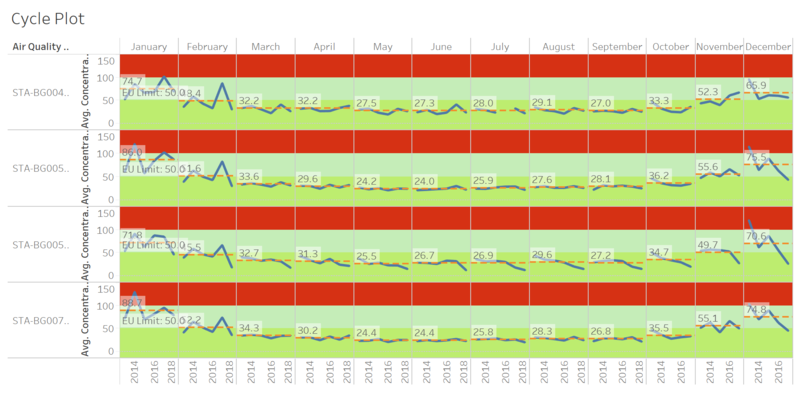

What a typical day in Sofia City looks like depends heavily on the month, because concentration levels change drastically over the year.

The cycle plot summarises a typical day in Sofia City. For most of the year, from March to October, average concentration levels are below the EU limit of 50 μg/m³. Concentration levels usually peak around December or January.
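The aggregation behind a cycle plot can be sketched as a month-by-year table of mean concentrations, with a flag for months above the 50 μg/m³ line. This assumes a merged daily table with a datetime 'Date' column and a 'Concentration' column (hypothetical names). Comparing monthly means against the EU limit mirrors the reference line in the plot and is not a compliance test, since the EU daily limit for PM10 may be exceeded up to 35 times per calendar year.

```python
import pandas as pd

# EU daily limit value for PM10, used here only as a visual reference line.
EU_LIMIT = 50.0  # μg/m³


def cycle_plot_table(daily: pd.DataFrame) -> pd.DataFrame:
    """Mean concentration for every (month, year) pair, as a cycle plot shows it.

    Assumes a datetime 'Date' column and a numeric 'Concentration' column.
    """
    df = daily.assign(Month=daily["Date"].dt.month, Year=daily["Date"].dt.year)
    table = df.groupby(["Month", "Year"], as_index=False)["Concentration"].mean()
    table["AboveLimit"] = table["Concentration"] > EU_LIMIT
    return table


toy = pd.DataFrame({
    "Date": pd.to_datetime(
        ["2016-01-05", "2016-01-06", "2016-07-01", "2016-07-02", "2017-01-10"]
    ),
    "Concentration": [80.0, 60.0, 20.0, 30.0, 40.0],
})
print(cycle_plot_table(toy))
```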

While the visualisation alone cannot tell us what causes the spikes in concentration levels, it does highlight trends in them.

For example, station STA-BG00052A shows consistently lower concentration levels over the years, which might indicate a healthier environment for the citizens around that area.

More encouragingly, the right-most column shows that average concentration levels in December have dropped substantially over the years. Should this trend continue, it could spill over into January (another unhealthy month). The following simple line graph also suggests that peak average concentration levels are slightly decreasing, which is further good news for the citizens of Sofia City.
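The two trends described here, the December average and the highest monthly average in each year, can be computed from the same daily table. A hedged sketch that pools all stations and assumes the same hypothetical 'Date' and 'Concentration' columns; yearly_summary is an illustrative name, and the toy values are made up.

```python
import pandas as pd


def yearly_summary(daily: pd.DataFrame) -> pd.DataFrame:
    """Per year: the December average and the highest monthly average.

    Assumes a datetime 'Date' column and a numeric 'Concentration' column
    pooled across stations.
    """
    monthly = (
        daily.assign(Year=daily["Date"].dt.year, Month=daily["Date"].dt.month)
        .groupby(["Year", "Month"])["Concentration"]
        .mean()
    )
    # Select the December row of every year (index becomes Year only).
    december = monthly.xs(12, level="Month").rename("DecemberMean")
    peak = monthly.groupby(level="Year").max().rename("PeakMonthlyMean")
    return pd.concat([december, peak], axis=1).reset_index()


toy = pd.DataFrame({
    "Date": pd.to_datetime(
        ["2016-01-05", "2016-01-06", "2016-12-10", "2017-01-03", "2017-12-20"]
    ),
    "Concentration": [100.0, 80.0, 70.0, 60.0, 50.0],
})
print(yearly_summary(toy))
```

A decline across only a few years is suggestive rather than conclusive, so the output is best read alongside the plots; grouping by station as well would show whether the trend holds everywhere.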

Task 2: Spatio-temporal Analysis of Citizen Science Air Quality Measurements

Data Preparation

The first step of any visualisation project should always be a visual inspection to understand the data, followed by cleaning where necessary. As in the earlier task, the steps I took to clean the data are listed below:

| Issue | Solution |
|---|---|
| Geohash is not a format that most visualisation tools, including Tableau, can read | Decode the geohash using Prof's geohash_geocoder R tool |
| Data is stored separately for the years 2017 and 2018 | Use JMP's concatenate tool to combine the 2017 and 2018 data |
| There are duplicate points, where a geohash may produce multiple different readings of P1, P2, temperature, etc. for the same hour | Average the rows with multiple different readings to produce one reading per geohash-timestamp ID |
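The three steps in the table can be approximated in Python. This sketch is not the Prof's geohash_geocoder tool; it implements the standard geohash decoding algorithm (alternating longitude and latitude bisections, 5 bits per base-32 character) and returns the centre of each cell. The column names 'geohash', 'time', 'P1', 'P2' and 'temperature' are assumptions about the AirTube export, and the geohash 'sx8dfs' in the toy data is hypothetical.

```python
import pandas as pd

# Standard geohash base-32 alphabet (omits a, i, l, o).
_BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"


def decode_geohash(gh: str) -> tuple[float, float]:
    """Return the (lat, lon) centre of the cell described by a geohash."""
    lat_lo, lat_hi = -90.0, 90.0
    lon_lo, lon_hi = -180.0, 180.0
    is_lon = True  # bits alternate, starting with longitude
    for ch in gh.lower():
        value = _BASE32.index(ch)
        for shift in range(4, -1, -1):  # 5 bits per character, most significant first
            bit = (value >> shift) & 1
            if is_lon:
                mid = (lon_lo + lon_hi) / 2
                if bit:
                    lon_lo = mid
                else:
                    lon_hi = mid
            else:
                mid = (lat_lo + lat_hi) / 2
                if bit:
                    lat_lo = mid
                else:
                    lat_hi = mid
            is_lon = not is_lon
    return (lat_lo + lat_hi) / 2, (lon_lo + lon_hi) / 2


def prepare_citizen_data(df_2017: pd.DataFrame, df_2018: pd.DataFrame) -> pd.DataFrame:
    """Combine both years, average duplicate readings, and add lat/lon columns."""
    df = pd.concat([df_2017, df_2018], ignore_index=True)
    # One row per geohash-timestamp: average any duplicate readings.
    df = df.groupby(["geohash", "time"], as_index=False)[["P1", "P2", "temperature"]].mean()
    coords = [decode_geohash(g) for g in df["geohash"]]
    df["lat"] = [lat for lat, _ in coords]
    df["lon"] = [lon for _, lon in coords]
    return df


d17 = pd.DataFrame({
    "geohash": ["sx8dfs", "sx8dfs"],
    "time": ["2017-12-01 10:00:00", "2017-12-01 10:00:00"],
    "P1": [10.0, 20.0], "P2": [4.0, 6.0], "temperature": [1.0, 3.0],
})
d18 = pd.DataFrame({
    "geohash": ["sx8dfs"], "time": ["2018-01-01 10:00:00"],
    "P1": [30.0], "P2": [8.0], "temperature": [-2.0],
})
print(prepare_citizen_data(d17, d18))
```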

Data Visualisation

Unexpected behaviours of sensors

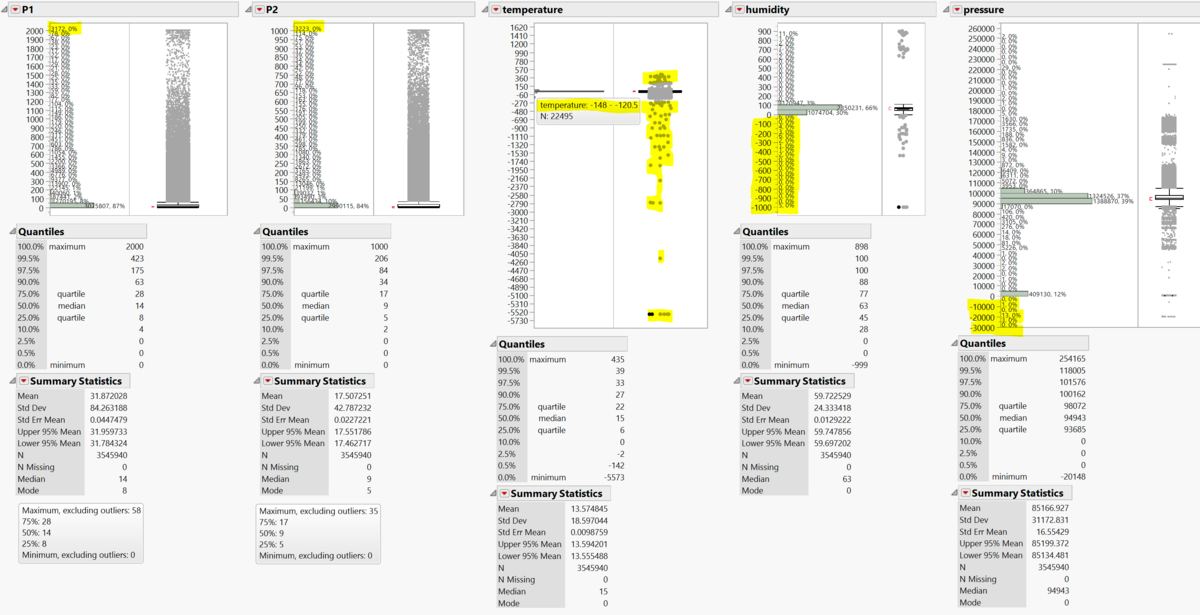

I did this step first because I think it is essential not just for understanding unexpected behaviours but, more importantly, for identifying outliers. The outlier box plots to the right of the histograms flag the data points that lie beyond the whiskers, i.e. more than 1.5 × the interquartile range (IQR) below the first quartile or above the third quartile.

Initial analysis of the above image suggests that the sensors produce many unexpected readings, especially those flagged as outliers. However, being flagged as an outlier is not sufficient evidence to warrant exclusion. Therefore, given my limited understanding of weather metrics, I will analyse the variables individually and suggest conservative actions that only remove data we are confident is wrong.
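The 1.5 × IQR rule (Tukey's fences) that outlier box plots use for their whiskers can be sketched as below. In line with the conservative approach above, the function only flags values and removes nothing. pandas' default quantile interpolation may differ slightly from JMP's, so the exact boundaries can differ at the margin; iqr_outliers is a hypothetical name.

```python
import pandas as pd


def iqr_outliers(values: pd.Series, k: float = 1.5) -> pd.Series:
    """Return the values lying more than k * IQR beyond the quartiles (Tukey's fences).

    The values are only flagged, not removed.
    """
    q1, q3 = values.quantile(0.25), values.quantile(0.75)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return values[(values < lower) | (values > upper)]


readings = pd.Series([1, 2, 3, 4, 5, 6, 7, 8, 9, 100])
print(iqr_outliers(readings).tolist())  # [100]
```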

| Variable | Observation | Action |
|---|---|---|
| P1, P2 | According to the AirTube website, these variables most likely refer to PM10 and PM2.5 | Technically, PM values have no fixed upper bound, although extremely high readings are unlikely. Given my limited knowledge of what counts as an 'impossible' value, I will only exclude the maximum values of 2000 and 1000, because the count jumps suddenly at these values compared with the next-highest values, which is very unlikely for genuine readings |
| Temperature | The range of values suggests that values are in degrees Celsius | As a conservative measure, I will exclude temperatures above 60°C and below -90°C, just beyond the highest and lowest air temperatures ever recorded on Earth (about 56.7°C and -89.2°C) |
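One way to apply these two rules, sketched in pandas. It blanks out only the offending values, so a bad temperature does not discard a valid PM reading from the same row; dropping whole rows would be a stricter alternative. The column names and the mapping of 2000 to P1 and 1000 to P2 are assumptions, labelled as such in the code; mask_impossible is a hypothetical name.

```python
import pandas as pd

# Assumption: which suspicious maximum belongs to which PM column.
PM_SENTINELS = {"P1": 2000, "P2": 1000}
TEMP_MIN, TEMP_MAX = -90.0, 60.0  # °C, the conservative bounds stated above


def mask_impossible(df: pd.DataFrame) -> pd.DataFrame:
    """Set only the offending values to NaN, keeping the rest of each row."""
    out = df.copy()
    for col, sentinel in PM_SENTINELS.items():
        out[col] = out[col].mask(out[col] == sentinel)
    temp = out["temperature"]
    # NaN temperatures compare False on both sides, so they are left untouched.
    out["temperature"] = temp.mask((temp > TEMP_MAX) | (temp < TEMP_MIN))
    return out


toy = pd.DataFrame({
    "P1": [2000.0, 35.0, 12.0],
    "P2": [10.0, 1000.0, 5.0],
    "temperature": [25.0, 75.0, -95.0],
})
print(mask_impossible(toy))
```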