IS428 AY2018-19T1 Chua Sing Rue

Revision as of 02:11, 12 November 2018

Problem and Motivation

Task 1: Spatio-temporal Analysis of Official Air Quality

EEA Dataset

| Name of Air Quality Station | Local ID | Data available |

|---|---|---|


Data preparation

Issue 1: The official air quality measurements in the EEA Data folder contain PM10 measurements from air quality stations in Sofia city for the years 2013 to 2018. However, the dataset is incomplete: air quality station Mladost has data for 2018 only, and air quality station Orlov Most has no data for 2016, 2017 or 2018.

Solution: To allow a like-for-like comparison, air quality stations Mladost and Orlov Most will be excluded.
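A minimal pandas sketch of this exclusion rule, assuming the EEA measurements have been loaded into one long-format table with hypothetical columns `station` and `date`. A station is kept only if it has at least one record in every year of the comparison window, so Mladost and Orlov Most would be dropped by their coverage rather than by name.

```python
import pandas as pd


def drop_incomplete_stations(df: pd.DataFrame, years: range) -> pd.DataFrame:
    """Keep only stations with at least one record in every year of `years`.

    Assumes hypothetical columns 'station' and 'date' (anything pd.to_datetime accepts).
    """
    required = list(years)
    # Tag each measurement with its calendar year
    tagged = df.assign(year=pd.to_datetime(df["date"]).dt.year)
    in_range = tagged[tagged["year"].isin(required)]
    # Count the distinct required years in which each station reported anything
    years_covered = in_range.groupby("station")["year"].nunique()
    complete = years_covered[years_covered == len(required)].index
    return df[df["station"].isin(complete)]
```

For example, `drop_incomplete_stations(eea, range(2013, 2019))` would apply the rule to the full 2013 to 2018 window, where `eea` is a hypothetical name for the combined table.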

Issue 2: PM10 measurements are reported as daily averages for the years 2013 to 2016, but as hourly averages for the years 2017 to 2018.

Solution: When comparing the periods 2013 to 2016 and 2017 to 2018, the hourly average PM10 values will be aggregated into daily averages so that both periods share a common basis for comparison. The hourly averages will still be used to drill down into within-day trends for the more recent data.
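The daily re-aggregation could look like the sketch below. It assumes hypothetical columns `station`, `datetime` and `pm10` for the 2017 to 2018 hourly data, and takes a plain unweighted mean of whichever hours are present on each day.

```python
import pandas as pd


def hourly_to_daily(hourly: pd.DataFrame) -> pd.DataFrame:
    """Average hourly PM10 readings into one value per station per calendar day.

    Assumes hypothetical columns 'station', 'datetime' and 'pm10'.
    """
    # Truncate each timestamp to midnight so all hours of a day share one key
    df = hourly.assign(date=pd.to_datetime(hourly["datetime"]).dt.normalize())
    # Unweighted mean of the non-missing hourly values, plus how many hours went in
    daily = df.groupby(["station", "date"])["pm10"].agg(["mean", "count"]).reset_index()
    return daily.rename(columns={"mean": "daily_avg_pm10", "count": "n_hours"})
```

The `n_hours` column is kept so that days with only a few valid hours could be filtered out before comparing against the 2013 to 2016 daily values; what threshold to use is a judgment call not fixed here.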

Issue 3: In the 2017 data, PM10 values from 1 Jan 2017 to 26 Nov 2017 are missing. Additionally, the 2018 data is recorded only up to 14 September 2018.

Solution: 2017 and 2018 data should not be used for yearly trends.
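Gaps such as the one in the 2017 data can be located programmatically before deciding which years to leave out. A minimal sketch, assuming the measurement dates are available as a list or pandas Series: it compares the observed days against a full daily calendar and returns each run of missing days as a (first, last) pair.

```python
import pandas as pd


def missing_date_ranges(dates, start: str, end: str) -> list[tuple[pd.Timestamp, pd.Timestamp]]:
    """Return (first_missing_day, last_missing_day) for each gap between start and end."""
    # Set of calendar days that have at least one observation
    observed = set(pd.DatetimeIndex(pd.to_datetime(dates)).normalize())
    expected = pd.date_range(start, end, freq="D")
    runs = []
    run_start = None
    prev = None
    for day in expected:
        if day not in observed:
            # Open a new gap, or extend the current one
            if run_start is None:
                run_start = day
            prev = day
        elif run_start is not None:
            # An observed day closes the current gap
            runs.append((run_start, prev))
            run_start = None
    if run_start is not None:
        # Gap that runs to the end of the calendar
        runs.append((run_start, prev))
    return runs
```

Calling it as `missing_date_ranges(dates_2017, "2017-01-01", "2017-12-31")`, with `dates_2017` a hypothetical Series of 2017 timestamps, would list the gaps for that year.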

Overall trend in Daily Average PM10

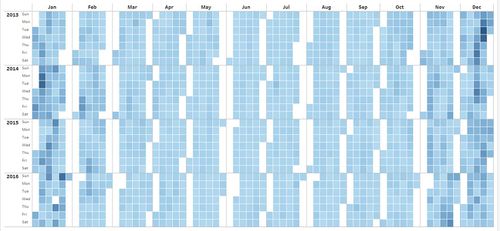

1. Time series for Daily Average PM10, 2013 - 2016

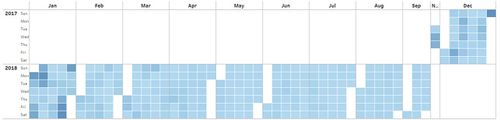

2. Time series for Daily Average PM10, Nov 2017 - Sept 2018

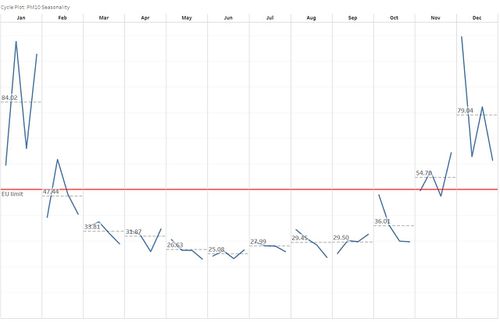

Seasonal trend in Daily Average PM10

1. Calendar Heatmap

2. Cycle Plot

Intra-day trend in Hourly Average PM10

1. Calendar Heatmap: Within-day trend of Average Hourly PM10 by Month
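The within-day heatmap needs a month-by-hour matrix of averages. A sketch of that reshaping in pandas, assuming hypothetical `datetime` and `pm10` columns in the hourly data; cells with no readings come out as NaN.

```python
import pandas as pd


def month_hour_matrix(hourly: pd.DataFrame) -> pd.DataFrame:
    """Mean PM10 per (month, hour-of-day): rows are months, columns are hours 0-23.

    Assumes hypothetical columns 'datetime' and 'pm10'.
    """
    ts = pd.to_datetime(hourly["datetime"])
    # Tag each reading with its month and hour, then average within each cell
    return hourly.assign(month=ts.dt.month, hour=ts.dt.hour).pivot_table(
        index="month", columns="hour", values="pm10", aggfunc="mean"
    )
```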

Task 2: Spatio-temporal Analysis of Citizen Science Air Quality Measurements

Data preparation

Issue 1: The citizen science air quality measurements in the Air Tube Data folder are located by unique geohash codes rather than coordinates. However, Tableau does not currently support geohashes.

Solution: The geohashes must be decoded into latitude/longitude pairs before the stations can be mapped. Use the R package [geohash](https://github.com/Ironholds/geohash) to decode geohashes into latitude/longitude pairs.
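For reference, geohash decoding itself is simple interval halving. The sketch below is a plain-Python version of the standard algorithm, not the R package's API: each base-32 character contributes 5 bits, the bits alternate between longitude and latitude (starting with longitude), and each bit halves the current interval. It returns the cell centre together with the half-width of the cell in each direction.

```python
# Standard geohash base-32 alphabet (omits a, i, l, o)
_BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"


def decode_geohash(gh: str) -> tuple[float, float, float, float]:
    """Return (lat, lon, lat_err, lon_err): the cell centre and its half-widths."""
    lat_lo, lat_hi = -90.0, 90.0
    lon_lo, lon_hi = -180.0, 180.0
    is_lon = True  # bits alternate, starting with longitude
    for ch in gh.lower():
        value = _BASE32.index(ch)  # raises ValueError on an invalid character
        for shift in range(4, -1, -1):  # most significant bit first
            bit = (value >> shift) & 1
            if is_lon:
                mid = (lon_lo + lon_hi) / 2
                if bit:
                    lon_lo = mid
                else:
                    lon_hi = mid
            else:
                mid = (lat_lo + lat_hi) / 2
                if bit:
                    lat_lo = mid
                else:
                    lat_hi = mid
            is_lon = not is_lon
    return (
        (lat_lo + lat_hi) / 2,
        (lon_lo + lon_hi) / 2,
        (lat_hi - lat_lo) / 2,
        (lon_hi - lon_lo) / 2,
    )
```

For example, `decode_geohash("ezs42")` returns a centre of roughly latitude 42.605, longitude -5.603. The non-zero error terms show that the decoded pair is the centre of a cell, not an exact position.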

Issue 2: A latitude/longitude pair decoded from a geohash is only a reference point (the centre of the geohash cell), so it does not represent the station's exact location.

Solution: Techniques such as hexagon binning should be used to represent the stations as areas rather than exact points.
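Hexagon binning assigns each point to the hexagonal cell that contains it, so nearby stations are aggregated into one area. A minimal sketch using axial hex coordinates and cube rounding for pointy-top hexagons. It assumes longitude and latitude are treated directly as planar x/y, which is a rough approximation over a city-sized area (projecting the points first would be more accurate), and `size` is a hypothetical cell radius in the same units.

```python
import math
from collections import Counter


def hex_bin(x: float, y: float, size: float) -> tuple[int, int]:
    """Axial (q, r) of the pointy-top hexagon of circumradius `size` containing (x, y)."""
    # Fractional axial coordinates
    q = (math.sqrt(3) / 3 * x - y / 3) / size
    r = (2 / 3 * y) / size
    # Cube rounding: round all three cube coordinates, then recompute the one
    # with the largest rounding error so that x + y + z == 0 still holds
    cx, cz = q, r
    cy = -cx - cz
    rx, ry, rz = round(cx), round(cy), round(cz)
    dx, dy, dz = abs(rx - cx), abs(ry - cy), abs(rz - cz)
    if dx > dy and dx > dz:
        rx = -ry - rz
    elif dy > dz:
        ry = -rx - rz
    else:
        rz = -rx - ry
    return rx, rz


def hex_center(q: int, r: int, size: float) -> tuple[float, float]:
    """Planar centre of the pointy-top hexagon with axial coordinates (q, r)."""
    return size * (math.sqrt(3) * q + math.sqrt(3) / 2 * r), size * 1.5 * r


def bin_points(points, size: float) -> Counter:
    """Count how many (x, y) points fall into each hexagon."""
    return Counter(hex_bin(x, y, size) for x, y in points)
```

The bin counts, keyed by hexagon, can then be drawn at `hex_center(q, r, size)` in place of the individual decoded points.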

Issue 3: Due to the large number of records, the 2017 and 2018 data are stored in separate files. Additionally, the data cannot be explored in Excel because not all records will load (an Excel worksheet holds at most 1,048,576 rows).

Solution: Use the Python package [pandas](https://pandas.pydata.org/) to merge the 2017 and 2018 CSV files into one.
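Since both files share the same columns, "merging" here means stacking rows, which is what `pd.concat` does (`pd.merge` would instead join on key columns). A minimal sketch; the file names in the usage line are hypothetical.

```python
import pandas as pd


def combine_csvs(paths, out_path=None) -> pd.DataFrame:
    """Stack CSV files with identical columns into one DataFrame, row-wise."""
    frames = [pd.read_csv(p) for p in paths]
    # ignore_index gives the combined table a fresh 0..n-1 index
    combined = pd.concat(frames, ignore_index=True)
    if out_path is not None:
        combined.to_csv(out_path, index=False)
    return combined


# Usage (hypothetical file names):
# combined = combine_csvs(["airtube_2017.csv", "airtube_2018.csv"], "airtube_2017_2018.csv")
```

If the files are too large to hold in memory at once, `pd.read_csv` also accepts a `chunksize` argument and returns an iterator of smaller DataFrames that can be appended to the output file piece by piece.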