Difference between revisions of "ISSS608 2017-18 T3 Assign Hasanli Orkhan Methodology"

Orkhanh.2017 (talk | contribs) |

Orkhanh.2017 (talk | contribs) |

||

| Line 40: | Line 40: | ||

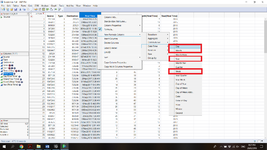

To prepare our data for being ready to dump into Gephi, firstly I concatenate all the 4 suspicious data sets and create new day, week, month and year columns for easy filtering in Gephi. | To prepare our data for being ready to dump into Gephi, firstly I concatenate all the 4 suspicious data sets and create new day, week, month and year columns for easy filtering in Gephi. | ||

<br><br> | <br><br> | ||

| − | + | <gallery mode="packed-hover" heights="100" > | |

| + | File:Gephi_Prep1.png | ||

| + | |||

| + | </gallery> | ||

<br><br> | <br><br> | ||

Next, we need to join the Suspicious_Final dataset with CompanyIndex dataset to get the labels and prepare our new created data set for Node and Edge data sets. | Next, we need to join the Suspicious_Final dataset with CompanyIndex dataset to get the labels and prepare our new created data set for Node and Edge data sets. | ||

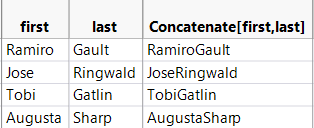

In order to Join data tables, first I match Source = ID and then Destination=ID, subsequently I will add two columns (First and Last) from Destination Labelled to Source Labelled data table. Before adding them, I will concatenate two columns (First and Last) into one named Label. | In order to Join data tables, first I match Source = ID and then Destination=ID, subsequently I will add two columns (First and Last) from Destination Labelled to Source Labelled data table. Before adding them, I will concatenate two columns (First and Last) into one named Label. | ||

<br><br> | <br><br> | ||

| − | + | <gallery mode="packed-hover" heights="100" > | |

| + | File:Concatenate.png | ||

| + | |||

| + | </gallery> | ||

<br><br> | <br><br> | ||

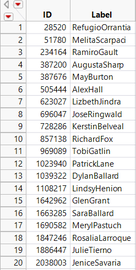

For running in Gephi Node data we need to keep only unique ID and Label. Thus, from Summary I take only unique ID and corresponding labels. The following is my Node dataset, where I have only 20 unique suspicious people. | For running in Gephi Node data we need to keep only unique ID and Label. Thus, from Summary I take only unique ID and corresponding labels. The following is my Node dataset, where I have only 20 unique suspicious people. | ||

Revision as of 20:09, 7 July 2018


Data Preparation

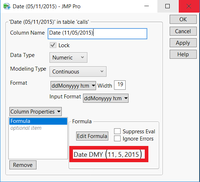

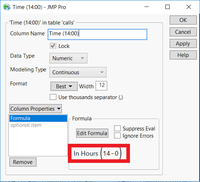

In all the data sets provided in this mini case, we need to create a new timestamp column. I used SAS JMP to create it, taking May 11, 2015 14:00 as the starting point. Converting 5/11/2015 14:00 to its value in seconds and adding the seconds value given in each row yields the actual date and time.
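The same conversion can be sketched outside JMP. The snippet below is only an illustration in Python, assuming each row carries an offset in seconds counted from May 11, 2015 14:00; the names `BASE_TIME` and `to_timestamp` and the sample offsets are hypothetical and do not come from the data.

```python
from datetime import datetime, timedelta

# Starting point stated in the text: May 11, 2015 14:00.
BASE_TIME = datetime(2015, 5, 11, 14, 0, 0)


def to_timestamp(offset_seconds):
    """Add a row's seconds offset to the starting point."""
    return BASE_TIME + timedelta(seconds=offset_seconds)


# Hypothetical offsets, for illustration only.
for offset in (0, 3600, 86400):
    print(offset, to_timestamp(offset).isoformat(sep=" "))
```

JMP stores date-times as a number of seconds, which is why adding the two seconds values works there; `timedelta` plays the same role here.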

The same formula was applied to create the corresponding column in the other data sets. We then checked the data for duplicates and removed the duplicates found in the Calls and Purchases data sets.
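As a rough sketch of the duplicate check, assuming a row counts as a duplicate only when every column matches an earlier row, the Python below keeps the first occurrence of each row. The column names and sample values are hypothetical.

```python
def drop_duplicates(rows):
    """Keep the first occurrence of each fully identical row."""
    seen = set()
    unique = []
    for row in rows:
        # All columns together form the identity of a row.
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


# Hypothetical rows, for illustration only.
calls = [
    {"Source": 1, "Destination": 2, "Seconds": 100},
    {"Source": 1, "Destination": 2, "Seconds": 100},  # exact repeat
    {"Source": 2, "Destination": 3, "Seconds": 250},
]
calls_clean = drop_duplicates(calls)
print(len(calls), "->", len(calls_clean))
```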

I also removed May and June 2015 because these months are incomplete; in addition, when we look at quarterly data later in the analysis, those two months alone cannot give us a full quarter. Keeping them would add noise to the data.
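A minimal sketch of this filter in Python, assuming each row already has a timestamp; the function name `in_incomplete_month` and the sample rows are hypothetical.

```python
from datetime import datetime


def in_incomplete_month(ts):
    """True for timestamps in May or June 2015, the incomplete months."""
    return ts.year == 2015 and ts.month in (5, 6)


# Hypothetical rows, for illustration only.
rows = [
    {"Timestamp": datetime(2015, 5, 20, 9, 0)},
    {"Timestamp": datetime(2015, 6, 30, 23, 59)},
    {"Timestamp": datetime(2015, 7, 1, 0, 0)},
]
kept = [r for r in rows if not in_incomplete_month(r["Timestamp"])]
print(len(kept))
```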

Gephi Data Preparation

To prepare the data for import into Gephi, I first concatenate all 4 suspicious data sets and create new day, week, month and year columns for easy filtering in Gephi.
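The concatenation and the derived date columns could look roughly like this in Python. This is a sketch under assumptions: the four inputs are plain lists of rows that share the same columns, the week is taken as the ISO week number, and all names and sample rows are hypothetical.

```python
from datetime import datetime


def add_date_parts(row):
    """Return a copy of the row with Day, Week, Month and Year columns."""
    ts = row["Timestamp"]
    return {
        **row,
        "Day": ts.day,
        "Week": ts.isocalendar()[1],  # ISO week number (an assumption)
        "Month": ts.month,
        "Year": ts.year,
    }


def concatenate(datasets):
    """Stack several data sets into one and add the date columns."""
    combined = []
    for rows in datasets:
        combined.extend(add_date_parts(r) for r in rows)
    return combined


# Four hypothetical one-row data sets, for illustration only.
set_a = [{"Source": 1, "Destination": 2, "Timestamp": datetime(2015, 7, 1, 10, 30)}]
set_b = [{"Source": 2, "Destination": 3, "Timestamp": datetime(2016, 1, 15, 8, 0)}]
set_c = [{"Source": 3, "Destination": 1, "Timestamp": datetime(2016, 11, 2, 17, 45)}]
set_d = [{"Source": 1, "Destination": 3, "Timestamp": datetime(2017, 3, 9, 12, 0)}]

suspicious_final = concatenate([set_a, set_b, set_c, set_d])
print(len(suspicious_final), suspicious_final[0]["Year"])
```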

Next, we need to join the Suspicious_Final data set with the CompanyIndex data set to get the labels, and prepare the newly created data set for the Node and Edge data sets.

To join the data tables, I first match Source = ID and then Destination = ID. Before adding the name columns, I concatenate the two columns (First and Last) into one column named Label; I then add the result from the Destination Labelled data table to the Source Labelled data table.
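The two joins can be sketched as a lookup from ID to label that is applied once to Source and once to Destination. This Python version is an illustration only: the output column names `Source Label` and `Destination Label`, the helper names, and the sample people are hypothetical, and unmatched IDs are assumed to get an empty label.

```python
def build_labels(company_index):
    """Map each ID to a Label made by concatenating First and Last."""
    return {p["ID"]: f'{p["First"]} {p["Last"]}' for p in company_index}


def label_edges(edges, labels):
    """Match Source = ID, then Destination = ID, and attach both labels."""
    labelled = []
    for e in edges:
        labelled.append({
            **e,
            "Source Label": labels.get(e["Source"], ""),
            "Destination Label": labels.get(e["Destination"], ""),
        })
    return labelled


# Hypothetical data, for illustration only.
company_index = [
    {"ID": 1, "First": "Ann", "Last": "Example"},
    {"ID": 2, "First": "Bob", "Last": "Sample"},
]
edges = [{"Source": 1, "Destination": 2}, {"Source": 2, "Destination": 9}]

labelled_edges = label_edges(edges, build_labels(company_index))
for row in labelled_edges:
    print(row)
```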

For the Gephi Node data, we need to keep only the unique IDs and Labels. Thus, from Summary I take only the unique IDs and their corresponding labels. The following is my Node data set, which contains only 20 unique suspicious people.
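A possible way to derive such a Node table is to collect every ID that appears on either end of an edge and keep one label per ID. The Python below is a sketch with hypothetical input columns and sample rows; the output uses the `Id` and `Label` headers that Gephi's spreadsheet import recognises for node tables.

```python
import csv
import io


def build_nodes(labelled_edges):
    """Collect the unique IDs and their labels from both ends of each edge."""
    nodes = {}
    for e in labelled_edges:
        nodes.setdefault(e["Source"], e["Source Label"])
        nodes.setdefault(e["Destination"], e["Destination Label"])
    return [{"Id": node_id, "Label": label} for node_id, label in sorted(nodes.items())]


# Hypothetical labelled edges, for illustration only.
labelled_edges = [
    {"Source": 1, "Destination": 2, "Source Label": "Ann Example", "Destination Label": "Bob Sample"},
    {"Source": 2, "Destination": 1, "Source Label": "Bob Sample", "Destination Label": "Ann Example"},
    {"Source": 1, "Destination": 3, "Source Label": "Ann Example", "Destination Label": "Cy Test"},
]
nodes = build_nodes(labelled_edges)

# Write the node table as CSV text.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["Id", "Label"])
writer.writeheader()
writer.writerows(nodes)
print(buffer.getvalue())
```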

For the Edge data, which contains 137 rows, I created two more columns, Quarter and Time of the day, in case I investigate on a quarterly or hourly basis.
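The two extra columns could be derived from the timestamp along these lines. This Python sketch assumes calendar quarters; the text does not define the Time of the day categories, so the four six-hour bins used here are purely an assumption, as are the function names.

```python
from datetime import datetime


def quarter(ts):
    """Calendar quarter (1 to 4) of a timestamp."""
    return (ts.month - 1) // 3 + 1


def time_of_day(ts):
    """Bucket the hour into four bins (assumed boundaries, not from the source)."""
    if ts.hour < 6:
        return "Night"
    if ts.hour < 12:
        return "Morning"
    if ts.hour < 18:
        return "Afternoon"
    return "Evening"


# Hypothetical edge row, for illustration only.
edge = {"Source": 1, "Target": 2, "Timestamp": datetime(2016, 11, 2, 17, 45)}
edge["Quarter"] = quarter(edge["Timestamp"])
edge["Time of the day"] = time_of_day(edge["Timestamp"])
print(edge["Quarter"], edge["Time of the day"])
```

If the analysis needs exact hours rather than bins, storing `ts.hour` directly would be the simpler choice.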