Difference between revisions of "Fu Yi - Data Preparation"

Yi.fu.2017 (talk | contribs) |

Yi.fu.2017 (talk | contribs) |

||

| Line 10: | Line 10: | ||

[[ISSS608_2017-18 T3 Assign Fu Yi| <font color="#FFFFFF">Introduction</font>]] | [[ISSS608_2017-18 T3 Assign Fu Yi| <font color="#FFFFFF">Introduction</font>]] | ||

| − | | style="font-family:Century Gothic; font-size:120%; solid #1B338F; background:# | + | | style="font-family:Century Gothic; font-size:120%; solid #1B338F; background:#C00000; text-align:center;" width="25%" | |

; | ; | ||

[[Fu Yi - Data Preparation| <font color="#FFFFFF">Preparation</font>]] | [[Fu Yi - Data Preparation| <font color="#FFFFFF">Preparation</font>]] | ||

| − | | style="font-family:Century Gothic; font-size:120%; solid #1B338F; background:# | + | | style="font-family:Century Gothic; font-size:120%; solid #1B338F; background:#2b3856; text-align:center;" width="25%" | |

; | ; | ||

[[Fu Yi - Visualization and Insights| <font color="#FFFFFF">Visualization & Insights</font>]] | [[Fu Yi - Visualization and Insights| <font color="#FFFFFF">Visualization & Insights</font>]] | ||

Revision as of 22:06, 8 July 2018

|

|

|

|

|

Methodology & Visualization tools

Questions in Mini Challenging 3 contain mostly Network analysis and company operational trend analysis.

For Question 1 company operational trend, I used Tableau to visualize the scenarios. For Question 2 Network Analysis, I used Gephi to detect abnormal patterns.

Other tools: SAS JMP Pro and Excel supported for data cleaning and preparation, as well as initial exploration of the data.

Data Preparation Question 1

a) Add titles Open 4 large tables (calls, emails, purchases, meetings) in Excel. Add title for each column (source, eType, target, time) for each of 4 tables.

b) Change date

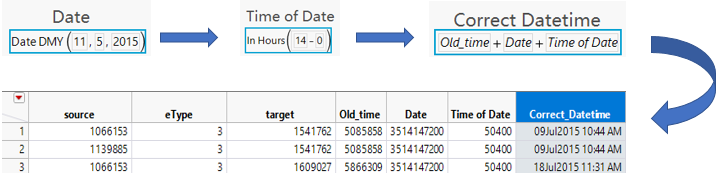

Import tables to JMP, since the real time should start from 11/05/2015, 14:00. I created 2 new columns for 11/05/2015 and 14:00 respectively, and combine Old time, Date, Time of date together to get the correct date.

c) No duplication

Check summary of each table to eliminate the duplication.

d) Clear out incomplete month

The date starts from May,2015, however, the first 2 months have incomplete data. I delete the first 2 months data (May + June 2015) to make the dataset have a complete cycle. The description of final 4 tables:

- Calls table: 10,091,409 rows - Emails table: 13,846,639 rows - Purchase table: 723,586 rows - Meetings table: 127,110 rows

Data Preparation Question 2 (For Gephi)

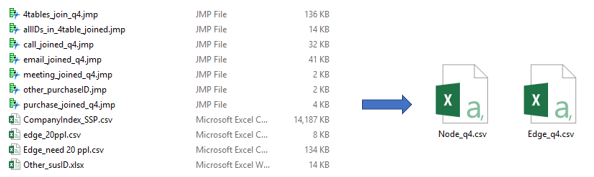

a) Prepare Suspicious Node files and Edge files

Edge:

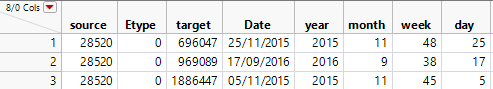

- Add titles, concatenate tables, change to correct date and extract Year/Month/Week/Day

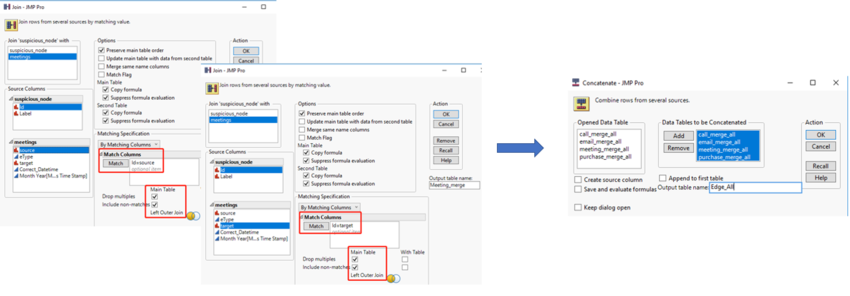

Open 4 suspicious tables (calls, emails, purchases, meetings) in Excel. Add titles for all tables, then import all tables into JMP, use Concatenate method to aggregate all tables as one file. Change time to correct datetime accordingly (same as Question 1)

The Day here is the month of the day, it is to drill down to see the changes day by day, so as to identify who are the people conducted the suspicious event, how the suspicious purchased made.

Node:

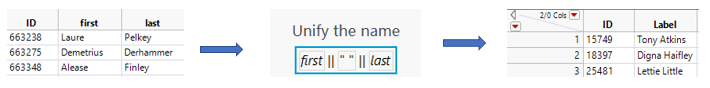

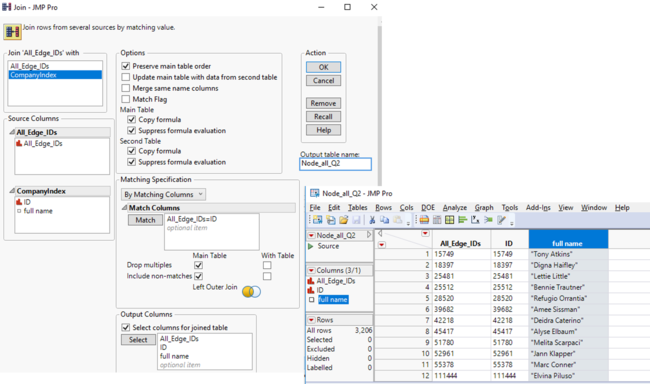

Copy and Paste all the Id from Source and Target into one column, extract unique Ids as ID column. There are 20 people in suspicious files from the insider’s data. From the Company Index file, aggregate First name and Last name into one column, name it as “Label”

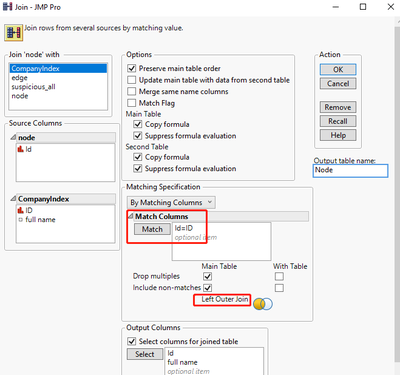

From the 20 suspicious IDs table, left outer join Company Index by matching the IDs to get the Label (full name).

b) Prepare the Node and Edge for large data file

Edge: Large file

- Extract records from 4 large data set that IDs have association with those 20 suspicious-IDs.

For large data source file, I left outer join twice from suspicious_Node with each large data source table (calls, emails, purchase, meetings), first join by matching Source ID, then matching with Target ID, concatenate 2 matched tables as one, then, clear out all the missing value, because some suspicious people do not have in-degree or out-degree in large data source tables.

Do that for all 4 large data source tables, then, combine 4 tables as one.

Node: Large file

Take distinct IDs from both Source and Target, pull them into one column, left outer join with Company Index to get all IDs label. There are 3,206 IDs that have association with 20 suspicious IDs (inclusive).

Data Preparation Question 4 (For Gephi)

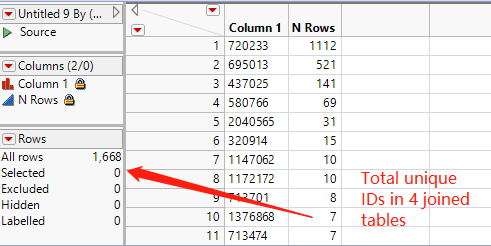

-Select distinct 8 people from other suspicious purchase table, link each 4 large data table to get the records relate to those 8 people (Same method as Question 1). Then, create the Node for large data table where has 8 suspicious purchase people inside.(1,668 IDs)

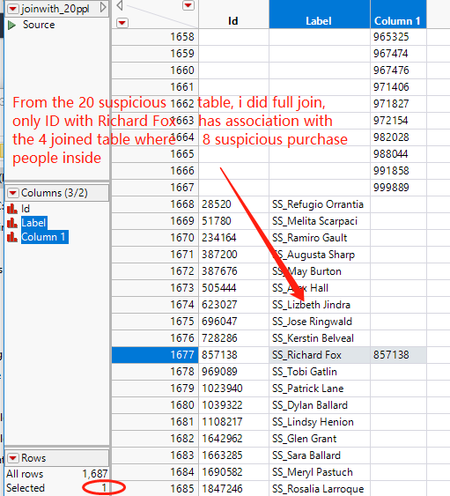

-Joined the table with the first group to check who has association with the joined large table which contains the 8 suspiciou-purchase-group (Only Richard has association with them)

-Concatenate first group edge table, in order to detect the pattern and cross check with larger data table, select unique IDs to join Company index to get a proper Node file with labels,