Difference between revisions of "Maptimization Details"

| (13 intermediate revisions by the same user not shown) | |||

| Line 29: | Line 29: | ||

__FORCETOC__ | __FORCETOC__ | ||

| − | ==<div style="padding | + | ==<div style="padding: 20px; line-height: 0.3em; text-indent: 16px;letter-spacing:0.1em;font-size:26px;border-top:solid #8470ff; border-bottom:solid #8470ff;"><font color= #3c3c3c face="Bebas Neue">Motivation and Problem Discovery</font></div>== |

===Motivation=== | ===Motivation=== | ||

With families in Singapore moving towards modern times where both parents or couple work full-time, domestic household chores are performed much less due to time-constraints. Jelivery Service(JS) Pte Ltd aims to alleviate this issue by providing convenient laundry washing services. | With families in Singapore moving towards modern times where both parents or couple work full-time, domestic household chores are performed much less due to time-constraints. Jelivery Service(JS) Pte Ltd aims to alleviate this issue by providing convenient laundry washing services. | ||

| Line 56: | Line 56: | ||

<br/> This is compared to ezBuy’s collection points, where there are stipulated parcel collection timings imposed by MRT or neighbourhood collection points. | <br/> This is compared to ezBuy’s collection points, where there are stipulated parcel collection timings imposed by MRT or neighbourhood collection points. | ||

| − | ==<div style="padding | + | ==<div style="padding: 20px; line-height: 0.3em; text-indent: 16px;letter-spacing:0.1em;font-size:26px;border-top:solid #8470ff; border-bottom:solid #8470ff;"><font color= #3c3c3c face="Bebas Neue">Application and Solution</font></div>== |

| − | ===Methodology - | + | ===Methodology - Three-pronged Approach=== |

====Elbow method==== | ====Elbow method==== | ||

Kmean clustering is to define clusters such that the total intra-cluster variation [or total within-cluster sum of square (WSS)] is minimized. The total WSS measures the compactness of the clustering and we want it to be as small as possible. | Kmean clustering is to define clusters such that the total intra-cluster variation [or total within-cluster sum of square (WSS)] is minimized. The total WSS measures the compactness of the clustering and we want it to be as small as possible. | ||

| Line 89: | Line 89: | ||

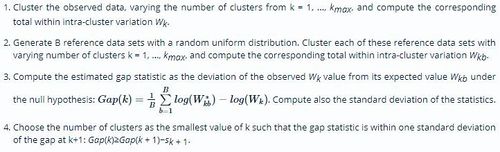

====Gap statistic method==== | ====Gap statistic method==== | ||

The gap statistic has been published by R. Tibshirani, G. Walther, and T. Hastie (Standford University, 2001). The approach can be applied to any clustering method. The gap statistic compares the total within intra-cluster variation for different values of k with their expected values under null reference distribution of the data. The estimate of the optimal clusters will be value that maximize the gap statistic (i.e, that yields the largest gap statistic). This means that the clustering structure is far away from the random uniform distribution of points. The algorithm works as follow: | The gap statistic has been published by R. Tibshirani, G. Walther, and T. Hastie (Standford University, 2001). The approach can be applied to any clustering method. The gap statistic compares the total within intra-cluster variation for different values of k with their expected values under null reference distribution of the data. The estimate of the optimal clusters will be value that maximize the gap statistic (i.e, that yields the largest gap statistic). This means that the clustering structure is far away from the random uniform distribution of points. The algorithm works as follow: | ||

| − | + | [[File:Gapalgo.jpg|500px|frameless]] | |

[[File:Gapstaticmethod.jpg|500px|frameless]] | [[File:Gapstaticmethod.jpg|500px|frameless]] | ||

The above result shows that the optimal number of k is 5. | The above result shows that the optimal number of k is 5. | ||

| − | <b>Conclusion: Through the utilization of three analysis's methods, we have found the optimal number of k-cluster to be <u>5</u>.</b> | + | <b>Conclusion: Through the utilization of the above three analysis's methods, we have found the optimal number of k-cluster to be <u>5</u>.</b> |

| + | |||

| + | ===Solution=== | ||

| + | [[File:Kclustertotal.png|1000px|frameless]] | ||

| + | <br/> | ||

| + | The Κ-means clustering algorithm uses iterative refinement to produce a final result. The algorithm inputs are the number of clusters Κ and the data set. The data set is a collection of features for each data point. The algorithms starts with initial estimates for the Κ centroids, which can either be randomly generated or randomly selected from the data set. The algorithm then iterates between two steps: | ||

| + | 1. Data assignment step: Each centroid defines one of the clusters. In this step, each data point is assigned to its nearest centroid, based on the squared Euclidean distance. More formally, if Ci is the collection of centroids in set C, then each data point x is assigned to a cluster based on | ||

| + | |||

| + | [[File:Kmean.jpg|300px|frameless]] | ||

| + | |||

| + | where dist( · ) is the standard (L2) Euclidean distance. Let the set of data point assignments for each i^th cluster centroid be Si. | ||

| + | 2. Centroid update step: In this step, the centroids are recomputed. This is done by taking the mean of all data points assigned to that centroid's cluster. | ||

| + | |||

| + | [[File:Kmean2.jpg|300px|frameless]] | ||

| + | |||

| + | The algorithm iterates between steps one and two until a stopping criteria is met (i.e., no data points change clusters, the sum of the distances is minimized, or some maximum number of iterations is reached). | ||

| + | |||

| + | This algorithm is guaranteed to converge to a result. The result may be a local optimum (i.e. not necessarily the best possible outcome), meaning that assessing more than one run of the algorithm with randomized starting centroids may give a better outcome. | ||

| + | |||

===Data Sources=== | ===Data Sources=== | ||

<table class="wikitable"> | <table class="wikitable"> | ||

| Line 119: | Line 137: | ||

<table> | <table> | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | ==<div style="padding | + | ==<div style="padding: 20px; line-height: 0.3em; text-indent: 16px;letter-spacing:0.1em;font-size:26px;border-top:solid #8470ff; border-bottom:solid #8470ff;"><font color= #3c3c3c face="Bebas Neue">Project Storyboard</font></div>== |

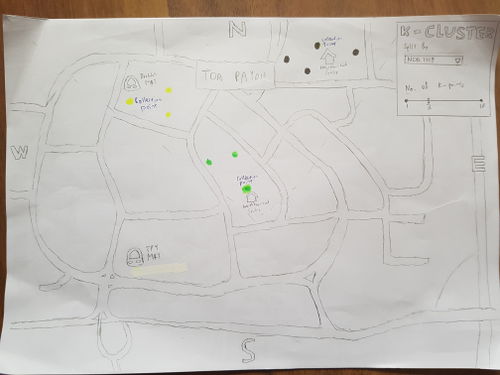

===First sketch=== | ===First sketch=== | ||

[[File:Maptimization_presketch.jpg|400px|frameless]]<br/> | [[File:Maptimization_presketch.jpg|400px|frameless]]<br/> | ||

| Line 152: | Line 156: | ||

For illustration purposes, we have shown that a user have selected the "North" Region and a K-Cluster of 3. The application shows that 3 K-Clusters are shown to the user. The user will then be able to make their way down to whichever is the nearest collection point or whichever is the densest cluster to be served quicker. | For illustration purposes, we have shown that a user have selected the "North" Region and a K-Cluster of 3. The application shows that 3 K-Clusters are shown to the user. The user will then be able to make their way down to whichever is the nearest collection point or whichever is the densest cluster to be served quicker. | ||

| − | ==<div style="padding | + | ==<div style="padding: 20px; line-height: 0.3em; text-indent: 16px;letter-spacing:0.1em;font-size:26px;border-top:solid #8470ff; border-bottom:solid #8470ff;"><font color= #3c3c3c face="Bebas Neue">Technologies used</font></div>== |

Technologies: <br/> | Technologies: <br/> | ||

- R Shiny <br/> | - R Shiny <br/> | ||

- R Studio <br/> | - R Studio <br/> | ||

- R Language <br/> | - R Language <br/> | ||

| − | - | + | - OSM <br/> |

- Excel <br/> | - Excel <br/> | ||

| − | + | ==<div style="padding: 20px; line-height: 0.3em; text-indent: 16px;letter-spacing:0.1em;font-size:26px;border-top:solid #8470ff; border-bottom:solid #8470ff;"><font color= #3c3c3c face="Bebas Neue">Challenges & Limitations</font></div>== | |

| − | ==<div style="padding | ||

'''Data''' | '''Data''' | ||

* There was lack of sufficient data available for Toa Payoh | * There was lack of sufficient data available for Toa Payoh | ||

| Line 177: | Line 180: | ||

| − | ==<div style="padding | + | ==<div style="padding: 20px; line-height: 0.3em; text-indent: 16px;letter-spacing:0.1em;font-size:26px;border-top:solid #8470ff; border-bottom:solid #8470ff;"><font color= #3c3c3c face="Bebas Neue">Future work</font></div>== |

This Spatial Clustering with kmean is an optimization model in aiding business to analyze the best collection point or clustering for business needs. <br/> | This Spatial Clustering with kmean is an optimization model in aiding business to analyze the best collection point or clustering for business needs. <br/> | ||

With the option to upload own data set, it would allow the flexibility to be transferable to other business functions and industry as long as they met the data set requirements. | With the option to upload own data set, it would allow the flexibility to be transferable to other business functions and industry as long as they met the data set requirements. | ||

<br/> | <br/> | ||

<br/> | <br/> | ||

| − | Furthermore, | + | Furthermore, based on the needs of the business, this model can be easily extended to include criteria in its consideration when doing spatial clustering. <br/>For example, we can add in distance into consideration such that the minimum number of distance should not be under 10km or maximum distance should not be over 15km. These would ensure a more fair distribution of k clustering base on the business needs. |

| − | ==<div style="padding | + | |

| + | ==<div style="padding: 20px; line-height: 0.3em; text-indent: 16px;letter-spacing:0.1em;font-size:26px;border-top:solid #8470ff; border-bottom:solid #8470ff;"><font color= #3c3c3c face="Bebas Neue">Meet the team</font></div>== | ||

[[File:Maptimization home.PNG |1000px|center|Jury]] | [[File:Maptimization home.PNG |1000px|center|Jury]] | ||

Latest revision as of 12:42, 13 April 2018

Contents

Motivation and Problem Discovery

Motivation

With families in Singapore moving towards modern times where both parents or couple work full-time, domestic household chores are performed much less due to time-constraints. Jelivery Service(JS) Pte Ltd aims to alleviate this issue by providing convenient laundry washing services.

Problem

Currently, Jelivery Services operations function on delivering laundry to each customer's doorsteps regardless of distribution demand nor distance. This creates an issue where deliveries are sometimes time-inefficient and very fuel-consuming for its employees. This results a high operational cost on the company's expenses, making their profit margin very thin.

Proposed Solution

Our group, Maptimization, aims to optimize Jelivery Service's business process by streamlining it via the creation of single-best-point(s) for laundry collections/returns based on the layout of the area and zone. This would increase efficiency and ultimately improve customer satisfaction by providing the best convenience to them possible. Through the use of K-means clustering and sufficient data points, we would be able to create an optimization model.

Related Works

EzBuy

A prime industrial example, ezBuy, utilizes 277 collection points nationwide, ranging from MRT stations, neighbourhood points and warehouse collection centres

Benefits: At a stipulated time, customers will be able to the three different types of location as stated above. Collection from self-collection from these locations saves each customer delivery charges of $5-$30.

Each type of location is also suited to different needs, such as time or parcel weight(e.g. MRT <8kg, Neighbourhood point <25kg, Warehouse <50kg), so customers are able to pick a point that will suit their convenience.

EzCollect

A new addition to EzBuy, EzCollect is a newly launched collection in November 2017 between EzBuy and 3rd party retail shop owners.

Benefits: With this, customers are provided a larger variety of options to collect their purchased items and parcels at their convenience, with even some ezCollect retail shops being 24/7.

This is compared to ezBuy’s collection points, where there are stipulated parcel collection timings imposed by MRT or neighbourhood collection points.

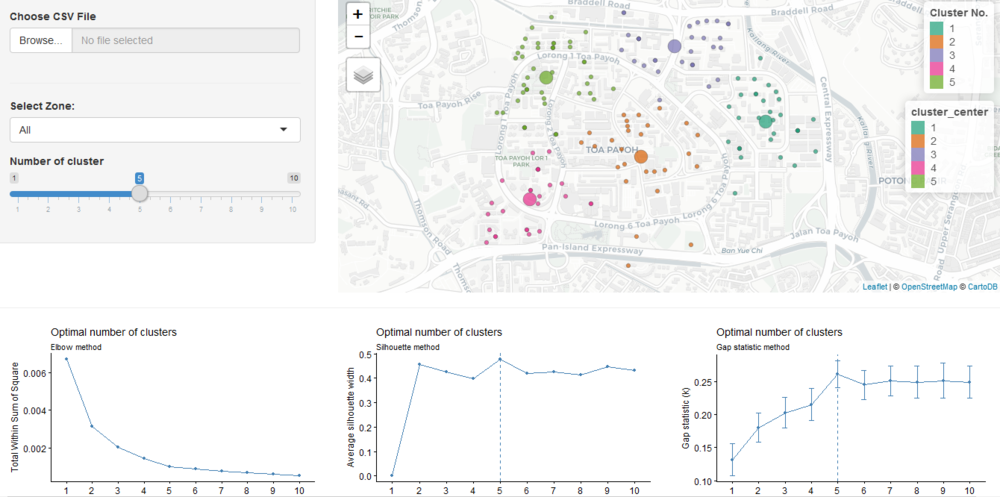

Application and Solution

Methodology - Three-pronged Approach

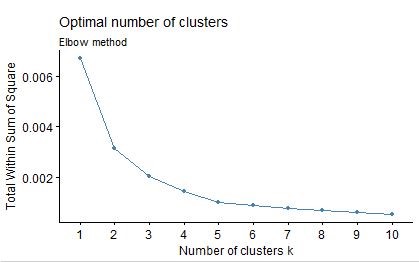

Elbow method

Kmean clustering is to define clusters such that the total intra-cluster variation [or total within-cluster sum of square (WSS)] is minimized. The total WSS measures the compactness of the clustering and we want it to be as small as possible. The Elbow method looks at the total WSS as a function of the number of clusters: One should choose a number of clusters so that adding another cluster doesn’t improve much better the total WSS.

The optimal number of cluster take on the following steps: 1. Compute clustering algorithm (e.g., k-means clustering) for different values of k. For instance, by varying k from 1 to 10 clusters. 2. For each k, calculate the total within-cluster sum of square (wss). 3. Plot the curve of wss according to the number of clusters k. 4. The location of a bend (knee) in the plot is generally considered as an indicator of the appropriate number of clusters.

From the above result, we observe that the decrease in marginal different start to occur around k=5, which recommend the optimal number of k cluster to be 5.

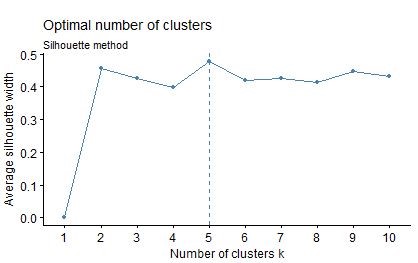

Average silhouette method

Average silhouette method

The average silhouette approach measures the quality of a clustering. That is, it determines how well each object lies within its cluster. A high average silhouette width indicates a good clustering. For a comprehensive description, refer to Lesson 10: Analysing and Visualising Geographically Referenced Attributes

Average silhouette method computes the average silhouette of observations for different values of k. The optimal number of clusters k is the one that maximize the average silhouette over a range of possible values for k (Kaufman & Rousseeuw, 1990).

The algorithm is similar to elbow method which take on the following steps:

1. Compute clustering algorithm (e.g., k-means clustering) for different values of k. For instance, by varying k from 1 to 10 clusters.

2. For each k, calculate the average silhouette of observations (avg.sil).

3. Plot the curve of avg.sil according to the number of clusters k.

4. The location of the maximum is considered as the appropriate number of clusters.

From the above result, we observe that the decrease in marginal different start to occur around k=5, which then recommends the optimal number of k cluster to be 5 to the user.

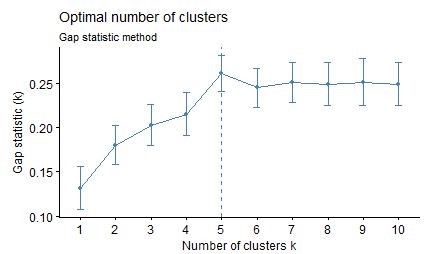

Gap statistic method

The gap statistic has been published by R. Tibshirani, G. Walther, and T. Hastie (Standford University, 2001). The approach can be applied to any clustering method. The gap statistic compares the total within intra-cluster variation for different values of k with their expected values under null reference distribution of the data. The estimate of the optimal clusters will be value that maximize the gap statistic (i.e, that yields the largest gap statistic). This means that the clustering structure is far away from the random uniform distribution of points. The algorithm works as follow:

The above result shows that the optimal number of k is 5.

Conclusion: Through the utilization of the above three analysis's methods, we have found the optimal number of k-cluster to be 5.

Solution

The Κ-means clustering algorithm uses iterative refinement to produce a final result. The algorithm inputs are the number of clusters Κ and the data set. The data set is a collection of features for each data point. The algorithms starts with initial estimates for the Κ centroids, which can either be randomly generated or randomly selected from the data set. The algorithm then iterates between two steps:

1. Data assignment step: Each centroid defines one of the clusters. In this step, each data point is assigned to its nearest centroid, based on the squared Euclidean distance. More formally, if Ci is the collection of centroids in set C, then each data point x is assigned to a cluster based on

where dist( · ) is the standard (L2) Euclidean distance. Let the set of data point assignments for each i^th cluster centroid be Si. 2. Centroid update step: In this step, the centroids are recomputed. This is done by taking the mean of all data points assigned to that centroid's cluster.

The algorithm iterates between steps one and two until a stopping criteria is met (i.e., no data points change clusters, the sum of the distances is minimized, or some maximum number of iterations is reached).

This algorithm is guaranteed to converge to a result. The result may be a local optimum (i.e. not necessarily the best possible outcome), meaning that assessing more than one run of the algorithm with randomized starting centroids may give a better outcome.

Data Sources

| Data | Source |

|---|---|

|

Street Directory |

http://www.streetdirectory.com/asia_travel/travel/travel_main.php?zonename=Toa+Payoh |

|

Singapore Commercial Guru SG |

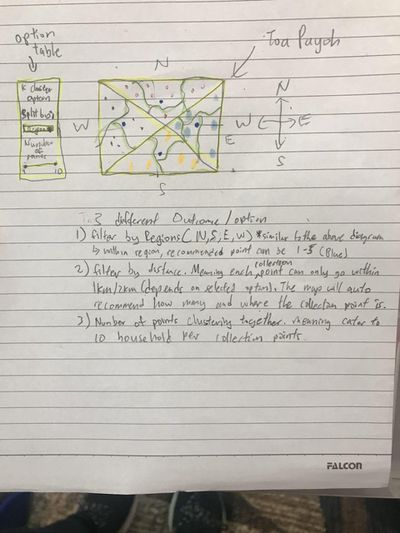

Project Storyboard

First sketch

Our first sketch sets a direction of what we would like users to be able to see and utilize in our final application. As seen above, we would like to provide three features to our users

1. Filtering by Region(North, South, East, West): This would allow users of different regions to only select laundry collection points near their regions. This is to facilitate convenience and disallow "noise(undesired)" collection points from showing up in their interface

2. Filter by Distance: This means that each collection point can only go up to a certain range from them(e.g. 1KM away from them). The application would recommend how many collection points are within the selected range

3. Filter by K-Clusters: This would allow users to know how many collection points are clustering together, as each collection point is catered to 10 household collection point. If a cluster is more dense, he will more likely be able to collect his laundry easier.

Final sketch

Our final sketch shows an in-depth view of what we would expect the application to look and feel like. Through the selection of options on the top right of our application, users will be able to select Jelivery Service laundry collection points to their own convenience.

For illustration purposes, we have shown that a user have selected the "North" Region and a K-Cluster of 3. The application shows that 3 K-Clusters are shown to the user. The user will then be able to make their way down to whichever is the nearest collection point or whichever is the densest cluster to be served quicker.

Technologies used

Technologies:

- R Shiny

- R Studio

- R Language

- OSM

- Excel

Challenges & Limitations

Data

- There was lack of sufficient data available for Toa Payoh

- Datasets found are often incomplete or had missing fields

Data cleaning and transformation

- Addresses found often had invalid postal codes or unknown postal codes, which required research to amend it

- Data transformation to respective regions(North/South/East/West) was resource-draining and tedious

Novel technologies

- Steep learning curve

- A lot of consultations were needed with Prof Kam

- Self-learning

Future work

This Spatial Clustering with kmean is an optimization model in aiding business to analyze the best collection point or clustering for business needs.

With the option to upload own data set, it would allow the flexibility to be transferable to other business functions and industry as long as they met the data set requirements.

Furthermore, based on the needs of the business, this model can be easily extended to include criteria in its consideration when doing spatial clustering.

For example, we can add in distance into consideration such that the minimum number of distance should not be under 10km or maximum distance should not be over 15km. These would ensure a more fair distribution of k clustering base on the business needs.