Difference between revisions of "Data Preparation VA Assignment"

| Line 1: | Line 1: | ||

| + | {|width=100%, style="background-color:#2B3856; font-family:Century Gothic; font-size:100%; text-align:center;" | ||

| + | |- | ||

| + | | | [[ISSS608_2017-18_T1_Assign_MATILDA TAN YING XUAN|<font color = "#ffffff">Overview</font>]] || [[Data Preparation_VA_Assignment|<font color = "#ffffff">Data Preparation</font>]] || [[Analysis|<font color = "#ffffff">Analysis and Results</font>]] | ||

| + | |- | ||

| + | | | ||

| + | |} | ||

| + | |||

| + | |||

<h2> Data Preparation </h2> | <h2> Data Preparation </h2> | ||

Revision as of 02:47, 16 October 2017

| Overview | Data Preparation | Analysis and Results |

Data Preparation

Strategy

As the bulk of the content for analysis lies in the microblog entries, which will allow for us to investigate 1)events that may have caused the spread of the illness and 2)the travel routes of the sick to allow for source tracing and also tracking of any areas that may potentially be at risk of high volumes of infection.

Extraction of entries of infected citizens

Python was employed in the process of data preparation for the extraction of microblog entries of infected citizens of Smartpolis as follows:

Step 1: Extraction of data

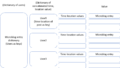

The 3 microblog entries were read into a Python dictionary, which held the following format:

Step 2: Compiling list of words related to sickness

The symptoms of the illness (such as coughing, chill, sweats, flu, fever, nausea) were read into an array, which was then updated with synonyms of these symptoms through the wordnet module found in the nltk package. However, certain words provided in the list have more than one meaning: sweats might refer to the cold sweats encountered in illness, or might also refer to slang for sweatshirts and sweatpants. To avoid the addition of such non-relevant words, allowance for user input was provided in the code, such that synonyms found are returned to the user interface, and only the words input by the user are used for updating the array of symptoms. Lastly, colloquial terms that refer to sickness that have not been added to the array are added, along with sickness related phrases returned in preliminary data analysis with JMP (more on this in the analysis and results section).

Step 3: Identification of sickness part 1 - Identification of symptoms

The microblog posts were searched for the words and phrases found in the symptoms array: As each post was read in as a string, the command if <word> in string would no longer serve as a valid condition, as search for shorter words such as 'ill' might return false positives be capturing words such as 'will' as 'ill'.As such, two separate loops were created: for phrases, the strings were searched for a is with the abovementioned condition. For words, each post was tokenized using the nltk package's RegexpTokenizer, and the words were search for among the resultant tokens.

Step 4: Identification of sickness part 2 - Identification of subject

As it is possible that the microblog post describes someone else's illness instead of the microblog owner's, an attempt to identify the subject of the illness was made with help from Point of Speech (POS) tagging via the nltk package, an array of common pronouns used for oneself, and an array of common pronouns for others. Each post was then searched for common pronouns used for oneself, and common pronouns used for others - should there be no pronouns present, the post was deemed to be about the microblog owner. Should the post contain more pronouns regarding oneself than that for others, the post was also deemed to be about the microblog owner. At this point, should the post not belong to both categories, POS tagging is carried out to identify proper nouns (denoted by NNP) - should this be present in the microblog entry, the entry is taken to be about another person. This is combined with the results of the previous step, to arrive at the list of users who are deemed to be infected.

Step 5: Preparation for possible spreading while asymptomatic

As certain illnesses have shown themselves to be capable of infecting another through contact with a carrier (who exhibits no symptoms), the first onset of the symptoms for the list of infected users was compiled by splitting the time-location key, and extracting the dates through user-defined functions due to certain anomalies in the data (where the date time values have anomalous alphabetical characters in them). A separate indicator array of the users and their post time-location keys was created, with time-location keys of infected users holding the value of 1 after the first onset of symptoms. This array was then compared with the original set of posts and list of symptoms, such that time-location keys of posts that include mention of symptoms have their '1's replaced with the flu-like symptom mentioned. This column would serve as the field from which the symptoms filter would be built upon.

Step 6: Reconstructing the dataset

The original dataset was combined with the symptom indicator column by matching the time-indicator key in the first dictionary of microblog posts with the time and location in the dictionary with the symptoms filter, and written to a csv file for usage in Tableau.

Step 1: Aggregating the keywords by day

With the dictionary of microblog posts read into Python, the RegexpTokenizer from nltk was used to tokenize each post. this was then aggregated by the day by appending each token into a separate array for each date. To avoid capturing commonly used words that have little significance, each token was checked against the list of stop words available in nltk.corpus.

Step 2: Checking each word for the frequency of its use

Each array of words is parsed through - the number of appearance of each word is calculated and put together with the word in a tuple, which is then returned to a new array. This array is then sorted via the operator.itemgetter such that the word with the largest number of appearances would be sent to the start of the array. This would then allow us to write to a text file the top 300 words for each date, for use in the data analysis to follow.